Python has become one of the most influential languages in backend development, powering global platforms such as Instagram, Spotify, Reddit, YouTube, and even core infrastructure at NASA. Its dominance is the result of a pragmatic philosophy emphasizing clarity, simplicity, and developer productivity, combined with a mature ecosystem of frameworks and libraries. Today, Python sits at the intersection of backend engineering, artificial intelligence, and automation, making it a strategic choice for organizations that need rapid development without sacrificing scalability or security.

Python was first released by Guido van Rossum in 1991 with a clear mission: make code easy to read and write by reducing unnecessary complexity. The language follows the principle that code should be understandable by humans before being executed by machines. This design decision set Python apart from other server-side languages of the period, such as Perl or Java, where terse syntax, verbosity, or configuration-heavy setups slowed down iteration. As the internet matured, Python evolved alongside it. The release of Django in 2005 marked a turning point by bringing Python’s “batteries included” philosophy to web frameworks: developers could build fully functional, production-grade web applications without assembling dozens of external plugins. Features such as an ORM, an admin dashboard, session management, and templating support drastically accelerated backend development. Later, Flask and FastAPI expanded Python’s reach to lightweight microservices and high-performance API workloads. This evolution explains why Python works equally well for monolithic systems, distributed microservices, and API gateways supporting AI/ML inference.

Python’s adoption by some of the world’s largest digital platforms demonstrates its reliability at massive scale. Instagram has run on Python and Django since its early days, scaling to billions of daily interactions, and credits the language for helping small teams ship features quickly. YouTube uses Python extensively to manage backend services, automation, and supporting infrastructure behind video processing and content workflows. Spotify applies Python ecosystem tools for data analysis and backend automation that supports user personalization and catalog intelligence. These companies manage staggering amounts of traffic, complex data pipelines, and large-scale distributed systems, showing that Python is not merely beginner-friendly; it is capable of supporting demanding enterprise workloads.

Technical leaders often compare Python with alternatives such as Node.js, Java, and Ruby. Node.js is known for performance in real-time events or streaming, yet often requires more boilerplate and third-party modules to assemble backend fundamentals. Java offers strong performance and strict, compile-time structure, making it a favorite for industries like finance, telecom, and banking, but the tradeoff is often longer development cycles and more complex configuration. Ruby on Rails popularized rapid development and convention-over-configuration, but its community momentum and ecosystem growth have slowed. Python strikes a balance among these: fast to develop, scalable for enterprise workloads, and well aligned with modern AI and automation use cases. In published benchmarks, FastAPI performs competitively with Node.js for typical API workloads while keeping service and API contract design simple.

Startups choose Python because speed to market is their survival strategy. Django ships with authentication, routing, and an ORM, while FastAPI provides routing and data validation out of the box and pairs with libraries such as SQLAlchemy for database access. This means teams focus on business logic instead of plumbing. Python also removes the friction of cross-language stacks when AI, data analytics, or automation is involved. If a product roadmap includes personalization, forecasting, or intelligent features, the ability to unify backend logic and machine learning workflows under one language accelerates development.

Enterprise engineering teams choose Python for long-term maintainability. Python’s syntax removes clutter and reduces bugs. Its readability lowers onboarding time, especially when teams grow or rotate across projects. Python integrates naturally with modern DevOps practices — CI/CD pipelines, Dockerized deployments, Kubernetes clusters, serverless workloads, and auto-scaling infrastructure across cloud platforms. FastAPI, in particular, allows construction of asynchronous, event-driven APIs that handle large throughput while maintaining exceptional developer ergonomics.

Ultimately, Python dominates backend development because it aligns perfectly with modern software expectations: fast iteration, integration with AI and automation, reliability under load, and maintainable codebases that can survive growth. Whether a team is building a minimum viable product, a high-performance API, or a complex enterprise-grade system, Python offers the rare combination of simplicity, scalability, and long-term strategic advantage.

What is Backend Development?

Backend development refers to the engineering of the server side of a digital product. While the frontend is everything a user sees and interacts with on the screen, the backend is the system that processes requests, executes business logic, interacts with databases, enforces security rules, and delivers the correct data back to the user interface. In modern software architecture, backend development is a critical component of every web and mobile application, whether it is a social media platform, banking system, eCommerce store, or enterprise SaaS product. Without a backend, applications cannot store information, authenticate users, manage payments, or connect to third party services.

To understand backend development, it helps to visualize the flow of information during a typical user action. When a user opens Uber, enters a destination, and taps Confirm Booking, the mobile app sends a request to the backend. The backend calculates ride availability, checks user authentication, verifies stored payment details, and returns available drivers. These actions are invisible to the user. Everything that happens after the button is clicked and before data appears on the screen is the backend.

Backend development is not tied to one specific technology. It combines programming languages, frameworks, servers, cloud platforms, and databases to create a secure, scalable, and efficient data processing engine that powers the frontend. In modern cloud architecture, the backend rarely exists as a single large application. Instead, it often spans multiple microservices, APIs, queues, worker processes, caching layers, and distributed systems. Backend developers design and maintain these systems so that applications remain stable under real world usage, including thousands of simultaneous requests and unpredictable spikes in traffic.

How the Backend Connects Frontend, Database, Server, and APIs

The backend is the connective tissue of an application. It mediates every interaction between the user interface, application logic, and the data layer. A backend typically interacts with four core components:

- Frontend (UI): The backend receives requests from the frontend, processes them, and returns responses. For example, a React web app or Flutter mobile app communicates with Python APIs to retrieve or send data.

- Database: The backend reads, writes, updates, and deletes records from databases. The database might be relational (PostgreSQL, MySQL) or non relational (MongoDB, Redis), depending on the use case.

- Server: Backend applications run on servers, whether physical machines, virtual servers (AWS EC2), containers (Docker), or serverless environments (AWS Lambda).

- External APIs and services: Backend systems integrate with third party platforms such as Stripe for payments, SendGrid for email, or Twilio for SMS notifications.

When a user performs an action on the frontend, the backend handles routing of the request, authentication of the user, data validation, business logic execution, integration with other systems, and response formatting. The frontend plays the role of the view layer, while the backend is responsible for processing and decision-making.

Core Backend Functions

Modern backend development involves several critical technical functions that ensure the correct operation of an application.

Data Processing

The backend transforms raw inputs into structured outputs. For example, when an analytics tool collects usage data, the backend aggregates and processes the data into readable reports. When a user uploads an image, the backend compresses and stores it. These workflows require careful handling to avoid performance bottlenecks.
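
As a rough illustration of the analytics case, the sketch below aggregates raw usage events into a per-action report. The event shape (`user`, `action`, `seconds`) and the function name `summarize_usage` are assumptions made for this example, not part of any particular analytics tool.

```python
from collections import defaultdict


def summarize_usage(events):
    """Aggregate raw usage events into a small per-action report.

    Each event is assumed to be a dict with "user", "action", and "seconds".
    """
    totals = defaultdict(lambda: {"count": 0, "total_seconds": 0.0, "users": set()})
    for event in events:
        row = totals[event["action"]]
        row["count"] += 1
        row["total_seconds"] += event["seconds"]
        row["users"].add(event["user"])

    # Convert the working totals into plain, report-friendly values.
    return {
        action: {
            "count": row["count"],
            "unique_users": len(row["users"]),
            "avg_seconds": round(row["total_seconds"] / row["count"], 2),
        }
        for action, row in sorted(totals.items())
    }
```

In a real system this kind of aggregation would usually be pushed down into the database or a background worker once the data volume grows.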

Authentication and Authorization

Authentication confirms identity. Authorization determines access rights. Backend developers implement secure systems using standards such as JWT (JSON Web Tokens) or OAuth2, ensuring that users only access what they are permitted to see. Security failures in this area can lead to data breaches, unauthorized transactions, and compliance violations.
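
The difference between the two steps can be sketched with the standard library alone. The example below uses a simplified HMAC-signed token as a stand-in for a JWT; it is not a JWT implementation, and the secret, the role table, and the function names are hypothetical. A production system would normally rely on a maintained JWT or OAuth2 library instead.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical secret and permission table, for illustration only.
SECRET_KEY = b"demo-secret-do-not-use-in-production"
ROLE_PERMISSIONS = {
    "admin": {"orders:read", "orders:write"},
    "viewer": {"orders:read"},
}


def issue_token(user_id: str, role: str) -> str:
    """Sign a small payload so the server can later verify who sent it."""
    payload = base64.urlsafe_b64encode(
        json.dumps({"sub": user_id, "role": role}).encode()
    ).decode()
    signature = hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()
    return payload + "." + signature


def authenticate(token: str) -> Optional[dict]:
    """Authentication: return the claims if the signature is valid, else None."""
    try:
        payload, signature = token.rsplit(".", 1)
    except ValueError:
        return None
    expected = hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    return json.loads(base64.urlsafe_b64decode(payload))


def authorize(claims: dict, permission: str) -> bool:
    """Authorization: check whether the authenticated role holds a permission."""
    return permission in ROLE_PERMISSIONS.get(claims["role"], set())
```

A request handler would call `authenticate` first and only then `authorize`: a valid token proves who the caller is, while the permission check decides what that caller may do.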

Routing and Request Handling

Routing determines how incoming URLs or API endpoints are handled. For example, an endpoint such as /api/orders may handle order creation when a POST request is received and fetch order details when a GET request is received. Backend frameworks like Django, Flask, and FastAPI simplify routing and request management.
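
To make the /api/orders example concrete, here is a minimal framework-free sketch of routing as a lookup from (method, path) to a handler function. The in-memory `ORDERS` store and the handler names are assumptions for illustration; Django, Flask, and FastAPI each provide their own, richer routing APIs.

```python
from typing import Callable, Dict, Optional, Tuple

# In-memory stand-in for a database table (hypothetical data store).
ORDERS: Dict[int, dict] = {}

Response = Tuple[int, dict]  # (HTTP status code, JSON-like body)


def create_order(body: dict) -> Response:
    order_id = len(ORDERS) + 1
    ORDERS[order_id] = {"id": order_id, **body}
    return 201, ORDERS[order_id]


def list_orders(body: dict) -> Response:
    return 200, {"orders": list(ORDERS.values())}


# The routing table: the same path maps to different handlers per HTTP method.
ROUTES: Dict[Tuple[str, str], Callable[[dict], Response]] = {
    ("POST", "/api/orders"): create_order,
    ("GET", "/api/orders"): list_orders,
}


def handle_request(method: str, path: str, body: Optional[dict] = None) -> Response:
    handler = ROUTES.get((method.upper(), path))
    if handler is None:
        # Distinguish "path exists but not for this method" from "unknown path".
        known_paths = {route_path for _, route_path in ROUTES}
        if path in known_paths:
            return 405, {"error": "method not allowed"}
        return 404, {"error": "not found"}
    return handler(body or {})
```

Calling `handle_request("POST", "/api/orders", {"item": "book"})` creates an order, while a GET on the same path lists the stored orders.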

Integration with External Systems

Modern backend applications rarely exist in isolation. They integrate with payment systems, third party authentication providers, logistics systems, AI inference engines, CRM platforms, and cloud services. Backend developers design integration logic and manage authentication tokens, API keys, and secure webhooks that handle external communication.

Responsibilities of a Backend Developer

A backend developer is responsible for designing, building, and maintaining the server side logic of an application. Key responsibilities include:

- Designing application logic and modular architecture

- Creating and managing databases and data models

- Building APIs and routing for frontend interaction

- Integrating third party systems and cloud services

- Writing secure authentication and authorization systems

- Optimizing performance, caching, and query efficiency

- Implementing scalability strategies such as microservices and load balancing

- Monitoring and debugging live production systems

Backend developers require both theoretical knowledge and practical problem solving. They work closely with frontend developers, DevOps teams, QA engineers, and product managers. The role combines engineering rigor with business understanding, as backend logic often encodes business rules, pricing logic, workflow automation, or user permissions.

Backend vs Frontend vs Full Stack

To understand backend development in context, here is a clear distinction among the three common engineering roles:

| Area | Focus | Tools and Tech |
| --- | --- | --- |
| Frontend | User interface and visual interaction | JavaScript, TypeScript, React, Vue, Flutter, HTML, CSS |
| Backend | Server logic, database, APIs, integration, security | Python, Java, Node.js, SQL, PostgreSQL, Docker, Redis |
| Full Stack | Combines frontend and backend responsibilities | Mix of frontend and backend tools |

Frontend development answers the question, “How does the application look and behave on the screen?” Backend development answers, “How does the application work behind the scenes?” Full stack developers work on both sides, especially in startups or small teams.

Backend development is not visible to the end user, yet it directly determines whether the application is reliable, secure, scalable, and fast. Poor backend design can lead to slow loading pages, inconsistent data, security vulnerabilities, and application crashes. Strong backend design enables seamless user experience, rapid feature rollout, and efficient handling of large data volumes.

Backend development is the invisible foundation of modern digital products. It is where the actual business happens: storing data, enforcing logic, securing access, and integrating systems. Without a backend, applications would be static pages unable to process input or interact with the real world. Understanding backend development allows organizations to make informed decisions about architecture, technology stack, scalability, and developer expertise.

Why Python for Backend? Strengths, Limitations, and When to Choose It

Python has become one of the most dependable and widely adopted backend programming languages because it substantially reduces engineering complexity. Organizations across multiple domains use it because it accelerates development, improves maintainability, and integrates seamlessly with modern data and AI workflows. It consistently ranks among the most popular programming languages in the world, and a primary driver of that success is its suitability for backend development. Its conceptual simplicity, large ecosystem of frameworks, and community support allow engineering teams to build and scale backend systems faster than with many other languages.

Readability and Developer Productivity

Python’s most significant advantage is its readability. The language emphasizes clean, expressive syntax that closely resembles human language. Python was intentionally designed around the principle that code should be easy to understand. This helps engineers focus on solving business problems rather than wrestling with complex syntax or boilerplate. Readability translates directly into productivity. Teams spend less time deciphering code, onboarding new developers becomes easier, and maintenance costs decrease.

For enterprise teams, readable code means long term maintainability. Enterprise platforms evolve through multiple development cycles, new team members join frequently, and code often lives for many years. Code readability reduces the probability of defects and speeds up feature development. For startups, readability accelerates experimentation and iteration. Python enables teams to create prototypes quickly and validate ideas without investing excessive resources into architecture from day one.

Vast Library Ecosystem and Framework Maturity

Python’s ecosystem is mature and extensive. Nearly every backend requirement already has well established libraries or frameworks within the Python ecosystem. Developers do not need to reinvent foundational components such as authentication, database integration, email handling, form validation, input sanitization, caching, or background task processing. Instead, they assemble backend components from a rich ecosystem of prebuilt modules.

Several well established backend frameworks have contributed to Python’s dominance:

- Django provides a full stack web development architecture with built in admin panel, ORM, authentication, security middleware, routing, and templating. Django follows the “batteries included” philosophy, offering everything needed to build large systems out of the box.

- Flask is a microframework that gives greater control over architecture decisions. It is ideal for lightweight applications, microservices, or APIs that require modularity.

- FastAPI is a modern and performant asynchronous framework optimized for building high speed APIs. It supports automatic documentation (OpenAPI and Swagger) and integrates natively with type annotations.

In addition to frameworks, Python offers powerful libraries for:

- Data processing (Pandas, NumPy)

- Machine learning (TensorFlow, PyTorch)

- Background jobs and task automation (Celery)

- Database access (SQLAlchemy, ORM tools)

- Caching (Redis and Memcached clients)

These libraries reduce development time significantly. Rather than building functionality from scratch, engineers can assemble robust backend architectures using well tested components.

Performance Considerations Compared to Compiled Languages

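Python is usually run as an interpreted language. The standard CPython implementation compiles source code to bytecode and executes it in a virtual machine, whereas languages such as Go or C++ compile ahead of time to machine code and Java relies on an optimizing just-in-time compiler. This has performance implications: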

- Python executes more slowly than compiled languages in CPU intensive operations

- Python threads cannot run CPU bound Python code in parallel in standard CPython builds because of the Global Interpreter Lock (GIL)

- Python is not ideal for tasks that require extremely low latency

However, these concerns are often overstated for most backend workloads. Backend systems are typically I/O bound rather than CPU bound. They depend more on database responsiveness, network latency, and external service calls than raw code execution speed. Frameworks like FastAPI take advantage of asynchronous execution and, in published benchmarks, perform in the same range as Node.js for many API based systems.
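
The I/O bound point can be seen in a small `asyncio` sketch. Here `asyncio.sleep` stands in for a database query or external HTTP call (an assumption for the demo), and the helper names are hypothetical. While one call is waiting, the event loop is free to start the others, so the total time approaches the slowest single call rather than the sum of all calls.

```python
import asyncio
import time


async def fake_io_call(name: str, delay: float) -> str:
    # asyncio.sleep stands in for waiting on a database or network response.
    await asyncio.sleep(delay)
    return f"{name} done"


async def handle_sequentially(delay: float, n: int):
    # Each call waits for the previous one to finish.
    return [await fake_io_call(f"call-{i}", delay) for i in range(n)]


async def handle_concurrently(delay: float, n: int):
    # All calls are started together; the waits overlap on one thread.
    return await asyncio.gather(
        *(fake_io_call(f"call-{i}", delay) for i in range(n))
    )


def timed(coro_factory):
    """Run a coroutine to completion and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro_factory())
    return result, time.perf_counter() - start


if __name__ == "__main__":
    _, seq_time = timed(lambda: handle_sequentially(0.2, 5))
    _, con_time = timed(lambda: handle_concurrently(0.2, 5))
    print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

The same overlap does not help CPU bound work, which is why heavy computation is typically moved to native libraries or separate processes.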

Where performance is critical, Python integrates with faster components. For example:

- Computationally intensive tasks can be offloaded to libraries written in C or C++

- Heavy workloads can be delegated to microservices written in Go or Rust

Engineering teams choose Python not because it is the fastest language, but because it drastically reduces development and iteration time while still offering adequate performance for the majority of backend applications.

Built In Security Within Backend Frameworks

Security is a critical factor in backend development. Python frameworks provide built in security features that protect applications from common vulnerabilities. Django ships with secure defaults enabled, and FastAPI provides request validation and security utilities, which reduces the risk of implementation mistakes.

Examples of security features in Django:

- Automatic defense against SQL injection through parameterized ORM queries

- Automatic HTML escaping in templates to prevent cross site scripting

- CSRF protection for forms

- Secure session and cookie management

- Built in authentication and role based access control

FastAPI enforces type validation and input schema validation, which reduces attack surface from malformed or malicious requests. Python’s security benefits come not only from the language design but from the maturity of the frameworks used to build applications. Each framework includes secure defaults and encourages best practices so developers avoid exposing the system to known vulnerabilities.
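
The idea behind framework-level SQL injection defenses is query parameterization. The sketch below shows it with the standard library `sqlite3` module rather than Django's ORM; the table layout and function names are assumptions made for the example.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two demo users."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, is_admin INTEGER)"
    )
    conn.executemany(
        "INSERT INTO users (name, is_admin) VALUES (?, ?)",
        [("alice", 1), ("bob", 0)],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str):
    # Vulnerable: user input is spliced directly into the SQL text,
    # so quotes in the input can change the meaning of the query.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn: sqlite3.Connection, name: str):
    # Parameterized: the driver passes the value separately from the SQL,
    # so the input is always treated as data, never as SQL syntax.
    query = "SELECT name FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]
```

With an input such as `x' OR '1'='1`, the unsafe version matches every row, while the parameterized version simply finds no user with that literal name.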

Use Cases Where Python Shines

Python’s dominance comes from its ability to handle diverse workloads. It excels in projects requiring rapid development, API driven architectures, or integration with AI and machine learning.

- Building REST and GraphQL APIs

Python frameworks such as FastAPI and Flask are lightweight, fast, and ideal for modern API architectures. Python’s async capabilities enable high concurrency, especially useful for microservices architectures and cloud native deployments.

- AI Driven Applications and Machine Learning Backends

Python is the global standard for machine learning. When an application needs to expose AI functionality through an API, Python becomes the default choice. Using Python on both backend and AI layers eliminates the complexity of multi language integration and reduces maintenance risk.

- Startup MVPs and Rapid Prototyping

Python allows new products to be built and tested quickly. Django, with its built in ORM and admin interface, allows teams to go from idea to functional prototype in days rather than months.

- Enterprise Workflow Automation and Tooling

Python is used extensively in DevOps automation, internal admin tools, ETL systems, automation scripts, and integration services. Its flexibility makes it the ideal language for solving diverse backend challenges across large organizations.

When Not to Choose Python

Although Python provides significant advantages, it is not suitable for every backend use case. There are situations where alternative languages outperform Python.

Python should not be the default choice for:

High Frequency Trading Systems

These applications demand microsecond level performance. Financial trading platforms typically use C++ or Java because execution speed directly affects revenue.

Ultra Low Latency Systems

Applications that must respond instantly at high volume, such as embedded systems or real time gaming servers, require languages optimized for speed and memory efficiency, such as Rust or Go.

High Concurrency Real Time Applications

While Python can scale through async frameworks, runtimes such as Node.js and Go often handle high concurrency more efficiently for real time systems like chat servers or multiplayer game engines.

In most typical business applications, performance constraints can be resolved through architecture improvements rather than language change.

Python is chosen for backend development because it prioritizes clarity, speed of development, flexibility, and integration with the AI and data ecosystem. It reduces complexity in backend engineering, provides secure frameworks, and allows companies to scale both applications and development teams. While Python may not be the best choice for ultra low latency or high frequency workloads, it is an exceptional default for APIs, web applications, automation platforms, AI driven services, and MVPs. In modern backend architecture, speed of iteration matters as much as performance, and Python consistently maximizes development velocity without sacrificing reliability.

Python Backend Frameworks Compared

Python’s rise as a backend powerhouse is closely linked to its frameworks. A backend framework defines structure, automates common server tasks, and gives developers a proven architecture to build on. The Python ecosystem offers frameworks suited for almost every type of backend — from large feature rich monolithic systems to lightweight microservices and high performance asynchronous APIs.

Among the many available frameworks, three stand out as dominant choices: Django, Flask, and FastAPI. Each was built with a distinct philosophy and is optimized for different use cases. Surrounding these are specialized frameworks such as Pyramid, Tornado, and AIOHTTP, which serve niche architectural requirements, particularly where real time communication or highly custom architectures are needed.

Selecting the right framework early in a project significantly affects development speed, scalability, and maintainability. This section dives deep into the strengths of each framework, their architecture paradigms, and the situations where each is the best choice.

Django

Django is a full stack backend framework designed to deliver high productivity and enforce clean architectural patterns. It follows the philosophy of “batteries included”, meaning the framework provides nearly every component required to build a production ready backend: routing, templating, database integration, security mechanisms, session handling, and admin interfaces.

Built In ORM, Admin Panel, Security Middleware

Django includes its own ORM (Object Relational Mapping), enabling developers to interact with databases using Python objects instead of writing raw SQL. This abstraction layer reduces database complexity and improves security, because Django builds parameterized queries that keep user input separate from the SQL text.
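
To show what an object relational mapping layer does conceptually, here is a deliberately tiny mapper built on `sqlite3` and `dataclasses`. It is a sketch of the idea, not Django's ORM API: the `Repository` class, its `add` and `filter` methods, and the `Product` model are all hypothetical names introduced for this example.

```python
import sqlite3
from dataclasses import astuple, dataclass, fields


@dataclass
class Product:
    name: str
    price_cents: int


class Repository:
    """Toy mapper that derives SQL from a dataclass definition."""

    def __init__(self, conn: sqlite3.Connection, model):
        self.conn = conn
        self.model = model
        self.table = model.__name__.lower()
        self.columns = [f.name for f in fields(model)]
        self.column_list = ", ".join(self.columns)
        # Table and column names come from the class definition, not from users.
        conn.execute(f"CREATE TABLE {self.table} ({self.column_list})")

    def add(self, obj) -> None:
        placeholders = ", ".join("?" for _ in self.columns)
        self.conn.execute(
            f"INSERT INTO {self.table} ({self.column_list}) VALUES ({placeholders})",
            astuple(obj),
        )

    def filter(self, **conditions):
        unknown = set(conditions) - set(self.columns)
        if unknown:
            raise ValueError(f"unknown columns: {sorted(unknown)}")
        # Values are always bound as parameters, never formatted into the SQL.
        where = " AND ".join(f"{col} = ?" for col in conditions) or "1 = 1"
        rows = self.conn.execute(
            f"SELECT {self.column_list} FROM {self.table} WHERE {where}",
            tuple(conditions.values()),
        )
        return [self.model(*row) for row in rows]
```

Application code then works with `Product` objects, for example `repo.filter(name="Mouse")`, and never assembles SQL strings by hand. A real ORM adds migrations, relationships, lazy querysets, and much more on top of this basic mapping.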

One of Django’s strongest features is its automatic admin panel. Once models are defined and registered with the admin site, Django generates a fully functioning backend dashboard where administrators can manage data, review records, and perform CRUD operations. Many companies choose Django for internal systems or business dashboards largely because the admin interface saves substantial development time.

Security is tightly integrated into Django. It provides internal protections against the most common attack vectors faced in backend systems, including cross site scripting, SQL injection, session hijacking, and forgery based attacks. Instead of relying on optional add ons, Django enforces secure defaults out of the box.

Best for Monolithic Applications

Django excels in monolithic architectures — systems where backend logic, routing, templating, and database layers exist within the same framework. This is ideal for feature rich products that need strong structure and consistency.

Examples include:

- SaaS platforms with dashboards

- Social networks

- Marketplace platforms with admin management

- Multi role enterprise applications

Django focuses on speeding up development without requiring developers to make architectural decisions for routine elements. Many engineering leaders describe Django as a “framework that gives you structure even before you ask for it.”

Example Architecture

A typical Django application contains:

- URL routing layer (handles user requests)

- View layer (contains business logic and connects to the database)

- Model layer (database mapping through ORM)

- Template layer (optional if serving HTML)

- Middleware (security, authentication, session management)

Django’s opinionated structure ensures all applications follow a clean, maintainable pattern, making it easier to scale teams and codebases as projects grow.

Flask

Flask is the opposite of Django in philosophy. Where Django provides structure and a batteries included toolkit, Flask offers freedom and minimalism. Flask is a microframework, meaning it intentionally includes only the essentials for request handling and routing. Developers bring in additional components only when required.

Many senior backend teams prefer Flask because it lets them design architecture without constraints. Instead of starting with a skeleton full of preconfigured choices, Flask offers a blank canvas.

Microframework Flexibility

Flask does not include a built in ORM, validation layer, authentication system, or admin dashboard. Instead, developers assemble these components through extensions or custom implementations. This flexibility leads to highly tailored backend architecture designs.

Flask is ideal when:

- The project needs full control over structure and dependencies

- The backend will operate as a lightweight API or microservice

- Developers want minimal overhead and faster boot times

- The application integrates with AI or ML systems and needs simplicity

Large organizations often use Flask for internal service layers and API gateways because microservices favor smaller codebases and faster deployments, both of which align with Flask’s lightweight nature.

When Minimalism Is Better

Flask shines in environments where architecture decisions cannot be dictated by an opinionated framework.

Situations where Flask is the strategic choice:

- Applications composed of microservices

- Backend systems acting primarily as REST or RPC endpoints

- Cloud native deployments requiring isolated services

- Teams with established best practices and a desire for custom configurations

Startups use Flask when the product vision is evolving and architecture may change drastically. Enterprises use Flask when integrating with complex distributed systems.

FastAPI

FastAPI is the newest major Python backend framework and one of the fastest growing. It was created to address modern development requirements that Django and Flask were not initially designed for: async concurrency, high throughput APIs, and machine learning microservices.

FastAPI is built on the ASGI (Asynchronous Server Gateway Interface) standard and supports asynchronous request handlers natively, which allows it to handle highly concurrent workloads efficiently.

Async First Design

Traditional WSGI based Python web servers dedicate a worker thread or process to each request while it is being handled. FastAPI uses an event loop and async I/O so that thousands of requests can be in flight concurrently using significantly fewer system resources. This architecture makes FastAPI a serious contender in environments where performance is critical.

FastAPI simplifies development while supporting advanced features like:

- Automatic data validation through typing

- Auto generated OpenAPI documentation

- High concurrency without additional plugins

The framework introduces modern engineering practices into backend development. Engineers using FastAPI benefit from cleaner validation, typed request handling, and built in documentation tools.
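
The idea of validating requests from type annotations can be sketched with the standard library alone. This is a simplified illustration of the concept, not FastAPI's or Pydantic's actual implementation: `parse_request`, `ValidationError`, and the `CreateOrder` model are hypothetical names, and only plain types such as `str`, `int`, and `bool` are handled.

```python
from dataclasses import MISSING, dataclass, fields


class ValidationError(ValueError):
    """Raised with a dict of field name -> problem description."""


@dataclass
class CreateOrder:
    item: str
    quantity: int
    express: bool = False


def parse_request(model, payload: dict):
    """Validate a JSON-like dict against the type hints declared on a dataclass."""
    errors, values = {}, {}
    for field in fields(model):
        if field.name not in payload:
            # Fields without a default are required.
            if field.default is MISSING and field.default_factory is MISSING:
                errors[field.name] = "field required"
            continue
        value = payload[field.name]
        # Exact type match, so True is not silently accepted as an int.
        if type(value) is not field.type:
            errors[field.name] = (
                f"expected {field.type.__name__}, got {type(value).__name__}"
            )
        else:
            values[field.name] = value
    if errors:
        raise ValidationError(errors)
    return model(**values)
```

A handler that receives `{"item": "book", "quantity": 2}` gets back a typed `CreateOrder` object, while a malformed payload is rejected before any business logic runs. Real validation libraries also handle coercion, nested models, and optional or generic types.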

High Performance for APIs

Benchmarks cited by the FastAPI project show performance in the same range as Node.js and Go for typical API workloads, although results vary with the workload and deployment. In large API centric systems, especially those with multiple integrations or high request frequency, this speed can reduce hosting costs and improve user experience.

Microservices, mobile app backends, and third party API providers benefit from FastAPI’s responsiveness.

Ideal for AI and ML Microservices

FastAPI has become a common choice for serving ML inference models. Since Python dominates AI, using FastAPI removes friction between research code and production deployment. AI models are deployed as lightweight service endpoints without architectural overhead.

Use cases include:

- Exposing ML predictions via API

- Running inference workloads at scale

- Integrating with streaming platforms or event queues

FastAPI enables data science teams to deploy models without needing extensive backend engineering support.

Other Frameworks (Brief Overview)

While Django, Flask, and FastAPI collectively dominate Python backend development, several other frameworks fulfill niche roles.

| Framework | Best Use Case |
| --- | --- |
| AIOHTTP | Real time applications requiring asynchronous networking, web sockets, or streaming responses |
| Pyramid | Flexible applications where teams want to scale from microframework simplicity to full stack architecture without switching frameworks |
| Tornado | Long lived connections such as event streaming, chat systems, or high concurrency applications |

These frameworks are less commonly used in mainstream SaaS but remain relevant in infrastructure and specialized networking systems.

Framework Comparison Table

| Framework | Speed | Learning Curve | Best Use Case | Architecture Style |
| --- | --- | --- | --- | --- |
| Django | Moderate | Moderate | Monolithic apps, dashboards, enterprise systems | Full stack, batteries included |
| Flask | Fast | Easy | Custom microservices, flexible architectures | Minimalist, microframework |
| FastAPI | Very fast | Easy to moderate | High performance APIs, ML microservices, async workloads | Async API first |
| AIOHTTP / Tornado / Pyramid | High | Advanced | Streaming, networking heavy systems | Async networking |

Django, Flask, and FastAPI are the backbone of Python backend development. They reflect three architectural philosophies:

- Django: structure, completeness, and rapid development for full scale monolithic systems.

- Flask: customization, minimalism, and flexibility for modular architectures and microservices.

- FastAPI: speed, async concurrency, and integration with ML/AI workloads.

Choosing the right one depends on project goals. When a team values structure and needs to ship a complete product quickly, Django accelerates delivery. When flexibility is required and architecture may evolve, Flask provides maximum freedom. When performance and scalability matter, particularly for APIs or AI systems, FastAPI delivers exceptional results.

Correct framework selection is a strategic architectural decision, one that impacts scalability, cost, and time to market.

Databases in Python Backend Development

A backend application succeeds or fails based on how efficiently it stores, retrieves, and manages data. Every application — whether a grocery ordering app, a hospital EMR system, or a fintech platform — needs a structured approach to handling information. Databases are the foundation of that structure. Python excels in backend development partly because it integrates smoothly with the full spectrum of database options: relational databases, NoSQL databases, in memory storage engines, and distributed data systems.

Unlike frontend code, backend code interacts constantly with data. When a user logs in, the backend checks credentials. When an admin updates product inventory, the backend writes to a database. When a dashboard displays analytics, the backend performs queries and aggregates results. Each of these operations places demands on the database layer, making database architecture a core component of backend reliability, scalability, and user experience.

Python provides native drivers, Object Relational Mappers (ORMs) like Django ORM and SQLAlchemy, and ecosystem support for both SQL and NoSQL systems. Understanding database types and how they align with business requirements is crucial before writing a single line of backend code.

Relational Databases

Relational databases store information in well-defined, structured tables with rows and columns. Each table represents an entity — for example, a table for users, another for orders, another for payments — and relationships between tables are enforced using foreign keys. These systems rely on Structured Query Language (SQL) for querying and manipulating data.

The most widely used relational databases in Python backend projects are PostgreSQL and MySQL.

- PostgreSQL

PostgreSQL is known for being powerful, reliable, and standards compliant. It is preferred when applications require complex queries, transactional reliability, or strong data integrity. PostgreSQL excels in financial systems, analytics dashboards, multi tenant SaaS platforms, and enterprise software where accuracy and consistency are non negotiable.

What makes PostgreSQL stand out is its ability to handle both structured and semistructured data. It supports advanced indexing, full text search, and even JSON storage with indexing capabilities. The ability to mix traditional relational data with JSON gives teams flexibility without abandoning the structure that relational systems provide.

- MySQL

MySQL is one of the most widely deployed databases globally. It powers large scale websites and remains popular due to its simplicity, performance, and wide hosting support. Applications that require fast reads, predictable behavior, and efficient scaling often choose MySQL. eCommerce platforms, CMS systems, and customer facing web applications frequently rely on MySQL because performance optimization, replication, and clustering are well documented and mature.

When to Use Structured Data and Relational Databases

Relational databases are the best choice when:

- The data has a clear structure and defined relationships.

- Accuracy and consistency are more important than flexibility.

- Business logic requires constraints such as unique keys or required fields.

- Transactions must be reliable, such as updating an order and payment together.

Finance, healthcare, HR systems, and inventory platforms all rely on relational databases because incorrect or inconsistent data can create significant business risk.

- ORM vs Raw SQL

Python backends interact with relational databases using one of two approaches: raw SQL or ORMs.

ORMs allow developers to interact with the database using Python objects instead of writing manual SQL queries. This reduces repetitive boilerplate code and, because ORMs build parameterized queries, greatly reduces the risk of SQL injection (raw-query escape hatches still need care). Django ORM allows developers to define database schema using Python classes, while SQLAlchemy provides a powerful abstraction layer and query builder.

However, ORMs can sometimes generate inefficient queries, particularly for complex joins or reporting queries involving aggregation. In those cases, backend developers still rely on raw SQL because it offers efficiency, performance optimization, and fine grain control over how the database processes data.

A practical rule in backend architecture is: use ORM for CRUD operations and developer efficiency, and use raw SQL when optimizing high performance queries.
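To make the raw SQL side of that rule concrete, here is a minimal sketch of a hand-written reporting query. It assumes an in-memory SQLite database as a stand-in for PostgreSQL or MySQL; the `orders` table and the `revenue_by_customer` helper are hypothetical names chosen for illustration.

```python
import sqlite3

# In-memory SQLite stands in for PostgreSQL/MySQL; the schema is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [("alice", 30.0), ("alice", 20.0), ("bob", 15.0)],
)


def revenue_by_customer(conn, min_revenue):
    """Reporting query written by hand: aggregate, filter, and sort in SQL."""
    sql = """
        SELECT customer, SUM(total) AS revenue
        FROM orders
        GROUP BY customer
        HAVING SUM(total) >= ?
        ORDER BY revenue DESC
    """
    # The threshold is a bound parameter; it is never formatted into the string.
    return conn.execute(sql, (min_revenue,)).fetchall()


report = revenue_by_customer(conn, 20)
```

Even in raw SQL, values travel as bound parameters (`?` here) rather than through string formatting, which keeps the injection protection that an ORM would otherwise provide.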

NoSQL Databases

NoSQL databases emerged to solve challenges that relational systems were not designed for: massive scale, rapidly changing schemas, or high-velocity data ingestion. These databases store data in formats other than relational tables, allowing for flexibility in structure. Instead of enforcing a single rigid relational schema, many NoSQL systems let the shape of the data vary from record to record.

Python integrates seamlessly with NoSQL through native drivers and libraries. The most commonly used NoSQL databases in Python based systems are MongoDB, Redis, and Cassandra.

- MongoDB

MongoDB stores records as document objects that resemble JSON. Instead of tables, data is stored in collections, and each record may have different fields. This flexibility is powerful in modern applications where user generated content may not follow strict uniform patterns.

MongoDB is ideal for:

- Content management systems

- Rapidly changing data, such as product metadata or user profiles

- Backend systems that evolve without needing schema migrations

Developers favor MongoDB because they can modify data models without altering the entire database structure. Teams building MVPs often choose MongoDB because it enables rapid iteration.

- Redis

Redis is an in-memory key-value store designed primarily for speed. Because data is served from RAM, reads and writes are extremely fast, making Redis ideal for caching. Persistence to disk is available through snapshots and an append-only file, but it is optional. Backend applications frequently use Redis in front of relational databases to reduce load and improve responsiveness.

Redis is used for:

- Caching frequently accessed data to improve performance

- Session management in authentication

- Counters, queues, real time analytics, and rate limiting

In most Python backends Redis complements the primary data store rather than replacing it, but its impact on application responsiveness is significant: with a high cache hit rate, most reads for frequently requested data never reach the database.
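The caching role described above is often implemented as a cache-aside lookup. The sketch below is illustrative only: `TTLCache` is a dict-backed stand-in for a Redis client, and `load_product_from_db` and `get_product` are hypothetical names, so the example runs without a Redis server.

```python
import time


class TTLCache:
    """Dict-backed stand-in for a Redis client (hypothetical, for illustration)."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._clock = clock

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired entries behave like a miss
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._data[key] = (value, self._clock() + ttl_seconds)


stats = {"db_calls": 0}


def load_product_from_db(product_id):
    """Pretend database query; counts calls so the cache effect is visible."""
    stats["db_calls"] += 1
    return {"id": product_id, "name": f"product-{product_id}"}


def get_product(cache, product_id, ttl_seconds=60):
    """Cache-aside: try the cache, fall back to the database, then populate."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    product = load_product_from_db(product_id)
    cache.set(key, product, ttl_seconds)
    return product


cache = TTLCache()
first = get_product(cache, 7)
second = get_product(cache, 7)  # served from the cache, no second DB call
```

The time-to-live bounds how stale a cached value can become; choosing it is a trade-off between freshness and database load.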

- Cassandra

Cassandra is designed for massive scale and distributed write heavy workloads. It excels when an application requires data availability across multiple geographic locations. Instead of relying on a single master node, Cassandra distributes data across multiple nodes, making it fault tolerant and highly scalable.

Cassandra is suitable for:

- Applications handling huge volumes of data

- Logging, event collection, IoT systems, and telemetry data

- Platforms that must remain available even if servers fail

While harder to operate than MongoDB or Redis, Cassandra provides incredible resilience for systems where uptime is critical.

When to Use NoSQL

NoSQL databases are ideal when:

- Data does not follow a predictable structure

- The system requires horizontal scaling across multiple nodes

- High write speed and throughput matter more than strong data consistency

Real world examples include social networks, recommendation engines, chat systems, and analytics pipelines — all environments where user behavior cannot be modeled neatly into relational table structures and scalability demands are high.

Choosing the Right Database

Selecting a database is not only a technical decision but a strategic one. It affects scalability, cost, development speed, and reliability. Backend architects evaluate database choice using three lenses: data structure, performance needs, and growth trajectory.

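The CAP theorem helps architects reason about unavoidable trade-offs when designing distributed systems. It states that a distributed data store can guarantee at most two of the following three properties at once. Because network partitions cannot be ruled out in practice, the real choice during a partition is between consistency and availability: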

- Consistency — all nodes show the same data at the same time.

- Availability — every request receives a response even if nodes fail.

- Partition tolerance — the system continues operating even if communication between nodes is lost.

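A single-node relational database sidesteps the trade-off because it is not distributed, and replicated relational deployments typically favor consistency. Many NoSQL databases favor availability and partition tolerance for better scalability, although some, such as Cassandra, let the consistency level be tuned per operation. Understanding this trade-off prevents choosing a database that fails under load or sacrifices necessary consistency.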

Scalability decisions follow naturally. If data consistency is paramount — for example, updating a bank balance — a relational database is the correct choice. If scalability and distributed writes matter more — for example, storing millions of sensor readings per minute — NoSQL becomes the better option.

Modern backend architecture often combines multiple databases. A SaaS platform might use PostgreSQL to store account data and billing records, Redis to cache frequently viewed information, and MongoDB to handle user generated content. This approach is called polyglot persistence — using different databases for different tasks.

Databases are not just storage; they define how the backend behaves at scale, under pressure, and over time. Relational databases like PostgreSQL and MySQL offer structure, consistency, and transaction safety — making them ideal for systems where accuracy matters. NoSQL databases like MongoDB, Redis, and Cassandra provide flexibility, speed, and scalability for dynamic or high volume workloads.

Python supports all these databases with rich tooling, ORMs, and extensive ecosystem support, giving backend developers freedom to architect systems that evolve as the product grows. Choosing the right database upfront sets the foundation for performance, reliability, and long term scalability.

Python Backend Architecture Patterns

The architecture of a backend system defines how its components are structured, how responsibilities are distributed, and how the system evolves over time. The choice of architecture affects every dimension of a project: performance, maintainability, scalability, team productivity, deployment strategy, and long term system evolution. Python’s flexibility and mature ecosystem enable it to support multiple architectural styles, from tightly structured monoliths to distributed event driven and microservices systems.

Backend architecture is not just a technical decision; it is a business strategy. Startups choose architectures that enable speed and iteration. Enterprises prioritize scalability, reliability, and maintainability. Python is suited to both ends of the spectrum because it adapts well to different architecture patterns.

This section explores how Python fits into key architecture patterns: monolithic, microservices, and event driven models. It also explains MVC and MVT patterns used in web applications and introduces the concept of clean architecture, an increasingly popular approach for maintaining large Python codebases.

Monolithic Architecture

A monolithic architecture is a single unified codebase where all backend components — routing, business logic, authentication, database operations, and API handling — reside within one application. At runtime, this application is deployed as a single executable package or process.

Monoliths are commonly used during the early stages of a product because they are faster to build and easier to manage. Python frameworks such as Django are intentionally designed with monolithic development in mind. They include integrated components such as ORM, authentication handling, middleware, and routing, enabling full featured backend development without having to assemble a system from multiple components.

Advantages of monolithic architecture include:

- Faster development — ideal for MVPs, startups, and small teams

- Simpler deployment — one application to deploy, one pipeline to maintain

- Consistency — a common codebase with shared models and shared configuration

- Easier debugging — problems can be traced without distributed tracing or inter service dependencies

However, monoliths can become challenging when scaling beyond a certain size. As the application grows, deployments become riskier because changes in one module may inadvertently affect others. Scaling is also coarse-grained: the application is scaled as a whole, either by adding resources to the server or by running more copies of the entire application, rather than scaling only the components under load.

Monoliths are well suited for projects where:

- The domain is well understood and not rapidly evolving

- The team is small and prefers shared context

- The product requires fast time to market

Django’s success in powering large platforms like Instagram demonstrates that monoliths can scale when the architecture is well maintained and supported by caching, horizontal database scaling, and load balancing.

Microservices Architecture

Microservices architecture breaks a large application into independent services, each responsible for a specific business capability. Instead of a single codebase that handles everything, multiple small services communicate with each other, often via REST or async messaging.

Python is particularly strong in microservices environments because lightweight frameworks such as Flask and FastAPI make it easy to build small independent services. Microservices are popular in companies with complex systems and large engineering teams where services evolve independently.

Key benefits of microservices include:

- Independent deployment: Teams can deploy one service without affecting the entire system.

- Technology flexibility: One service may use Python, another may use Go or Rust, depending on performance needs.

- Fault isolation: If one service fails, it doesn’t necessarily bring down the whole system.

- Scalability: Individual services can scale based on demand instead of scaling the whole app.

However, microservices introduce new challenges: service discovery, distributed tracing, network communication, and increased DevOps complexity. The design demands clear separation of responsibilities, robust communication patterns, and infrastructure support.

Microservices are ideal when:

- A system has multiple independent domains (billing, authentication, analytics)

- Many developers or teams contribute to the same product

- The application must scale horizontally across nodes and geographic regions

Python excels in microservices because it supports rapid iteration and integrates naturally with asynchronous communication patterns, message queues, and cloud native architecture.

Event Driven Architecture

Event-driven systems process information based on events rather than direct function calls. Instead of a service asking another service for data, systems publish events into a queue or event stream, and other services subscribe to receive them. Python integrates well with event-driven architecture because of its mature task queue libraries and broker clients (Celery, plus clients for RabbitMQ, Redis, and Kafka).

In an event driven system:

- Services produce events (such as “order placed”)

- Events are stored or queued

- Other services react asynchronously (such as “send confirmation message” or “generate invoice”)

This pattern enables loose coupling between components. The service responsible for creating orders does not need to know anything about the messaging service or billing service. It simply publishes an event and continues its execution. Event driven systems are resilient, scalable, and well suited for real time applications.
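The produce, queue, and react steps can be sketched with a tiny in-process event bus. This is a simplified illustration, not a real broker: `EventBus`, `place_order`, and the `order_placed` event name are hypothetical, and a production system would use a broker with worker processes instead of the `drain` call.

```python
from collections import defaultdict, deque


class EventBus:
    """In-process stand-in for a message broker (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._queue = deque()

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        # The producer only enqueues; it does not call or know the consumers.
        self._queue.append((event_type, payload))

    def drain(self):
        """Deliver queued events; real systems do this in separate workers."""
        while self._queue:
            event_type, payload = self._queue.popleft()
            for handler in self._subscribers[event_type]:
                handler(payload)


bus = EventBus()
sent_emails, invoices = [], []
bus.subscribe("order_placed", lambda e: sent_emails.append(e["order_id"]))
bus.subscribe("order_placed", lambda e: invoices.append((e["order_id"], e["total"])))


def place_order(order_id, total):
    """Order service: publish the event and return without waiting."""
    bus.publish("order_placed", {"order_id": order_id, "total": total})
    return {"order_id": order_id, "status": "accepted"}


response = place_order(101, 49.5)
handled_before_drain = list(sent_emails)  # still empty: consumers have not run
bus.drain()
```

Note that `place_order` never references the email or invoicing handlers, which is the loose coupling the pattern is meant to provide.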

Modern use cases include:

- Order processing in eCommerce

- IoT systems receiving thousands of sensor updates

- Notification systems that trigger emails or SMS messages

Python is often used in event driven systems to create worker services that respond to queued tasks. With tools like Celery or Kafka consumers, Python backends can handle large async workloads efficiently.

MVC and MVT Patterns in Python Frameworks

Architectural patterns such as MVC (Model View Controller) help organize code into clear roles. Many backend frameworks use variations of MVC to maintain separation of concerns.

In the MVC model:

- Models handle data and business logic

- Views manage presentation

- Controllers handle requests and interactions

Python frameworks adopt variations of this structure to keep projects organized.

Django and the MVT Pattern

Django uses Model View Template (MVT), a close relative of MVC. The controller role is handled by the framework itself: Django's URL dispatcher routes each request to a view, and templates render the output. The key components are:

- Model: Defines data structure and business logic; tightly integrated with Django ORM

- View: Controls application logic and returns responses

- Template: Renders the final representation, usually HTML; JSON responses are typically returned directly from views

This architecture enforces separation, making the codebase easier to maintain. Because Django provides strong conventions, development teams benefit from consistency even in large applications.

In structured frameworks like Django, architecture is not an afterthought — it’s provided upfront. Developers can start building features immediately without deciding folder structures or layering logic manually.

Clean Architecture for Large Python Codebases

Clean architecture addresses the challenges of growing codebases. In monoliths and microservices alike, complexity increases when business logic is deeply intertwined with infrastructure code, database models, and frameworks.

Clean architecture promotes separation of business rules from implementation details. Instead of writing logic directly inside controllers or views, a clean architecture isolates domain logic in independent modules that do not depend on frameworks or external services.

Core principles include:

- Business logic should not depend on database choice, framework, or delivery mechanism.

- The system should remain flexible enough to swap out components without rewriting core logic.

- Dependencies point inward, meaning external systems depend on business rules, not vice versa.

In practice, this leads to layered architecture:

- Domain layer models business rules

- Application layer coordinates use cases

- Infrastructure layer deals with frameworks, databases, APIs

- Presentation layer delivers output to clients

Python is well suited to clean architecture because of its readability and modularity. Large engineering organizations use clean architecture to avoid technical debt, ensure longevity, and reduce coupling between components.
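A minimal sketch of the inward-pointing dependency rule might look like the following. All names (`Account`, `AccountRepository`, `withdraw`, `InMemoryAccountRepository`) are hypothetical; the point is only that the use case depends on an abstract port, while the storage adapter depends on the domain.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Account:
    """Domain entity: plain data, no framework or database imports."""
    account_id: str
    balance_cents: int


class AccountRepository(Protocol):
    """Port defined by the domain; infrastructure provides implementations."""

    def get(self, account_id: str) -> Optional[Account]: ...
    def save(self, account: Account) -> None: ...


class InsufficientFunds(Exception):
    pass


def withdraw(repo: AccountRepository, account_id: str, amount_cents: int) -> Account:
    """Application-layer use case that only knows the repository interface."""
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    account = repo.get(account_id)
    if account is None:
        raise KeyError(account_id)
    if amount_cents > account.balance_cents:
        raise InsufficientFunds(account_id)
    account.balance_cents -= amount_cents
    repo.save(account)
    return account


class InMemoryAccountRepository:
    """Infrastructure adapter; a SQL-backed class could replace it unchanged."""

    def __init__(self):
        self._rows = {}

    def get(self, account_id):
        return self._rows.get(account_id)

    def save(self, account):
        self._rows[account.account_id] = account


repo = InMemoryAccountRepository()
repo.save(Account("acc-1", 1000))
updated = withdraw(repo, "acc-1", 250)
```

Because `withdraw` accepts anything that satisfies the protocol, the same business rule can be exercised in tests with the in-memory adapter and in production with a database-backed one.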

Architecture defines the backbone of a backend system. Monolithic architectures accelerate development and are ideal for early stage products. Microservices support scalability and organizational independence, making them the go to choice for large engineering teams. Event driven architecture optimizes responsiveness and enables real time systems. MVC and MVT patterns provide structure in web frameworks like Django, while clean architecture ensures maintainability and separation of concerns in large codebases.

Python doesn’t force a particular architecture. Instead, it gives teams the freedom to adopt the pattern that best fits their product stage, performance needs, and team structure. For fast moving startups, monoliths keep complexity low. For enterprise scale systems, microservices and clean architecture offer modularity and long term sustainability.

With Python, architecture becomes a strategic accelerator rather than a constraint.

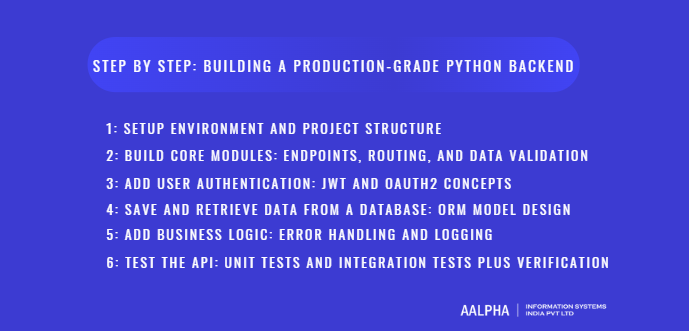

Step by Step: Building a Production-Grade Python Backend

Building a production-grade Python backend is a sequence of deliberate engineering decisions. Each step must be designed with maintainability, observability, and operational realities in mind. The goal is to move beyond a toy project and create a system that can be owned by teams, survive real traffic, and evolve safely. What follows is a developer- and operator-focused walkthrough that covers environment setup, project structure, core modules, authentication, persistence, business logic, error handling and logging, and testing. The guidance is framework-agnostic; it describes concrete practices that apply whether the codebase uses Django, Flask, FastAPI, or a different Python web stack.

- Set up environment and project structure

Begin by establishing an isolated, reproducible environment. Use virtual environments to ensure dependency separation between projects. Prefer tools that give deterministic dependency resolution and lockfiles: Poetry for dependency and packaging management or pip combined with a requirements lock file are both acceptable. The team should decide on one tool and standardize it in the repository so all developers and CI runners install identical dependency versions. Store a lockfile in version control and update it deliberately as part of dependency upgrades.

Adopt a clear project layout from day one. A consistent layout reduces cognitive load and makes onboarding faster. Top level directories typically include an application package with well named modules for models, services, controllers or views, and schemas or serializers for request and response validation, along with separate folders for tests, migrations, and infrastructure code such as Docker and CI pipelines. Keep configuration separate from code: use environment variables for runtime settings and a small configuration layer in the code that validates and types environment values at startup. Secrets should never be committed to source control. Use a secrets manager in production and a secure local secret store for development.
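One way to build the small configuration layer mentioned above is a typed settings object populated once at startup. This is a sketch under assumptions: the variable names `DATABASE_URL`, `DEBUG`, and `PORT`, and the `Settings` and `load_settings` names, are hypothetical.

```python
import os
from dataclasses import dataclass


class ConfigError(Exception):
    """Raised at startup when the environment is incomplete or malformed."""


@dataclass(frozen=True)
class Settings:
    database_url: str
    debug: bool
    port: int


def load_settings(env=None):
    """Read, validate, and type environment values in one place."""
    env = os.environ if env is None else env
    errors = []

    database_url = env.get("DATABASE_URL", "")
    if not database_url:
        errors.append("DATABASE_URL is required")

    raw_debug = env.get("DEBUG", "false").lower()
    if raw_debug not in ("true", "false"):
        errors.append("DEBUG must be 'true' or 'false'")

    raw_port = env.get("PORT", "8000")
    if not raw_port.isdecimal() or not 1 <= int(raw_port) <= 65535:
        errors.append("PORT must be an integer between 1 and 65535")

    if errors:
        # Fail fast with every problem listed, instead of failing mid-request.
        raise ConfigError("; ".join(errors))
    return Settings(database_url, raw_debug == "true", int(raw_port))


settings = load_settings(
    {"DATABASE_URL": "postgresql://localhost/app", "PORT": "8080"}
)
```

Passing a mapping instead of reading `os.environ` directly keeps the loader easy to test; in the running application it would be called with no arguments.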

Containerization should be in the plan from the start. Create a simple container build that runs the application in a production style process environment. The container image defines the runtime environment and makes local development closer to production. Add a lightweight development compose configuration that runs the application together with dependent services such as a database, a cache, and a message broker so the team can run the full stack locally.

Finally, include developer tooling: linters, formatters, type checkers, and pre commit hooks. Use a linter to enforce consistent style and catch common errors. Type checking with optional static typing improves developer confidence and helps runtime stability. Integrate these checks into CI so every change is validated automatically.

- Build core modules: endpoints, routing, and data validation

Design the API contract first. Define resources, routes, expected payloads, response shapes, error schemas, and API versioning strategy. Use machine-readable API description formats such as OpenAPI for REST or SDL for GraphQL, from which human-readable documentation can be generated, and keep documentation synchronized with implementation. Contracts make it easier for frontend and mobile teams to work in parallel and reduce integration friction.

Organize code around domain concepts rather than technical layers. For each domain area create a module containing its models, validation schemas, and service level logic. Routing should be thin: controllers or route handlers must orchestrate input validation, call the domain service, and format responses. Keep transformation, validation, and serialization logic in dedicated schema modules so the controllers remain small and easy to test.

Data validation is a first class citizen. Use robust schema-validation tools to enforce types, required fields, ranges, and shape of nested structures at the boundary. Validate and canonicalize input early to avoid propagating malformed data into business logic. Validation errors must map to clear error codes and human friendly messages that clients can act on.
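Boundary validation of this kind can be sketched with the standard library alone; in practice a schema library would usually do this work. The `CreateOrder` schema, its field limits, and the error codes below are assumptions made for illustration.

```python
from dataclasses import dataclass


class ValidationError(Exception):
    """Carries a list of {"field", "code", "message"} entries for the client."""

    def __init__(self, errors):
        super().__init__("validation failed")
        self.errors = errors


@dataclass(frozen=True)
class CreateOrder:
    sku: str
    quantity: int


def parse_create_order(payload):
    """Validate and canonicalize raw input before it reaches business logic."""
    errors = []

    sku = payload.get("sku")
    if not isinstance(sku, str) or not sku.strip():
        errors.append({"field": "sku", "code": "required",
                       "message": "sku must be a non-empty string"})

    quantity = payload.get("quantity")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(quantity, bool) or not isinstance(quantity, int):
        errors.append({"field": "quantity", "code": "invalid_type",
                       "message": "quantity must be an integer"})
    elif not 1 <= quantity <= 100:
        errors.append({"field": "quantity", "code": "out_of_range",
                       "message": "quantity must be between 1 and 100"})

    if errors:
        raise ValidationError(errors)
    # Canonical form: trimmed, upper-case SKU.
    return CreateOrder(sku=sku.strip().upper(), quantity=quantity)


order = parse_create_order({"sku": "  ab-12 ", "quantity": 3})
```

Collecting all errors before raising lets a client fix every problem in one round trip instead of discovering them one at a time.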

Design idempotent endpoints where applicable and document side effects. Introduce pagination, filtering, and sorting for collection endpoints. Define consistent response envelopes for success and failure cases. Establish a coherent approach to API versioning from day one to avoid breaking clients when the backend evolves.
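As a rough illustration of pagination with a consistent response envelope, the helper below slices an in-memory list. The `data`/`meta` envelope shape and the `paginate` name are assumptions; a real endpoint would push the limit and offset into the database query instead of slicing in Python.

```python
def paginate(items, page=1, page_size=20, max_page_size=100):
    """Return one page of items wrapped in a {"data", "meta"} envelope."""
    if page < 1:
        raise ValueError("page must be >= 1")
    if not 1 <= page_size <= max_page_size:
        raise ValueError(f"page_size must be between 1 and {max_page_size}")

    total = len(items)
    start = (page - 1) * page_size
    total_pages = (total + page_size - 1) // page_size  # ceiling division
    return {
        "data": items[start:start + page_size],
        "meta": {
            "page": page,
            "page_size": page_size,
            "total": total,
            "total_pages": total_pages,
        },
    }


orders = [{"id": i} for i in range(1, 46)]  # 45 hypothetical orders
result = paginate(orders, page=3, page_size=20)
```

Capping `page_size` protects the backend from clients that request an entire collection in a single call.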

- Add user authentication: JWT and OAuth2 concepts

Authentication and authorization are central to backend security. Choose an approach that fits the product and threat model. For simple APIs, token based authentication using signed tokens such as JSON Web Tokens is common. When you adopt JWTs, separate access and refresh tokens, minimize token lifetimes, and store refresh tokens securely. Avoid placing sensitive data inside tokens. Enforce token revocation, use secure cookie flags if tokens are stored in browser cookies, and plan token rotation strategies.

For enterprise and third party integrations, OAuth2 is the standard. Supporting OAuth2 means handling authorization code flows, client credentials flows, scopes, and refresh tokens correctly. Use well tested libraries and a central authorization service if multiple microservices need consistent access control. Implement role based access control and, where appropriate, attribute based access control for fine grained permissions. Keep authentication logic centralized so access rules do not drift across services.

Always hash passwords with a modern key derivation function that is designed for password storage rather than a general purpose cryptographic hash. Protect authentication endpoints with rate limiting and monitoring to detect brute force attempts. Enforce multi factor authentication for sensitive actions and provide secure recovery flows.
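A minimal sketch of salted password hashing with a key derivation function, using PBKDF2 from the standard library. The iteration count and the storage format are assumptions to be tuned and reviewed; dedicated libraries implementing algorithms such as Argon2 or bcrypt are common alternatives.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; tune for your hardware and policy


def hash_password(password, iterations=ITERATIONS):
    """Return a self-describing string: scheme$iterations$salt$digest."""
    salt = os.urandom(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return f"pbkdf2_sha256${iterations}${salt.hex()}${digest.hex()}"


def verify_password(password, stored):
    """Recompute the digest with the stored salt and compare in constant time."""
    try:
        scheme, iterations, salt_hex, digest_hex = stored.split("$")
    except ValueError:
        return False
    if scheme != "pbkdf2_sha256":
        return False
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations)
    )
    return hmac.compare_digest(candidate, bytes.fromhex(digest_hex))


stored = hash_password("correct horse battery staple", iterations=50_000)
```

Storing the scheme and iteration count alongside the hash allows the work factor to be raised later without invalidating existing passwords.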

- Save and retrieve data from a database: ORM model design

Design your data models deliberately. Start with a normalized model for transactional data to avoid anomalies. Use clear naming, explicit relationships, and comprehensive constraints to protect data integrity. For read heavy endpoints or analytical needs, consider complementary denormalized tables or materialized views that are maintained via background jobs. Do not prematurely optimize by denormalizing unless you have measured bottlenecks.

Use your ORM to maintain consistency and to simplify common CRUD operations, but be mindful of generated SQL. Inspect queries produced by the ORM and add explicit joins, indexing, or raw queries where the ORM is insufficiently performant. Always add database migrations under version control and run them deterministically through CI and deployment pipelines.

Plan for indexing strategy early. Indexes speed reads but slow writes and increase storage. Use analytics and slow query logs to drive index decisions rather than guesswork. For operations requiring strong transactional guarantees spanning multiple resources, use database transactions with proper isolation settings. Document which operations require transactional semantics and which are eventually consistent.
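The transactional guarantee can be illustrated with SQLite from the standard library: an order and its payment are written together or not at all. The schema and the `place_order` helper are hypothetical, and a production system would run the same pattern against its own database and driver.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        sku TEXT NOT NULL,
        quantity INTEGER NOT NULL CHECK (quantity > 0)
    );
    CREATE TABLE payments (
        id INTEGER PRIMARY KEY,
        order_id INTEGER NOT NULL REFERENCES orders(id),
        amount_cents INTEGER NOT NULL CHECK (amount_cents > 0)
    );
""")


def place_order(conn, sku, quantity, amount_cents):
    """Insert the order and its payment atomically."""
    # "with conn" commits if the block succeeds and rolls back if it raises.
    with conn:
        cur = conn.execute(
            "INSERT INTO orders (sku, quantity) VALUES (?, ?)", (sku, quantity)
        )
        order_id = cur.lastrowid
        conn.execute(
            "INSERT INTO payments (order_id, amount_cents) VALUES (?, ?)",
            (order_id, amount_cents),
        )
    return order_id


order_id = place_order(conn, "SKU-1", 2, 1999)

try:
    # The payment violates its CHECK constraint, so the order insert
    # made just before it is rolled back as well.
    place_order(conn, "SKU-2", 1, 0)
except sqlite3.IntegrityError:
    pass

order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

After the failed call only the first order remains, so no order can exist without its payment record.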

For scale, consider polyglot persistence. Use the right database for the right workload: relational systems for transactional data, key value stores for caching and session state, document stores for flexible user content, and specialized time series stores for telemetry.

- Add business logic: error handling and logging

Separate business rules from infrastructure and presentation. Implement a service layer that captures domain use cases. Services encapsulate complex operations and orchestrate interactions between repositories, external APIs, and asynchronous workers. Keeping business logic isolated improves testability and reduces accidental coupling to frameworks or external services.

Error handling must be consistent and informative. Capture and classify errors into categories: client errors, validation errors, transient infrastructure errors, and fatal server errors. Return meaningful HTTP status codes and include structured error payloads that contain machine readable error codes and human readable messages. Avoid leaking internal implementation details to clients.
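One possible shape for this classification is a small exception hierarchy that maps to status codes and a structured payload. The class names, error codes, and the `to_response` helper are hypothetical; a web framework's exception handler hook would typically call something like it.

```python
class AppError(Exception):
    """Base class for errors that are safe to describe to clients."""
    status_code = 500
    code = "internal_error"
    default_message = "An unexpected error occurred."

    def __init__(self, message=None):
        self.public_message = message or self.default_message
        super().__init__(self.public_message)


class NotFound(AppError):
    status_code = 404
    code = "not_found"
    default_message = "The requested resource does not exist."


class ValidationFailed(AppError):
    status_code = 422
    code = "validation_error"
    default_message = "The request payload is invalid."


class UpstreamUnavailable(AppError):
    """Transient infrastructure failure; clients may retry."""
    status_code = 503
    code = "upstream_unavailable"
    default_message = "A dependency is temporarily unavailable."


def to_response(exc, request_id):
    """Translate any exception into (status, structured error payload)."""
    if isinstance(exc, AppError):
        status, code, message = exc.status_code, exc.code, exc.public_message
    else:
        # Unknown exceptions get a generic body so internals never leak.
        status, code, message = 500, "internal_error", AppError.default_message
    return status, {
        "error": {"code": code, "message": message, "request_id": request_id}
    }


status, body = to_response(NotFound("Order 42 was not found."), "req-123")
```

Unexpected exceptions still deserve full detail in server-side logs; only the client-facing payload is kept generic.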

Observability is mandatory for production systems. Implement structured logging with JSON formatted entries that include request identifiers, user identifiers where appropriate, and relevant metadata. These logs must be emitted at appropriate levels and forwarded to a centralized log management system. Additionally, instrument metrics for request rates, error rates, latency percentiles, and background job health. Distributed tracing should be enabled for multi service flows to quickly locate bottlenecks and failure points. Establish alerting thresholds based on SLOs so engineers are notified when the system deviates from expected behavior.
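Structured JSON logging with a request identifier can be sketched with the standard `logging` module. The `JsonFormatter` class, the `orders` logger name, and the field names are assumptions; the in-memory stream is used here only so the output can be inspected.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Set via logger.info(..., extra={"request_id": ...}).
            "request_id": getattr(record, "request_id", None),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


stream = io.StringIO()  # stand-in for stdout or a log shipper
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("orders")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # avoid duplicate lines from the root logger

logger.info("order created", extra={"request_id": "req-123"})
logged = json.loads(stream.getvalue())
```

Because every entry carries the same `request_id` field, a log management system can reassemble all lines belonging to a single request.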

- Test the API: unit tests and integration tests plus verification

Testing must be layered. Unit tests exercise individual components such as validators, small utility functions, and domain services. Integration tests exercise interactions between controllers, the database, and external services, often using test doubles or ephemeral test instances. End to end tests simulate user flows against a staging environment that mirrors production. Use a test database that runs migrations automatically and seed realistic test data.

Define clear test coverage goals driven by risk. Core business logic and security related code deserve the most coverage. Keep tests fast and deterministic. For external dependencies, prefer local test doubles or recorded network interactions rather than live calls to third party services.

Maintain a collection of API contract tests. These tests verify that the API conforms to the documented contract. Providing a Postman collection or an automated API contract test suite helps frontend teams validate expectations. Use contract tests as part of CI so breaking changes are detected early.

Beyond functional tests, include load testing and chaos experiments. Load testing validates horizontal scaling assumptions and the effectiveness of caching. Chaos experiments simulate failures such as database node loss or network partitions to ensure the system fails gracefully and recovers.

Putting it together: a high level example flow

Imagine a user facing resource for managing orders. The lifecycle starts when a client sends a create order request. The API validates the payload against a schema, authenticates the user token, and forwards the request to a domain service responsible for placing orders. That service performs business validations such as stock availability and pricing rules, begins a database transaction, writes order and payment records, emits an order created event to the message broker, and returns a response. The controller serializes the response and returns it to the client. Downstream listeners asynchronously process the order created event to send confirmation emails, update inventory in analytic stores, and notify logistics systems. All steps are logged with a request correlation id that is visible in logs, traces, and monitoring dashboards. Errors are reported to the error tracking system and retries are attempted for transient failures.

This is the production pattern to aim for: clear contracts, isolated domain logic, robust persistence, asynchronous decoupling for non blocking work, and complete observability.

Before shipping to production, ensure the pipeline includes static analysis, tests, security scanning, container image scanning, and automated migrations under control. Provide a staging environment that mirrors production and run smoke tests on deployments. Define a rollback plan and readable runbooks for on call engineers. Establish SLOs that reflect user experience and instrument the system to prove compliance.

Following these steps transforms a prototype into a production grade Python backend that can be maintained by teams, scaled responsibly, and iterated upon with confidence.

APIs, Integrations, and Async Programming in Python

Modern backend systems revolve around communication. Applications rarely operate in isolation anymore. They accept requests from multiple clients — web apps, mobile apps, IoT devices — and interact with external systems such as payment gateways, email providers, analytics services, or machine learning inference engines. APIs, integrations, and asynchronous execution form the backbone of this communication layer.

Python excels at backend integration because of its rich ecosystem, straightforward syntax, and strong async capabilities. As business systems become more connected, the ability to expose clean APIs, handle multiple external integrations, and manage concurrent operations becomes a competitive advantage. This section explains the difference between REST and GraphQL API styles, explores asynchronous programming in Python with FastAPI, and outlines best practices for integrating third-party services such as Stripe, SendGrid, and Twilio.

REST API vs GraphQL: Choosing the Right API Style

When exposing data to clients, two dominant architectural approaches exist: REST and GraphQL.

REST (Representational State Transfer) is the traditional and widely adopted API paradigm. It organizes resources around predictable URIs, such as /orders, /users, or /products, and uses HTTP methods — GET, POST, PUT, DELETE — to operate on them. REST enforces a clean separation between client and server, offers built-in caching advantages, and works well for most CRUD-focused applications.

A REST design aligns with domain thinking: each endpoint corresponds to a business concept. It remains the default choice for enterprise platforms, microservices, SaaS backends, and API-centric systems because clients can work efficiently without negotiating complex schemas.

However, REST can lead to over-fetching or under-fetching of data. Clients may receive more data than needed or may require multiple requests just to assemble the required information. Consider a mobile application needing both a user profile and recent transactions. In REST, these might require two separate calls, increasing round-trip latency.

GraphQL, originally developed by Facebook, solves this by allowing clients to specify exactly what data they need. Instead of multiple endpoints, GraphQL exposes a single endpoint and relies on a schema describing all available data types and relationships. A client requests a custom shape that aligns with its UI needs and receives exactly that structure in response.

GraphQL becomes valuable when frontends are complex, when devices have limited network capacity, or when data aggregation spans multiple entities. Client-driven data requests reduce wasted bandwidth and reduce the need for versioned endpoints: because clients receive only the fields they ask for, backend teams can add fields and deprecate old ones without breaking existing clients.
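To make the over-fetching point concrete, the sketch below contrasts two REST-style handlers with a single handler that accepts a client-supplied field selection. It is not GraphQL itself (there is no schema or query language), and the data, function names, and selection format are all hypothetical. It only illustrates the idea of the client naming the shape it wants.

```python
from typing import Any

# Hypothetical in-memory data standing in for a database.
USERS = {1: {"id": 1, "name": "Ada", "email": "ada@example.com", "plan": "pro"}}
TRANSACTIONS = {1: [{"id": 10, "amount": 25.0, "currency": "USD", "memo": "books"}]}


def get_user(user_id: int) -> dict[str, Any]:
    """REST-style: GET /users/{id} returns the whole resource."""
    return USERS[user_id]


def get_transactions(user_id: int) -> list[dict[str, Any]]:
    """REST-style: a second endpoint, so a second round trip."""
    return TRANSACTIONS[user_id]


def select(data: Any, selection: dict[str, Any]) -> Any:
    """Keep only the requested fields, recursing into nested selections."""
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    result = {}
    for field, sub in selection.items():
        value = data[field]
        result[field] = select(value, sub) if isinstance(sub, dict) else value
    return result


def profile_view(user_id: int, selection: dict[str, Any]) -> dict[str, Any]:
    """Single entry point: the client names the shape, the server assembles it."""
    full = {**USERS[user_id], "transactions": TRANSACTIONS[user_id]}
    return select(full, selection)
```

Calling `profile_view(1, {"name": True, "transactions": {"amount": True}})` returns only the user's name and the transaction amounts in one call, whereas the REST-style pair needs two calls and returns every field of both resources.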

REST remains ideal when:

- The application maps cleanly to CRUD operations.

- Strong cacheability and predictable responses are important.

- The API will be consumed by many third parties.

GraphQL is compelling when:

- Frontend requirements shift rapidly, especially in multi-platform applications.

- Minimizing network calls is crucial, such as in mobile or IoT environments.

- Client flexibility outweighs backend simplicity.

Backend architects should avoid dogma — REST and GraphQL can coexist. Many production systems expose core business operations as REST while using GraphQL for internal data aggregation or customer-facing dashboards.

Async Programming and Async IO in FastAPI

Traditional synchronous (WSGI) Python frameworks tie up a worker process or thread for the full duration of each request, including the time spent waiting on I/O. While this model works for many apps, workloads involving large numbers of concurrent requests, such as streaming, chat, notifications, and real-time APIs, benefit from asynchronous execution. Async I/O allows the server to continue handling other requests while it waits for external operations such as network calls or database queries to complete.

FastAPI, built on the ASGI specification (Asynchronous Server Gateway Interface), embraces asynchronous execution as a first-class programming model. Unlike synchronous frameworks that assign a thread to each connection, FastAPI uses a non-blocking event loop. The server hands off I/O operations, freeing the event loop to handle new requests without blocking.
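The effect of a non-blocking event loop can be seen with the standard library alone. The following is a minimal sketch, not FastAPI code: `call_external` is a hypothetical stand-in for a network or database call, and the delays are arbitrary. In FastAPI the same pattern would live inside an `async def` path operation.

```python
import asyncio
import time


async def call_external(name: str, delay: float) -> str:
    """Stand-in for a network or database call; the sleep yields to the event loop."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def handle_request() -> list[str]:
    # Both waits overlap, so total time is close to the longest one, not the sum.
    return await asyncio.gather(
        call_external("payments", 0.3),
        call_external("email", 0.3),
    )


def timed_run() -> tuple[list[str], float]:
    """Run the handler once and report how long it took."""
    start = time.perf_counter()
    results = asyncio.run(handle_request())
    return results, time.perf_counter() - start
```

Two calls of 0.3 seconds each finish in roughly 0.3 seconds overall rather than 0.6, because the event loop is free while each call is waiting.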

Async architecture matters when:

- The backend interacts frequently with external systems.

- A large number of connections remain open simultaneously (streaming, WebSockets).

- Speed and scalability are business requirements.

Teams deploying AI inference services, chat applications, or real-time dashboards often choose FastAPI because async execution can deliver high throughput on I/O-bound workloads with fewer computing resources. Rather than relying only on vertical scaling (more CPU and RAM per server), they can also scale horizontally with small, independent FastAPI instances.

Implementing async logic requires discipline. Background tasks, database drivers, and network clients must be async-aware to avoid blocking the event loop. Backend engineers must be intentional about not mixing sync and async libraries in performance-critical paths. The benefit is substantial: a well-architected async Python service can handle thousands of concurrent requests without degrading user experience.
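The cost of mixing sync and async code can be demonstrated with a small experiment. In this sketch, `legacy_sync_lookup` is a hypothetical blocking call (think of a synchronous database driver), and a heartbeat task measures how long the event loop goes unserved. It assumes Python 3.9 or later for `asyncio.to_thread`.

```python
import asyncio
import time


def legacy_sync_lookup(key: str) -> str:
    """Hypothetical blocking library call, e.g. a synchronous database driver."""
    time.sleep(0.2)  # blocks whichever thread runs it
    return key.upper()


async def heartbeat(ticks: list[float]) -> None:
    """Record timestamps; large gaps reveal when the event loop was blocked."""
    for _ in range(4):
        ticks.append(time.perf_counter())
        await asyncio.sleep(0.05)


async def run(offload: bool) -> float:
    """Return the largest gap between heartbeats while the lookup runs."""
    ticks: list[float] = []
    beat = asyncio.create_task(heartbeat(ticks))
    await asyncio.sleep(0)  # let the heartbeat record its first tick
    if offload:
        await asyncio.to_thread(legacy_sync_lookup, "a")  # runs in a worker thread
    else:
        legacy_sync_lookup("a")  # runs on the event loop thread and stalls it
    await beat
    return max(b - a for a, b in zip(ticks, ticks[1:]))
```

With `offload=False` the largest heartbeat gap is about as long as the blocking call; with `offload=True` the heartbeat keeps its normal rhythm. Moving the call to a thread is a stopgap, and a natively async driver is usually the cleaner fix on performance-critical paths.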

Best Practices for Third-Party Integrations (Stripe, SendGrid, Twilio)

Most backend systems integrate with external services. Payments, messaging, notifications, CRM updates, analytics events — these operations typically require interaction with third-party APIs. Python’s strength lies in providing mature SDKs for the most common integration targets.

The three most frequently integrated services are:

- Stripe for payment processing (subscriptions, invoicing, digital commerce)

- SendGrid for transactional email (verification emails, password resets, order confirmations)

- Twilio for messaging (SMS, WhatsApp, multi-factor authentication)

To build stable, production-grade third-party integrations, follow these principles:

- Wrap external API calls in a service layer

Never scatter external API calls throughout the codebase. Create internal adapters or gateways that isolate integration logic. This ensures future changes, such as switching providers, do not require touching multiple modules.

- Use asynchronous I/O for network calls

Third-party integrations involve network round trips. In an async architecture, treat these calls as non-blocking operations so the request handler can continue serving other tasks.

- Never block the main request flow for non-critical work

Payment authorization might be part of the critical path, but sending a confirmation email should not delay the client response. Offload non-critical tasks to background job processors such as Celery or cloud-hosted task services.

- Centralize retry logic

API calls may fail due to temporary network issues or rate limits. Instead of complicating business logic with retry loops, use a standard retry mechanism with exponential backoff. This prevents accidental denial-of-service loops.

- Manage secrets securely

API keys should not exist in your codebase. Keep them in a secure vault or your platform’s secret manager service. Rotate keys periodically and grant the minimum required permissions.

- Log request metadata, not sensitive data

Logging payloads from payment providers is dangerous. Instead of logging card numbers or personal contact details, log only identifiers, request IDs, and status codes.

- Use webhooks for event-driven workflows

Many external services notify backends asynchronously through webhooks. Stripe notifies when a payment succeeds. Twilio notifies when an SMS is delivered. Rather than polling external systems, respond to webhook events efficiently and idempotently.

- Define failure monitoring and alerting

Treat integrations as dependencies that can fail. Establish alerts for increased error rates or delays in processing.
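Two of the principles above, centralized retries and idempotent webhook handling, can be sketched in a few lines. Everything here is illustrative: `TransientError`, `call_with_retry`, `handle_webhook`, and the default delays are hypothetical names and values, not part of any provider's SDK. The in-memory set of processed event IDs would need to be a shared store (a database or Redis) in a multi-instance deployment.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, rate limits)."""


def call_with_retry(
    func: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry func on TransientError, doubling the wait each time, with jitter."""
    for attempt in range(attempts):
        try:
            return func()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))  # jitter spreads out retries
    raise ValueError("attempts must be at least 1")


_processed_events: set[str] = set()  # assumption: single process, in-memory only


def handle_webhook(event_id: str, apply: Callable[[], None]) -> bool:
    """Apply an event once; repeated deliveries of the same ID are ignored."""
    if event_id in _processed_events:
        return False
    apply()
    _processed_events.add(event_id)
    return True
```

Passing `sleep` as a parameter keeps the helper testable without real waiting. Capping the delay and the number of attempts is what stops a failing dependency from being hammered indefinitely.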

A common enterprise pattern splits integrations into three layers: domain logic, integration adapters, and a retry/background execution layer. This modular approach keeps the core application logic clean while isolating integration complexity.
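The separation between domain logic and integration adapters might look like the sketch below. The names (`EmailGateway`, `InMemoryEmailGateway`, `confirm_order`, the `EMAIL_API_KEY` variable) are hypothetical; a real adapter would wrap the provider's SDK behind the same `send` method and read its key from whatever secret manager the platform provides.

```python
import os
from dataclasses import dataclass, field
from typing import Protocol


class EmailGateway(Protocol):
    """Internal interface; domain code depends on this, not on a vendor SDK."""

    def send(self, to: str, subject: str, body: str) -> None: ...


@dataclass
class InMemoryEmailGateway:
    """Test double. A real adapter would call the provider inside send()."""

    sent: list[tuple[str, str, str]] = field(default_factory=list)

    def send(self, to: str, subject: str, body: str) -> None:
        self.sent.append((to, subject, body))


def load_api_key(name: str = "EMAIL_API_KEY") -> str:
    """Read the secret from the environment instead of hard-coding it."""
    key = os.environ.get(name)
    if not key:
        raise RuntimeError(f"missing secret: {name}")
    return key


def confirm_order(order_id: str, customer_email: str, email: EmailGateway) -> None:
    """Domain logic: knows that a confirmation is sent, not how it is delivered."""
    email.send(customer_email, f"Order {order_id} confirmed", "Thank you for your order.")
```

Because `confirm_order` only sees the `EmailGateway` interface, switching providers means writing one new adapter class rather than editing every call site.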

Python’s backend capabilities extend far beyond routing and data storage. Modern backend systems are ecosystems of connected services — internal microservices, external APIs, messaging pipelines, payment providers, email delivery systems, analytics, and more. When designed correctly, APIs become part of the product, not merely a transport mechanism.

REST remains the default architectural pattern for backend APIs because it maps directly to business resources and supports predictable interactions. GraphQL offers frontend flexibility and eliminates over-fetching, making it valuable in complex UI environments. FastAPI’s asynchronous model enables Python to handle thousands of concurrent requests, especially useful for AI microservices or real-time applications.

Integrations with services such as Stripe, SendGrid, and Twilio require thoughtful architecture: isolate integration layers, secure secrets, handle retries gracefully, and offload non-critical tasks to background workers.

Behind every successful Python backend is intentionality — in how data flows, how services communicate, and how failures are handled. The more predictable and modular the architecture, the easier it becomes to scale, maintain, and evolve the system over time.

Performance, Caching, and Scalability

High performance and scalability are critical attributes of a production-grade backend. Users expect applications to load quickly and handle traffic spikes without failing. Python backends can scale effectively when performance is treated proactively rather than reactively. Performance tuning does not begin when a server crashes under load; it begins while designing the architecture.

This section covers key strategies used by modern engineering teams to build scalable Python backends: caching with Redis, load balancing using Nginx, horizontal scaling with Kubernetes, and distributed task execution through Celery and message queues.

Redis Caching: Reducing Response Times and Database Load

Caching is often the most cost-effective way to boost backend performance. Many slow applications do not suffer from slow code; they suffer from unnecessary database requests. Redis is an in-memory data store designed for speed. Because it serves data from memory, lookups typically complete in well under a millisecond, making it ideal for caching frequently accessed information.

Common caching scenarios include:

- User session data

- Authentication tokens

- Frequently accessed database queries

- Rate limiting counters

- API response caching

Instead of hitting the database for every request, the backend checks Redis first. If data exists in Redis, it returns instantly. If not, it fetches from the database and stores the result in Redis for next time. This dramatically reduces database load and improves responsiveness.

Caching strategy must include expiration rules and invalidation logic. Stale data is a common risk when cached values are not refreshed after updates. Design caching around business rules — for example, cache product listings aggressively but not payment-related data.
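The check-cache-then-database flow, together with expiration and invalidation, is often called cache-aside. The sketch below uses a small dict-backed `TTLCache` as a stand-in for Redis so that it runs without a server; the class, the key format, and the 60-second TTL are assumptions made for illustration. With a real Redis client the same three operations would be a get, a set with an expiry, and a delete.

```python
import time
from typing import Any, Callable, Optional


class TTLCache:
    """Dict-backed stand-in for Redis: get, set with expiry, delete."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._data: dict[str, tuple[float, Any]] = {}
        self._clock = clock

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired: behave as a miss
            return None
        return value

    def set(self, key: str, value: Any, ttl: float) -> None:
        self._data[key] = (self._clock() + ttl, value)

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


def get_product(product_id: int, cache: TTLCache, load: Callable[[int], dict]) -> dict:
    """Cache-aside read: try the cache, fall back to the database, then populate."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    product = load(product_id)
    cache.set(key, product, ttl=60.0)
    return product


def update_product(product_id: int, cache: TTLCache, save: Callable[[int], None]) -> None:
    """Write path: persist first, then invalidate so the next read is fresh."""
    save(product_id)
    cache.delete(f"product:{product_id}")
```

The TTL bounds how stale a value can become if an invalidation is ever missed, while the explicit delete on update keeps the common case fresh.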

Caching should be implemented early as part of the architecture, especially for read-heavy systems such as dashboards, social feeds, or eCommerce product pages.

Load Balancing with Nginx: Distributing Traffic Efficiently

A backend server can only handle a limited number of simultaneous connections. When too many users hit the same server, response times slow down and failures increase. Load balancing distributes incoming requests across multiple backend instances, improving availability and spreading load evenly.

Nginx is a common choice of load balancer for Python backend deployments because it excels at:

- Handling large numbers of concurrent connections

- Serving static content efficiently

- Acting as a reverse proxy in front of backend services

Load balancing also enables rolling deployments. While one instance is being updated, traffic can be routed to healthy instances. If a server starts failing, Nginx can take it out of rotation temporarily, protecting the user experience.
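The routing behavior described here can be modeled in a few lines. This is a conceptual sketch only: Nginx does this through configuration rather than application code, and the `RoundRobinPool` class and backend addresses are hypothetical.

```python
class RoundRobinPool:
    """Rotate over backends, skipping any currently marked unhealthy."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._healthy = set(backends)
        self._next = 0

    def mark_down(self, backend: str) -> None:
        """Take a backend out of rotation, e.g. after failed health checks."""
        self._healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        """Return a known backend to rotation once it recovers."""
        if backend in self._backends:
            self._healthy.add(backend)

    def pick(self) -> str:
        """Return the next healthy backend in rotation order."""
        for _ in range(len(self._backends)):
            candidate = self._backends[self._next]
            self._next = (self._next + 1) % len(self._backends)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends available")
```

During a rolling deployment, the instance being updated would be marked down, upgraded, and then marked up again while the remaining instances keep serving traffic.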

A load balancer becomes essential when moving from a single-server monolith to a multi-instance architecture. Instead of increasing server size (vertical scaling), Nginx makes horizontal scaling possible.

Horizontal Scaling with Kubernetes

Once an application can run across multiple backend instances, Kubernetes automates deployment, scaling, and health monitoring. Kubernetes ensures that applications remain available even if individual servers fail. It abstracts away the infrastructure and gives teams a declarative way to manage application state.

Key benefits include:

- Automatic scaling: Increase or decrease the number of backend instances based on CPU, memory, or request load.

- Self-healing: Restart failed containers automatically.

- Rolling updates: Deploy new versions without downtime.

- Cluster management: Run services across multiple nodes for higher fault tolerance.
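The arithmetic behind metric-based automatic scaling is simple enough to sketch. The function below follows the ratio formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric). The replica bounds and the tolerance value are assumed defaults chosen for illustration, and the real controller applies further rules (such as stabilization windows) that are not modeled here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """ceil(current_replicas * current_metric / target_metric), clamped to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))
```

For example, four instances averaging 90% CPU against a 60% target give a ratio of 1.5, so the function asks for six instances; at 62% the ratio falls inside the tolerance band and the count stays at four.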

For high-traffic applications, horizontal scaling is superior to vertical scaling. Instead of upgrading a server to a bigger one, Kubernetes allows hundreds of lightweight instances to run in parallel. This is particularly valuable when traffic is bursty — such as during holiday sales for eCommerce or live-stream spikes on social platforms.

Kubernetes also encourages good architectural decisions. Applications must become stateless, meaning they cannot rely on local disk or process memory for critical information. Sessions and other shared state must be stored in an external service such as Redis or a database rather than inside an individual instance. This transition is what allows any instance to serve any request.

Queues and Asynchronous Workers: Celery, RabbitMQ, Redis

Not all tasks should be handled inside the main web request. A common mistake is allowing slow processes, like sending emails, resizing images, or making third-party API calls, to delay the user response. Request handlers should not perform long-running work inline, because the client waits for the whole duration and the worker cannot serve anyone else in the meantime.

Task queues solve this problem by offloading slow processes to background workers.
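The offloading pattern can be shown with the standard library before introducing any infrastructure. In this sketch an in-process `queue.Queue` and a thread stand in for the broker and the worker; `send_email`, `handle_request`, and the response shape are hypothetical. With Celery, the handler would instead call a task's `delay()` method and a separate worker process would pick the job up from the broker.

```python
import queue
import threading

task_queue: queue.Queue = queue.Queue()  # stands in for the broker's queue
delivered: list[tuple[str, str]] = []    # records what the worker handled


def send_email(to: str, subject: str) -> None:
    """Placeholder for slow work such as calling an email provider."""
    delivered.append((to, subject))


def worker() -> None:
    """Pull tasks until a None sentinel arrives."""
    while True:
        task = task_queue.get()
        try:
            if task is None:
                return
            send_email(*task)
        finally:
            task_queue.task_done()


def handle_request(email: str) -> dict:
    """Request handler: enqueue the slow part and respond right away."""
    task_queue.put((email, "Welcome"))
    return {"status": "accepted"}


def start_worker() -> threading.Thread:
    """Start one background worker thread."""
    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread
```

An in-process queue loses its tasks if the process dies, which is one reason production systems put the queue in an external broker.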

Core components include:

- A message broker that transports tasks between services (RabbitMQ, Redis)

- A queue inside the broker that holds pending tasks

- A worker that executes tasks asynchronously (Celery)

For example, when a user places an order:

- The API responds immediately: “Order received.”

- A background worker then processes the order confirmation, invoice generation, and notification sending.