Artificial Intelligence (AI) has shifted from being a futuristic concept to a core pillar of modern app development. Whether you’re building a consumer-facing mobile app, a SaaS dashboard, or an enterprise web portal, users now expect experiences that are not just reactive, but predictive and adaptive. Apps are no longer judged solely on UI polish or backend speed—they’re increasingly evaluated by how “smart” they are.

This comprehensive guide is designed to help you navigate the entire AI integration journey: from scoping features and selecting models to deploying, monitoring, and optimizing AI functionality in real-world software applications. It distills complex concepts into practical, implementation-ready advice, combining the technical insights of developers with the strategic lens of product leaders.

At its core, AI integration is about enabling your application to learn from data, interact intelligently, and improve over time. This goes far beyond a simple chatbot plugin. We’re talking about embedded intelligence—recommendation engines, image classifiers, speech-to-text transcription, anomaly detection, semantic search, and even AI agents that automate workflows.

But integrating AI isn’t just a matter of plugging in an API. It requires upfront planning, model selection, user experience alignment, privacy safeguards, and continuous iteration. That’s why this guide balances both the why and the how—backed by real examples and best practices.

TL;DR Summary

AI integration transforms mobile and web apps by enabling intelligent features like chatbots, recommendation engines, smart search, and voice transcription. The process involves defining clear use cases, preparing quality data, selecting the right models (e.g., OpenAI, Google ML Kit, Hugging Face), and choosing between on-device or cloud inference based on performance needs. Key benefits include personalization, automation, predictive insights, and monetization through premium AI features. Challenges such as model accuracy, privacy compliance (GDPR, HIPAA), and explainability must be addressed through robust design, testing, and secure architecture.

Aalpha helps businesses implement end-to-end AI solutions that are secure, scalable, and aligned with real user value.

What This Guide Covers:

- The strategic value of AI in apps and why it matters today.

- Step-by-step technical process for AI integration into mobile and web platforms.

- Common use cases (chatbots, NLP, computer vision, personalization, etc.).

- How to choose AI tools, APIs, models, and cloud vs edge deployment options.

- How to handle performance, compliance, and user experience considerations.

- Real-world case studies and what you can learn from them.

- Future trends shaping the next generation of AI-native applications.

Whether you’re a developer looking to integrate an LLM into your backend, a product manager aiming to add smart features, or a founder validating AI-based differentiators for your MVP—this guide provides a complete, actionable blueprint. With AI capabilities becoming a baseline expectation, not an optional enhancement, the time to integrate is now.

Understanding AI Integration

Artificial Intelligence is no longer a luxury in modern app development; it’s a foundational capability. Whether you’re creating a finance app that predicts spending patterns or a healthcare platform that transcribes patient consultations in real time, AI adds context, automation, and intelligence. But before diving into the mechanics of how to integrate AI into your app, it’s important to define clearly what AI integration really means in a software development context, and what it doesn’t.

Did you know? The global artificial intelligence (AI) market was valued at USD 638.23 billion in 2024 and is projected to grow to approximately USD 3,680.47 billion by 2034, registering a CAGR of 19.20% between 2025 and 2034.

What Does AI Integration Mean in Software Development?

AI integration refers to the process of embedding machine-driven decision-making, learning, or perception capabilities into your application’s workflow. In practical terms, this could mean adding a recommendation engine to a shopping app, voice-to-text capabilities in a health diary, or a smart camera filter in a social app.

But what does it look like under the hood? In software terms, AI integration typically involves three layers:

- Capturing and preprocessing relevant data (text, images, audio, behavioral signals),

- Passing that data through a model—pretrained or custom—either on-device or via a cloud endpoint,

- Returning intelligent outputs like predictions, classifications, or generated content.
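Those three layers can be sketched as a tiny Python pipeline. This is a minimal illustration, not a production design: the hypothetical `KeywordSentimentModel` class stands in for a real pretrained model, which in practice would run through an on-device runtime or be called over a cloud endpoint.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    score: float


def preprocess(raw_text: str) -> list[str]:
    """Layer 1: capture and normalize the input (lowercase, strip punctuation, tokenize)."""
    tokens = (token.strip(".,!?") for token in raw_text.lower().split())
    return [token for token in tokens if token]


class KeywordSentimentModel:
    """Layer 2: a hypothetical toy model standing in for a pretrained or hosted one."""

    POSITIVE = {"great", "love", "fast", "helpful"}
    NEGATIVE = {"slow", "broken", "hate", "crash"}

    def predict(self, tokens: list[str]) -> Prediction:
        pos = sum(token in self.POSITIVE for token in tokens)
        neg = sum(token in self.NEGATIVE for token in tokens)
        if pos + neg == 0:
            return Prediction("neutral", 0.5)
        score = pos / (pos + neg)  # share of sentiment words that are positive
        if score > 0.5:
            return Prediction("positive", score)
        if score < 0.5:
            return Prediction("negative", score)
        return Prediction("neutral", score)


def postprocess(prediction: Prediction) -> str:
    """Layer 3: turn raw model output into something the UI can display."""
    return f"{prediction.label} ({prediction.score:.2f})"


def run_pipeline(raw_text: str, model: KeywordSentimentModel) -> str:
    return postprocess(model.predict(preprocess(raw_text)))


if __name__ == "__main__":
    print(run_pipeline("Great app, fast and helpful, but login is slow", KeywordSentimentModel()))
```

Swapping the toy class for a real model only changes layer 2; the input handling and output formatting around it stay the same, which is what makes the layered split useful.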

It’s not just about using AI for a standalone task. The goal is to embed AI within user flows so that it enhances the experience without the user realizing they’re interacting with a model.

Built-In vs Third-Party AI: What’s the Right Choice?

Should you build your own AI from scratch, or should you use an off-the-shelf API? This is one of the first decisions every product team faces. So what’s the difference between the two?

Built-in AI refers to models and logic developed in-house or heavily customized using open-source tooling like TensorFlow, PyTorch, or ONNX Runtime. These are usually integrated tightly into the app’s backend or, in the case of on-device AI, directly into the mobile codebase. The upside? Full control over model behavior, no vendor lock-in, and often better privacy, since data doesn’t leave your infrastructure.

Third-party AI, on the other hand, leverages external APIs from providers like OpenAI (for language models), Google ML Kit (for mobile vision/speech), or Amazon Rekognition (for image analysis). These services abstract away the model training and infrastructure concerns. Why use third-party AI? It’s faster to implement, scalable, and ideal for MVPs or when you don’t have a data science team in-house. The trade-off: recurring costs, potential latency issues, and limited customizability.

For example, if you’re wondering whether to build your own facial recognition model or use Google’s Face Detection API, the decision will depend on your performance requirements, team expertise, and budget.

How Is AI Different from Machine Learning and Deep Learning in Apps?

People often use the terms AI, ML, and deep learning interchangeably, but they refer to distinct layers of capability. So what’s the actual difference?

- Artificial Intelligence (AI) is the broadest term. It refers to any system that can perform tasks typically requiring human intelligence—like reasoning, perception, or decision-making.

- Machine Learning (ML) is a subset of AI. It focuses on systems that learn from data and improve over time without being explicitly programmed.

- Deep Learning (DL) is a further subset of ML. It involves neural networks with many layers, designed to process vast amounts of unstructured data such as images, text, or audio.

In app development, these distinctions help define the right tool for the job. Want to classify whether a transaction is fraudulent based on structured metadata? ML models like random forests or gradient boosting may be sufficient. Want to analyze user-uploaded voice notes for sentiment? You’ll likely need a deep learning model like a transformer or convolutional neural net.

When integrating AI into your app, it’s not just about picking “AI” in a general sense. You must select the right class of models and frameworks based on your use case, latency requirements, and available data.

What’s the Difference Between On-Device AI and Cloud-Based AI?

If your app is using AI, where should the actual inference—the part where the model makes a decision—take place? This is a core architectural question.

Cloud-based AI processes data on remote servers. This is ideal for compute-heavy models like GPT-4 or large vision transformers. The pros? Virtually unlimited compute power, access to large foundation models, and easier updates. The cons? It requires an internet connection, may introduce latency, and raises privacy considerations.

On-device AI, by contrast, runs locally on the user’s device, using frameworks such as Core ML (iOS), TensorFlow Lite (Android), or MediaPipe. Why go this route? Lower latency, offline functionality, and better data privacy. For instance, real-time gesture recognition in a fitness app or AI-powered keyboard suggestions often use on-device AI to deliver instantaneous responses without sending sensitive data to the cloud.

A common question app teams face is: when should you use on-device AI instead of cloud-based models? If your AI use case needs instant response (e.g., camera filters, gesture detection) or must work offline (e.g., translation while traveling), on-device is essential. Otherwise, cloud is the default for advanced language and vision models.
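That rule of thumb can be captured in a small decision helper. This is a sketch under stated assumptions: the `FeatureRequirements` fields are hypothetical, and the 200 ms latency and 100 MB size thresholds are illustrative values you would tune for your own app and target devices.

```python
from dataclasses import dataclass
from enum import Enum


class InferenceTarget(Enum):
    ON_DEVICE = "on-device"
    CLOUD = "cloud"


@dataclass
class FeatureRequirements:
    max_latency_ms: int           # latency budget the UX can tolerate
    must_work_offline: bool       # e.g. translation while traveling
    model_size_mb: float          # smallest model that meets quality needs
    handles_sensitive_data: bool  # e.g. health or biometric inputs


# Illustrative thresholds (assumptions, not fixed standards).
REALTIME_LATENCY_MS = 200
MAX_ON_DEVICE_MODEL_MB = 100


def choose_inference_target(req: FeatureRequirements) -> InferenceTarget:
    fits_on_device = req.model_size_mb <= MAX_ON_DEVICE_MODEL_MB
    if req.must_work_offline:
        if not fits_on_device:
            raise ValueError("Offline use needs a smaller (e.g. quantized) model")
        return InferenceTarget.ON_DEVICE
    # Instant-response or privacy-sensitive features favor local inference when the model fits.
    if fits_on_device and (req.max_latency_ms < REALTIME_LATENCY_MS or req.handles_sensitive_data):
        return InferenceTarget.ON_DEVICE
    # Default for large language and vision models.
    return InferenceTarget.CLOUD


if __name__ == "__main__":
    camera_filter = FeatureRequirements(50, False, 8, False)
    chat_assistant = FeatureRequirements(2000, False, 50_000, False)
    print(choose_inference_target(camera_filter).value)
    print(choose_inference_target(chat_assistant).value)
```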

What Is an AI Agent, and How Does It Differ from an API?

As AI capabilities mature, many teams are exploring AI agents—autonomous software components that can reason, act, and adapt over time. But how are these different from the APIs we’ve used for years?

An AI agent is an intelligent entity capable of goal-directed behavior. It can take input, decide what to do next based on rules or learned patterns, and execute actions—sometimes across multiple tools or platforms. These agents can be stateful (remembering prior context), and often operate semi-autonomously to complete complex tasks like booking appointments, generating content, or managing customer support tickets.

In contrast, an API is a fixed-function interface: you send data, it returns output. It’s typically stateless, meaning each call is handled independently, with no memory of earlier requests. For example, calling OpenAI’s embedding endpoint is an API call. But wrapping that API in a loop that monitors input, adapts its response, calls external databases, and then replies intelligently? That’s an AI agent.

In practical app development, an AI API is a tool, while an AI agent is a behavior pattern or persona built from multiple tools. Developers building modern apps increasingly ask: can we replace our rigid workflows with flexible agents that learn and optimize over time?
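To make the distinction concrete, here is a minimal sketch in which each tool is a plain stateless function (the API role) and the agent is the loop that keeps state, picks the next action, and stops once its goal is met. The tool names, the booking scenario, and the rule-based planner are all hypothetical; a real agent would typically ask an LLM to choose the next step.

```python
from dataclasses import dataclass, field


# Stateless "API-style" tools: each call is independent and keeps no memory.
def check_availability(day: str) -> list[str]:
    calendar = {"mon": [], "tue": ["10:00", "15:00"]}  # hypothetical schedule data
    return calendar.get(day, [])


def book_slot(day: str, slot: str) -> str:
    return f"Booked {day} {slot}"


@dataclass
class BookingAgent:
    """Goal: book the earliest open slot on one of the user's preferred days."""

    preferred_days: list[str]
    tried_days: list[str] = field(default_factory=list)  # state carried across steps

    def decide_next_action(self) -> tuple[str, str]:
        # Rule-based planner; a real agent might ask an LLM to pick the next step.
        for day in self.preferred_days:
            if day not in self.tried_days:
                return ("check", day)
        return ("give_up", "")

    def run(self, max_steps: int = 5) -> str:
        for _ in range(max_steps):
            action, day = self.decide_next_action()
            if action == "give_up":
                return "No slots available; escalating to a human"
            slots = check_availability(day)  # tool call
            self.tried_days.append(day)      # remember what was already tried
            if slots:
                return book_slot(day, slots[0])  # act on the result
        return "Step limit reached; escalating to a human"


if __name__ == "__main__":
    print(BookingAgent(preferred_days=["mon", "tue"]).run())
```

The step limit and the human fallback are deliberate: an agent that loops on its own needs explicit stopping conditions, which a single API call never does.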

Key Benefits of AI Integration in Mobile and Web Apps

Artificial intelligence is not just a technical upgrade—it’s a strategic enabler that can reshape how users interact with your product and how your app delivers value. As user expectations rise and markets become saturated, apps that harness AI to offer intelligent, adaptive experiences consistently outperform those that rely on static logic. But what are the specific advantages of integrating AI into mobile and web applications? Below are five high-impact benefits that justify the investment.


Enhanced User Personalization and UX

One of the most powerful benefits of AI integration is the ability to deliver deeply personalized experiences at scale. How can an app understand what a user wants before they explicitly ask? AI-driven personalization makes this possible.

By analyzing user behavior, preferences, context, and even emotion (via NLP or computer vision), AI models can adapt UI elements, content, product recommendations, or app flows in real time. In streaming platforms like Netflix or YouTube, AI determines which thumbnails you see, in what order, and which videos to auto-play. In eCommerce, apps like Amazon use AI to personalize product recommendations, discounts, and even homepage layouts based on individual purchase intent.

For startups, integrating recommendation engines, behavioral segmentation, and adaptive onboarding flows helps close the gap between generic UX and user-specific value. The result? Higher user satisfaction, faster time-to-value, and reduced churn.


Intelligent Automation of Backend Processes

Can AI streamline operations behind the scenes? Yes—and it’s one of its most overlooked benefits. AI is no longer limited to front-end features like chatbots or personalization; it also transforms backend workflows with intelligent automation.

AI agents and models can automate everything from fraud detection in transactions to smart ticket routing in customer support systems. For example, a fintech app might use an anomaly detection model to flag suspicious withdrawals before they’re processed. A healthcare scheduling system could use AI to dynamically adjust appointment slots based on patient behavior, staff availability, and forecasted no-show rates.

By training models on operational data—tickets, logs, forms, user actions—developers can reduce manual intervention, speed up processes, and increase accuracy. This not only reduces operational costs but also frees up human teams to focus on higher-impact work.

If your team keeps asking how to reduce manual workload without compromising quality, AI automation is a strong place to start: it combines the scale of software with judgment learned from historical decisions.


Improved Decision-Making with Predictive Analytics

What if your app could predict what users want, when they’ll churn, or how revenue will trend next quarter? Predictive analytics enables applications to move from being reactive to proactive, delivering insights before problems arise.

With the right models and clean datasets, AI can analyze historical behavior and current activity to forecast future outcomes. In SaaS platforms, this could mean predicting customer churn and triggering retention workflows before it’s too late. In logistics apps, AI can forecast delays based on route data, driver patterns, and historical congestion. Retail apps can use demand forecasting to optimize inventory, dynamic pricing, and marketing spend.

For product teams, these capabilities allow data-driven decisions at every level—from feature prioritization to marketing optimization. And for users, it translates into apps that anticipate their needs, not just respond to them.

Crucially, these models don’t require massive AI infrastructure. Lightweight machine learning models or cloud-hosted AI services like AWS Forecast or Google AutoML can deliver real-time predictions integrated directly into dashboards or app logic.


Increased Engagement and Retention

Why do users keep coming back to some apps while abandoning others after the first session? In many cases, the difference lies in how well the app adapts to the user’s behavior—and that’s where AI makes a measurable impact.

AI-driven features such as personalized content feeds, intelligent push notifications, contextual reminders, and usage-based UX adaptations can measurably improve key engagement metrics. For example, a language learning app that uses AI to adjust difficulty levels dynamically and recommend practice sessions at optimal times is likely to retain users longer than a one-size-fits-all solution.

Moreover, AI enables gamification strategies like adaptive challenges or personalized rewards that match a user’s motivations. It can detect when a user is struggling and offer proactive support, nudges, or community prompts. These subtle adjustments, powered by machine learning, create a “stickiness” that drives daily active usage and long-term retention.

Apps that implement these features often see lower acquisition costs over time because their existing users are more loyal and more likely to refer others. In short, AI makes your app habit-forming—in a good way.


Monetization Opportunities (Premium AI Features)

Can AI itself become a revenue stream? Absolutely. One of the emerging trends in mobile and SaaS app monetization is the packaging of AI features as premium upgrades.

AI-powered capabilities—such as smart analytics, automatic transcription, advanced filters, real-time language translation, or intelligent writing assistants—are often gated behind paywalls or offered as value-adds in enterprise tiers. Tools like Grammarly, Notion AI, and Adobe’s generative AI suite are excellent examples of products that successfully monetize their AI capabilities.

This presents a unique opportunity for both B2B and B2C apps: offer base-level functionality to all users but reserve high-value AI tools for paying customers. You can also offer “pay-per-use” AI features (e.g., audio transcription credits or image generation tokens) to unlock new revenue streams.

For founders and product managers, AI isn’t just a tool to enhance the product—it’s a way to differentiate pricing tiers, increase average revenue per user (ARPU), and justify subscription upgrades.

Common AI Use Cases in Apps

AI integration in apps isn’t limited to futuristic scenarios or large tech companies. Today, even lean startups and mid-sized SaaS businesses are embedding AI into their products to improve functionality, reduce costs, and elevate user experience. But where exactly does AI fit within an app’s architecture? And which use cases deliver the most value to users and developers alike?

This section explores the most common and high-impact AI use cases in mobile and web applications—each grounded in real-world needs across industries such as healthcare, eCommerce, fintech, education, and productivity tools.


Customer Support AI

When users encounter issues in your app, how quickly they get help often determines whether they stay or churn. AI is now central to delivering 24/7, scalable customer support without bloating your human support team.


AI Chatbots

AI chatbots are one of the most adopted AI use cases in apps today. But what makes a chatbot truly intelligent?

Instead of using fixed rule-based scripts, modern chatbots leverage natural language understanding (NLU) to interpret user queries, infer intent, and generate relevant responses dynamically. Tools like OpenAI’s GPT-4, Google Dialogflow, or Rasa let you embed conversational agents directly into apps via SDKs or APIs.

For example, a ride-sharing app might use a chatbot to handle refund requests, ETA queries, or driver rating issues. A SaaS dashboard could guide users through feature onboarding via AI-driven chat widgets.

Beyond basic Q&A, these bots can log support tickets and even update user settings without human intervention, while escalating to human agents when a request falls outside what they can handle.
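The routing logic behind such a bot can be sketched without any vendor SDK. In this minimal example, a hypothetical keyword-overlap scorer stands in for the NLU model (in practice Dialogflow, Rasa, or an LLM would supply the intent and its confidence), and any low-confidence query is escalated to a human agent. The intents, responses, and 0.3 threshold are invented for illustration.

```python
import re
from typing import Optional

# Hypothetical intents with example keywords; a real NLU model learns these from data.
INTENT_KEYWORDS = {
    "refund_request": {"refund", "charged", "money", "back"},
    "eta_query": {"eta", "arrive", "late", "where"},
    "rating_issue": {"rating", "rate", "stars", "review"},
}

RESPONSES = {
    "refund_request": "I've opened a refund request for your last ride.",
    "eta_query": "Your driver is on the way; the live ETA is shown on the map.",
    "rating_issue": "You can update your rating from the trip history screen.",
}

CONFIDENCE_THRESHOLD = 0.3  # assumed cut-off; tune on real conversation logs


def classify_intent(message: str) -> tuple[Optional[str], float]:
    """Score each intent by the share of its keywords present in the message."""
    tokens = set(re.findall(r"[a-z]+", message.lower()))
    best_intent, best_score = None, 0.0
    for intent, keywords in INTENT_KEYWORDS.items():
        score = len(tokens & keywords) / len(keywords)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent, best_score


def handle_message(message: str) -> str:
    intent, confidence = classify_intent(message)
    if intent is None or confidence < CONFIDENCE_THRESHOLD:
        return "Connecting you with a support agent..."  # escalate to a human
    return RESPONSES[intent]
```

Keeping the confidence threshold explicit makes the escalation policy easy to audit and adjust as the underlying model improves.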


Support Ticket Triaging

AI doesn’t just assist users directly—it also helps your internal teams.

One growing use case is automated ticket classification and routing. Instead of manually sorting support tickets by priority or department, machine learning models can analyze incoming messages, detect sentiment, and tag issues for faster resolution.

If a fintech app receives a high volume of “failed transaction” complaints, AI models can flag them as critical, auto-assign them to the billing team, and detect recurring patterns to assist in root-cause analysis. This shortens resolution times and improves CSAT (Customer Satisfaction) scores across the board.
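A first version of triage can be rule-based before a trained classifier replaces it. The sketch below uses hypothetical team names, keyword lists, and a tiny negative-word list in place of a sentiment model; it tags each ticket with a category, team, and priority, and counts recurring categories to support root-cause analysis.

```python
import re
from collections import Counter
from dataclasses import dataclass

# Hypothetical routing table: category -> (owning team, trigger keywords)
ROUTES = {
    "failed_transaction": ("billing", {"failed", "declined", "transaction", "payment"}),
    "login_problem": ("auth", {"login", "password", "otp", "locked"}),
}
# Tiny stand-in for a sentiment model: words that signal frustration or urgency.
NEGATIVE_WORDS = {"angry", "unacceptable", "urgent", "again", "worst"}


@dataclass
class TriagedTicket:
    text: str
    category: str
    team: str
    priority: str


def triage(text: str) -> TriagedTicket:
    words = set(re.findall(r"[a-z]+", text.lower()))
    category, team, best_hits = "general", "support", 0
    for name, (route_team, keywords) in ROUTES.items():
        hits = len(words & keywords)
        if hits > best_hits:
            category, team, best_hits = name, route_team, hits
    # Failed transactions are always critical; negative tone also raises priority.
    is_critical = category == "failed_transaction" or bool(words & NEGATIVE_WORDS)
    return TriagedTicket(text, category, team, "critical" if is_critical else "normal")


def recurring_categories(tickets: list[TriagedTicket]) -> Counter:
    """Count tickets per category to surface patterns for root-cause analysis."""
    return Counter(ticket.category for ticket in tickets)
```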


Recommendation Systems

Ever wondered how Spotify knows what song you might like next? Or how Netflix curates your watchlist so well? Recommendation engines are one of the most commercially impactful AI use cases in both consumer and enterprise apps.


For eCommerce, Content Apps, and Marketplaces

In eCommerce platforms, recommendation models use collaborative filtering, content-based filtering, or hybrid approaches to suggest products that users are likely to buy. These models analyze user behavior, historical purchases, browsing patterns, location, and even time-of-day to serve personalized suggestions.

In marketplaces like Etsy or service platforms like Urban Company (formerly UrbanClap), AI-driven recommendations help match users with the most relevant sellers or service providers. For content apps (news, podcasts, or video), AI recommends stories that align with user interests and engagement history.

Developers often use tools like Amazon Personalize, Google Recommendations AI, or open-source libraries like LightFM to build scalable, real-time recommendation systems into their apps.
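To show what collaborative filtering means in practice, here is a minimal item-based version: items are compared by the cosine similarity of the sets of users who bought them, and a user is recommended unseen items that resemble what they already own. The purchase data is made up, and a production system would use a library such as LightFM or a managed service rather than this brute-force loop.

```python
import math
from collections import defaultdict

# Hypothetical implicit-feedback data: user -> set of purchased item IDs.
PURCHASES = {
    "alice": {"shoes", "socks", "laces"},
    "bob": {"shoes", "socks"},
    "carol": {"socks", "hat"},
    "dave": {"shoes", "laces"},
}


def item_users(purchases: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert user -> items into item -> users."""
    index = defaultdict(set)
    for user, items in purchases.items():
        for item in items:
            index[item].add(user)
    return index


def cosine(a: set[str], b: set[str]) -> float:
    """Cosine similarity of two binary vectors represented as sets."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))


def recommend(user: str, purchases: dict[str, set[str]], k: int = 2) -> list[str]:
    index = item_users(purchases)
    owned = purchases.get(user, set())
    scores = defaultdict(float)
    for candidate, buyers in index.items():
        if candidate in owned:
            continue
        # Score = sum of similarities between the candidate and each owned item.
        for item in owned:
            scores[candidate] += cosine(buyers, index[item])
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, score in ranked[:k] if score > 0]
```

For `bob`, who owns shoes and socks, this ranks laces first (laces are bought by two of the three shoe buyers) and hat second. Content-based and hybrid approaches would add item attributes on top of these co-purchase signals.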


Natural Language Processing (NLP)

Apps that work with user-generated content, documents, emails, chats, or reviews can benefit immensely from NLP-powered features. So how can apps “understand” human language?

Text Classification, Summarization, and Sentiment Analysis

- Text classification enables apps to categorize input—e.g., labeling customer feedback as “positive,” “feature request,” or “bug report.”

- Summarization lets apps distill long-form content into key points. This is especially useful in reading apps, knowledge tools, or educational platforms.

- Sentiment analysis is commonly used in feedback systems, social media monitoring apps, or customer service platforms to detect user tone and urgency.

For example, a mental wellness app might analyze journal entries to detect signs of stress or anxiety and suggest a coaching session. Tools like Hugging Face Transformers, Cohere, or OpenAI’s language models make these capabilities accessible through a few lines of code or simple API calls.
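Under the hood, a classic baseline for text classification is multinomial Naive Bayes. The sketch below trains one on a handful of made-up feedback snippets using only the standard library; it illustrates the technique, while hosted models or Hugging Face Transformers would be the practical choice at scale.

```python
import math
import re
from collections import Counter, defaultdict

# Made-up labeled feedback for illustration only.
TRAIN_TEXTS = [
    "love the new dashboard, great work",
    "the app is fast and great",
    "please add dark mode",
    "would be nice to add export to csv",
    "app crashes when I upload a photo",
    "login button is broken and crashes",
]
TRAIN_LABELS = ["positive", "positive", "feature_request", "feature_request", "bug_report", "bug_report"]


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesClassifier":
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {word for counts in self.word_counts.values() for word in counts}
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.label_counts.values())
        best_label, best_log_prob = None, -math.inf
        for label, doc_count in self.label_counts.items():
            counts = self.word_counts[label]
            denominator = sum(counts.values()) + len(self.vocab)
            log_prob = math.log(doc_count / total_docs)  # log prior P(label)
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    log_prob += math.log((counts[word] + 1) / denominator)
            if log_prob > best_log_prob:
                best_label, best_log_prob = label, log_prob
        return best_label


if __name__ == "__main__":
    clf = NaiveBayesClassifier().fit(TRAIN_TEXTS, TRAIN_LABELS)
    print(clf.predict("please add csv export"))
```

Summing log probabilities instead of multiplying raw probabilities avoids numeric underflow on longer texts.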


Computer Vision

When an app needs to “see” the real world through the camera or images, computer vision enables a host of advanced features—from real-time detection to spatial understanding.

Image Recognition, Barcode Scanning, and Face Detection

- Image recognition allows users to scan and identify products, plants, or documents directly through the app.

- Barcode and QR code scanning is essential in logistics, inventory, and eCommerce platforms for quick identification and tracking.

- Face detection and analysis powers biometric logins, liveness detection, and personalized content (e.g., AR filters or beauty apps).

Apps like Pinterest use AI to power visual search, where users upload an image and discover related products. Healthcare apps use vision models to assist in diagnostics by analyzing images of skin lesions or X-rays.

Frameworks like TensorFlow Lite, OpenCV, or Apple’s Vision framework allow developers to integrate computer vision models into native mobile applications with low latency and even offline support.


Speech Recognition and Voice AI

Can apps understand and transcribe human speech in real time? With modern speech recognition models, the answer is yes—and the applications are rapidly expanding.

AI Transcription and Voice Assistants

Speech-to-text engines are commonly used in:

- Telemedicine apps to transcribe doctor-patient conversations,

- Language learning apps for pronunciation scoring,

- Voice memo apps that auto-convert audio to text.

On the other end, voice assistants allow users to control apps with voice commands, ask questions, or complete tasks—just like Alexa or Siri. Custom voice agents can be built using tools like Google Cloud Speech-to-Text, Whisper by OpenAI, or Microsoft Azure Speech SDK.

In contexts where typing is inconvenient—like driving, walking, or accessibility needs—speech-powered AI significantly improves usability and user engagement.


AI Search

Search is no longer about matching keywords. Today, users expect intelligent, contextual search experiences. That’s where AI search comes into play.

Semantic Search, Autocomplete, and Auto-Tagging

- Semantic search allows users to type natural language queries like “find red shoes under ₹2,000” and get relevant results—even if exact keywords don’t match.

- Autocomplete suggestions improve typing efficiency and help users discover new content.

- Auto-tagging enables apps to automatically assign categories or tags to user-generated content (posts, uploads, listings), improving organization and discoverability.

Vector databases and search engines such as Pinecone, Weaviate, and OpenSearch (through its vector search support) store these embeddings and power smarter, faster search in app backends, especially for marketplaces, educational platforms, and large content catalogs.
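At its core, semantic search ranks documents by the similarity between a query embedding and each document embedding; vector databases do the same at scale with approximate nearest-neighbor indexes. The sketch below performs exact brute-force ranking with cosine similarity, and the 3-dimensional vectors are made-up stand-ins for the output of a real embedding model.

```python
import math

# Hypothetical precomputed embeddings (real ones have hundreds of dimensions).
CATALOG = {
    "red running shoes": [0.9, 0.1, 0.2],
    "crimson sneakers": [0.85, 0.15, 0.25],
    "blue denim jacket": [0.1, 0.9, 0.3],
    "leather wallet": [0.2, 0.3, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(query_vector: list[float], top_k: int = 2) -> list[tuple[str, float]]:
    """Return the top_k catalog entries most similar to the query embedding."""
    scored = [(title, cosine_similarity(query_vector, vec)) for title, vec in CATALOG.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Pretend this is the embedding of the query "red shoes".
    for title, score in semantic_search([0.88, 0.12, 0.2]):
        print(f"{title}: {score:.3f}")
```

Note that "crimson sneakers" ranks highly for a "red shoes" query even though the two share no keywords, which is exactly the behavior keyword matching cannot provide.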


Fraud Detection and Anomaly Monitoring

Security-sensitive applications—especially in fintech, insurance, and enterprise platforms—use AI to detect suspicious behavior in real time. But how?

Models trained on user activity logs, transaction data, and geolocation can flag anomalies like:

- Unexpected logins from new locations,

- High-value transactions after long inactivity,

- Repeated failed payment attempts.

Using clustering, time-series analysis, and outlier detection models, apps can proactively prevent fraud, enforce security policies, or trigger identity verification workflows. Tools like Amazon Fraud Detector or custom-built XGBoost models are common in fraud use cases.
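As a simple illustration of outlier detection, the sketch below flags new transaction amounts that lie more than three standard deviations from a user's historical mean (a z-score rule). The threshold and sample data are assumptions; real fraud systems combine many features and typically rely on trained models such as the XGBoost classifiers mentioned above.

```python
import statistics


def zscore_anomalies(history: list[float], new_amounts: list[float], threshold: float = 3.0) -> list[float]:
    """Return the amounts in new_amounts that deviate more than `threshold` std devs from history."""
    if len(history) < 2:
        raise ValueError("Need at least two historical points to estimate spread")
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        # Perfectly constant history: anything different is unusual.
        return [amount for amount in new_amounts if amount != mean]
    return [amount for amount in new_amounts if abs(amount - mean) / stdev > threshold]


if __name__ == "__main__":
    past = [40, 55, 38, 60, 47, 52, 45, 50]
    print(zscore_anomalies(past, [49, 70, 900]))
```

With this history (mean about 48, standard deviation about 7.4), a 70-unit purchase is unusual but stays under the threshold, while a 900-unit purchase is flagged for review.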


Behavior Prediction and Retention Models

Wouldn’t it be valuable to know which users are likely to uninstall your app next week? Or who might upgrade to premium? AI models trained on engagement data, feature usage, and churn patterns make this possible.

Retention models are especially common in:

- SaaS apps (forecasting renewals and cancellations),

- EdTech apps (predicting dropout risk),

- Gaming apps (identifying disengaged players).

By proactively identifying drop-off risks, you can deploy targeted interventions—discounts, re-engagement emails, in-app nudges—at exactly the right time.

These models often rely on supervised learning techniques like logistic regression, decision trees, or neural networks, and can be integrated using platforms like Vertex AI, DataRobot, or custom cloud pipelines.
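Once trained, a logistic regression churn model reduces to a weighted sum of features passed through the sigmoid function, which is cheap enough to evaluate inside a backend request. In the sketch below the feature names, weights, bias, and the 0.5 action threshold are invented for illustration; real values would come from training on your own engagement data.

```python
import math

# Hypothetical learned parameters (log-odds contribution per unit of each feature).
BIAS = -1.0
WEIGHTS = {
    "days_since_last_login": 0.15,
    "sessions_last_week": -0.4,
    "support_tickets_open": 0.6,
}


def churn_probability(features: dict[str, float]) -> float:
    """Sigmoid of the weighted feature sum; missing features count as 0."""
    z = BIAS + sum(weight * features.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def needs_intervention(features: dict[str, float], threshold: float = 0.5) -> bool:
    """Trigger a retention nudge when predicted churn risk crosses the threshold."""
    return churn_probability(features) >= threshold


if __name__ == "__main__":
    at_risk = {"days_since_last_login": 20, "sessions_last_week": 0, "support_tickets_open": 2}
    print(round(churn_probability(at_risk), 3), needs_intervention(at_risk))
```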

These use cases demonstrate that AI isn’t just a buzzword—it’s a practical tool for solving real product challenges and driving measurable business value. In the next section, we’ll walk through pre-integration planning: how to scope your AI features, decide between build vs buy, and ensure your data and architecture are AI-ready.

Pre-Integration Planning

Before you integrate any AI capability into your app, it’s crucial to go beyond the hype and ask: What problem are we solving? AI is not a one-size-fits-all solution, and poorly planned integrations often result in bloated features, unscalable infrastructure, or privacy risks. Successful AI implementation begins not with code, but with planning and clarity—on the business problem, data ecosystem, technical constraints, and cross-functional ownership.

This section walks through the critical decisions you must make before writing a single line of AI code. It’s the blueprint that determines whether your integration is strategic and sustainable—or just a costly experiment.


Identifying Clear Business and User Goals

What is the core purpose of introducing AI into your app? Are you trying to improve user engagement, reduce manual workflows, boost conversion rates, or enable a new premium feature?

A common mistake is to start with the AI capability (“Let’s add a chatbot!”) rather than the outcome (“We need to reduce support ticket load by 40% while maintaining CSAT”). Without a measurable goal, AI features often become superficial—delivering little value to users or the business.

Start by asking:

- What user pain point are we solving?

- What business metric will this AI feature improve?

- How will we measure success? (Retention uplift, support resolution time, revenue per user?)

For example, if your app has a high bounce rate during onboarding, you might explore AI-driven onboarding assistants that personalize the user journey. If your team is buried in repetitive support tickets, AI classification and triage may be the first automation to implement.

Clarity here ensures that you’re not just adding AI because it’s trendy—but because it solves a real problem that matters.


Mapping Features to AI Capabilities

Once goals are defined, the next step is matching them to AI functionalities that can deliver results. AI is a vast field—so how do you determine which type of intelligence best fits your use case?

Here are a few mappings to consider:

| Use Case | AI Capability |
|---|---|
| Personalized product recommendations | Collaborative or content-based filtering |
| Automated customer support | NLP-based chatbot or intent classifier |
| Smart document tagging | Named Entity Recognition (NER), text classification |
| Anomaly detection in transactions | Unsupervised ML, time-series analysis |
| Auto-transcription of voice notes | Speech-to-text model (ASR) |
| Facial login | On-device face detection and verification model |

For each target feature, ask:

- Does this require classification, generation, recommendation, or prediction?

- Will the model work with the available data modalities (text, image, audio, logs)?

- Do we need this in real time, or can it be processed in batch?

By aligning functional needs with specific AI capabilities, you create a focused development plan and avoid over-engineering.

Build vs Buy: Should You Use Pre-Trained Models or Build Your Own?

Should you train your own AI model or use a third-party service? This is one of the most important architectural and financial decisions in the pre-integration phase.

Use Pre-Trained or Hosted Models When:

- Your use case is common (e.g., chat summarization, sentiment analysis, image tagging)

- You lack in-house machine learning expertise

- You need fast time-to-market

- You prefer a pay-as-you-scale pricing model

Examples: OpenAI for language models, Amazon Rekognition for vision, Google ML Kit for mobile apps.

Build Custom Models When:

- You need tight control over model behavior

- Your use case is highly domain-specific (e.g., detecting anomalies in radiology images)

- You want to avoid sending data to external servers for privacy or regulatory reasons

- Long-term cost reduction justifies upfront model training investment

Even when building custom models, it’s common to fine-tune pre-trained open-source models like BERT, YOLO, or Whisper using your domain data.

The real question to ask is: “Do we want to optimize for speed and ease—or for customization and control?”

Understanding Data Availability, Structure, and Labeling

No AI model performs better than the data it’s trained or evaluated on. So before you commit to a particular AI feature, assess the current state of your data infrastructure.

Ask:

- Do we have enough high-quality data for training or fine-tuning?

- Is the data structured (e.g., tabular), semi-structured (e.g., JSON), or unstructured (e.g., text, images)?

- Do we have ground truth labels for supervised learning tasks?

- Is the data clean, de-duplicated, and compliant with privacy laws?

For example, building a recommendation engine requires historical user behavior logs. A fraud detection system needs labeled instances of both normal and anomalous transactions. An AI transcription service performs better if it’s trained on voice samples from your specific user demographic.

If your data isn’t ready, consider:

- Starting with zero-shot models that don’t need custom training

- Investing in a data labeling pipeline (manual or semi-automated)

- Logging user interactions now to prepare for model training later

AI success hinges less on code—and more on data readiness.
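Several of the data-readiness questions above can be answered with a quick audit script before any modeling starts. The sketch below assumes records arrive as dictionaries with a hypothetical `label` field, and reports row count, exact duplicates, missing labels, and class balance; the field names and report format are illustrative.

```python
import json
from collections import Counter


def audit_dataset(records: list[dict], label_field: str = "label") -> dict:
    """Summarize basic readiness signals for a supervised learning dataset."""
    # Serialize with sorted keys so field order doesn't hide duplicates.
    fingerprints = Counter(json.dumps(record, sort_keys=True) for record in records)
    duplicates = sum(count - 1 for count in fingerprints.values() if count > 1)
    labels = [record.get(label_field) for record in records]
    missing = sum(1 for label in labels if label in (None, ""))
    class_counts = Counter(label for label in labels if label not in (None, ""))
    return {
        "rows": len(records),
        "duplicate_rows": duplicates,
        "missing_labels": missing,
        "class_counts": dict(class_counts),
    }


if __name__ == "__main__":
    sample = [
        {"amount": 10, "label": "normal"},
        {"label": "normal", "amount": 10},  # same record, different key order
        {"amount": 900, "label": "fraud"},
        {"amount": 15},                      # unlabeled
    ]
    print(audit_dataset(sample))
```

A heavily skewed `class_counts` (for example, very few fraud examples) is an early signal that you may need more labeling or a different modeling approach.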

Performance Considerations: Latency, Inference Speed, Storage

How fast should the AI output be delivered? Can the model run on-device, or does it require cloud infrastructure? These aren’t just engineering questions—they affect user experience and business cost.

Key performance variables:

- Latency: Real-time UX (e.g., AI keyboard, filters, autocompletion) often requires sub-200ms response times.

- Inference Speed: Larger models are powerful but may require GPUs and introduce wait times.

- Storage: On-device models need to be lightweight (<100MB), while server-based models can be large but require bandwidth and compute resources.

- Power Usage: Critical for mobile apps where battery consumption matters.

For example:

- Voice transcription may need to work offline in a travel app—requiring on-device AI with quantized models.

- A deep learning recommendation engine for a B2B SaaS dashboard can be processed server-side with batch jobs.

In short: performance constraints should guide model selection, deployment strategy, and UX design.
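A quick back-of-the-envelope calculation shows why quantization matters for on-device storage: weight storage is roughly the parameter count times the bytes per parameter. The sketch below ignores file-format overhead and runtime memory for activations, and the 100 MB budget simply mirrors the guideline above; the 60M-parameter model is hypothetical.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}
ON_DEVICE_BUDGET_MB = 100  # mirrors the <100MB guideline; adjust per platform


def model_size_mb(num_params: int, dtype: str) -> float:
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


def fits_on_device(num_params: int, dtype: str) -> bool:
    return model_size_mb(num_params, dtype) <= ON_DEVICE_BUDGET_MB


if __name__ == "__main__":
    params = 60_000_000  # a hypothetical 60M-parameter speech model
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {model_size_mb(params, dtype):.0f} MB, fits: {fits_on_device(params, dtype)}")
```

For this example, the float32 weights take about 240 MB and float16 about 120 MB, while int8 quantization brings them to about 60 MB, inside the budget, usually at a small and measurable cost in accuracy.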

Stakeholders to Involve (Dev, Product, Compliance, UX)

AI integration is not just a developer task. It requires input from across the organization to avoid pitfalls and maximize value.

- Product Managers: Define the use case, metrics, and success criteria. They also validate feature-market fit.

- Engineers (Frontend & Backend): Handle data pipelines, model integration, and API orchestration.

- ML/AI Specialists (if in-house): Select, train, fine-tune, and evaluate models.

- Compliance & Legal: Review data handling, model transparency, user consent, and third-party API risks.

- UX/UI Designers: Ensure the AI-enhanced experience is intuitive and trustworthy. This includes fallback behaviors and explainability.

Even for small teams, getting early buy-in from all these roles prevents misalignment down the line.

For example, deploying a facial recognition feature without UX involvement might result in unclear camera prompts or no guidance on lighting, leading to failed scans and user frustration. Or launching an AI chatbot without compliance review may violate data privacy laws if logs are stored improperly.

Pre-integration planning is where you lay the groundwork for AI that actually works—for users and the business. By clarifying goals, matching features to the right AI technique, evaluating the build vs buy trade-off, ensuring data readiness, and aligning stakeholders, you can avoid common pitfalls and move confidently into development.

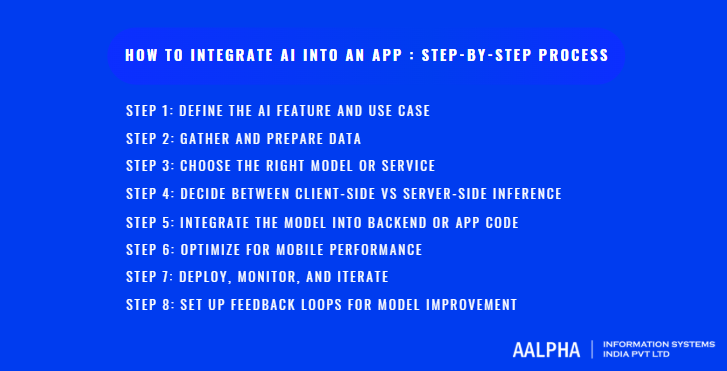

How to Integrate AI into an App: Step-by-Step Process

Successfully integrating AI into an app is not just about choosing a tool or plugging in a model. It requires a well-defined roadmap that spans business alignment, data preparation, model selection, deployment architecture, and feedback-driven optimization. This step-by-step section provides a comprehensive guide that software development teams can follow—whether they’re adding AI to an MVP or scaling it across a production-grade system.

Let’s walk through each stage, from concept to deployment.

Step 1: Define the AI Feature and Use Case

Before writing a single line of code, clarify the purpose behind the AI integration. What specific task do you want AI to perform, and how will it benefit the user?

For example:

- A fitness app might need smart search that understands natural language queries like “low-impact home workouts under 20 minutes.”

- A B2B SaaS tool might want to offer a chatbot that answers FAQs, updates account settings, and escalates issues.

- An eCommerce platform may need personalized product recommendations that adapt in real time to browsing behavior.

Start with these key framing questions:

- What decision or task are we automating or enhancing?

- How will this feature improve a specific business metric (e.g., support resolution time, conversion rate)?

- Is the AI output predictive, choosing from a fixed set of outcomes (e.g., classification), or generative, producing open-ended content (e.g., content creation)?

This step aligns product goals with technical feasibility and keeps the implementation grounded in user value.

If your team lacks in-house AI expertise or struggles to scope the right use case, working with a specialized AI integration partner like Aalpha can accelerate the process. Aalpha helps businesses map out use cases that align with core KPIs, whether it’s integrating smart search into a headless commerce platform or deploying AI-driven personalization into an existing SaaS product. Their approach blends technical execution with outcome-driven strategy—making them ideal for companies launching AI-powered features for the first time.

Step 2: Gather and Prepare Data

AI models are only as good as the data they learn from. So how do you prepare your data for AI use?

Start by identifying the data required to power the feature. For a recommendation engine, this might include user behavior logs, purchase history, and product metadata. For a support triage system, it might involve labeled tickets categorized by urgency and topic.

Once the raw data is identified, focus on:

- Cleaning: Remove duplicates, fill missing values, correct errors.

- Normalization: Standardize formats—e.g., timestamps, prices, categories.

- Labeling: Add human-generated tags or labels if you’re using supervised learning. This step is essential for training classification or regression models.

- Structuring: Convert data into model-friendly formats such as CSV, JSON, NumPy arrays, or framework-specific formats like TFRecord.
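As a rough illustration of the cleaning and normalization steps, here is a minimal sketch for a hypothetical interaction log. It assumes each record is a dict with `user_id`, `timestamp` (ISO 8601 with a UTC offset), `item_id`, `price`, and `category` fields; the field names and the `clean_records` helper are illustrative, not a prescribed schema.

```python
from datetime import datetime, timezone

FIELDS = ("user_id", "timestamp", "item_id", "price", "category")

def clean_records(records):
    """Normalize, fill gaps in, and de-duplicate raw interaction records.

    Assumes ISO 8601 timestamps that include a UTC offset
    (e.g. "2024-03-01T10:00:00+02:00") and prices as strings or numbers.
    """
    seen = set()
    cleaned = []
    for rec in records:
        # Normalization: convert every timestamp to UTC so events sort consistently.
        ts = datetime.fromisoformat(rec["timestamp"]).astimezone(timezone.utc)
        # Normalization: strip currency symbols and thousands separators from prices.
        price = float(str(rec.get("price") or 0).replace("$", "").replace(",", ""))
        # Cleaning: fill missing categories and standardize case and whitespace.
        category = (rec.get("category") or "unknown").strip().lower()
        row = (str(rec["user_id"]), ts.isoformat(), str(rec["item_id"]), price, category)
        # De-duplication: drop rows that are identical after normalization.
        if row in seen:
            continue
        seen.add(row)
        cleaned.append(dict(zip(FIELDS, row)))
    return cleaned
```

Normalizing before de-duplicating matters: two log lines recorded in different time zones or price formats only collapse into one once they share a canonical form.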

If you don’t have large proprietary datasets, consider:

- Synthetic data generation (for low-sample use cases)

- Pre-labeled datasets from open repositories like Kaggle or Hugging Face Datasets

- Zero-shot or few-shot models that require minimal data

Also consider privacy. Are you collecting user data that falls under GDPR or CCPA? If yes, anonymization or consent-based logging may be required.

Step 3: Choose the Right Model or Service

Once you have a clean, labeled dataset or clear use case, the next step is selecting the model that powers your feature. Should you fine-tune an open-source model, use a hosted API, or train your own from scratch?

Here are the most common paths:

1. Pre-trained hosted APIs

Ideal for common use cases like summarization, transcription, sentiment analysis, or image tagging. Providers include:

- OpenAI (GPT models, Whisper, and embeddings APIs),

- Google ML Kit (mobile-friendly NLP and vision),

- Amazon Rekognition (image and video analysis),

- AssemblyAI, Cohere, Replicate, etc.

Fastest to deploy and lowest complexity—but often limited customization.

2. Open-source model libraries

Best when you need more control. Hugging Face hosts models for NLP, vision, audio, and more. Popular libraries and models include:

- Transformers (Hugging Face),

- YOLOv8 (vision),

- Stable Diffusion (image generation),

- T5, BERT, LLaMA (language tasks).

You can fine-tune these on your domain-specific data.

3. Custom model training

Suitable for companies with unique data or proprietary needs—like detecting fraudulent invoices or optimizing warehouse logistics. This requires training from scratch using PyTorch, TensorFlow, or Keras, and typically involves:

- GPU-based infrastructure (local or cloud),

- MLOps pipelines for training and testing,

- Hyperparameter tuning, validation sets, and regularization.

Ask yourself: Is this use case general (off-the-shelf models work), or specific (custom training needed)?

Step 4: Decide Between Client-Side vs Server-Side Inference

Where should your model run—on the user’s device or your cloud servers? This decision impacts latency, privacy, architecture, and cost.

Client-Side AI (on-device inference)

Run the model inside the app, using mobile SDKs.

Best for:

- Real-time responsiveness (e.g., smart camera filters)

- Offline support (e.g., travel apps)

- Privacy-sensitive use cases (e.g., health data)

Tools:

- Core ML (iOS),

- TensorFlow Lite (Android, also available for iOS),

- MediaPipe, ONNX Runtime Mobile

Constraints: Limited model size, frequent need for quantization, and device-specific testing.

Server-Side AI (cloud inference)

Run models on cloud servers via API requests.

Best for:

- Large models like GPT-4 or LLaMA 3

- Workflows requiring external data sources

- Real-time integrations across tools

Tools: AWS SageMaker, Google Vertex AI, custom REST APIs

Trade-offs: Slower if network latency is high, but better for complex reasoning and multi-user scale.

In general: if your AI task must happen instantly and offline, go on-device. If it involves dynamic data, large models, or proprietary logic you don’t want to ship inside the app, use server-side inference.

Step 5: Integrate the Model into Backend or App Code

Once the model is selected and hosted appropriately, the next step is technical integration. How do you plug the AI capability into your mobile or web app?

Options for Integration:

- REST APIs:

Use HTTP endpoints to send data and receive AI output. Most AI platforms expose REST interfaces.

- gRPC or WebSockets:

Use for lower latency or streaming outputs (e.g., transcription, chat). Ideal for real-time apps.

- Mobile SDKs:

Google ML Kit, Apple VisionKit, or Hugging Face’s iOS/Android libraries provide model access with native bindings.

- Edge deployment packages:

Use ONNX or TFLite to bundle models directly into app packages for on-device inference.

Integrate the AI logic into the feature’s controller or service layer. Always provide fallback logic in case of API failure or edge-case errors.
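A minimal sketch of the REST option with fallback logic, using only Python’s standard library. The endpoint URL, bearer-token header, and `{"label": ...}` response shape are hypothetical placeholders; real providers each define their own request format, so treat this as a pattern rather than any vendor’s API.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Tuple

def post_json(url: str, payload: dict, api_key: str, timeout_s: float = 5.0) -> dict:
    """POST a JSON payload to an inference endpoint and parse the JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    # urlopen raises URLError/HTTPError (both OSError subclasses) or a timeout error.
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return json.loads(response.read().decode("utf-8"))

def infer_with_fallback(
    call: Callable[[], Any],
    fallback: Callable[[], Any],
    retries: int = 2,
    backoff_s: float = 0.2,
) -> Tuple[Any, bool]:
    """Run an AI call with retries; return (result, used_fallback)."""
    for attempt in range(retries + 1):
        try:
            return call(), False
        except (OSError, ValueError):
            # Network errors, timeouts, and malformed JSON are treated as transient here.
            if attempt < retries:
                time.sleep(backoff_s * (2 ** attempt))  # exponential backoff
    # All attempts failed: degrade gracefully instead of breaking the feature.
    return fallback(), True

# Usage (hypothetical endpoint and response shape):
# result, degraded = infer_with_fallback(
#     lambda: post_json("https://api.example.com/v1/classify", {"text": "..."}, api_key="..."),
#     fallback=lambda: {"label": "unknown"},
# )
```

Returning a `used_fallback` flag lets the UI tell the user that a simpler result is being shown. A production client would probably skip retries for 4xx errors such as invalid credentials.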

Step 6: Optimize for Mobile Performance

When deploying to mobile, performance matters. You can’t afford a model that slows the app, drains battery, or eats bandwidth.

Key Optimization Techniques:

- Model quantization: Convert 32-bit float weights to 8-bit integers. Reduces model size and improves speed.

- Pruning: Remove redundant neurons or weights to reduce inference time.

- Hardware acceleration: Use TensorFlow Lite delegates (such as the GPU or NNAPI delegate) on Android, or Core ML on iOS, which can run models on the Apple Neural Engine.

- Batching: If sending to server, batch requests to reduce API calls.
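To make the quantization bullet concrete, here is a minimal sketch of per-tensor affine int8 quantization in plain Python. It is a simplified illustration of the idea. Frameworks like TensorFlow Lite use their own, more refined schemes (for example, per-channel scales), so the function names and details here are assumptions, not any framework’s API.

```python
def quantize_int8(weights):
    """Per-tensor affine quantization of float weights to int8 values.

    Returns (quantized values, scale, zero_point). Storing int8 instead of
    float32 cuts weight storage by 4x.
    """
    # Include 0.0 in the range so zero (e.g. padding) is represented exactly.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255 or 1.0  # 255 steps between 256 int8 levels; guard all-zero input
    zero_point = round(-128 - w_min / scale)  # the int8 value that represents 0.0
    quantized = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return quantized, scale, zero_point

def dequantize_int8(quantized, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(q - zero_point) * scale for q in quantized]

weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(q, scale, zero_point)
```

Each restored weight is off by roughly half a quantization step at most, which is why well-calibrated int8 models usually lose only a little accuracy.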

Also, cache results where possible. For instance, if the user has already generated a product description via AI, store it locally instead of reprocessing it each time.
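A minimal in-memory sketch of that caching idea, keyed on a hash of the model name, normalized prompt, and generation parameters. The `InferenceCache` class is hypothetical. On a device you would more likely persist entries to local storage (for example, SQLite), but the keying logic stays the same.

```python
import hashlib
import json

class InferenceCache:
    """Cache AI outputs keyed by a hash of the normalized request (in-memory sketch)."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, prompt: str, params: dict) -> str:
        # Include the model name and params so a model upgrade never serves stale outputs.
        canonical = json.dumps(
            {"model": model, "prompt": prompt.strip(), "params": params}, sort_keys=True
        )
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_compute(self, model: str, prompt: str, params: dict, compute):
        k = self.key(model, prompt, params)
        if k in self._store:
            self.hits += 1
            return self._store[k]
        self.misses += 1
        result = compute()  # only call the model on a cache miss
        self._store[k] = result
        return result
```

Caching works best for deterministic settings (e.g., temperature 0). With sampling enabled, users may expect a fresh result each time they ask.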

Step 7: Deploy, Monitor, and Iterate

Once integrated, the work isn’t done. AI models can drift over time or behave unpredictably in production. So how do you ensure the AI feature performs as expected?

Deployment Strategies:

- Shadow launches: Run AI in the background without exposing results to users, to validate accuracy.

- A/B testing: Compare AI-enhanced UX to baseline UX.

- Progressive rollout: Deploy the model to 10%, 25%, 100% of users in phases.
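One common way to implement a progressive rollout is deterministic hash bucketing: each user maps to a stable bucket, so anyone enabled at 10% stays enabled at 25% and 100%. This is a sketch; `rollout_bucket`, `is_enabled`, and the feature name are hypothetical, and feature-flag services provide equivalent functionality.

```python
import hashlib

def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket in [0, 9999] for a given feature."""
    # Salting with the feature name gives each feature an independent user split.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000

def is_enabled(user_id: str, feature: str, percent: float) -> bool:
    """True if the user falls inside the current rollout percentage (0-100)."""
    return rollout_bucket(user_id, feature) < percent * 100
```

Because assignment depends only on the user ID, raising the percentage adds users without reshuffling the existing cohort, which keeps A/B comparisons clean.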

Monitoring Metrics:

- Inference time (latency),

- Model confidence scores,

- Conversion or engagement uplift,

- User satisfaction or complaint rates,

- System errors or fallbacks triggered.

Use tools like Datadog, Sentry, or custom dashboards to monitor real-time outputs and performance metrics.
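Before wiring up a dashboard, it helps to know exactly what you will compute. The hypothetical `InferenceMetrics` class below tracks several of the metrics listed above (latency percentiles, fallback rate, and the share of low-confidence predictions). The 0.5 confidence threshold is an illustrative assumption.

```python
import math
from dataclasses import dataclass, field
from typing import List

@dataclass
class InferenceMetrics:
    """Counters for an AI feature's production health (in-memory sketch)."""
    latencies_ms: List[float] = field(default_factory=list)
    total: int = 0
    fallbacks: int = 0
    low_confidence: int = 0

    def record(self, latency_ms: float, confidence: float, used_fallback: bool,
               confidence_threshold: float = 0.5) -> None:
        self.total += 1
        self.latencies_ms.append(latency_ms)
        self.fallbacks += int(used_fallback)
        self.low_confidence += int(confidence < confidence_threshold)

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile of recorded latencies (p in 0-100)."""
        ordered = sorted(self.latencies_ms)
        rank = max(1, math.ceil(p * len(ordered) / 100))
        return ordered[rank - 1]

    def summary(self) -> dict:
        return {
            "p50_ms": self.percentile(50),
            "p95_ms": self.percentile(95),
            "fallback_rate": self.fallbacks / self.total,
            "low_confidence_rate": self.low_confidence / self.total,
        }
```

Tail latency (p95) usually matters more than the average for UX, and a rising low-confidence rate is often an early signal of input drift.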

Always assume that your model will need fine-tuning or updating as your app scales and new data becomes available.

Step 8: Set Up Feedback Loops for Model Improvement

AI isn’t static. To improve accuracy and performance, set up mechanisms to collect user feedback, validate model outputs, and retrain periodically.

Types of Feedback Loops:

- Explicit feedback: Users rate suggestions, label results, or flag errors.

- Implicit feedback: App logs user actions (e.g., “clicked recommended item,” “ignored suggestion”).

- Human-in-the-loop: Manual QA teams validate outputs at regular intervals.

- Retraining pipelines: Automatically retrain models on fresh data every 30/60/90 days.
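To show how explicit and implicit signals might be combined into retraining labels, here is a sketch. The event names (`clicked`, `ignored`, `thumbs_up`, and so on) are hypothetical. The design choice it encodes is that explicit feedback overrides implicit feedback for the same user and item, because a thumbs-down is stronger evidence than a click.

```python
EXPLICIT = {"thumbs_up": 1, "thumbs_down": 0, "flag_error": 0}
IMPLICIT = {"clicked": 1, "ignored": 0}

def feedback_to_labels(events):
    """Convert feedback events into {(user_id, item_id): label} training labels."""
    labels = {}
    explicit_keys = set()
    for event in events:
        key = (event["user_id"], event["item_id"])
        action = event["action"]
        if action in EXPLICIT:
            # Explicit feedback always wins; the latest explicit signal is kept.
            labels[key] = EXPLICIT[action]
            explicit_keys.add(key)
        elif action in IMPLICIT and key not in explicit_keys:
            # Implicit signals only apply where the user gave no explicit feedback.
            labels[key] = IMPLICIT[action]
        # Unknown actions are ignored rather than guessed at.
    return labels
```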

The goal is to use production signals—not just test datasets—to make your model smarter over time.

Over time, this leads to:

- Better personalization,

- Higher model confidence,

- Reduced edge cases or false positives,

- Competitive differentiation through model learning.

Building AI features is a structured process that combines strategic planning, robust data pipelines, architectural decisions, and rigorous post-launch monitoring. When executed well, AI moves from a “wow” feature to a core engine that drives engagement, monetization, and operational efficiency.

Choosing the Right AI Tools, APIs & Frameworks

Selecting the right set of tools, APIs, and frameworks is one of the most important strategic decisions in any AI-powered app project. With dozens of mature platforms available—from OpenAI and Google ML Kit to Hugging Face and AssemblyAI—it’s easy to get lost in the noise. The real challenge isn’t finding tools, but choosing the ones that fit your app’s functional goals, technical constraints, and user expectations.

This section breaks down the most widely used AI platforms, explains how to align them with specific use cases like vision, speech, or recommendations, and guides you through deployment decisions like cloud versus edge. We’ll also address whether to rely on proprietary APIs or open-source frameworks—and where Aalpha fits in if you need expert assistance in choosing your stack.

Understanding the Leading AI Platforms

Let’s start with a plain-language breakdown of the top platforms developers turn to for integrating AI into apps.

OpenAI is the go-to platform when your app needs natural language processing at a high level—like generating content, summarizing text, powering conversational chatbots, or running semantic search. Tools like GPT-4, GPT-3.5, Whisper (for speech recognition), and DALL·E (for image generation) are available via a well-documented REST API. The upside is that you get access to powerful large language models without managing any infrastructure. The downside is limited transparency and high per-token costs for apps with millions of users.

Google ML Kit is ideal if you’re building for mobile platforms and need on-device AI features like face detection, barcode scanning, text recognition, translation, or real-time pose estimation. It works on both Android and iOS through native SDKs, and everything runs locally on the device, meaning your app can offer AI features even when offline. It’s not suited for deep NLP tasks or customizable models, but it excels in performance-sensitive, lightweight mobile applications.

AWS AI Services, including Rekognition for image/video analysis, Comprehend for NLP, Transcribe for audio, and SageMaker for training/deployment, provide a robust enterprise-grade ecosystem. These tools are built for companies that need tight security, audit trails, and compliance support. They also integrate deeply with other AWS services like Lambda, DynamoDB, and Kinesis. That makes AWS a strong choice for building healthcare, fintech, or logistics applications that require scalability and reliability across distributed infrastructure.

Hugging Face is a leading platform for open-source machine learning. If your team wants full control over model training, fine-tuning, and deployment, their Transformers library gives you access to thousands of pre-trained models across NLP, computer vision, and multimodal AI. You can use models like BERT, LLaMA, T5, and CLIP, or fine-tune them on your domain-specific data. It’s more complex than a simple API call, but it gives you flexibility and cost control in the long term.

AssemblyAI focuses exclusively on audio intelligence. If your app deals with transcriptions, voice commands, call recordings, or podcasts, this platform provides speech-to-text, sentiment detection, summarization, speaker diarization, and topic analysis—all in one API. It’s ideal for building note-taking tools, audio-based learning apps, or voice analytics in customer support systems.

Replicate gives you access to trending open-source AI models through cloud-hosted APIs. It’s especially useful if you want to experiment with models like Stable Diffusion, Whisper, or GPT-J without managing servers or containers. For quick deployment of generative features—like image creation or voice cloning—it’s one of the easiest tools to work with. The trade-off is you don’t have much control over performance tuning or model customization.

Pinecone is a specialized vector database platform used to implement semantic search, LLM memory, and recommendation engines. It allows you to store and retrieve high-dimensional vectors (like those from OpenAI embeddings or Sentence Transformers), enabling similarity-based retrieval across documents, products, or user interactions. If your app requires AI-powered search, real-time personalization, or persistent memory for agents, a vector database like Pinecone becomes essential infrastructure.

Matching Tools to Specific AI Use Cases

The best way to narrow down your toolset is by mapping tools to the specific functionality you’re implementing.

For chatbots, semantic search, document summarization, or content generation, platforms like OpenAI or Hugging Face are the most natural fit. They offer pre-trained models with strong support for natural language understanding and generation tasks.

If you’re building a feature that involves voice commands, audio transcription, or voice-based analytics, then tools like Whisper, AssemblyAI, or Google Cloud Speech-to-Text are more appropriate. Depending on the service, these offer high-accuracy transcription along with speaker labeling, sentiment analysis, or keyword spotting.

In the case of image recognition, document scanning, or real-time camera use, consider Google ML Kit for on-device use or AWS Rekognition for cloud-based vision. For more customizable pipelines, open-source models like YOLO or MobileNet can be fine-tuned using TensorFlow or PyTorch.

Apps that rely on recommendations, semantic similarity, or personalization—like eCommerce platforms or learning tools—often combine vector search tools like Pinecone with LLM embeddings from OpenAI, Cohere, or open-source models. This pairing allows your app to match products, users, or articles based on meaning, not just keywords.

When your app involves smart search, content tagging, or information retrieval, semantic vector indexing becomes essential. This is where Pinecone, Weaviate, or Vespa come into play—paired with embedding models to power modern, AI-native search functions.
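At its core, a vector database answers one question: which stored embeddings point in nearly the same direction as the query embedding? The brute-force sketch below shows that computation with cosine similarity. The tiny 3-dimensional vectors are made up for illustration (real embeddings have hundreds of dimensions and come from an embedding model), and this is not Pinecone’s API. Managed vector databases use approximate indexes to make the same kind of search fast at scale.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def top_k(query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 3) -> List[Tuple[str, float]]:
    """Exact (brute-force) nearest-neighbour search over an in-memory index."""
    scores = {doc_id: cosine_similarity(query, vec) for doc_id, vec in index.items()}
    return heapq.nlargest(k, scores.items(), key=lambda item: item[1])

# Toy vectors standing in for real embeddings.
index = {
    "running-shoes": [0.9, 0.1, 0.0],
    "trail-shoes": [0.8, 0.2, 0.1],
    "coffee-mug": [0.0, 0.1, 0.9],
}
results = top_k([1.0, 0.1, 0.0], index, k=2)
```

Here the query ranks both shoe products above the mug, even though none of them share a keyword with the query.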

Deciding Between Cloud and Edge AI

Another key decision is where your AI runs. Should it happen on-device (edge inference) or on your server (cloud inference)?

Cloud-based inference gives you access to more powerful models like GPT-4, Stable Diffusion, or fine-tuned LLaMA. It’s ideal when you need to perform heavy computation, access real-time external data, or coordinate workflows across systems. However, cloud inference requires stable internet connectivity and introduces latency.

On-device inference, typically implemented via TensorFlow Lite, Apple Core ML, or MediaPipe, offers near-instant responses, privacy, and offline functionality. This is critical in mobile apps where users expect real-time responsiveness, such as camera-based apps, fitness trackers, or translation tools. The trade-off is that model sizes must be small and inference logic must be efficient.

Some apps adopt a hybrid model: run basic AI locally (e.g., for detection or user feedback), and call cloud models for more advanced processing when bandwidth and battery permit.
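A hybrid setup usually reduces to a small routing rule. The sketch below runs the on-device model first and escalates to the cloud only when the local answer is uncertain and the device is online with enough battery. The model callables, the 0.8 confidence threshold, and the 20% battery floor are hypothetical values to tune per app.

```python
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (label, confidence)

def hybrid_infer(
    x,
    local_model: Callable[[object], Prediction],
    cloud_model: Callable[[object], Prediction],
    online: bool,
    battery_pct: float,
    min_confidence: float = 0.8,
    min_battery_pct: float = 20.0,
) -> Tuple[str, float, str]:
    """Run the on-device model first; escalate to the cloud only when needed and allowed."""
    label, confidence = local_model(x)
    # Keep the local answer if it is confident, or if a cloud call isn't possible or affordable.
    if confidence >= min_confidence or not online or battery_pct < min_battery_pct:
        return label, confidence, "on-device"
    label, confidence = cloud_model(x)
    return label, confidence, "cloud"
```

Returning where the answer came from makes it easy to log how often the cloud path is used, which feeds directly into cost estimates.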

SDKs for Mobile Platforms

For developers building AI-powered mobile apps, SDK availability is crucial. Google ML Kit provides a highly optimized set of SDKs for Android and iOS, with built-in modules for image labeling, face detection, and translation. Apple’s Core ML allows iOS apps to run machine learning models natively and works alongside Apple frameworks such as Vision and ARKit.

TensorFlow Lite is the most flexible option for Android apps needing lightweight custom models. You can train a model in TensorFlow or PyTorch and convert it for mobile deployment. Hugging Face also offers mobile bindings and lightweight model variants, although they require more setup.

MediaPipe is a powerful library for on-device vision tasks—especially real-time pose detection and hand tracking. It’s widely used in fitness, AR, and social apps where performance is critical.

If you’re planning to launch a cross-platform AI-powered mobile app, these SDKs determine what’s realistically possible within your memory, power, and speed constraints.

Open-Source vs Proprietary AI Tools

A common question is whether to build with open-source models or rely on proprietary APIs.

Proprietary tools like OpenAI, AssemblyAI, and AWS provide high performance, fast integration, and managed infrastructure. They’re perfect for MVPs, small teams, and non-technical founders. But you pay per request, have limited transparency, and often can’t customize the model beyond a prompt.

Open-source tools like Hugging Face Transformers, Sentence Transformers, or YOLO give you full control, no per-request API fees (though you still pay for the compute that hosts them), and the ability to fine-tune models on your own data. However, they require ML engineering resources and come with the burden of managing model deployment, updates, and monitoring.

For many businesses, the best strategy is hybrid: start with proprietary APIs to validate your feature, then migrate to open-source models when you need control or scale.

How Aalpha Helps You Choose and Implement the Right AI Stack

If your internal team lacks deep experience in machine learning infrastructure, model selection, or cross-platform SDK integration, this is where Aalpha can make a strategic difference.

Aalpha acts as an AI consulting and implementation partner, helping software companies and digital product teams:

- Evaluate use cases and identify the right tool-model combination,

- Compare hosted vs self-hosted options based on privacy, latency, and cost,

- Fine-tune open-source models when proprietary APIs hit limits,

- Architect scalable pipelines for real-time AI features across web and mobile.

Whether you’re embedding AI into a fintech dashboard, a healthcare mobile app, or an eCommerce platform, Aalpha brings both engineering precision and business strategy to your stack selection—ensuring your AI implementation is fast, secure, and sustainable.

Challenges and How to Overcome Them

Integrating AI into your app introduces tremendous capabilities—but it also comes with complex technical, ethical, and usability challenges that aren’t present in traditional software. If not properly addressed, these challenges can compromise product quality, degrade user trust, and significantly increase AI development costs. This section outlines the most common pitfalls in AI integration and provides practical guidance to overcome them.

Model Accuracy and Generalization

AI models rarely perform at 100% accuracy, especially when exposed to real-world data that differs from their training set. A language model trained on generic public datasets may misunderstand domain-specific queries. A vision model trained on clean images might fail on low-light or cluttered photos from user devices.

This mismatch between training and production data is known as distribution shift (or dataset shift). It raises generalization error, the model’s error on data it has not seen before, and it’s one of the primary reasons AI features behave inconsistently in live apps.

To mitigate this, use these strategies:

- Fine-tune models on data specific to your domain or user base.

- Incorporate data augmentation techniques to simulate real-world variability.

- Regularly monitor model performance in production using precision, recall, and error rates.

- Use shadow mode deployment to test new models without exposing outputs to users.
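Precision and recall are simple to compute once you have a labeled sample of production predictions (for example, from human review of shadow-mode outputs). Here is a plain-Python sketch for a binary task. The `positive` label parameter is an assumption about how your classes are encoded.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)  # correct alerts
    fp = sum(1 for t, p in pairs if p == positive and t != positive)  # false alarms
    fn = sum(1 for t, p in pairs if p != positive and t == positive)  # misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Comparing these numbers on production samples against the same numbers on your offline test set is a direct way to spot generalization problems.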

For example, an app offering AI-powered job matching should train on resumes and job descriptions relevant to its specific region and industry, not just general English text from the internet.

Data Privacy and User Trust

When apps collect behavioral, biometric, or conversational data to feed AI models, privacy becomes a front-and-center concern. Users are increasingly wary of apps that feel invasive or that don’t explain how data is used. Regulations like GDPR, HIPAA, and CCPA make this not just a design issue—but a legal one.

To maintain compliance and build user trust:

- Minimize data collection—capture only what is essential for the AI feature to function.

- Use on-device inference wherever possible to avoid transmitting sensitive data.

- Ensure data is anonymized before being logged or used for training.

- Offer transparent user consent, clearly stating what data is collected and how it will be used.

- Allow users to opt out of AI-powered features or data sharing.

For instance, a journaling app offering sentiment analysis should allow users to disable emotional insights and ensure that no journal content is stored on external servers.

Device Limitations (Storage, Compute, Battery)

AI models—especially those involving computer vision, language generation, or real-time inference—can be resource-intensive. On mobile devices, this can lead to long load times, increased battery drain, and app crashes due to memory overload.

To address this, optimize your models and infrastructure:

- Use quantized or distilled models for mobile environments. These models are smaller and faster, usually with only a modest drop in accuracy.

- Offload heavy computation to server-side inference when the user is online.

- Employ lazy loading to download models only when needed.

- Cache outputs or reuse inferences to avoid repeated computations.

- Profile performance using tools like Android Profiler or Instruments (iOS) during development.

For example, a photo editing app that adds AI-generated filters should use on-device inference only for lightweight models, and stream more complex effects from the cloud.

Poor UX from Over-Automation

AI is powerful, but when it automates tasks too aggressively—or unpredictably—it can frustrate users. Features that interrupt natural workflows, make incorrect assumptions, or override user intent damage trust and lead to abandonment.

To avoid this:

- Maintain user control—AI should suggest, not dictate. Always provide manual override options.

- Ensure AI outputs are previewable and reversible (e.g., undo buttons, confirmation prompts).

- Allow feedback on AI decisions to capture user sentiment and gather training data.

- Clearly explain what the AI is doing, using simple language or visual cues.

For instance, if a document scanner auto-crops and enhances an image, let the user review and adjust the results before saving. This balance between automation and agency is crucial for long-term adoption.

AI Hallucinations and Explainability

Large language models and other generative AI systems often produce hallucinations—plausible-sounding but factually incorrect outputs. This can be particularly dangerous in healthcare, legal, financial, or educational contexts.

Compounding this is the black-box nature of many models. Users and developers alike may not understand how a given prediction was made.

To handle this:

- Use domain-constrained prompts or input templates to limit hallucinations.

- Apply retrieval-augmented generation (RAG) to ground AI outputs in verifiable documents.

- Implement explanation UIs, showing why the AI made a recommendation or prediction.

- Highlight confidence levels or uncertainty when relevant, rather than overpromising precision.
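The grounding step of RAG can be sketched as follows: retrieve relevant source passages, then build a prompt that restricts the model to those sources and asks it to cite them. To keep the example self-contained, retrieval here uses simple keyword overlap instead of embedding search. That is a deliberate simplification, and the document IDs and prompt wording are hypothetical.

```python
import re

def _tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, docs, k=2):
    """Rank documents by keyword overlap with the question (stand-in for vector search)."""
    q = _tokens(question)
    scored = [(len(q & _tokens(body)), doc_id) for doc_id, body in docs.items()]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [doc_id for _, doc_id in scored[:k]]

def build_grounded_prompt(question, docs, k=2):
    """Return a prompt limited to retrieved sources, or None if nothing relevant was found."""
    ids = retrieve(question, docs, k)
    if not ids:
        return None  # show "no answer found" in the UI instead of letting the model guess
    sources = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below and cite source IDs in brackets. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )
```

Because every source carries an ID, the app can turn citations in the model’s answer into links back to the underlying records.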

For example, a finance app using GPT-4 to summarize monthly transactions should clearly indicate that the summary is AI-generated and link back to source data.
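Explanation UIs are easiest to build for scoring models whose output decomposes into per-feature parts. Below is a sketch for a hypothetical linear risk score: each contribution is the weight times the feature’s distance from a baseline. When the baseline is the feature mean and features are assumed independent, these contributions coincide with SHAP values for a linear model. The weights and feature names are made up for illustration.

```python
def explain_linear(weights, bias, features, baseline):
    """Per-feature contributions to a linear score, relative to a baseline input.

    Invariant: base_score + sum(contributions) == score.
    """
    contributions = {name: weights[name] * (features[name] - baseline[name]) for name in weights}
    score = bias + sum(weights[name] * features[name] for name in weights)
    base_score = bias + sum(weights[name] * baseline[name] for name in weights)
    # Largest absolute contributions first: these drive the "why" shown to the user.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, base_score, ranked

weights = {"amount_zscore": 1.2, "new_device": 0.8, "account_age_years": -0.3}
features = {"amount_zscore": 3.0, "new_device": 1.0, "account_age_years": 5.0}
baseline = {"amount_zscore": 0.0, "new_device": 0.1, "account_age_years": 4.0}
score, base_score, ranked = explain_linear(weights, -2.0, features, baseline)
```

The top entry of `ranked` (here the unusually large amount) can be rendered as a plain-language reason next to the decision.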

How to Test and QA AI-Driven Features

Traditional QA approaches fall short when it comes to AI. There’s no fixed “expected output” for many AI features, and the variability of real-world inputs makes edge cases difficult to predict.

Effective AI QA involves a combination of:

- Test datasets: Curate representative datasets that simulate edge cases, adversarial inputs, and domain-specific examples.

- Unit and integration testing: Test how AI outputs affect downstream app components.

- Bias audits: Evaluate how models perform across different user demographics, device types, or geographies.

- Human review loops: Have QA testers rate AI outputs for quality, especially in language, vision, or audio tasks.

- Monitoring in production: Track drift in accuracy, input failure rates, and frequency of user corrections or rejections.
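A bias audit can start as simply as computing accuracy per segment on a labeled test set and flagging segments that trail the best one. In this sketch, the record fields, segment names, and the 5-point gap threshold are hypothetical choices.

```python
from collections import defaultdict

def accuracy_by_segment(records, max_gap=0.05):
    """Accuracy per segment, plus segments trailing the best one by more than max_gap.

    Each record is a dict with 'segment', 'label', and 'prediction' keys.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        total[r["segment"]] += 1
        correct[r["segment"]] += int(r["label"] == r["prediction"])
    accuracy = {seg: correct[seg] / total[seg] for seg in total}
    best = max(accuracy.values())
    flagged = sorted(seg for seg, acc in accuracy.items() if best - acc > max_gap)
    return accuracy, flagged
```

Segments can be demographics, device types, or regions. Small segments give noisy estimates, so it is worth checking sample sizes before acting on a flag.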

Also consider canary deployments: release AI features to a small user segment before scaling. This lets you observe behavior on real production traffic while limiting the impact of any failure.

For apps that rely heavily on LLMs or generative outputs, it may also be helpful to build a sandbox environment where your product, UX, and QA teams can run exploratory tests without affecting production data.

AI integration is as much about risk management as it is about innovation. From ensuring data privacy and performance to managing hallucinations and automation fatigue, building trustworthy and reliable AI features requires a disciplined approach. By anticipating these challenges early—through model evaluation, performance testing, user feedback, and governance—you can launch AI-driven apps that are not only powerful, but also safe, ethical, and user-centric.

Compliance, Privacy & Security Considerations

As AI becomes embedded in everyday apps—from health tracking and finance to messaging and shopping—data privacy and compliance can no longer be treated as afterthoughts. AI models rely on large volumes of user-generated data to make accurate predictions, recommendations, or classifications. This reality puts developers and founders in direct contact with legal frameworks like GDPR, CCPA, and HIPAA—and raises new questions around fairness, transparency, and security in AI-powered systems.

This section explores the critical privacy and compliance issues that AI-enabled apps must address, along with actionable strategies to secure data pipelines, protect users, and meet regulatory expectations across jurisdictions.

Handling User Data Ethically and Legally

AI systems depend on data to function, but not all data is ethically or legally equal. When you collect user data to train or fine-tune a model, or even just to pass it through an AI API, you are assuming responsibility for how that data is stored, processed, and—most importantly—interpreted.

So what does ethical data handling look like?

First, apps must collect only the data they need, for clearly defined purposes. If you’re building an AI model that recommends products, there is no justification for collecting voice recordings or biometric data. Second, users must explicitly consent to how their data is used, especially if it’s used for training or shared with third-party APIs. That means using opt-ins rather than opt-outs, and offering meaningful control, not just a checkbox buried in terms of service.

Transparency is equally critical. Users should know:

- What data is collected

- How it’s used

- Whether it’s stored or transmitted externally

- Whether AI is involved in decision-making

In short, ethical AI data practices are not just about legal compliance—they’re essential for earning user trust and long-term loyalty.

GDPR, CCPA, and HIPAA Implications for AI Apps

Different regions impose different legal obligations for handling personal data, and AI integration amplifies the complexity. Here’s a breakdown of three key regulations app developers must understand:

- GDPR (General Data Protection Regulation) applies to any app that processes the personal data of people in the EU, regardless of citizenship. Under GDPR, users have the right to be informed about automated decision-making, to access and delete their data, to object to profiling, and not to be subject to solely automated decisions with legal or similarly significant effects. For AI apps, this means disclosing model use in privacy policies, honoring deletion requests, and enabling human review for critical decisions.

- CCPA (California Consumer Privacy Act) grants California residents rights to access, delete, and opt out of the sale or sharing of personal data. If your app uses user behavior data to train recommendation models or personalize experiences, you must disclose this in your privacy notice and honor opt-out requests where they apply.

- HIPAA (Health Insurance Portability and Accountability Act) governs health data in the U.S. Any AI-enabled app handling protected health information (PHI), such as diagnoses, lab results, or therapy notes, must implement strict safeguards. This includes encryption, access controls, and audit logs. If using third-party AI APIs, send PHI only to vendors that support these safeguards and have signed a Business Associate Agreement (BAA) with you.

Non-compliance isn’t theoretical. GDPR fines alone can reach €20 million or 4% of worldwide annual turnover, whichever is higher, and violations can also lead to class-action lawsuits or bans in key markets. So if you’re building a healthcare, fintech, or productivity app with AI-driven insights, compliance must be built into the development lifecycle from day one.

Best Practices for Securing AI APIs and Inference Endpoints

Many apps rely on cloud-hosted AI services, calling APIs to perform tasks like transcription, image labeling, or natural language processing. These endpoints can become attack surfaces if not properly secured.

To protect your AI stack:

- Always use HTTPS to encrypt data in transit.

- Authenticate every API request using API keys, OAuth tokens, or signed requests.

- Implement rate limiting to prevent abuse or brute-force attacks.

- Use input validation and sanitization to protect models from adversarial inputs.

- Avoid logging sensitive data like personally identifiable information (PII) or access tokens in your API call traces.
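
Several of these controls take only a few lines of standard-library Python. The sketch below assumes a hypothetical signing scheme in which clients compute HMAC-SHA256 over `"<timestamp>.<body>"` with a shared secret. It verifies that signature in constant time and rejects stale timestamps to limit replays. It also applies a token-bucket rate limit and performs basic input validation. The secret, the five-minute window, and the 4,000-character cap are placeholder assumptions. In production, an API gateway or framework middleware usually provides these controls.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"load-this-from-a-secrets-manager"  # hypothetical shared secret
MAX_SKEW_SECONDS = 300    # reject requests older than 5 minutes (limits replay)
MAX_INPUT_CHARS = 4000    # hypothetical cap for a text-inference endpoint


def sign(body: bytes, timestamp: int, secret: bytes = SECRET) -> str:
    """HMAC-SHA256 over "<timestamp>.<body>" (hypothetical signing scheme)."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(body: bytes, timestamp: int, signature: str,
           now: Optional[float] = None, secret: bytes = SECRET) -> bool:
    now = time.time() if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False
    expected = sign(body, timestamp, secret)
    return hmac.compare_digest(expected, signature)  # constant-time comparison


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, now: Optional[float] = None):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def validate_text_input(text: object) -> str:
    if not isinstance(text, str):
        raise ValueError("input must be a string")
    text = text.strip()
    if not text or len(text) > MAX_INPUT_CHARS:
        raise ValueError("input is empty or too long")
    # Drop non-printable control characters, keeping newlines and tabs.
    return "".join(ch for ch in text if ch.isprintable() or ch in "\n\t")
```

A real service would typically keep one `TokenBucket` per API key or client ID, for example in a dictionary or a shared store such as Redis. It would also load `SECRET` from a secrets manager rather than from source code.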

If you deploy your own models behind APIs (e.g., using FastAPI or Flask), consider containerizing them with Docker and deploying behind an API gateway with throttling, logging, and authentication built in. For public-facing AI features, it’s also wise to isolate model inference environments from core app infrastructure to contain potential breaches.

Anonymization and Differential Privacy

Even when you’re not directly storing user names or emails, AI systems can infer identity based on behavioral patterns, device data, or writing style. This makes anonymization a necessary—though technically complex—safeguard.

To protect users:

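- Strip identifying fields before storing data used for training, or replace them with keyed hashes. Plain hashes of emails or phone numbers can be reversed by hashing candidate values, and even keyed hashing is pseudonymization, which GDPR still treats as personal data.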

- Remove metadata (timestamps, GPS, user agent strings) where possible.

- Aggregate logs in a way that doesn’t tie actions to a unique session or ID.
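
The steps above can be combined into a small preprocessing function. This is a minimal sketch under stated assumptions: the field names in `DROP_FIELDS` are hypothetical, and timestamps are assumed to be Unix seconds. It drops direct identifiers and replaces the user ID with an HMAC-based pseudonym. Because an attacker cannot compute the pseudonym without the separately stored key, they cannot recover IDs by hashing a list of known emails. It also coarsens timestamps to the hour. The result is pseudonymized rather than fully anonymized data.

```python
import hashlib
import hmac

PSEUDONYM_KEY = b"load-from-a-secrets-manager"  # hypothetical; store apart from the data
DROP_FIELDS = {"email", "name", "phone", "gps", "ip", "user_agent"}  # hypothetical field names


def pseudonymize_id(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    # A keyed hash cannot be reversed by hashing a list of known IDs unless
    # the attacker also has the key (unlike plain SHA-256).
    return hmac.new(key, user_id.encode(), hashlib.sha256).hexdigest()[:16]


def prepare_training_row(event: dict, key: bytes = PSEUDONYM_KEY) -> dict:
    # Copy everything except direct identifiers and the raw user ID.
    row = {k: v for k, v in event.items() if k not in DROP_FIELDS and k != "user_id"}
    if "user_id" in event:
        row["subject"] = pseudonymize_id(event["user_id"], key)
    if "timestamp" in row:
        # Coarsen Unix timestamps to the hour to make sessions harder to link.
        row["timestamp"] = row["timestamp"] - row["timestamp"] % 3600
    return row
```

Rotating or destroying the key later makes the pseudonyms unlinkable to new data, which can be a useful lever when a retention period ends.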

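Differential privacy adds a layer of mathematical protection by injecting calibrated randomness into data or outputs. As a result, any given result is almost equally likely whether or not a specific individual’s data was included in the dataset. A privacy parameter, ε (epsilon), bounds how much one person’s data can change the outcome: a smaller ε means stronger privacy and noisier results. Apple and Google both use differential privacy in their data collection pipelines.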
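
The classic way to achieve this for numeric queries is the Laplace mechanism. It adds noise drawn from a Laplace distribution with scale Δf/ε, where Δf (the sensitivity) is the most that one person’s data can change the true answer. For a simple count, Δf = 1. The sketch below assumes that each user contributes at most one value. It uses only the standard library and draws Laplace noise as the difference of two exponential samples. It illustrates the mechanism; for production use, an audited differential-privacy library is a safer choice.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale`
    # is Laplace-distributed with location 0 and scale `scale`.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values: Iterable, predicate: Callable[[object], bool],
                  epsilon: float, rng: Optional[random.Random] = None) -> float:
    """epsilon-differentially private count via the Laplace mechanism.

    Assumes each user contributes at most one value, so adding or removing
    one user changes the count by at most 1 (sensitivity 1).
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)
```

Each query spends part of the privacy budget. Answering many queries about the same users at ε = 1 each adds up, so real systems track the cumulative ε.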

If you’re building an AI model that learns from app usage patterns or shared user content, incorporating differential privacy mechanisms can help satisfy both compliance and ethical expectations.

Explainability and Auditability

AI features must not only work—they must be understandable. Users increasingly demand to know why an AI recommended a product, flagged a message, or declined a transaction.

To build explainability into your app:

- Use model-agnostic techniques like LIME or SHAP to surface key decision factors.

- Show confidence levels or prediction probabilities.

- Provide natural language explanations alongside AI outputs when possible.

- Allow users to challenge AI decisions or provide corrective feedback.
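
For simple models, you can compute explanations exactly without an extra library. The sketch below assumes a hypothetical logistic-regression fraud model. Each feature’s contribution is its weight times its distance from a baseline, on the log-odds scale. When the baseline is the training-set mean and features are independent, these contributions equal the SHAP values of the log-odds output. The function also returns the predicted probability and a short natural-language explanation. The feature names, weights, and baseline are invented for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def explain_linear(
    weights: Sequence[float],
    bias: float,
    baseline: Sequence[float],
    x: Sequence[float],
    feature_names: Sequence[str],
    top_k: int = 2,
) -> Tuple[float, Dict[str, float], str]:
    """Explain one prediction of a logistic-regression model.

    Each contribution is w_i * (x_i - baseline_i) on the log-odds scale. With
    the baseline set to training-set feature means and independent features,
    these equal the SHAP values of the log-odds output.
    """
    contributions = {
        name: w * (xi - bi)
        for name, w, xi, bi in zip(feature_names, weights, x, baseline)
    }
    logit = bias + sum(w * xi for w, xi in zip(weights, x))
    probability = 1 / (1 + math.exp(-logit))  # model output, not necessarily calibrated
    top: List[Tuple[str, float]] = sorted(
        contributions.items(), key=lambda kv: abs(kv[1]), reverse=True
    )[:top_k]
    reasons = ", ".join(
        f"{name} {'raised' if c > 0 else 'lowered'} the score" for name, c in top
    )
    return probability, contributions, f"Confidence {probability:.0%}. Main factors: {reasons}."


# Hypothetical example: a transaction from a new device on a brand-new account.
prob, contribs, message = explain_linear(
    weights=[2.0, -1.0, 0.5],
    bias=-1.0,
    baseline=[0.1, 0.5, 1.0],
    x=[1.0, 0.0, 1.0],
    feature_names=["new_device", "account_age_years", "amount_ratio"],
)
```

For non-linear models, the same idea generalizes through model-agnostic tools such as SHAP or LIME, at a higher computational cost. Also note that a raw model probability is only a meaningful “confidence level” if the model has been calibrated.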

From a compliance perspective, auditability means you can reproduce and explain a model’s output for a given input—even months later. This is especially important in regulated sectors like finance, healthcare, or insurance, where audit trails may be required by law.

Maintaining reproducible AI logs (with anonymized inputs and versioned models) enables teams to debug issues, train improved models, and demonstrate compliance during audits or legal reviews.
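
A minimal version of such a log entry might look like the sketch below. It assumes the input has already been pseudonymized. It records the model name and version, the parameters that affect the output (decoding settings, seed, or decision threshold), and a SHA-256 hash of the canonicalized input. With that hash, auditors can later confirm that a replayed input matches the original without the log storing raw personal data. The model name and field layout are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def audit_record(
    model_name: str,
    model_version: str,
    params: Dict[str, Any],
    pseudonymous_input: Dict[str, Any],
    output: Any,
    now: Optional[datetime] = None,
) -> Dict[str, Any]:
    """Build one append-only audit entry for an inference call."""
    # Canonical JSON (sorted keys, fixed separators) so identical inputs hash identically.
    canonical = json.dumps(pseudonymous_input, sort_keys=True, separators=(",", ":"))
    now = now or datetime.now(timezone.utc)
    return {
        "timestamp": now.isoformat(),
        "model": model_name,
        "model_version": model_version,  # e.g. a registry tag or weights checksum
        "params": params,                # decoding settings, seed, decision threshold
        "input_sha256": hashlib.sha256(canonical.encode()).hexdigest(),
        "output": output,
    }


def append_to_log(path: str, record: Dict[str, Any]) -> None:
    # JSON Lines: one record per line, opened in append mode.
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
```

Reproducing an output months later also requires keeping the versioned model artifact itself. A version string only helps if the matching weights are still retrievable.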

Privacy, compliance, and security are not barriers to AI innovation—they’re prerequisites for responsible AI deployment. By building systems that honor user rights, secure data flows, and make decisions transparently, developers can create apps that are not only intelligent but also trustworthy. As regulations evolve and public scrutiny increases, these considerations will only grow in importance—especially for founders and product teams aiming to scale AI capabilities in sensitive domains.

Future of AI in Mobile and Web Apps

The integration of AI in mobile and web applications is no longer a competitive edge; it’s becoming foundational. But the next evolution isn’t just about embedding smarter features. It’s about transforming how apps are built, how they behave, and how users interact with them. Five major trends are reshaping the future of app development, all driven by breakthroughs in AI agents, generative models, and large language models.

Rise of AI Agents Replacing Hard-Coded Workflows

Traditional apps operate through predefined logic: click a button, call an API, display a screen. But AI agents are replacing these rigid, deterministic workflows with adaptive, autonomous ones. Instead of hard-coding user journeys, apps will increasingly rely on multi-step agents that interpret user intent, gather data across systems, reason through decisions, and take actions—much like a human assistant would.

This shift means fewer menus and more goal-driven interactions. A healthcare app could have an agent that books appointments, checks lab availability, and follows up with reminders—all dynamically orchestrated based on user preferences and system conditions.
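
Under the hood, most agent designs reduce to a loop. A planner, usually an LLM using tool or function calling, picks the next action. The app executes it, updates the state, and repeats until the goal is met. The sketch below replaces the LLM with a rule-based stub planner so that it runs offline. The tool names, the healthcare data, and the `plan_next_step` logic are hypothetical. The sketch does show two safeguards that are worth keeping in any real agent: an allow-list of executable tools and a hard cap on steps.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]


def check_lab_availability(state: State) -> None:
    state["lab_slot"] = "Tue 09:00"  # stub: would query a lab scheduling system


def book_appointment(state: State) -> None:
    state["booking"] = f"Booked lab test at {state['lab_slot']}"  # stub booking


def schedule_reminder(state: State) -> None:
    state["reminder"] = "Reminder set for the day before"  # stub notification


# Allow-list: only these tools can ever be executed by the agent.
TOOLS: Dict[str, Callable[[State], None]] = {
    "check_lab_availability": check_lab_availability,
    "book_appointment": book_appointment,
    "schedule_reminder": schedule_reminder,
}


def plan_next_step(goal: str, state: State) -> Optional[str]:
    """Stand-in for an LLM planner: returns the next tool name, or None when done."""
    if "lab" in goal and "lab_slot" not in state:
        return "check_lab_availability"
    if "lab_slot" in state and "booking" not in state:
        return "book_appointment"
    if "booking" in state and state.get("wants_reminder") and "reminder" not in state:
        return "schedule_reminder"
    return None


def run_agent(goal: str, state: State, max_steps: int = 5) -> State:
    for _ in range(max_steps):  # hard step limit guards against runaway loops
        tool_name = plan_next_step(goal, state)
        if tool_name is None:
            break
        if tool_name not in TOOLS:
            raise ValueError(f"unknown tool: {tool_name}")
        TOOLS[tool_name](state)
        state.setdefault("trace", []).append(tool_name)  # record the steps taken
    return state
```

The recorded `trace` doubles as an audit trail. When an LLM does the planning, a list of the actions it took is often the most useful thing to show users and reviewers.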

Generative UI and Voice-First Interfaces

As generative AI improves, it’s starting to influence not just content—but interface design. Apps will move toward generative UIs that adapt layouts, visuals, and flows based on user goals. Instead of building static forms, developers can let AI generate interactive components on the fly—tailored to user context.
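
One practical question with generative UIs is what stops a model from emitting a component the app cannot render or should not show. A common approach, assumed here rather than prescribed by any framework, is to have the model output a JSON spec. The app then validates that spec against an allow-list of components, props, and actions before rendering anything. The component types and actions below are hypothetical.

```python
import json
from typing import Dict, List, Set

# Hypothetical allow-list: component type -> permitted props.
ALLOWED_COMPONENTS: Dict[str, Set[str]] = {
    "text": {"content"},
    "button": {"label", "action"},
    "date_picker": {"label", "min_date"},
}
ALLOWED_ACTIONS = {"submit_booking", "cancel"}


def validate_ui_spec(raw: str) -> List[dict]:
    """Parse a model-generated UI spec and reject anything outside the allow-list."""
    spec = json.loads(raw)  # raises ValueError on malformed JSON
    components = spec.get("components") if isinstance(spec, dict) else None
    if not isinstance(components, list):
        raise ValueError("spec must contain a 'components' list")
    validated = []
    for comp in components:
        if not isinstance(comp, dict):
            raise ValueError("each component must be an object")
        ctype = comp.get("type")
        if not isinstance(ctype, str) or ctype not in ALLOWED_COMPONENTS:
            raise ValueError(f"component type not allowed: {ctype!r}")
        props = {k: v for k, v in comp.items() if k != "type"}
        extra = set(props) - ALLOWED_COMPONENTS[ctype]
        if extra:
            raise ValueError(f"unexpected props for {ctype}: {sorted(extra)}")
        if ctype == "button" and props.get("action") not in ALLOWED_ACTIONS:
            raise ValueError("button action not allowed")
        validated.append({"type": ctype, **props})
    return validated
```

Rejecting the whole spec on any violation, rather than silently dropping bad components, lets the app fall back to a static layout. It also makes model misbehavior visible in logs.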

In parallel, voice-first interfaces are becoming more natural. Apps that traditionally relied on taps and swipes will soon offer conversational interfaces as the primary method of interaction. With improved speech recognition and real-time synthesis, the line between voice assistant and app UI will blur.

Real-Time, Always-On AI Copilots

The future of AI in apps is persistent assistance. Imagine an always-on AI layer that observes user behavior, anticipates needs, and suggests actions—without being explicitly invoked. From writing assistance to proactive reminders or financial forecasting, these AI copilots will operate in the background, seamlessly enhancing user workflows.

Multi-Modal AI in Everyday Apps

The next generation of apps won’t just process text or images; they’ll understand and respond across multiple modalities at once. Multi-modal AI will allow users to describe a product with a photo, a voice note, and a caption, then get intelligent responses that combine all three inputs. This is already happening in experimental interfaces and will become mainstream in retail, design, travel, and education.

Role of LLMs in Redefining Product Experiences

Large Language Models (LLMs) like GPT-4, Claude, and Llama 3 are redefining what apps can do. They’re not just tools for text; they’re reasoning engines, interface layers, and knowledge systems. LLMs allow product teams to ship features that were previously impractical: semantic search, contextual recommendations, generative onboarding flows, and domain-specific AI advisors.

In the near future, many apps won’t just contain AI—they’ll be AI. That shift will demand new design paradigms, faster iteration cycles, and a deep focus on transparency and safety.

Conclusion

AI is no longer an enhancement—it’s the engine powering the next generation of mobile and web applications. Whether you’re launching a new app or modernizing an existing one, the ability to integrate intelligent features is what will separate category leaders from feature-chasing competitors. From dynamic personalization and voice assistants to real-time copilots and multi-modal interfaces, AI unlocks experiences that users not only value—but increasingly expect.

But the path from idea to implementation is rarely straightforward. AI integration involves more than selecting the right model or API. It demands strategic alignment, data readiness, secure architecture, regulatory compliance, and an uncompromising focus on user trust. The technical challenges are real—and the business implications even more so.

This is where execution becomes critical. Working with a dedicated AI development partner like Aalpha can help you move beyond experimentation and into high-impact deployment. Aalpha brings deep expertise in building AI-powered mobile and web applications—blending machine learning, automation, and UX strategy into production-ready solutions. Whether you’re implementing a GPT-powered chatbot, an on-device vision model, or an AI agent to streamline workflows, Aalpha helps ensure your product is both technically sound and commercially viable.

If you’re ready to turn your AI ideas into real, user-facing features—now is the time. The opportunity is no longer theoretical. The tools are accessible, the infrastructure is scalable, and the market is receptive. But execution is everything.

To build smarter apps that lead your category—not just follow it—partner with Aalpha and bring intelligent product experiences to life.

FAQs on How to Integrate AI into an App

1. What is AI integration in app development?

AI integration involves embedding machine learning models or intelligent APIs into mobile or web applications to perform tasks such as text analysis, image recognition, smart search, or personalized recommendations. It allows apps to learn from user behavior, make predictions, and automate decisions in real time.

2. Which types of apps benefit the most from AI integration?

AI adds value across a wide range of apps including eCommerce (recommendations, dynamic pricing), healthcare (voice transcription, diagnostics), finance (fraud detection, risk scoring), SaaS (chatbots, smart search), and EdTech (adaptive learning, speech scoring). Any app that uses large data streams or repetitive user interaction can benefit.

3. How do I choose between OpenAI, Google ML Kit, and Hugging Face?

Choose OpenAI for LLMs and generative features, Google ML Kit for mobile on-device vision and language tasks, and Hugging Face when you need full control over model fine-tuning and prefer open-source. Your decision should depend on the use case, latency needs, data privacy, and your team’s ML expertise.

4. Is it better to build my own AI model or use a third-party API?

Use third-party APIs for rapid development, low complexity, and general tasks like summarization or OCR. Build custom models when your data is highly domain-specific or when you need tight control over inference logic, training data, and output behavior. Many successful apps start with APIs, then transition to in-house models at scale.
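
One way to keep that API-to-in-house transition cheap is to hide the model behind a small interface, so the rest of the app never depends on a specific vendor. In the sketch below, `ThirdPartyAPISummarizer` receives an injected `call_api` function rather than calling any real SDK. `InHouseSummarizer` is a naive first-sentence placeholder standing in for a self-hosted model. Both classes and the factory are hypothetical.

```python
from typing import Callable, Optional, Protocol


class Summarizer(Protocol):
    def summarize(self, text: str) -> str: ...


class ThirdPartyAPISummarizer:
    """Wraps a hosted API; `call_api` is an injected function (hypothetical)."""

    def __init__(self, call_api: Callable[[str], str]):
        self._call_api = call_api

    def summarize(self, text: str) -> str:
        return self._call_api(f"Summarize:\n{text}")


class InHouseSummarizer:
    """Placeholder for a self-hosted model: returns the first sentence."""

    def summarize(self, text: str) -> str:
        return text.split(". ")[0].rstrip(".") + "."


def make_summarizer(use_in_house: bool,
                    call_api: Optional[Callable[[str], str]] = None) -> Summarizer:
    # A config flag or feature flag decides which backend serves traffic.
    if use_in_house:
        return InHouseSummarizer()
    if call_api is None:
        raise ValueError("call_api is required for the API-backed summarizer")
    return ThirdPartyAPISummarizer(call_api)
```

Because both backends satisfy the same interface, you can route a share of traffic to the in-house model and compare results before switching over completely.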

5. How do I ensure my AI features comply with GDPR or HIPAA?

Use explicit user consent, limit data collection to only what’s necessary, anonymize or pseudonymize personal data, and use secure APIs. For healthcare apps, ensure any third-party vendors are HIPAA-compliant and provide Business Associate Agreements (BAAs). Always log access, changes, and usage of AI models to maintain auditability.

6. Can AI models run on mobile devices without internet access?

Yes. On-device AI is possible using tools like TensorFlow Lite, Apple Core ML, or Google ML Kit. These frameworks allow you to deploy optimized models directly inside mobile apps, supporting offline functionality with fast response times and greater data privacy. However, model size and complexity must be carefully managed.

7. How much data is needed to train an AI model for my app?

It depends on the complexity of the task. Simple classifiers may need a few thousand labeled samples, while generative models or recommendation systems might require millions. If data is limited, you can start with pre-trained models and fine-tune them using transfer learning or opt for zero-shot/few-shot APIs.

8. What are the biggest challenges in AI integration?

Common challenges include ensuring model accuracy in real-world settings, handling user data securely, dealing with mobile performance constraints, preventing over-automation that harms UX, and debugging unpredictable outputs from generative models. Robust testing, explainability tools, and feedback loops are essential to address these issues.

9. How can I monitor and improve AI performance post-launch?

Use logging and analytics to track model predictions, latency, and failure rates. Collect user feedback on AI outputs. Implement A/B testing to compare different models or prompts. Over time, retrain your models using real-world usage data to reduce drift and improve personalization.
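
A lightweight starting point, sketched here with the standard library under the assumption that each logged call has `ok` and `latency_ms` fields, is to summarize failure rate and latency percentiles. Drift can be tracked with the Population Stability Index (PSI), which compares the binned distribution of a model input or prediction score between a baseline period and the current one. A common rule of thumb treats PSI values above roughly 0.2 as a shift worth investigating, but the right threshold depends on your data.

```python
import math
import statistics
from typing import Dict, List, Sequence


def summarize_calls(calls: List[dict]) -> Dict[str, float]:
    """Failure rate and latency percentiles from logged inference calls."""
    if not calls:
        raise ValueError("no calls to summarize")
    latencies = [c["latency_ms"] for c in calls if c["ok"]]
    if len(latencies) < 2:
        raise ValueError("need at least two successful calls for percentiles")
    return {
        "failure_rate": 1 - len(latencies) / len(calls),
        "p50_ms": statistics.median(latencies),
        "p95_ms": statistics.quantiles(latencies, n=20)[-1],  # 19 cut points; last is p95
    }


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin defined by sorted edges (len(edges) + 1 bins)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(1 for edge in edges if v >= edge)] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current distribution."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total
```

Computing the baseline bins once at launch and recomputing PSI on a schedule, for example daily, gives an early signal that retraining may be due.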

10. Can Aalpha help with integrating AI into my app?

Yes. Aalpha specializes in building and integrating AI-powered features into mobile and web applications. From LLM-driven chatbots and recommendation engines to edge-optimized vision models, Aalpha provides end-to-end strategy, implementation, and post-launch support.

Build smarter apps with AI—faster, safer, and tailored to your business goals. Partner with Aalpha to turn your AI vision into a production-ready solution.