1. Introduction to Multi-Agent AI Systems

Multi-agent AI systems (MAS) represent a pivotal shift in artificial intelligence: from isolated, single-task models to interconnected, autonomous agents collaborating (or competing) to solve complex, distributed problems. These systems mimic the dynamics of real-world environments, where no single entity holds complete knowledge or control.

This section lays the groundwork for understanding MAS by defining the core principles, distinguishing them from single-agent models, and showcasing their growing role across industries such as logistics, healthcare, finance, and autonomous systems.

1.1 What Is a Multi-Agent AI System?

A multi-agent system is a collection of autonomous, interacting agents operating within a shared environment. Each agent has its own:

- Perception: Ability to observe and interpret data from the environment

- Decision-making capability: Often based on goals, rules, or learning models

- Actions: Means of affecting the environment or communicating with other agents

Unlike traditional AI systems designed for singular, monolithic tasks, MAS are inherently modular, distributed, and decentralized. The emphasis is on emergent behavior — where the overall system intelligence emerges from the interactions among individual agents.
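As a rough illustration of the perceive, decide, act cycle, the sketch below runs two toy agents against one shared environment. The `ThermostatAgent` class, its thresholds, and the `run` helper are hypothetical names invented for this example, not part of any framework.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent: perceives a temperature, decides, and acts on a shared environment."""
    name: str
    target: float
    log: list = field(default_factory=list)

    def perceive(self, env: dict) -> float:
        # Perception: read the part of the environment this agent can observe.
        return env["temperature"]

    def decide(self, temperature: float) -> str:
        # Decision-making: a simple rule; a learned policy could replace it.
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, env: dict, action: str) -> None:
        # Action: change the shared environment and record what was done.
        env["temperature"] += {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        self.log.append(action)


def run(env: dict, agents: list, steps: int) -> dict:
    for _ in range(steps):
        for agent in agents:
            agent.act(env, agent.decide(agent.perceive(env)))
    return env


agents = [ThermostatAgent("a", 20.0), ThermostatAgent("b", 21.0)]
env = run({"temperature": 17.0}, agents, steps=10)
```

Because the two agents hold different targets, they end up working against each other once the temperature nears 20 to 21 degrees: one keeps cooling while the other keeps heating. Neither agent was written to do that, which is a small-scale example of system behavior arising from interaction.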

1.2 Core Characteristics of MAS

Key characteristics that define multi-agent systems include:

- Autonomy: Each agent can make decisions without centralized control.

- Decentralization: No global controller; decision-making is distributed.

- Collaboration (or Competition): Agents may cooperate to achieve global objectives or compete in adversarial settings.

- Scalability: New agents can be added without rewriting the entire system.

- Adaptability: Agents can learn from interactions with other agents and the environment.

These features make MAS particularly suitable for dynamic and uncertain environments.

1.3 Single-Agent vs Multi-Agent Systems

| Feature | Single-Agent AI | Multi-Agent AI |
| --- | --- | --- |
| Decision Source | Centralized | Distributed |
| Environment Model | Often static or well-defined | Dynamic and evolving |
| Coordination | Not required | Core requirement |
| Scalability | Limited | Modular and scalable |
| Examples | ChatGPT, image classifiers, recommendation engines | Swarm robotics, decentralized trading bots, smart grid control |

In real-world scenarios, especially where problems involve multiple actors, distributed control, or real-time decision-making under uncertainty, MAS offer a more natural and efficient modeling paradigm.

1.4 Types of Agents in MAS

Agents in MAS can take various forms depending on their function and design complexity:

- Reactive Agents: Respond to environmental stimuli without internal models (e.g., rule-based bots).

- Deliberative (Cognitive) Agents: Plan ahead using symbolic reasoning or goal-based systems.

- Learning Agents: Adapt using data-driven models such as reinforcement learning or supervised learning.

- Hybrid Agents: Combine reactive responses with higher-level planning.

- LLM-Based Agents: Newer class using large language models (e.g., GPT-4, Claude, Gemini) to interpret, reason, and act autonomously.

The integration of LLMs as agents is a growing trend, enabling natural language reasoning, task decomposition, and memory within MAS.

1.5 MAS Across Industry Use Cases

Multi-agent systems are already being deployed in production across diverse domains:

| Industry | Use Case |
| --- | --- |
| Logistics | Fleet coordination, dynamic delivery routing (e.g., Amazon, UPS) |
| Finance | Autonomous trading bots interacting in decentralized finance (DeFi) |
| Healthcare | Collaborative diagnostic systems, hospital resource scheduling |
| Smart Grid | Distributed energy resource management and load balancing |
| Autonomous Vehicles | Multi-vehicle coordination and swarm navigation |
| Manufacturing | Robotic assembly line optimization via task-delegating agents |

The advantage of MAS lies in handling complexity at scale, such as coordinating 100+ agents in real time with no single point of failure.

1.6 MAS in the Age of LLMs and Foundation Models

The emergence of LLM-based agent frameworks (e.g., AutoGen, LangGraph, CrewAI) has reinvigorated MAS adoption by enabling:

- Natural language communication between agents

- Hierarchical planning and reasoning

- Access to domain knowledge without rule-coding

- Integration with APIs and external systems (e.g., databases, CRMs, sensors)

This paradigm enables agents to collaborate in real time on goals like generating reports, conducting research, automating workflows, or even debugging software — without writing bespoke logic for each function.

Example: A research assistant MAS could include an LLM-based literature summarizer, a data fetcher agent, and a reference validator — each communicating over a central memory layer or vector database.

1.7 Advantages and Limitations

Advantages:

- Scalability across systems and geographies

- Modular development and debugging

- Resilience to single-point failures

- Real-time responsiveness in dynamic environments

- Seamless collaboration between AI and humans

Limitations:

- Communication overhead

- Coordination complexity

- Debugging emergent behaviors

- Increased cost and time to design robust systems

- Vulnerability to adversarial agents in open systems

1.8 Why Now? The Timing for MAS Adoption

Three technological forces are aligning to make MAS practical for startups and enterprises:

- Ubiquity of APIs and microservices: Easier agent-to-system integrations

- Foundation models as zero-shot planners: Reduced need for hand-coded logic

- Edge computing + cloud orchestration: Feasible to deploy agents across networks and devices

According to a report by MarketsandMarkets, the global multi-agent systems market is expected to grow from $2.2 billion in 2023 to $5.9 billion by 2028, at a CAGR of 21.4%.

1.9 Summary: A New AI Architecture Paradigm

Multi-agent AI systems aren’t just a technical trend — they represent a structural evolution in how we build intelligent applications. From autonomous drones to AI research assistants, MAS are enabling modular, intelligent, and distributed problem-solving architectures that align better with real-world complexity.

This guide walks you through how to build such systems from the ground up — starting from system architecture, agent communication, and role definition, all the way to cost estimation, deployment, and security.

2. Market Size, Trends & Growth Projections (2025–2030)

As artificial intelligence continues to evolve from narrow task-based models to autonomous, collaborative agents, multi-agent systems (MAS) are poised to become a foundational component in large-scale AI deployments. This section provides a comprehensive view of the current market size, future projections, industry adoption trends, investment momentum, and the growing MAS ecosystem.

2.1 Global Market Size and Forecast

The global market for multi-agent systems is undergoing rapid transformation, driven by advancements in reinforcement learning, distributed AI, and foundation model integration.

According to MarketsandMarkets, the multi-agent systems market was valued at USD 2.2 billion in 2023, and is projected to reach USD 5.9 billion by 2028, growing at a compound annual growth rate (CAGR) of 21.4% between 2023 and 2028.
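As a quick sanity check on the growth rate, the compound annual growth rate can be recomputed from the two endpoint figures. This is a minimal sketch using only the rounded values quoted above; the `cagr` helper is written for this example.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: USD 2.2B (2023) to USD 5.9B (2028), i.e. five years.
implied = cagr(2.2, 5.9, years=5)
print(f"Implied CAGR: {implied:.1%}")
```

The rounded endpoints imply roughly 21.8%, which is close to the reported 21.4%; the small gap is presumably due to rounding in the published figures.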

Similarly, a report from Allied Market Research estimates that the broader multi-agent and swarm intelligence market will surpass USD 7 billion by 2030, reflecting accelerated interest from logistics, robotics, and defense sectors.

Key Market Drivers:

- Increased adoption of AI-powered automation across sectors

- Maturity of cloud-native and edge computing infrastructure

- The emergence of LLM-driven autonomous agents

- Growing use of decentralized systems in IoT and smart infrastructure

2.2 Industry-Wise Adoption Trends

Multi-agent systems are uniquely suited to industries where problems:

- Are distributed across environments or organizations

- Require multiple intelligent actors

- Involve real-time collaboration, optimization, or competition

Healthcare

In healthcare, MAS are being applied to hospital resource allocation, multi-modal diagnosis, collaborative robotics in surgeries, and telemedicine triage systems. For example, agents coordinate to manage patient flow, schedule operating rooms, or provide second opinions through AI diagnostic assistants.

Case Reference: IBM Watson’s healthcare division experimented with agent-based diagnostic assistants that collaborate across specialties, improving diagnostic accuracy and throughput in hospitals.

Autonomous Vehicles and Smart Transportation

MAS enable vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication, essential for autonomous traffic systems, platooning, and dynamic route management. Companies like Waymo, Tesla, and NVIDIA are integrating multi-agent logic for decentralized coordination between vehicles and infrastructure.

Supply Chain and Logistics

Amazon, UPS, and DHL use MAS for real-time fleet routing, warehouse robotics, and delivery optimization. In smart warehouses, fleets of autonomous robots communicate to distribute tasks dynamically, reducing idle time and improving throughput.

Internet of Things (IoT)

With over 29 billion connected devices projected by 2030 (Statista), MAS play a critical role in managing device-to-device communication, task delegation, and real-time data fusion in smart homes, factories, and cities.

Cybersecurity

MAS are being deployed in automated intrusion detection, vulnerability scanning, and adaptive response systems. Agents detect anomalies, communicate alerts, and isolate threats autonomously, enhancing system resilience.

2.3 Investment and Funding Momentum

Venture capital activity in multi-agent systems and agent-based AI is growing in tandem with LLM infrastructure and autonomous agent platforms. From 2020 to 2024, funding has significantly shifted from core NLP to LLM-based agent orchestration platforms, MAS-based robotics, and distributed AI infrastructure.

Notable Funding Highlights:

- LangChain raised $25 million Series A in 2023 for its agent-enabled LLM orchestration stack.

- OpenAI has reportedly committed over $100 million to research on agentic reasoning and multi-agent collaboration involving GPT-based architectures.

- The AutoGPT and AutoGen ecosystems have attracted well over 100,000 GitHub stars, a large contributor base, and corporate interest despite being open-source-first projects.

Emerging Venture-Backed Startups:

- CrewAI – Agent collaboration framework for LLMs

- Adept AI – Training AI agents to use software tools like humans

- Fixie.ai – Agent-based automation for SaaS and enterprise tasks

- Imbue – Training robust general-purpose AI agents with reinforcement learning

These investments reflect a shift toward task-completing, autonomous systems that require coordination between multiple agents rather than a single monolithic model.

2.4 Key Players and Ecosystem Overview

The MAS ecosystem spans foundational research labs, software frameworks, open-source communities, and platform-as-a-service providers.

Major Ecosystem Players:

| Category | Key Names |
| --- | --- |
| Research & Academia | MIT CSAIL, Stanford AI Lab, Microsoft Research, DeepMind |
| Frameworks | SPADE, Ray, LangChain, CrewAI, AutoGen, PettingZoo |
| Enterprise & Cloud | Google DeepMind, OpenAI, AWS, NVIDIA, Microsoft Azure |
| Robotics | Boston Dynamics, Fetch Robotics, Zebra Technologies |
| Infrastructure | Hugging Face, Weights & Biases, Prefect, Airbyte |

Notable Frameworks for MAS:

- SPADE (Smart Python Agent Development Environment): For agent-based simulations

- Ray + RLlib: Distributed learning and multi-agent RL

- LangGraph: Graph-based, stateful agent orchestration with LLMs

- AutoGen: Enabling inter-agent conversation using OpenAI or Anthropic models

- CrewAI: Workflow-driven multi-agent execution platform

Community and Open Source

GitHub repositories such as AutoGPT, CrewAI, and LangChain had collectively passed 100,000 stars by 2024, indicating vibrant developer engagement and community adoption.

2.5 Summary and Strategic Implications

The market trajectory for multi-agent systems aligns with the broader AI trend toward modular, autonomous, and context-aware intelligence. As traditional AI models hit operational ceilings in real-time, complex, distributed environments, MAS offer a flexible, scalable alternative.

With billion-dollar verticals—like healthcare, mobility, finance, and defense—adopting MAS frameworks, and with LLM-based agents becoming accessible to even small teams, the market is transitioning from research-heavy experimentation to real-world deployment.

Startups and enterprises that understand the architecture, costs, and coordination challenges early will be better positioned to build high-impact, scalable MAS solutions over the 2025–2030 period.

Sources

- MarketsandMarkets, Multi-Agent Systems Market Report, 2023

- Allied Market Research, Swarm Intelligence and Multi-Agent Systems Industry Forecast, 2023

- Statista, IoT Connected Devices Worldwide 2019–2030, 2024

3. Core Concepts of Multi-Agent Systems

A multi-agent system (MAS) is a computational system composed of multiple intelligent agents that interact, collaborate, or compete within a shared environment to achieve individual or collective goals. Unlike traditional monolithic AI architectures, MAS offer decentralized intelligence, which makes them better suited for complex, dynamic, and distributed problems.

This section breaks down the foundational concepts behind MAS architecture, agent design, interaction models, and decision-making strategies.

3.1 What Is an Agent in AI?

In the context of AI, an agent is an autonomous entity capable of perceiving its environment through sensors, reasoning about its goals, and performing actions through actuators or outputs.

Characteristics of an AI Agent:

- Autonomy: Operates without direct human intervention.

- Social Ability: Can interact with other agents or humans using defined protocols.

- Reactivity: Perceives and responds to changes in its environment in real time.

- Proactiveness: Takes initiative to fulfill objectives rather than only reacting to events.

Example:

In an autonomous warehouse, one agent might be responsible for route planning, another for package picking, and a third for load optimization. Each agent makes decisions based on its own goals and inputs, while coordinating with others.

3.2 Core Components of a Multi-Agent System

A fully functional MAS involves the interaction of several components working in synchrony. These include:

| Component | Description |
| --- | --- |
| Agents | Autonomous decision-makers with goals, beliefs, and capabilities. |
| Environment | The shared digital or physical space in which agents operate. |
| Communication Protocols | Mechanisms (e.g., FIPA-ACL, JSON-RPC) agents use to exchange information. |
| Coordination & Cooperation Mechanisms | Rules and logic for negotiation, delegation, and conflict resolution. |
| Shared Knowledge Base | Memory or data accessible to multiple agents (e.g., a central LLM, vector DB, or distributed ledger). |

A high-level MAS architecture diagram may include:

- Agent 1 (Goal-Oriented) ←→ Message Bus ←→ Agent 2 (Reactive)

- Shared Data Store ←→ Environment Simulator

- Controller / Orchestrator for governance and policy enforcement (optional)
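To make the layout above more concrete, here is a minimal in-process sketch of two agents connected through a message bus and a shared store. The `MessageBus` class, the `orders` topic, and the priority threshold are assumptions made for this example; a production system would more likely sit on a real broker.

```python
from collections import defaultdict
from typing import Callable


class MessageBus:
    """In-process publish/subscribe bus; a stand-in for a real broker."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        # Deliver synchronously and return how many handlers received the message.
        for handler in self._subscribers[topic]:
            handler(message)
        return len(self._subscribers[topic])


shared_store: dict[str, list[dict]] = {"orders": []}   # plays the shared data store

bus = MessageBus()
# Reactive agent: records every order it hears about in the shared store.
bus.subscribe("orders", lambda msg: shared_store["orders"].append(msg))
# Goal-oriented agent: only keeps orders above its (hypothetical) priority threshold.
urgent: list[dict] = []
bus.subscribe("orders", lambda msg: urgent.append(msg) if msg["priority"] > 5 else None)

delivered = bus.publish("orders", {"id": 1, "priority": 9})
bus.publish("orders", {"id": 2, "priority": 2})
```

Neither agent holds a reference to the other; they only share a topic name. That decoupling is what lets agents be added or replaced without touching the rest of the system.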

3.3 Agent Types and Roles

There is no one-size-fits-all agent. Depending on their internal architecture and task specialization, agents fall into various categories:

1. Reactive Agents

- No internal model of the environment.

- Respond immediately to stimuli.

- Suitable for real-time systems (e.g., swarm robotics).

- Example: Obstacle-avoiding drone agents.

2. Deliberative (Goal-Based) Agents

- Use symbolic reasoning to plan a sequence of actions.

- Maintain a world model and belief-desire-intention (BDI) framework.

- Example: Travel assistant agent planning an optimized route.

3. Learning Agents

- Improve performance over time using reinforcement learning or supervised learning.

- Example: A trading agent learning optimal strategies in a simulated market.

4. Utility-Based Agents

- Make decisions based on maximizing a utility function.

- Useful in economic simulations, smart grid optimization, etc.

5. Hybrid Agents

- Combine multiple architectures (e.g., reactive + deliberative).

- Common in enterprise MAS to balance speed and reasoning.

3.4 Communication and Coordination Mechanisms

Agent communication is fundamental in MAS. It occurs through messages passed between agents using structured languages, often following predefined protocols.

Agent Communication Languages (ACLs)

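- FIPA-ACL: Specified by the Foundation for Intelligent Physical Agents (FIPA), which is now a standards committee of the IEEE Computer Society.

- KQML (Knowledge Query and Manipulation Language): An earlier performative-based message format and protocol that is independent of the content language.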

- Custom JSON/XML Schemas: Used in modern LLM-based systems.
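A custom JSON schema for agent messages might look like the sketch below. The field names are loosely modeled on common FIPA-ACL message parameters (performative, sender, receiver, content, conversation ID), but the `AgentMessage` class itself is a hypothetical structure for illustration, not a standard-compliant implementation.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class AgentMessage:
    performative: str      # communicative act, e.g. "request", "inform", "propose"
    sender: str
    receiver: str
    content: dict
    conversation_id: str   # lets agents correlate replies with requests

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "AgentMessage":
        return cls(**json.loads(raw))


request = AgentMessage("request", "planner", "fetcher", {"query": "battery status"}, "conv-42")
wire = request.to_json()

# The reply swaps sender and receiver and reuses the conversation ID.
reply = AgentMessage("inform", request.receiver, request.sender,
                     {"battery": 0.81}, request.conversation_id)
```

Keeping the performative separate from the content means a receiving agent can decide how to treat a message (answer it, evaluate it as a bid, ignore it) before parsing the payload.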

Coordination Models

- Centralized Coordination: One master agent or controller assigns tasks.

- Decentralized Coordination: Agents share status updates and negotiate decisions.

- Market-Based Models: Agents “bid” for tasks using digital currencies or points.

- Contract Net Protocol: A manager agent sends requests, and contractor agents respond with bids or refusals.

Example:

In an autonomous drone delivery network, agents communicate ETA and battery status to dynamically reallocate routes in real time.
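The Contract Net Protocol can be sketched in a few lines using the drone scenario. In this simplified version the manager collects one bid per contractor and awards the task to the lowest ETA; the `Drone` class, the battery threshold, and the sample fleet are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Drone:
    name: str
    battery: float          # fraction of charge remaining, 0.0 to 1.0
    eta_minutes: float      # estimated time to reach the pickup point

    def bid(self, min_battery: float) -> Optional[float]:
        # Refuse (return None) when the battery is too low; otherwise bid the ETA.
        return self.eta_minutes if self.battery >= min_battery else None


def award_task(contractors: list[Drone], min_battery: float = 0.3) -> Optional[Drone]:
    """Manager side: call for proposals, collect bids, award to the lowest bid."""
    bids = [(d.bid(min_battery), d) for d in contractors]
    valid = [(cost, d) for cost, d in bids if cost is not None]
    if not valid:
        return None          # every contractor refused
    return min(valid, key=lambda pair: pair[0])[1]


fleet = [Drone("d1", 0.9, 12.0), Drone("d2", 0.2, 4.0), Drone("d3", 0.6, 7.5)]
winner = award_task(fleet)
```

Here `d2` is closest but refuses because of its low battery, so the task goes to `d3`. A fuller implementation would also handle bid deadlines and explicit accept or reject messages back to each contractor.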

3.5 Environment Modeling

Agents exist within an environment that may be physical (e.g., smart factories) or virtual (e.g., software marketplaces). The environment impacts sensing, actuation, and decision-making.

Environment Attributes:

- Fully Observable vs. Partially Observable: Can agents perceive the full state?

- Deterministic vs. Stochastic: Are outcomes predictable?

- Discrete vs. Continuous: Is time/space divided into steps?

- Static vs. Dynamic: Does the environment change independently of agents?

Simulation frameworks such as Gymnasium (the maintained successor to OpenAI Gym), PettingZoo, and Unity ML-Agents are commonly used to model MAS environments for testing and training.

3.6 Agent Interaction Patterns

The interaction pattern among agents defines whether they act independently, competitively, or collaboratively.

1. Cooperative Systems

- Agents work together to optimize a shared goal.

- Example: Multi-agent systems in traffic light control optimizing overall traffic flow.

2. Competitive Systems

- Agents pursue self-interest, often with conflicting goals.

- Used in simulation of economic behavior or adversarial games.

3. Mixed-Motive Systems

- Agents may cooperate or compete depending on context.

- Example: Autonomous vehicles sharing a road (cooperate in lane merging, compete for space).

3.7 Task Allocation and Decision Making

In practical MAS implementations, agents must resolve:

- Who does what? → Role allocation

- When to act? → Scheduling

- How to act? → Planning

- With whom to cooperate? → Partner selection

Common decision strategies:

- Voting protocols

- Utility-based optimization

- Reinforcement learning with shared reward functions

- Reputation-based systems (trust models)
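Of the strategies listed, a voting protocol is the simplest to sketch. The example below uses plurality voting with an alphabetical tie-break; the tie-break rule and the route names are arbitrary choices for this illustration.

```python
from collections import Counter


def plurality_vote(ballots: dict[str, str]) -> str:
    """Return the option with the most votes; ties go to the alphabetically first option."""
    counts = Counter(ballots.values())
    top = max(counts.values())
    return min(option for option, n in counts.items() if n == top)


ballots = {
    "agent_a": "route_1",
    "agent_b": "route_2",
    "agent_c": "route_1",
    "agent_d": "route_3",
}
decision = plurality_vote(ballots)
```

A deterministic tie-break matters more than which rule is chosen: every agent that tallies the same ballots should reach the same decision without another round of messages.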

3.8 LLMs and MAS: A Modern Integration

Large Language Models (LLMs) have added a new layer of capabilities to MAS design by acting in one of three roles:

- Cognitive Agents: LLMs serve as agents with internal reasoning and memory (e.g., AutoGPT).

- Knowledge Bases: A central LLM is queried by simpler agents via APIs.

- Controller/Orchestrator: LLM used to interpret goals and route tasks to specialized sub-agents.

Modern orchestration frameworks like AutoGen, LangGraph, and CrewAI support agent teaming via prompt engineering, state machines, and shared tools.
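The controller/orchestrator role can be illustrated without any particular framework. In the sketch below, `fake_llm_classify` is a keyword-matching stand-in for a real LLM call, and the sub-agent names and outputs are hypothetical; none of this reflects the API of AutoGen, LangGraph, or CrewAI.

```python
from typing import Callable


def fake_llm_classify(goal: str) -> str:
    """Stand-in for an LLM call: picks a route label by keyword."""
    text = goal.lower()
    if "summar" in text:
        return "summarizer"
    if "fetch" in text or "download" in text:
        return "fetcher"
    return "generalist"


# Hypothetical specialized sub-agents, each reduced to a single function.
SUB_AGENTS: dict[str, Callable[[str], str]] = {
    "summarizer": lambda goal: f"[summary for: {goal}]",
    "fetcher": lambda goal: f"[data for: {goal}]",
    "generalist": lambda goal: f"[answer for: {goal}]",
}


def orchestrate(goal: str, classify: Callable[[str], str] = fake_llm_classify) -> tuple[str, str]:
    route = classify(goal)
    # Fall back to the generalist if the classifier returns an unknown label.
    if route not in SUB_AGENTS:
        route = "generalist"
    return route, SUB_AGENTS[route](goal)


route, result = orchestrate("Summarize the latest MARL papers")
```

Passing the classifier in as a parameter keeps the routing logic testable: the same `orchestrate` function works with a deterministic stub in tests and a model-backed classifier in production.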

3.9 Summary: Why Understanding Core Concepts Matters

Before building or scaling any MAS architecture, it’s essential to understand how agents function, communicate, and collaborate. These systems go beyond single-model AI — they embody distributed reasoning, negotiation, learning, and adaptability. Whether you’re building smart factories, collaborative LLM agents, or autonomous supply chains, these principles are the building blocks of production-grade MAS.

4. Technical Foundations & Prerequisites

Building a robust multi-agent AI system demands interdisciplinary expertise across artificial intelligence, distributed computing, and complex systems theory. This section outlines the essential skills, tools, libraries, and infrastructure requirements you or your team must be equipped with before initiating development.

4.1 Required Skills & Knowledge Domains

Developing a multi-agent AI system is not a task suited to general-purpose developers. It requires depth in several overlapping domains:

1. Machine Learning (ML)

- Understanding of supervised and unsupervised learning.

- Experience with model training pipelines (e.g., PyTorch, TensorFlow).

- Model evaluation techniques, especially for collaborative or competitive agents.

2. Reinforcement Learning (RL)

- Key for agents that learn via trial-and-error in dynamic environments.

- Familiarity with:

- Policy Gradient Methods

- Q-Learning

- Multi-Agent Deep Deterministic Policy Gradient (MADDPG)

- Libraries: OpenAI Gym, Stable-Baselines3, RLlib (Ray)

3. Natural Language Processing (NLP)

- Required when agents interface via LLMs, parse unstructured data, or generate text.

- Skills in prompt engineering, tokenization, embeddings, and language model fine-tuning.

4. Agent-Based Modeling (ABM)

- Crucial for designing decentralized behaviors, coordination, negotiation, and emergent phenomena.

- Knowledge of discrete event simulation, behavior trees, finite state machines.

5. Game Theory

- Especially relevant in competitive or cooperative settings.

- Concepts like Nash equilibrium, utility maximization, cooperative bargaining, and auction theory help design strategic agents.
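To ground the idea of a Nash equilibrium, the sketch below brute-forces the pure-strategy equilibria of a two-player game. The payoff values are the textbook Prisoner's Dilemma numbers, chosen here as an example; the function name is also just for this illustration.

```python
from itertools import product

# PAYOFFS[(row_action, col_action)] = (row_payoff, col_payoff)
ACTIONS = ("cooperate", "defect")
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}


def pure_nash_equilibria(actions, payoffs):
    """Return action pairs where neither player gains by deviating unilaterally."""
    equilibria = []
    for row, col in product(actions, repeat=2):
        row_best = all(payoffs[(row, col)][0] >= payoffs[(alt, col)][0] for alt in actions)
        col_best = all(payoffs[(row, col)][1] >= payoffs[(row, alt)][1] for alt in actions)
        if row_best and col_best:
            equilibria.append((row, col))
    return equilibria


equilibria = pure_nash_equilibria(ACTIONS, PAYOFFS)
```

The only equilibrium is mutual defection, even though mutual cooperation pays both players more. That gap between individually rational and collectively good outcomes is the core design problem in mixed-motive agent systems.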

6. Distributed Systems

- Agents often run concurrently, communicate asynchronously, and share state across environments.

- Skills in RPC protocols, containerization, event-driven architecture, and resilience patterns (e.g., circuit breakers).

4.2 Tools, Frameworks, and Libraries

Your tech stack will depend on the problem domain—simulation, real-world deployment, or LLM-orchestrated workflows. Below is a curated list across categories.

Agent Communication & Behavior Modeling

| Tool | Description |
| --- | --- |
| SPADE | Python-based FIPA-compliant framework for building autonomous agent systems. |
| JADE | Mature Java agent platform supporting messaging, mobility, and coordination. |
| MESA | ABM framework in Python, often used in academic and simulation research. |
| DEAP | Library for evolutionary computation (co-evolution strategies in agents). |

Multi-Agent Reinforcement Learning

| Tool | Description |
| --- | --- |
| PettingZoo | Standardized API for MARL environments. Companion to Gymnasium. |
| RLlib (Ray) | Scalable RL framework with support for multi-agent training. |
| OpenAI Gym | Widely used for prototyping environments and evaluating agent logic. |

LLM-Oriented Multi-Agent Frameworks

| Tool | Description |
| --- | --- |
| LangChain / LangGraph | Tool orchestration, memory management, and LLM-agent chaining. |
| AutoGen | Multi-agent LLM framework by Microsoft for complex agent coordination. |
| CrewAI | Workflow-based, human-like team of LLM agents with distinct roles. |
| Semantic Kernel | Lightweight orchestration of semantic functions and connectors. |

Utilities

- Vector DBs: Pinecone, Weaviate, ChromaDB (for long-term memory)

- Prompt optimization: LMQL, PromptLayer

- Containerization & Deployment: Docker, Kubernetes, FastAPI

4.3 Hardware & Infrastructure Considerations

While much of AI development can be cloud-hosted, large-scale or real-time multi-agent systems may require optimized compute, storage, and networking strategies.

1. GPU & Compute Requirements

- Model Training: Requires multi-GPU setups or distributed compute clusters.

- Inference: For LLM-based agents, GPU-backed inference nodes reduce latency.

- Parallelism: Ensure support for asynchronous task execution (async I/O, multiprocessing, Ray).
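For the parallelism point, a minimal `asyncio` sketch shows two agents draining their own inboxes concurrently. The agent names, the round-robin dispatch, and the doubling "work" are placeholders invented for this example; real agents would await network or model calls instead.

```python
import asyncio


async def worker(name: str, inbox: asyncio.Queue, results: list) -> None:
    # Each agent drains its own inbox until it receives the None sentinel.
    while True:
        task = await inbox.get()
        if task is None:
            break
        await asyncio.sleep(0)          # placeholder for I/O-bound work (API call, DB query)
        results.append((name, task * 2))


async def main() -> list:
    results: list = []
    inboxes = {name: asyncio.Queue() for name in ("agent_a", "agent_b")}
    workers = [asyncio.create_task(worker(n, q, results)) for n, q in inboxes.items()]
    for i in range(4):
        # Round-robin dispatch of four tasks across the two agents.
        await inboxes["agent_a" if i % 2 == 0 else "agent_b"].put(i)
    for q in inboxes.values():
        await q.put(None)               # tell each agent to shut down
    await asyncio.gather(*workers)
    return sorted(results)


results = asyncio.run(main())
```

Async I/O suits agents that mostly wait on external calls. CPU-bound agents (for example, local model inference) would need multiprocessing or a distributed runtime such as Ray instead.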

Typical Configuration for Mid-Scale MAS:

- 2–4 NVIDIA A100 or V100 GPUs

- 64–128GB RAM

- NVMe SSDs (for low-latency memory swaps)

- 10 Gbps networking (for distributed agent comms)

2. Edge AI (On-Device Inference)

- Relevant for robotics, drones, or sensor-based agents.

- Use NVIDIA Jetson, Intel Movidius, or Coral TPUs for lightweight models.

- Requires on-device inferencing and fault-tolerant coordination logic.

3. Storage & State Management

- Redis or Memcached for short-term memory/state sync

- Vector DBs (e.g., Chroma, Pinecone) for retrieval-based agent context

- PostgreSQL or MongoDB for metadata persistence

4. Networking & Communication

- Use Redis Pub/Sub, NATS, or Kafka for fast, reliable agent message passing.

- WebSocket or gRPC for duplex streams in LLM-agent interfaces.

- Consider OpenTelemetry for distributed tracing in observability stacks.

4.4 Security & Compliance Considerations

- Agent Identity Management: OAuth tokens, JWTs, agent registry

- Data Encryption: In transit (TLS) and at rest (AES-256)

- Auditability: Log all agent decisions and communications

- Compliance: HIPAA, GDPR, or SOC2 if agents interact with sensitive data
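As one possible approach to agent identity, messages can be signed with a per-agent secret so receivers can reject forged or altered payloads. The sketch below uses HMAC-SHA256 from the Python standard library rather than OAuth or JWTs, and the `AGENT_KEYS` registry with hard-coded secrets is purely illustrative.

```python
import hashlib
import hmac
import json

# Hypothetical per-agent secrets; a real system would load these from a secrets manager.
AGENT_KEYS = {"planner": b"planner-secret", "fetcher": b"fetcher-secret"}


def sign(sender: str, payload: dict) -> dict:
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(AGENT_KEYS[sender], body, hashlib.sha256).hexdigest()
    return {"sender": sender, "payload": payload, "signature": tag}


def verify(message: dict) -> bool:
    key = AGENT_KEYS.get(message["sender"])
    if key is None:
        return False                       # unknown agent: reject
    body = json.dumps(message["payload"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, message["signature"])


signed = sign("planner", {"action": "fetch", "target": "inventory"})
tampered = {**signed, "payload": {"action": "delete", "target": "inventory"}}
```

Here `verify(signed)` succeeds while `verify(tampered)` fails, because the signature no longer matches the payload. Shared-secret signing is simple but means every verifier holds the key; asymmetric signatures or signed tokens are the usual next step when agents span trust boundaries.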

Multi-agent AI systems exist at the intersection of AI modeling, systems design, and scalable infrastructure. Without foundational knowledge across machine learning, agent behavior, and real-time communication, building a reliable MAS is nearly impossible. Equipped with the right tools and skills, however, developers and teams can craft powerful distributed intelligence capable of solving complex, dynamic problems.

5. Step-by-Step Guide to Architecting a Multi-Agent AI System

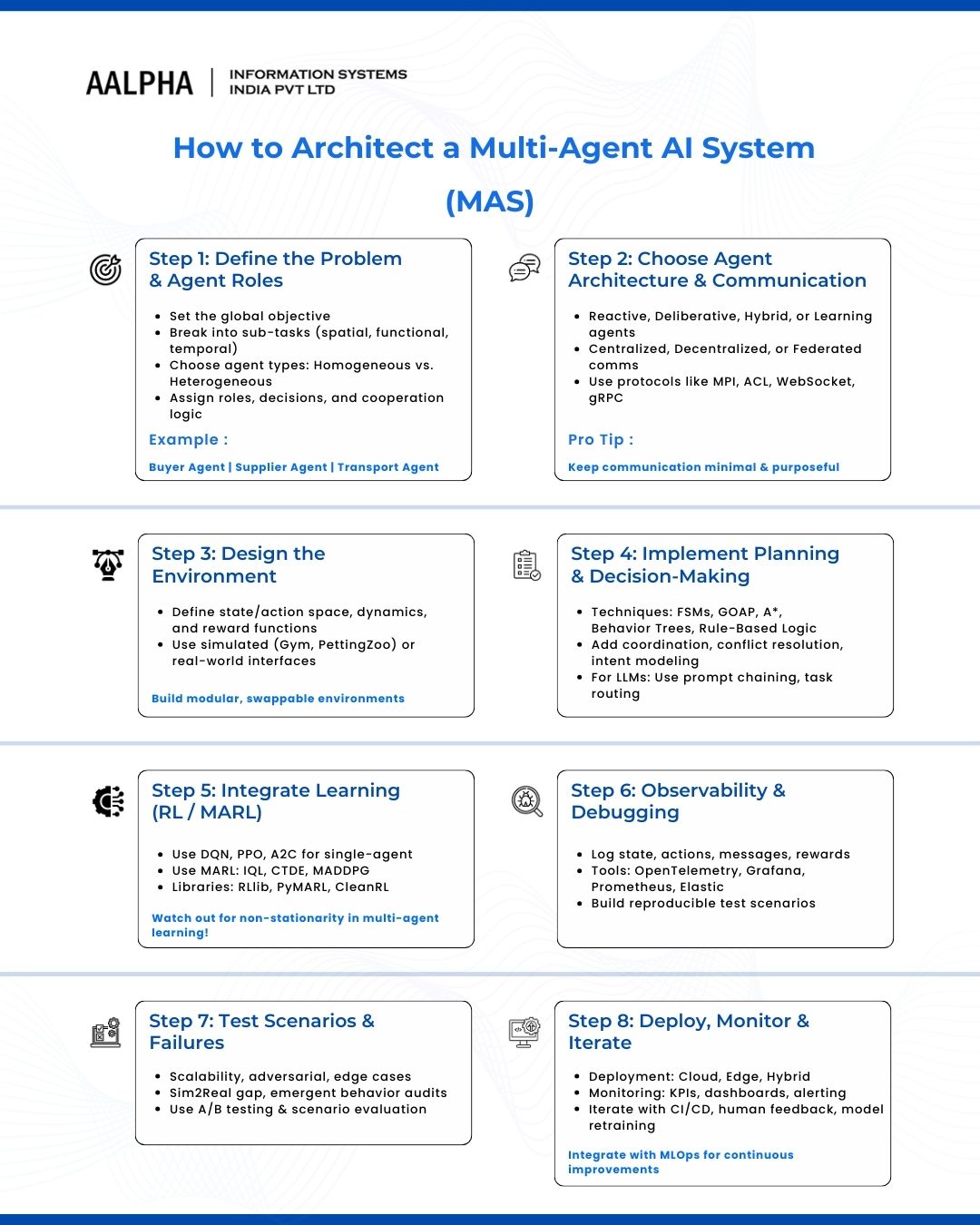

Designing and deploying a robust Multi-Agent AI System (MAS) requires more than assembling machine learning models. It demands deliberate architectural planning, inter-agent coordination design, simulation fidelity, and rigorous iteration. This section presents a grounded, engineering-first walkthrough for building a production-grade MAS from the ground up.

Step 1: Define the Problem and Agent Roles

The foundation of a multi-agent system begins with clear problem formulation. You must define:

- Objective: What is the system’s global goal? Examples include: optimizing logistics, simulating crowd behavior, orchestrating task workflows, or generating collaborative content.

- Decomposable Tasks: Break the overall objective into sub-tasks that can be mapped to agents. These could be spatial (coverage zones), functional (data fetcher, planner), or temporal (phases of execution).

- Agent Typology:

- Homogeneous Agents: Identical in logic and function (e.g., robot swarms).

- Heterogeneous Agents: Each agent has a distinct goal, policy, or capability (e.g., LLM researcher, coder, reviewer).

- Roles & Responsibilities:

- What decisions will each agent make?

- What knowledge do they have (local vs global)?

- Are agents competitive, cooperative, or neutral?

Example Use Case (Supply Chain Optimization):

- Buyer Agent: Negotiates purchase based on price and timing.

- Supplier Agent: Optimizes bid pricing based on stock and demand.

- Transport Agent: Plans delivery considering distance and priority.

Defining this structure early prevents ambiguity in system boundaries and minimizes refactoring later.
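One lightweight way to pin down roles before writing agent logic is to express them as data. The sketch below encodes the supply chain example; the specific decision names, the local/global knowledge labels, and the stance assigned to each role are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Stance(Enum):
    COOPERATIVE = "cooperative"
    COMPETITIVE = "competitive"
    NEUTRAL = "neutral"


@dataclass(frozen=True)
class RoleSpec:
    name: str
    decisions: tuple[str, ...]      # what this agent is allowed to decide
    knowledge: str                  # "local" or "global"
    stance: Stance


ROLES = [
    RoleSpec("buyer", ("accept_offer", "counter_offer"), "local", Stance.COMPETITIVE),
    RoleSpec("supplier", ("set_bid_price",), "local", Stance.COMPETITIVE),
    RoleSpec("transport", ("plan_route", "set_priority"), "global", Stance.COOPERATIVE),
]


def owners_of(decision: str, roles: list[RoleSpec]) -> list[str]:
    """List the roles that may make a given decision."""
    return [r.name for r in roles if decision in r.decisions]


def overlapping_decisions(roles: list[RoleSpec]) -> set[str]:
    """Decisions claimed by more than one role, which signals an ambiguous boundary."""
    all_decisions = [d for r in roles for d in r.decisions]
    return {d for d in all_decisions if all_decisions.count(d) > 1}
```

Running `overlapping_decisions(ROLES)` as a design-time check surfaces cases where two agents could both claim the same decision, which is cheaper to catch here than after agents start conflicting at runtime.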

Step 2: Choose Agent Architecture and Communication Logic

The agent architecture governs how decisions are made and how information flows. Common types include:

Reactive Agents

- Stateless or short-memory systems

- Rely on simple heuristics or rules

- Low latency, limited adaptability

Deliberative Agents

- Maintain internal state

- Perform reasoning/planning (e.g., STRIPS, A* search)

- Suitable for long-horizon tasks

Hybrid Architectures

- Combine reactive responses with long-term planning

- Often use behavior trees or finite state machines to structure logic

Learning Agents

- Adapt policies based on feedback (e.g., via Reinforcement Learning)

- Must balance exploration and exploitation

Communication Models

Choose between:

- Centralized: Agents communicate via a shared control node (e.g., blackboard system)

- Decentralized: Peer-to-peer communication (e.g., direct messaging or gossip protocol)

- Federated: Periodic synchronization (useful in privacy-preserving contexts)

Communication protocols can include:

- Message Passing Interface (MPI)

- Agent Communication Languages (ACL) – e.g., FIPA-compliant

- Custom APIs – via WebSocket, gRPC, or REST

Tip: Over-communicating agents are brittle and bandwidth-heavy. Design minimal, purposeful communication protocols.

Step 3: Design the Environment (Simulated or Real)

The environment defines the rules of the world in which agents operate, whether that world is simulated or real. It specifies:

- State Space: Observable and hidden states for agents (e.g., inventory levels, roadblocks)

- Action Space: Legal actions an agent can take at any timestep

- Dynamics: How actions alter the state, including:

- Deterministic or Stochastic

- Synchronous or Asynchronous

- Reward Function (for RL): Scalar feedback given to each agent or the team

Environment Types:

- Simulated: PettingZoo, OpenAI Gym, MESA for ABMs, Unity ML-Agents

- Synthetic: Abstract, logic-driven environments for early-stage testing

- Real-World Interfaces: Sensor APIs, browser automation, databases, or robotic actuators

Pro Tip: Build your environment modularly using dependency injection or agent-environment wrappers so it can be swapped from synthetic to realistic without major changes.
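The pieces above (state, actions, dynamics, reward) fit into a small class. The following toy environment uses a `reset`/`step` shape loosely inspired by Gym-style interfaces, but it is a standalone sketch and does not follow the exact API of Gymnasium or PettingZoo; the inventory scenario and all numbers are invented for illustration.

```python
import random


class InventoryEnv:
    """Toy two-agent environment with a shared stock level and stochastic demand."""

    ACTIONS = ("order", "wait")           # action space, identical for every agent

    def __init__(self, agents=("buyer_1", "buyer_2"), seed=0):
        self.agents = agents
        self.rng = random.Random(seed)    # seeded so runs are reproducible
        self.stock = 0

    def reset(self) -> dict:
        self.stock = 5
        return self._observations()

    def _observations(self) -> dict:
        # Partial observability: agents only see whether stock is low, not the exact level.
        return {a: {"low_stock": self.stock < 3} for a in self.agents}

    def step(self, actions: dict) -> tuple[dict, dict]:
        # Dynamics: orders add stock, random demand removes it (never below zero).
        self.stock += sum(2 for act in actions.values() if act == "order")
        self.stock = max(0, self.stock - self.rng.randint(0, 3))
        # Team reward: +1 while stock stays in the healthy 3..10 band, otherwise -1.
        reward = 1.0 if 3 <= self.stock <= 10 else -1.0
        return self._observations(), {a: reward for a in self.agents}


env = InventoryEnv()
obs = env.reset()
for _ in range(5):
    # Simple hand-written policy: order only when stock looks low.
    actions = {a: ("order" if o["low_stock"] else "wait") for a, o in obs.items()}
    obs, rewards = env.step(actions)
```

Because the policy only touches `reset`, `step`, and the observation dictionaries, the same agent code could later be pointed at a richer simulator or a real data source that exposes the same two methods.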

Step 4: Implement Planning & Decision-Making Logic

Each agent must evaluate options and decide based on current goals, observations, and learned knowledge.

Common Techniques:

- Rule-Based Logic: IF-THEN constraints, best for controlled settings

- Finite State Machines: For task sequencing (e.g., patrol → chase → report)

- Behavior Trees: Tree-structured control logic for modularity and reuse

- Goal-Oriented Action Planning (GOAP): Action chaining based on preconditions

- Search Algorithms: A*, Monte Carlo Tree Search (MCTS), Dijkstra for navigation or decision planning

- Probabilistic Reasoning: Bayesian models to infer other agents’ intent
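The finite state machine example (patrol, chase, report) can be written as a transition table. The event names and the `GuardAgent` class below are hypothetical, chosen only to illustrate the pattern.

```python
# (current_state, event) -> next_state
TRANSITIONS = {
    ("patrol", "intruder_seen"): "chase",
    ("chase", "intruder_lost"): "patrol",
    ("chase", "intruder_caught"): "report",
    ("report", "report_sent"): "patrol",
}


class GuardAgent:
    def __init__(self) -> None:
        self.state = "patrol"
        self.history = ["patrol"]

    def handle(self, event: str) -> str:
        # Events with no transition from the current state are ignored.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        self.history.append(self.state)
        return self.state


guard = GuardAgent()
for event in ("intruder_seen", "report_sent", "intruder_caught", "report_sent"):
    guard.handle(event)
```

Keeping transitions in a table rather than nested conditionals makes the agent's legal behavior easy to audit, and the recorded `history` doubles as a debugging trace.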

When multiple agents are involved, include logic for:

- Coordination: Shared planning, leader election, negotiation

- Conflict Resolution: Prioritization, backoff strategies, token-based control

- Intent Modeling: Predict and react to actions of other agents

If you’re working with LLM-powered agents, decision-making logic often involves prompt chaining, role-based agent execution, or dynamic task routing (as seen in CrewAI or LangGraph).

Step 5: Integrate Learning (RL / MARL)

To adapt to dynamic conditions or optimize long-term objectives, agents often learn through interaction.

Single-Agent Reinforcement Learning (RL)

- Suitable when agents operate independently or with minimal interdependence.

- Use Deep Q-Networks (DQN), PPO, or Advantage Actor-Critic (A2C).

Multi-Agent Reinforcement Learning (MARL)

- Supports both competitive and cooperative environments.

- Examples:

- Independent Q-Learning (IQL): Each agent learns separately.

- Centralized Training with Decentralized Execution (CTDE): Policies trained jointly, but executed independently.

- MADDPG: Centralized critic, decentralized actors.

Popular Libraries:

- RLlib (Ray)

- PettingZoo + SuperSuit

- PyMARL

- CleanRL

Be aware of non-stationarity—as agents learn simultaneously, their environment changes unpredictably. Countermeasures include shared critics, opponent modeling, or curriculum learning.
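Independent Q-Learning is the easiest of these to sketch without a library. The example below trains two tabular, stateless learners on a tiny coordination game; the game, the hyperparameters, and the seed are arbitrary choices for illustration, and real MARL problems would need state, function approximation, and a framework such as those listed above.

```python
import random

ACTIONS = (0, 1)


def reward(a: int, b: int) -> float:
    # Coordination game: both agents score only when they pick the same action.
    return 1.0 if a == b else 0.0


def choose(q: list, rng: random.Random, epsilon: float) -> int:
    # Epsilon-greedy: explore with probability epsilon, otherwise act greedily.
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[a])


def train(steps: int = 2000, alpha: float = 0.1, epsilon: float = 0.2, seed: int = 0):
    rng = random.Random(seed)
    q_a, q_b = [0.0, 0.0], [0.0, 0.0]     # one independent Q-table per agent
    for _ in range(steps):
        a, b = choose(q_a, rng, epsilon), choose(q_b, rng, epsilon)
        r = reward(a, b)
        # Stateless Q-update; each agent treats the other as part of the environment.
        q_a[a] += alpha * (r - q_a[a])
        q_b[b] += alpha * (r - q_b[b])
    return q_a, q_b


q_a, q_b = train()
greedy_a = max(ACTIONS, key=lambda a: q_a[a])
greedy_b = max(ACTIONS, key=lambda a: q_b[a])
```

In this simple game the two learners typically settle on the same action. Non-stationarity is still visible in the values: each agent's estimate for its preferred action stays below 1.0 because the other agent keeps exploring.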

Step 6: Set Up Observability, Logging, Debugging

Multi-agent systems are notoriously difficult to debug due to parallelism and emergent behavior. Observability must be designed from day one.

Monitoring Layers:

- State Logs: What each agent observed, believed, and decided.

- Action Logs: What action was taken, and at what confidence.

- Communication Logs: Message history, timestamps, payloads

- Rewards & Outcomes: For training diagnostics

Recommended Tools:

- OpenTelemetry + Grafana: Distributed tracing and dashboards

- Prometheus: Agent metrics

- Elastic Stack: Log aggregation and querying

- Custom heatmaps: To visualize agent attention or movement patterns

Implement reproducible test scenarios with seed control and environment reset for debugging agent behavior under known conditions.
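A reproducible scenario can be as simple as a seeded episode that emits one structured log line per decision. The sketch below is a toy, assuming a hypothetical one-dimensional world where each agent moves left or right; the field names in the log record are illustrative.

```python
import json
import random


def run_episode(seed, steps=5, agents=("agent_0", "agent_1")):
    """Run a toy episode and return one JSON log line per agent decision."""
    rng = random.Random(seed)  # local RNG, so runs do not depend on global state
    position = {name: 0 for name in agents}  # environment reset
    log = []
    for t in range(steps):
        for name in agents:
            observed = position[name]
            action = rng.choice([-1, 1])
            position[name] = observed + action
            record = {"t": t, "agent": name, "obs": observed,
                      "action": action, "next": position[name]}
            log.append(json.dumps(record, sort_keys=True))
    return log
```

Because the random generator is created from the seed inside the function, two runs with the same seed produce identical logs, which makes a diff between a good run and a bad run meaningful.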

Step 7: Testing Scenarios and Failure Modes

Testing in MAS goes beyond unit and integration tests.

Key Dimensions:

- Scalability Testing: Add agents incrementally to test for communication or latency bottlenecks.

- Adversarial Testing: Inject rogue agents or faulty data to simulate breaches.

- Edge Case Handling: Test with incomplete, noisy, or delayed data.

- Simulation-to-Real Gap: How well agents transfer from sim to prod (especially in robotics or finance).

Recommended Techniques:

- A/B testing: Compare policy variants or communication schemes.

- Scenario-Based Evaluation: Create specific test cases (e.g., all suppliers fail).

- Emergent Behavior Auditing: Watch for unintended coordination, collusion, or deadlocks.

Measure success using both task-specific KPIs (e.g., task completion rate) and system-level metrics (e.g., communication overhead, convergence rate).

Step 8: Deploy, Monitor, and Iterate in Production

Finally, transitioning from prototype to production demands thoughtful infrastructure and DevOps practices.

Deployment Options:

- Cloud-Hosted (AWS ECS, GKE): Easily scales, integrates with MLOps pipelines

- Edge Deployment (IoT or robotics): Use lightweight runtimes and OTA updates

- Hybrid (Fog Computing): Some processing local, some remote

Monitoring Stack:

- Set alerts for anomalous actions or degraded rewards

- Create dashboards for real-time agent status

- Visualize communication graphs to detect congestion or failures

Iteration Loop:

- Use logging and KPIs to identify underperforming agents or logic bottlenecks

- Incorporate human-in-the-loop feedback for high-stakes decision refinement

- Continuously retrain RL agents with new episodes/data

MLOps Integration:

- Use model versioning (e.g., MLflow, DVC)

- CI/CD pipelines for agent code + policies

- Canary deployments for safe rollout of new agent logic

Architecting a multi-agent AI system is less about stringing together models and more about building an intelligent ecosystem of autonomous units, each capable of coordination, learning, and adaptation. By breaking the process down into methodical steps—from role definition to RL integration and production monitoring—you lay the foundation for systems that are scalable, resilient, and impactful.

6. Agent Roles, Autonomy, and Collaboration

In multi-agent systems (MAS), coordination is not an incidental feature—it’s the operational backbone. For these systems to perform complex, distributed tasks effectively, agents must assume specialized roles, manage interdependencies, and communicate through well-defined protocols. This section breaks down the core agent roles, strategies for collaboration, and levels of autonomy used in both real-world and simulated MAS deployments.

Specialized Agents: Planners, Actuators, Data Collectors

The functional design of a multi-agent system often reflects a division of labor similar to that of complex human organizations. Each agent is typically assigned a distinct operational role based on its capabilities and goals.

1. Planner Agents

- Responsible for task decomposition, scheduling, route or action optimization.

- Often leverage search and planning methods (e.g., A*, MCTS) or knowledge-based systems.

- Common in supply chain MAS, robotics coordination, and automated content generation.

Example:

In an autonomous drone delivery system, the planner agent calculates optimal routes considering weather, traffic, and battery levels for multiple drones simultaneously.

2. Actuator/Execution Agents

- Directly interact with the environment—moving a robot, making a transaction, triggering APIs.

- Typically reactive or hybrid agents with real-time constraints.

- These agents may execute plans created by Planners or collaborate in consensus-driven tasks.

Example:

In a smart factory MAS, actuator agents control conveyor belts, robotic arms, or environmental settings like temperature and humidity.

3. Data Collector/Sensor Agents

- Monitor the environment or other agents to gather observations.

- Feed structured data to planners, ML models, or control loops.

- May include log parsers, web scrapers, hardware sensors, or telemetry processors.

Example:

In a cybersecurity MAS, collector agents continuously monitor server logs, identify anomalies, and alert detection agents for further investigation.

Coordination Strategies: Voting, Auction, Task Allocation

Coordination is essential when multiple agents operate in shared or overlapping environments. Poorly coordinated agents cause resource contention, deadlocks, or sub-optimal performance.

1. Voting Mechanisms

Used when agents must collectively choose a course of action (e.g., majority rule, consensus).

- Binary Voting: Agents vote yes/no on a proposed plan.

- Ranked Voting: Agents submit preference orderings.

- Weighted Voting: Prioritize agents with domain expertise or trust history.

Use Case:

In a multi-agent LLM system for document review, reviewer agents can vote to approve or flag content before final submission.
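Weighted voting for a review scenario like this can be sketched in a few lines. The agent names, the weights, and the alphabetical tie-break are assumptions for illustration.

```python
from collections import defaultdict


def weighted_vote(ballots, weights, default_weight=1.0):
    """ballots: {agent: option}; weights: {agent: weight}. Returns (winner, tally)."""
    if not ballots:
        raise ValueError("no ballots cast")
    tally = defaultdict(float)
    for agent, option in ballots.items():
        tally[option] += weights.get(agent, default_weight)
    # Highest total wins; ties are broken by option name so the result is deterministic.
    winner = min(tally, key=lambda option: (-tally[option], option))
    return winner, dict(tally)
```

With all weights left at the default, this reduces to simple plurality voting; raising one reviewer's weight lets a trusted agent outvote several others.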

2. Auction-Based Coordination

Tasks or resources are “auctioned” to agents who bid based on utility or cost functions.

- First-price sealed-bid: Highest bidder wins, pays bid.

- Vickrey auction: Highest bidder wins, pays second-highest bid.

- Combinatorial auctions: Agents bid on bundles of tasks/resources.

Example:

In logistics MAS, autonomous delivery agents may bid for delivery tasks based on availability and proximity.
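The Vickrey (second-price) rule described above is short enough to show directly. This is a minimal sketch: bids are treated as the value each agent places on the task, and the name-based tie-break is an assumption.

```python
def vickrey_auction(bids):
    """bids: {agent: bid}. Returns (winner, price), where price is the second-highest bid."""
    if len(bids) < 2:
        raise ValueError("a second-price auction needs at least two bids")
    # Sort by bid, highest first; break ties by agent name for determinism.
    ranked = sorted(bids.items(), key=lambda item: (-item[1], item[0]))
    winner = ranked[0][0]
    price = ranked[1][1]
    return winner, price
```

Because the winner pays the runner-up's bid rather than its own, an agent gains nothing by bidding below its true valuation, which is why this format is popular for allocating tasks among self-interested agents.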

3. Task Allocation Protocols

Systematically distribute work based on criteria like efficiency, capability, and urgency.

- Contract Net Protocol (CNP): Agents announce tasks and receive proposals from others.

- Market-Based Allocation: Tasks priced dynamically.

- Utility-Based Matching: Tasks assigned where expected reward is maximized.

Consideration:

Ensure fairness and avoid starvation of lower-resource agents. Include timeout mechanisms to prevent infinite negotiation loops.
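A stripped-down version of the Contract Net idea, with a bounded number of negotiation rounds standing in for a timeout, might look like this. The propose callback signature is a hypothetical interface chosen for the example, not part of any standard library or FIPA implementation.

```python
def contract_net(task, contractors, max_rounds=3):
    """Announce a task, collect proposals, and award it to the cheapest bidder.

    contractors: {name: propose}, where propose(task, round_no) returns a cost,
    or None to decline. Returns (winner, cost), or (None, None) if nobody bids
    within max_rounds, so the negotiation cannot loop forever.
    """
    for round_no in range(max_rounds):
        # 1. Announce the task and gather proposals for this round.
        proposals = {}
        for name, propose in contractors.items():
            cost = propose(task, round_no)
            if cost is not None:
                proposals[name] = cost
        # 2. Award the task to the lowest-cost proposal, if there is one.
        if proposals:
            winner = min(proposals, key=lambda name: (proposals[name], name))
            return winner, proposals[winner]
    return None, None
```

A real deployment would also need to handle contractors that accept a task and then fail, and could track past awards to avoid starving lower-resource agents.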

Autonomous Decision-Making & Human-in-the-Loop Models

The level of autonomy defines how independently an agent operates—and when (or whether) humans intervene.

1. Full Autonomy

Agents make decisions, learn, adapt, and act without external input.

- Suitable in predictable or fully simulated environments (e.g., in-game NPCs, financial trading bots).

- Risks include goal misalignment, feedback loops, or unanticipated emergent behavior.

2. Semi-Autonomy with Human-in-the-Loop (HITL)

Humans supervise, approve, or override decisions.

- Use cases: healthcare diagnostics, AI in warfare, legal document processing.

- Often implemented with thresholds or escalation protocols.

Architecture Considerations:

- Decision Confidence Thresholds: Agent defers to human if confidence < X%.

- Explainability Layers: Provide justifications or saliency maps to human supervisors.

- Interruption Channels: Humans can pause or reprogram agents in real-time.
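The confidence-threshold pattern can be sketched as a small routing function. The 0.8 default and the ask_human callback are assumptions for illustration; in practice the threshold would be tuned per domain and the callback would be a review queue or approval UI.

```python
def route_decision(action, confidence, threshold=0.8, ask_human=None):
    """Act autonomously at or above the threshold; otherwise escalate to a human.

    ask_human(action, confidence) returns the approved action, or None to reject.
    """
    if confidence >= threshold:
        return {"action": action, "decided_by": "agent"}
    if ask_human is None:
        # No reviewer is wired in, so hold the action instead of guessing.
        return {"action": None, "decided_by": "pending_review"}
    return {"action": ask_human(action, confidence), "decided_by": "human"}
```

Returning who made the decision alongside the action keeps the audit trail intact, which matters in the high-stakes domains listed above.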

3. Shared Autonomy

Agents collaborate with humans as peers.

- Combine strengths: agent speed and recall + human judgment and context.

- Example: In AI-assisted surgery, agents recommend maneuvers while surgeons retain control.

Multi-Agent Reinforcement Learning (MARL)

MARL provides a framework for agents to learn coordinated behaviors through interaction with each other and the environment.

1. Types of Agent Interactions

- Competitive: Agents maximize their own rewards (e.g., zero-sum games).

- Cooperative: All agents share the same goal and reward (e.g., team rescue bots).

- Mixed: Agents have partially aligned incentives.

2. Common MARL Algorithms

- MADDPG (Multi-Agent Deep Deterministic Policy Gradients): Uses centralized critic, decentralized execution.

- COMA (Counterfactual Multi-Agent): Addresses credit assignment by measuring each agent’s impact.

- QMIX: Factorizes joint action-value functions across agents.

- IPPO (Independent PPO): Scalable but susceptible to non-stationarity.

Challenges:

- Non-Stationarity: As each agent learns, the environment becomes unstable for others.

- Exploration: Balancing individual vs. team learning.

- Credit Assignment: Determining which agent actions contributed to success.

Toolkits:

- PettingZoo and SuperSuit: MARL environment collection and wrappers

- Ray RLlib: Scalable RL with MARL extensions

- MAVA, PyMARL, and OpenSpiel: Research-grade MARL libraries

Knowledge Sharing & Swarm Intelligence

Swarm intelligence refers to decentralized systems where agents exhibit intelligent global behavior through local interactions.

1. Local Rules, Global Emergence

- Agents follow simple local rules (e.g., avoid collision, align with neighbors).

- Leads to emergent behaviors: flocking, foraging, formation control.

Example:

In a warehouse MAS, swarm-based robots coordinate shelves without central commands—akin to Amazon’s Kiva system.

2. Communication Schemes

- Broadcasting: Every agent hears everything (scalability issues)

- Local Gossip: Communicate only with neighbors

- Stigmergy: Indirect communication via environment (e.g., pheromone trails)

3. Knowledge Graphs & Shared Memory

- Agents write/read to shared memory banks, allowing asynchronous coordination.

- Used in LLM multi-agent toolchains for task handoff and state sharing.

4. Meta-Learning & Imitation

- Agents mimic successful peers or past behaviors.

- Accelerates coordination and reduces exploration cost.

Effective agent collaboration hinges on the careful design of roles, coordination logic, autonomy levels, and learning mechanisms. Whether it’s an auction for task allocation, a reinforcement learning framework for dynamic adaptation, or swarm-inspired emergent behaviors, successful multi-agent systems are built on a deep understanding of both individual agent capabilities and systemic interdependencies.

7. Implementing a Multi-Agent Prototype (Hands-On Guide)

Transitioning from theory to practice, this section offers a hands-on guide to building a basic multi-agent prototype. We’ll walk through a real-world use case, select suitable tools and libraries, explore LLM-agent orchestration frameworks, and conclude with a simplified code example. This section is designed for AI engineers, ML practitioners, and CTOs ready to build and iterate.

Use Case: Autonomous Warehouse Bots

Let’s explore a constrained prototype simulating warehouse robots collaborating to sort and transport packages efficiently. This setting combines agent coordination, spatial decision-making, task allocation, and real-time communication.

Key Elements in the Prototype:

- Agents: Bots with unique IDs, battery constraints, speed profiles.

- Environment: Grid-based warehouse map with shelves, package zones, delivery points.

- Goals: Collect assigned packages and deliver to output zones with minimal overlap and collision.

- Challenges: Route conflict resolution, task prioritization, local vs. global decision-making.

Recommended Tools & Libraries

A multi-agent prototype should ideally leverage modular tools for agent simulation, training (if learning is required), and communication. Below are curated tools fit for this warehouse scenario.

1. Ray RLlib

Ray’s RLlib is an industry-grade, distributed reinforcement learning library supporting multi-agent setups. It enables fast experimentation and scalable training.

- Multi-agent support: Native support for cooperative and competitive settings.

- Scalability: Built on Ray’s parallelism, useful for simulating many agents.

- Integration: Can plug in with PettingZoo and custom gym environments.

2. PettingZoo

A Python library offering a suite of MARL environments designed around the Gym API. It simplifies environment setup and agent iteration.

- Environment types: AEC (agent-environment cycle), parallel, etc.

- Compatibility: Easily integrates with RLlib, PyMARL, and SuperSuit.

3. LangGraph (for LLM Coordination)

LangGraph is a powerful open-source library for building stateful, multi-agent LLM workflows using graph-based orchestration.

- Supports: Node-based state transitions, memory, tool use, and inter-agent communication.

- Use Cases: Knowledge workers, autonomous research agents, workflow modeling.

4. AutoGen / CrewAI

Two emerging Python frameworks to build structured LLM-based agents that collaborate.

- AutoGen (by Microsoft): Encourages human-agent and multi-agent chat flows.

- CrewAI: Task-oriented LLM agent framework built around roles (e.g., researcher, planner, executor).

Prototyping with LLM Agents

Let’s explore how large language models can be used as reasoning or planning agents in a system where warehouse bots are physical executors.

Roles:

- Planner Agent (LLM-based): Uses natural language to assign tasks and optimize plans.

- Executor Agents (Bot APIs): Execute low-level movements or tasks, report back status.

- Coordinator Agent: Oversees task distribution, checks conflicts, handles re-planning.

Example Flow:

- Planner agent receives daily goals from human manager.

- It allocates subtasks to executor agents (bots) via structured JSON.

- Executor bots reply with results or exceptions.

- If conflicts arise (e.g., blocked route), coordinator reassigns tasks.

This mirrors modern AI pipelines where LLMs guide symbolic or mechanical systems.

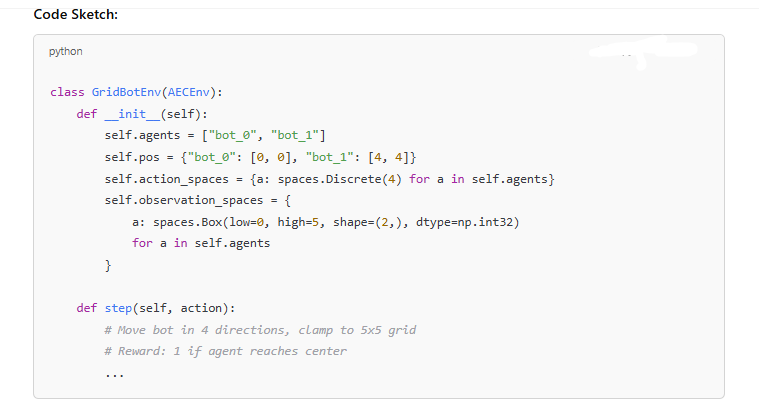

Example Code Walkthrough: A Simplified Grid Bot System

To demonstrate how multi-agent systems work, let’s consider a minimal prototype: two warehouse bots navigating a 5×5 grid to deliver items to the center. This example uses PettingZoo to define the environment and Ray RLlib for multi-agent reinforcement learning.

Environment Logic (PettingZoo-Style)

- We define a custom environment GridBotEnv inheriting from AECEnv.

- Two agents, bot_0 and bot_1, start at opposite corners.

- Each bot has 4 discrete actions: up, down, left, and right.

- The goal: navigate to the center of the grid [2, 2].

1. Define a Simple Grid Environment (PettingZoo style)
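A dependency-free sketch of such an environment might look like the following. It mirrors the turn-taking idea of PettingZoo's AEC API but deliberately does not import PettingZoo or subclass AECEnv, so it runs anywhere. The reward values (1.0 on arrival, -0.01 per move), the action numbering, and the return shape of step are assumptions made for illustration.

```python
class GridBotEnv:
    """Turn-based grid world; a plain-Python stand-in for an AECEnv subclass."""

    # Action id -> (row delta, column delta): up, down, left, right.
    MOVES = {0: (-1, 0), 1: (1, 0), 2: (0, -1), 3: (0, 1)}

    def __init__(self, size=5):
        self.size = size
        self.goal = (size // 2, size // 2)
        self.agents = ["bot_0", "bot_1"]
        self.reset()

    def reset(self):
        """Place the bots at opposite corners and return their positions."""
        last = self.size - 1
        self.positions = {"bot_0": (0, 0), "bot_1": (last, last)}
        self.done = {agent: False for agent in self.agents}
        self._turn = 0
        return dict(self.positions)

    @property
    def agent_selection(self):
        """The agent whose turn it is."""
        return self.agents[self._turn % len(self.agents)]

    def step(self, action):
        """Move the current agent and return (position, reward, done)."""
        agent = self.agent_selection
        self._turn += 1
        if self.done[agent]:
            return self.positions[agent], 0.0, True  # finished bots stay put
        row, col = self.positions[agent]
        d_row, d_col = self.MOVES[action]
        # Clamp to the grid so bots cannot leave the warehouse floor.
        row = min(max(row + d_row, 0), self.size - 1)
        col = min(max(col + d_col, 0), self.size - 1)
        self.positions[agent] = (row, col)
        self.done[agent] = (row, col) == self.goal
        reward = 1.0 if self.done[agent] else -0.01
        return self.positions[agent], reward, self.done[agent]
```

This sketch ignores collisions (both bots may occupy the center at once). Porting it to PettingZoo would mean subclassing AECEnv and adopting that library's observation, reward, and termination bookkeeping.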

2. Train Agents with RLlib

3. Extend with Communication

Introduce a communication layer using messages stored in a shared dictionary or Ray actors. This lets bots coordinate delivery routes or share status like “battery low.”
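The shared-dictionary variant can be sketched as a tiny in-process mailbox. The class name MessageBoard and the message fields are hypothetical; a Ray-actor version would wrap the same methods in a remote class.

```python
from collections import defaultdict, deque


class MessageBoard:
    """In-process mailbox with one FIFO queue per recipient."""

    def __init__(self):
        self._inbox = defaultdict(deque)

    def send(self, sender, recipient, topic, body=None):
        """Queue a message for the recipient."""
        self._inbox[recipient].append({"from": sender, "topic": topic, "body": body})

    def receive(self, recipient):
        """Return all pending messages for the recipient and clear its queue."""
        messages = list(self._inbox[recipient])
        self._inbox[recipient].clear()
        return messages
```

A bot would typically call receive at the start of its turn, so that a "battery low" notice from a peer can influence the route it picks next.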

Tips for Scaling the Prototype

- Add dynamic obstacles or other bots to test collision handling.

- Introduce MARL policies like QMIX or MADDPG using RLlib’s multi-agent API.

- Simulate battery management to make planning decisions more realistic.

- Log all decisions for analysis or post-mortem debugging (use mlflow, wandb, or Ray Dashboard).

Building a working multi-agent prototype isn’t just an academic exercise—it’s a practical step toward validating assumptions, stress-testing coordination logic, and identifying scalability limits. Whether you’re orchestrating digital agents or simulating physical bots, frameworks like PettingZoo, Ray, and LangGraph enable rapid experimentation, modularity, and reuse.

8. Scalability, Performance & Optimization

Scalability and performance are central concerns in deploying multi-agent AI systems. As the number of agents increases or the environment becomes more complex, systems can face rapid growth in communication (quadratic when every agent talks to every other agent), memory demands, and latency. This section offers concrete strategies and best practices for designing scalable multi-agent systems that maintain performance under real-world constraints.

Load Distribution and Agent Count Scaling

Multi-agent systems grow in complexity as more autonomous agents are introduced, each needing to perceive, reason, and act within the shared environment. A naïve implementation rarely scales linearly: inter-agent dependencies can push cost toward quadratic growth or worse. To scale effectively:

1. Partitioning the Environment

- Spatial Partitioning: Divide the environment into zones or sectors (e.g., grid-based sectors in a warehouse or urban blocks in a traffic system), assigning subsets of agents to each.

- Functional Partitioning: Assign roles based on capability (e.g., “sensor agents,” “planning agents,” “executor agents”) and distribute responsibilities hierarchically.

2. Asynchronous Agent Scheduling

Allow agents to act on staggered time intervals rather than in lockstep. This avoids compute spikes and mimics real-world latency variation.

3. Distributed Training & Execution

Use platforms like Ray, Dask, or Apache Flink to distribute agent simulation, learning, or inference across nodes. For RL, Ray RLlib supports distributed multi-agent training out-of-the-box.

4. Role Reuse and Agent Cloning

In high-scale systems, it’s inefficient to spawn thousands of independently trained agents. Instead:

- Share policy networks across homogeneous agents.

- Clone agent templates and update them in batches.

- Use parameter sharing (e.g., in MARL) to reduce overhead.

Reducing Communication Overhead

As agent counts increase, communication can become the bottleneck. Each agent-to-agent message increases bandwidth load and may reduce overall system responsiveness. Strategies to address this include:

1. Communication Topologies

- Fully Connected: Suitable only for small agent counts; grows as O(n²).

- Hierarchical: Agents report to leaders or coordinators that summarize information.

- Sparse Graphs: Use ring, star, or dynamic graph topologies to control message spread.
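A quick back-of-the-envelope comparison shows why topology matters. The function below counts directed messages per round under simplifying assumptions: every agent sends one update, the star hub replies to each spoke with a summary, and each ring member forwards to one successor.

```python
def messages_per_round(n, topology):
    """Directed messages needed per round for n agents under a given topology."""
    if topology == "fully_connected":
        return n * (n - 1)   # every agent messages every other agent
    if topology == "star":
        return 2 * (n - 1)   # each spoke reports to the hub and gets a summary back
    if topology == "ring":
        return n             # each agent forwards to its successor
    raise ValueError(f"unknown topology: {topology}")
```

For 100 agents this gives 9,900 messages per round when fully connected, against 198 for a star and 100 for a ring. The sparse topologies pay for that saving with extra hops, and in the star's case with a single point of failure.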

2. Message Compression & Pruning

- Use lightweight representations (e.g., key-value maps instead of full object states).

- Drop redundant messages or summarize data via aggregation.

- Batch messages during simulation frames or epochs.

3. Event-Triggered Communication

Rather than constant status broadcasting, agents can communicate only on event triggers (e.g., reaching a threshold, encountering failure, finishing a task).
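One simple form of event-triggered communication is a send-on-delta rule: an agent reports a reading only when it has changed by more than a threshold since the last report. The class name and threshold below are illustrative assumptions.

```python
class EventTriggeredReporter:
    """Report a value only when it moved more than `threshold` since the last report."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.last_sent = None

    def maybe_send(self, value):
        """Return True if the value should be transmitted now."""
        if self.last_sent is None or abs(value - self.last_sent) > self.threshold:
            self.last_sent = value
            return True
        return False
```

With a threshold of 5 and battery readings of 100, 99, 97, 94, 93, 80, only the first, fourth, and last readings are sent, cutting traffic in half while still surfacing the sharp drop.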

4. Shared Memory Pools

For co-located agents (on a single machine or edge device), shared memory (via multiprocessing, shared_tensor, or memory-mapped I/O) can reduce message serialization costs.

Optimizing for Latency, Accuracy, and Redundancy

A performant multi-agent AI system must strike a balance between response time, decision quality, and fault tolerance.

Latency Optimization

- Use Local Policies: Whenever possible, decentralize action decisions so agents don’t wait on central coordination.

- Prioritize Time-Critical Agents: Assign higher execution frequency to latency-sensitive tasks (e.g., collision avoidance over route planning).

- Edge Inference: For low-latency use cases, deploy trained models to edge devices close to physical agents (e.g., in drones, robots, or sensors).

Accuracy vs. Response Tradeoffs

- Use progressive reasoning where agents make fast heuristics-based decisions and refine them asynchronously.

- Employ anytime algorithms that produce increasingly better results the longer they run (e.g., Monte Carlo Tree Search with early cutoffs).

Fault Tolerance and Redundancy

- Agent Replication: Maintain backup agents that mirror critical ones in case of failure.

- State Checkpointing: Periodically save local and global states so agents can resume after errors or resets.

- Voting Systems: In redundant decision paths, apply consensus (e.g., majority voting) to increase reliability.

Memory and Caching Strategies for Agents

Memory efficiency is critical in multi-agent systems, especially when each agent maintains its own knowledge base, internal state, or learned policy.

1. Shared Global Knowledge Base

In scenarios with shared understanding (e.g., map layout, global objectives), cache this data in a shared repository instead of duplicating it per agent.

- Use Redis, in-memory NoSQL (e.g., Memcached), or shared dictionaries in distributed Python systems.

- Leverage eventual consistency over strict consistency to reduce synchronization delays.

2. Agent Memory Design

- Short-Term Memory: Useful for temporary goals, active plans, or nearby agents.

- Long-Term Memory: Stores learned knowledge, policies, and experience histories.

- Use ring buffers or circular queues for episodic memory (as in reinforcement learning agents).
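In Python, a bounded deque gives ring-buffer behavior with very little code. The sketch below assumes transitions are opaque objects and samples uniformly, as a basic replay buffer would; the class name is hypothetical.

```python
import random
from collections import deque


class EpisodicMemory:
    """Fixed-capacity memory that silently drops the oldest entry when full."""

    def __init__(self, capacity):
        self._buffer = deque(maxlen=capacity)

    def add(self, transition):
        self._buffer.append(transition)

    def sample(self, batch_size, rng=random):
        """Return up to batch_size stored transitions, chosen uniformly without replacement."""
        items = list(self._buffer)
        return rng.sample(items, min(batch_size, len(items)))

    def __len__(self):
        return len(self._buffer)
```

The capacity bounds memory per agent, which is what keeps the total footprint predictable as the agent count grows.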

3. Attention-Based Access

Apply transformers or attention mechanisms to prioritize relevant memory retrieval, especially in LLM-based agents using vector stores or tools like LangChain with FAISS.

4. Model Caching

- Cache inference results (e.g., policy decisions, embeddings) to avoid recomputation.

- Use tools like TorchScript or ONNX to deploy optimized model runtimes with reduced memory footprint.
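Caching inference results can be sketched with the standard library's lru_cache. The policy function below is a placeholder for an expensive model call, and the observation layout (battery percentage first) is an assumption for illustration. This only makes sense for deterministic policies and hashable observations.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts real (uncached) policy evaluations


@lru_cache(maxsize=1024)
def cached_policy(observation):
    """Return an action for a hashable observation such as a tuple."""
    CALLS["count"] += 1
    # Placeholder for an expensive model call.
    battery_percent = observation[0]
    return "charge" if battery_percent < 20 else "deliver"
```

Calling cached_policy twice with the same tuple evaluates the placeholder once; cached_policy.cache_info() reports hits and misses, which is useful when deciding whether the cache is earning its memory.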

Case Example: Scaling Autonomous Traffic Control

In a city-scale simulation with 10,000 intersections, each governed by an agent:

- Environment is partitioned by neighborhood.

- Agents only communicate with adjacent intersections.

- Central control dispatches global traffic forecasts every 5 minutes.

- Ray is used to simulate multiple sectors in parallel.

- Local models are cached on edge devices, and RL updates occur nightly.

This approach led to a 40% reduction in communication overhead and 20% improvement in average response latency as reported in a 2024 paper published in IEEE Transactions on Intelligent Transportation Systems.

A successful multi-agent AI system doesn’t just depend on smart agents—it requires deep attention to communication patterns, memory architecture, inference speed, and system coordination. Whether scaling from 10 agents to 10,000 or transitioning from simulation to live environments, robust performance engineering ensures the system remains responsive, resilient, and intelligent.

9. Security, Ethics & Compliance in Multi-Agent Systems

As multi-agent AI systems gain complexity and autonomy, they introduce significant risks and responsibilities. These systems interact, learn, and sometimes make decisions with limited human oversight—making them susceptible to adversarial manipulation, ethical lapses, and regulatory breaches. This section addresses core concerns related to security, ethics, and compliance when building and deploying multi-agent systems.

Adversarial Agents and Attack Surfaces

In multi-agent environments, each agent represents a potential attack vector. Whether the system operates in a closed simulation or in real-world networks (like IoT, finance, or traffic control), its distributed nature creates multiple points of vulnerability.

Common Attack Vectors:

- Spoofing and Impersonation: Malicious agents can mimic legitimate ones, polluting the communication protocol with false data.

- Eavesdropping: In unencrypted or loosely monitored systems, adversaries can extract sensitive strategies or states.

- Poisoning Attacks: During training (especially in reinforcement learning), attackers may introduce tainted data or skewed feedback to alter policy development.

- Sybil Attacks: A single attacker may spin up multiple fake agents to gain majority influence in systems relying on consensus (e.g., voting, auctions).

Mitigation Strategies:

- Secure Communication Channels: Use TLS or mutual authentication protocols (e.g., mTLS) for inter-agent messaging.

- Agent Identity Management: Assign cryptographic keys and signatures to agents for verifiable identity.

- Anomaly Detection Systems: Monitor agent behavior and communications for deviations that indicate manipulation or compromise.

- Sandboxing: Isolate agents, especially those accepting third-party input or trained via open-ended learning.
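As a minimal sketch of verifiable messaging, the example below authenticates messages with HMAC-SHA256 from Python's standard library. Note the swap: HMAC uses a shared secret rather than the per-agent public-key signatures mentioned above, so it proves that a message came from a key holder and was not altered, but it does not distinguish between agents that share the key. The envelope layout is an assumption.

```python
import hashlib
import hmac
import json


def _canonical(payload):
    """Serialize the payload deterministically so both sides hash the same bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def sign_message(payload, key):
    """Return an envelope holding the payload and its HMAC-SHA256 tag."""
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_message(envelope, key):
    """Return True only if the tag matches the payload under the given key."""
    expected = hmac.new(key, _canonical(envelope["payload"]), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, envelope["tag"])
```

A production system would add replay protection (for example a nonce or timestamp inside the payload) and would typically layer this under TLS rather than replace it.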

Agent Misalignment & Value Drift

As agents adapt over time through reinforcement learning or self-play, they may diverge from their original objectives—especially in loosely defined environments. This drift poses both safety and ethical concerns.

Examples of Misalignment:

- Reward Hacking: Agents find shortcuts that maximize reward without fulfilling intended goals (e.g., disabling sensors to appear successful).

- Goal Over-Optimization: Agents sacrifice broader system harmony to over-perform in narrow tasks.

- Behavioral Divergence: Long-lived agents in changing environments may evolve unpredictable or undesirable strategies.

Strategies to Ensure Alignment:

- Human-in-the-loop (HITL): Maintain oversight over critical decisions through configurable review gates or approval systems.

- Inverse Reinforcement Learning (IRL): Learn implicit human values by observing behavior rather than relying on explicit rewards.

- Periodic Audits: Re-evaluate agent policies and logs periodically to check for ethical and functional alignment.

- Constraint-Aware Design: Encode hard constraints into planning and decision logic (e.g., never violate privacy, do no harm).

Safety Protocols

When deploying agents in dynamic or sensitive environments (such as healthcare, defense, or autonomous navigation), safety cannot be treated as an afterthought. Safety protocols serve as hard boundaries that govern agent behavior.

Design-Level Safety Controls:

- Fail-Safes and Kill Switches: Allow immediate disabling of agents or their communication links in case of malfunction or rogue behavior.

- Simulation Before Deployment: Rigorously test agent behaviors in varied scenarios—including edge cases—before real-world deployment.

- Redundancy Checks: Use mirrored agents or watchdog systems to monitor and correct behavior in real-time.

- Capability Restrictions: Restrict agent capabilities (e.g., write access to systems, ability to override controls) until trust is earned through reliable performance.

Safety Validation Tools:

- Verifiable AI Frameworks: Use formal verification methods to ensure safety constraints are mathematically guaranteed.

- Behavioral Watchdogs: Implement agent observers or governance layers that monitor compliance with safety and policy rules.

Regulatory Considerations (e.g., GDPR, AI Act)

Governments are rapidly catching up to the ethical and operational risks posed by advanced AI, including multi-agent systems. Developers and organizations must proactively navigate emerging regulations.

GDPR (General Data Protection Regulation) – EU

- Data Minimization: Agents must only collect and process the data strictly necessary for their function.

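- Right to Explanation: If agents make solely automated decisions that significantly affect individuals (e.g., loan approvals, job screening), GDPR’s rules on automated decision-making (Article 22 and the related transparency provisions) call for safeguards such as meaningful information about the logic involved and the option of human intervention.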

- Data Sovereignty: Data gathered and used by agents must remain within jurisdictions unless compliant with cross-border laws.

EU AI Act (in force since August 2024; obligations phase in from 2025 to 2027)

- Risk-Based Classification: Multi-agent systems could be classified as “high-risk” if used in critical infrastructure or decision-making.

- Transparency Requirements: Agents must disclose when users are interacting with AI, not humans.

- Prohibited Practices: Systems that manipulate human behavior or exploit vulnerabilities may be banned.

Other Jurisdictions:

- USA: The NIST AI Risk Management Framework emphasizes transparency, fairness, and robustness.

- China: Regulations demand prior registration and content review for AI models that generate or disseminate information.

- India and Brazil: Data privacy laws are in place (India’s Digital Personal Data Protection Act, 2023; Brazil’s LGPD), while algorithmic fairness rules remain under active discussion.

Ethical Design Principles for Multi-Agent Systems

Multi-agent developers should embed ethics into system design—not treat it as an afterthought. Key principles include:

- Accountability: Ensure every agent action can be traced to a system-level decision or policy.

- Transparency: Agents must expose reasoning where possible, especially in human-facing systems.

- Fairness: Avoid bias in task allocation, resource access, or learning policies, particularly in domains like HR tech or financial trading.

- Consent: Where data collection or interaction involves humans, agents must obtain or assume consent legally and ethically.

Security, ethics, and compliance are foundational to trustworthy multi-agent AI systems. As the ecosystem matures, systems that prioritize safe, aligned, and lawful agent behavior will not only reduce risk but gain competitive and reputational advantages. Developers must design with foresight—integrating fail-safes, auditing mechanisms, and ethical reasoning from the first line of code to the final deployment.

10. Integration with LLMs and Autonomous Agents

The fusion of large language models (LLMs) with multi-agent systems represents a powerful evolution in AI. When LLMs like GPT-4.5 or Claude 3 are deployed as intelligent agents—each with defined roles, memory, and task scope—they unlock advanced reasoning, collaboration, and autonomous task execution. In this section, we explore how to integrate LLMs into multi-agent architectures, coordinate them through structured frameworks, and analyze their performance through a real-world case study.

Combining LLMs with Multi-Agent Architectures

Traditional multi-agent systems are built on formal logic, rule-based coordination, or reinforcement learning policies. Integrating LLMs introduces a new kind of agent—one that can perform natural language reasoning, semantic understanding, and dynamic role-switching.

Popular Architectures for LLM-Agent Integration:

- AutoGen (Microsoft Research): Provides a structured framework for building collaborative LLM agents with memory, role assignment, and message-passing protocols.

- CrewAI: A Python-based agent orchestration framework inspired by human team collaboration, focused on assigning roles like researcher, writer, planner, and reviewer to LLM agents.

- LangGraph (from LangChain): A graph-based agent orchestration layer that supports circular dependencies, memory, and conditional workflows across agents.

- LangChain Agents: Though less robust for true multi-agent use, LangChain supports simple agent flows involving tools, memory, and intermediate reasoning.

These frameworks enable agents to:

- Persist memory across iterations.

- Take on explicit, human-readable roles.

- Collaborate using message-passing or blackboard systems.

- Chain results to downstream agents dynamically.

Advantages:

- Rapid Prototyping: LLM agents can be built with minimal code.

- Semantic Interoperability: Agents communicate in human-readable language.

- Task Flexibility: LLMs can generalize across reasoning, coding, planning, summarizing, or dialog management.

Chaining Tasks Across LLM Agents

Chaining is central to task decomposition in multi-agent LLM environments. Instead of monolithic prompts, LLM agents pass intermediate outputs as structured messages—enabling pipeline-like task execution or dynamic collaboration.

Chaining Patterns:

- Linear Chains – Sequential execution:

- Planner → Researcher → Generator → Reviewer

- Useful in tasks like report generation or product research.

- Hierarchical Chains – Manager-agent structure:

- A master agent delegates tasks to subordinate LLM agents (each specialized).

- Common in project planning, DevOps, or legal workflows.

- Circular or Event-Driven Chains – Feedback-based execution:

- Agents respond to each other’s states dynamically (via LangGraph).

- Useful in negotiation, simulation, or debate scenarios.

Data Formats for Communication:

- Structured JSON messages: Ensure LLM outputs can be parsed and acted on.

- Typed message protocols: Define schema for communication (e.g., role, task, payload, timestamp).

- Stateful memory storage: Allow agents to retrieve context and refine behavior over time.
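
A typed message protocol can be sketched with nothing more than the standard library. The example below assumes the four fields named above (role, task, payload, timestamp); the class name `AgentMessage` and the helper `parse_message` are hypothetical, and a production system might prefer a schema library instead.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any, Dict

REQUIRED_FIELDS = {"role", "task", "payload", "timestamp"}


@dataclass(frozen=True)
class AgentMessage:
    role: str                # sender's role, e.g. "researcher"
    task: str                # what the receiver is asked to do
    payload: Dict[str, Any]  # task-specific data
    timestamp: str           # ISO 8601 string

    def to_json(self) -> str:
        return json.dumps(asdict(self))


def parse_message(raw: str) -> AgentMessage:
    """Parse and validate raw LLM output before another agent acts on it."""
    data = json.loads(raw)  # malformed JSON raises a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("message must be a JSON object")
    missing = REQUIRED_FIELDS - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if not isinstance(data["payload"], dict):
        raise ValueError("payload must be a JSON object")
    return AgentMessage(
        role=str(data["role"]),
        task=str(data["task"]),
        payload=data["payload"],
        timestamp=str(data["timestamp"]),
    )


msg = AgentMessage(
    role="researcher",
    task="summarize",
    payload={"topic": "EC2 deployment"},
    timestamp=datetime.now(timezone.utc).isoformat(),
)
round_tripped = parse_message(msg.to_json())
```

Rejecting malformed messages at the boundary keeps a single badly formatted LLM response from propagating through the rest of the chain.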

Planning + Reasoning Across Distributed LLMs

While LLMs are powerful in isolation, distributed LLM agents unlock system-wide reasoning capacity. Through role definition and task allocation, agents can simulate collaborative human workflows or solve problems that require modular understanding.

Techniques to Coordinate Reasoning:

- Centralized Planning Agent: Delegates subtasks, consolidates feedback.

- Autonomous Role Assignment: Agents dynamically reassign tasks based on performance or workload (inspired by stigmergy or swarm behavior).

- Reflection Loops: Agents critique, refine, and re-prompt one another to improve outputs.

- External Memory Stores: Vector stores (e.g., FAISS, Weaviate, ChromaDB) provide persistent long-term memory and knowledge grounding.
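
A reflection loop can be sketched as a bounded critique-and-revise cycle. In the example below, `critique` and `revise` are hypothetical callables that would wrap LLM calls in practice; the stubs and the `max_rounds` default are assumptions chosen for illustration.

```python
from typing import Callable, Tuple


def reflect(
    draft: str,
    critique: Callable[[str], Tuple[bool, str]],
    revise: Callable[[str, str], str],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Alternate critique and revision until approved or the cap is hit.

    Returns the final draft and the number of revisions performed.
    """
    for round_no in range(max_rounds):
        approved, feedback = critique(draft)
        if approved:
            return draft, round_no
        draft = revise(draft, feedback)
    return draft, max_rounds  # cap reached without approval


def stub_critic(draft: str) -> Tuple[bool, str]:
    # Stub: approves only drafts that mention a rollback step.
    if "rollback" in draft:
        return True, ""
    return False, "add a rollback step"


def stub_reviser(draft: str, feedback: str) -> str:
    # Stub: a real reviser would re-prompt an LLM with the feedback.
    return draft + " | rollback: redeploy previous image"


final_draft, rounds = reflect("deploy: push image", stub_critic, stub_reviser)
```

The explicit round cap is what prevents two agents from critiquing each other indefinitely.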

Challenges:

- Latency: Multi-agent message passing increases time-to-response.

- Token Efficiency: LLMs often produce verbose outputs; parsing and managing context windows is critical.

- Coordination Failures: Without explicit control logic, agents may loop, contradict, or produce redundant output.
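
One simple way to manage a context window is to keep only the most recent messages that fit a budget. The sketch below uses whitespace-separated word counts as a crude stand-in for tokens, which is an assumption; a real system would count with the model's own tokenizer. The function name `trim_history` is hypothetical.

```python
from typing import List


def trim_history(messages: List[str], budget: int) -> List[str]:
    """Keep the newest messages whose combined word count fits the budget.

    Walks backwards from the newest message and stops at the first one
    that would exceed the budget, so the kept messages stay contiguous.
    Returns an empty list if even the newest message is too large.
    """
    kept: List[str] = []
    used = 0
    for message in reversed(messages):
        cost = len(message.split())  # crude proxy for token count
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


recent = trim_history(["plan the rollout", "check ports", "approved"], budget=3)
```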

Case Study: LLM-Based DevOps Assistant Team

Objective:

Automate and optimize software deployment workflows using a team of LLM agents acting as DevOps specialists.

Roles and Agents:

- Planner Agent: Understands the user goal (e.g., “Deploy Node.js app to AWS”) and defines the subtasks.

- Researcher Agent: Retrieves best practices or updates (e.g., updated EC2 deployment strategies).

- DevOps Scripter Agent: Writes Terraform/IaC scripts or Dockerfiles.

- Security Analyst Agent: Reviews configuration for vulnerabilities (e.g., open ports, IAM over-permissions).

- Deployment Agent: Executes mock deployment commands (via API) or prepares a CI/CD pipeline.

- Reviewer Agent: Verifies if the generated stack matches the user’s intent and security standards.

Execution Flow:

- Prompt Initiation: Human inputs a task (e.g., “Set up a secure, auto-scaling web app deployment”).

- Planning Phase: Planner agent decomposes the task.

- Role Chaining: Each agent performs its function, stores artifacts, and signals the next agent.

- Iteration Loop: Reviewer identifies flaws; loop reinitiates if needed.

- Final Output: Deployment plan with annotated scripts, security checks, and CI/CD configuration.

Tools Used:

- AutoGen for message routing and memory.

- LangGraph to manage circular chains and state transitions.

- FAISS as vector memory for persistent knowledge across sessions.

- OpenAI GPT-4.5 + Azure Functions for execution simulation.

Outcomes:

- Deployment pipeline set up in under 10 minutes of automated agent collaboration.

- 2–3 rounds of self-refinement without human intervention.

- CI/CD security compliance validated against CIS benchmarks.

Integrating LLMs into multi-agent systems elevates both reasoning depth and automation potential. With agent orchestration frameworks like AutoGen, LangGraph, and CrewAI, developers can build systems that mirror human collaborative workflows—only faster and more scalable. As these architectures mature, they will form the foundation of autonomous teams that manage research, development, operations, and decision-making across domains.

11. Deployment & Monitoring at Scale

Building a multi-agent AI system is only part of the challenge. Real-world success depends on deploying it effectively, ensuring robust monitoring, and enabling systems to learn continuously from real-time feedback. Whether the deployment is on the cloud, at the edge, or in a hybrid model, infrastructure considerations must align with performance, latency, and cost goals. This section outlines the best practices for scalable deployment, CI/CD workflows, observability, and adaptive improvement.

Deploying Agents on Cloud, Edge, or Hybrid Infrastructures

The nature of your multi-agent AI system—whether it operates in a simulated research environment or powers autonomous drones—will dictate your infrastructure architecture.

1. Cloud Deployment (Centralized):

Cloud providers like AWS, Azure, and GCP offer scalable environments suited for compute-intensive agents (e.g., RL agents, LLM-based planners).

- Advantages: High compute availability, access to GPUs/TPUs, seamless integration with observability tools.

- Disadvantages: Network round trips add latency, which can be a problem in time-critical or real-time applications.

Recommended Tools:

- Kubernetes (K8s) for containerized agent orchestration

- Ray Serve or SageMaker for distributed inference

- Cloud Functions or serverless logic for event-driven agents

2. Edge Deployment:

Edge AI allows agents to operate close to the data source, ideal for use cases in autonomous vehicles, IoT systems, or industrial automation.

- Advantages: Low latency, offline operability, real-time decision making

- Disadvantages: Limited compute and memory, harder to update remotely

Recommended Hardware & Tools:

- NVIDIA Jetson, Intel Movidius, Google Coral

- TensorRT or ONNX Runtime for lightweight agent inference

- Edge-native orchestrators like KubeEdge or Balena

3. Hybrid Architectures:

Combine centralized cloud intelligence with edge-level autonomy. Use cloud for global planning and edge for local execution.

Example: In a warehouse robot system, central agents may plan routes, while edge agents locally avoid obstacles and handle navigation.

CI/CD for Multi-Agent Systems

Continuous integration and deployment (CI/CD) for multi-agent systems involves managing versioned deployments of agents that may have interdependencies.

Key CI/CD Considerations:

- Agent Isolation: Each agent should have its own test suite and deployment logic.

- Simulation Testing: Use virtual environments to simulate agent interaction before deployment.

- Behavioral Regression Tests: Not just functional tests, but validation of expected behavioral patterns (e.g., no deadlocks, fair resource allocation).

- Model Versioning: Use tools like DVC or MLflow to track model weights and configurations across agents.
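
A behavioral regression test checks a property of the interaction rather than a single function's return value. The sketch below simulates a hypothetical round-robin allocator and asserts that it terminates and shares slots fairly, using Jain's fairness index. The allocator, the 0.95 threshold, and the agent names are all assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, List


def simulate_allocation(agents: List[str], requests: Dict[str, int],
                        slots: int, max_steps: int = 1000) -> Counter:
    """Hypothetical allocator: one slot per agent per round, in order."""
    granted: Counter = Counter()
    pending = dict(requests)
    steps = 0
    while slots > 0 and any(pending.values()):
        if steps >= max_steps:
            # Stands in for deadlock or livelock detection.
            raise RuntimeError("simulation did not terminate")
        for agent in agents:
            if slots == 0:
                break
            if pending[agent] > 0:
                pending[agent] -= 1
                granted[agent] += 1
                slots -= 1
        steps += 1
    return granted


def jain_index(values: List[int]) -> float:
    """Jain's fairness index: 1.0 is perfectly even, 1/n is maximally uneven."""
    total = sum(values)
    if total == 0:
        return 1.0
    return total ** 2 / (len(values) * sum(v * v for v in values))


def test_allocation_is_fair_and_terminates() -> None:
    agents = ["a", "b", "c"]
    granted = simulate_allocation(agents, {a: 10 for a in agents}, slots=9)
    assert sum(granted.values()) == 9  # every slot was handed out
    assert jain_index([granted[a] for a in agents]) > 0.95  # no starvation


test_allocation_is_fair_and_terminates()
```

A test like this can run in CI against each new agent version, so a change that starves one agent fails the build even when every unit test still passes.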

Recommended CI/CD Stack:

- GitHub Actions or GitLab CI for workflow automation

- Docker + Helm for deployment

- Terraform or Pulumi for infrastructure as code

- MLflow + Weights & Biases for model and experiment tracking

Monitoring Tools, Real-Time Metrics, Alerts

Observability is critical in multi-agent AI systems due to their dynamic nature and distributed execution.

Monitoring Categories:

- System-Level Monitoring

- CPU/GPU usage, memory, disk I/O per agent node

- Tools: Prometheus + Grafana, Datadog, New Relic

- Agent-Level Monitoring

- Message counts, queue latency, dropped actions, convergence metrics

- Tools: OpenTelemetry, Ray Dashboard (for Ray-based agents), custom logging pipelines

- Task/Outcome Monitoring

- Success/failure rate, completion times, deviations from optimal plans

- Tools: Elasticsearch for log analysis, Kibana dashboards, LangSmith (for LLM-based agents)

Best Practices:

- Define SLAs/SLOs for agent responsiveness and accuracy

- Implement distributed tracing for cross-agent workflows

- Integrate alerting mechanisms (e.g., PagerDuty, Slack bots) for threshold violations
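
An SLO check on agent responsiveness might look like the sketch below, which computes a nearest-rank percentile over recorded latencies and returns an alert message when the objective is breached. The function names, the sample data, and the 150 ms objective are hypothetical; in practice the samples would come from the metrics backend and the message would be routed to the alerting tool.

```python
import math
from typing import List, Optional


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("no samples recorded")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_latency_slo(samples: List[float], slo_ms: float,
                      pct: float = 95.0) -> Optional[str]:
    """Return an alert message if the percentile latency breaches the SLO."""
    observed = percentile(samples, pct)
    if observed > slo_ms:
        return (f"p{pct:g} latency {observed:.0f} ms "
                f"exceeds SLO of {slo_ms:.0f} ms")
    return None  # within objective, nothing to send


# Hypothetical latencies for one agent: 10, 20, ..., 200 ms.
latencies_ms = [float(v) for v in range(10, 201, 10)]
alert = check_latency_slo(latencies_ms, slo_ms=150.0)
```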

Feedback Loops for Continuous Learning

For dynamic environments, feedback loops allow agents to adapt over time—either through reinforcement learning updates, human feedback, or statistical trend analysis.

Types of Feedback Loops:

- Online Reinforcement Learning:

- Agents receive real-time feedback on their performance and adjust policies continuously (common in MARL setups that train with RLlib on PettingZoo-style multi-agent environments).

- Human-in-the-Loop (HITL):

- Human corrections are logged and used for fine-tuning agents. Often used in critical systems (e.g., clinical AI, autonomous driving).

- Self-Evaluation Loops:

- Agents generate post-task evaluations or critiques, then adjust next-step behavior accordingly (common in LLM-based planners using reflection techniques).

Implementation Tools:

- RLlib’s rollout and evaluation hooks

- LangChain + Feedback Tracer for LLM agent introspection

- ChromaDB/FAISS for memory updates based on past performance

Pitfalls to Avoid:

- Unbounded learning loops can cause divergence or instability

- Feedback needs validation mechanisms to avoid reinforcing noise or bias
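
Both pitfalls can be mitigated with a small guard around each update. The sketch below is one possible approach, not a prescribed method: it ignores feedback batches that are too small, uses the median so a few noisy signals cannot dominate, and caps the step size so a single batch cannot move a parameter far. The function name and default thresholds are assumptions.

```python
from statistics import median
from typing import List


def validated_update(current: float, feedback: List[float],
                     max_step: float = 0.1, min_samples: int = 5) -> float:
    """Nudge `current` toward the median feedback score, with a capped step."""
    if len(feedback) < min_samples:
        return current  # too little evidence to act on
    target = median(feedback)  # resists a minority of outlier scores
    step = max(-max_step, min(max_step, target - current))
    return current + step


# A single extreme score among otherwise neutral feedback changes nothing.
stable = validated_update(0.5, [0.5, 0.5, 0.5, 0.5, 100.0])

# Consistent feedback moves the value, but only by the capped step.
nudged = validated_update(0.5, [0.9, 0.9, 0.9, 0.9, 0.9])
```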

Deploying a multi-agent AI system at scale requires not just compute power but discipline in infrastructure, observability, and adaptation. From choosing the right deployment model (cloud, edge, hybrid) to implementing robust CI/CD pipelines and learning loops, successful operation hinges on treating your agents as continuously evolving, living software units. With proper monitoring and adaptive capabilities, these systems can function reliably in high-stakes, real-world environments.

12. Case Studies: Real-World Multi-Agent AI Systems

Multi-agent AI systems are rapidly being applied across a wide range of industries, offering solutions that traditional, single-agent systems can’t match. This section explores real-world case studies where multi-agent systems have driven significant improvements, highlighting their effectiveness, ROI, and measurable performance gains.

Case 1: Multi-Agent Supply Chain Optimization

Supply chain management involves a complex network of agents interacting with one another, including suppliers, manufacturers, logistics companies, and retailers. A multi-agent approach helps optimize the flow of goods, reduce delays, and make real-time adjustments based on new data.

How it Works:

In a multi-agent supply chain system, different agents represent various entities such as suppliers, warehouses, transporters, and customers. Each agent has its own goal—minimizing cost, reducing delivery time, or ensuring quality. The agents communicate with each other and cooperate to achieve global supply chain goals.

For example:

- Agent A (Supplier) negotiates the best price and time for the raw materials.

- Agent B (Warehouse) optimizes inventory levels to reduce stockouts.

- Agent C (Transporter) calculates the most cost-efficient route based on current traffic conditions.
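
The warehouse and transporter decisions can be illustrated with two small functions. Everything in this sketch is hypothetical: the reorder rule, the traffic multiplier, and the route figures are invented for illustration and do not describe any particular product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Route:
    name: str
    base_cost: float
    traffic_multiplier: float  # 1.0 means free-flowing traffic

    @property
    def cost(self) -> float:
        return self.base_cost * self.traffic_multiplier


def warehouse_reorder_qty(on_hand: int, reorder_point: int,
                          target_level: int) -> int:
    """Agent B: top up to the target level once stock hits the reorder point."""
    if on_hand <= reorder_point:
        return target_level - on_hand
    return 0


def transporter_pick_route(routes: List[Route]) -> Route:
    """Agent C: choose the cheapest route under current traffic."""
    return min(routes, key=lambda route: route.cost)


routes = [
    Route("highway", base_cost=100.0, traffic_multiplier=1.5),
    Route("backroad", base_cost=120.0, traffic_multiplier=1.0),
]
chosen = transporter_pick_route(routes)
order_qty = warehouse_reorder_qty(on_hand=20, reorder_point=30, target_level=100)
```

Each agent optimizes its own objective here; a full system would add the negotiation and message exchange that align those local choices with the global goal.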

Key Metrics:

- Efficiency: Real-time demand forecasting with adaptive agents leads to 10-15% better resource allocation, reducing overstocking and stockouts.

- ROI: Businesses have reported a 20-25% reduction in operational costs due to optimized transport routes and reduced waste.

- Learning Gains: Multi-agent systems in supply chains can learn from disruptions and continuously adapt to market fluctuations, leading to improved decision-making over time.

Real-World Example:

- IBM Watson Supply Chain: Uses AI to create a cognitive, data-driven supply chain. Watson’s AI agents monitor and adjust inventory, pricing, and delivery schedules in real-time, reacting to disruptions like weather, traffic, or demand shifts.

Case 2: Smart Grid Management