What is an AI Support Agent?

An AI support agent is a software-driven system that autonomously handles customer queries across digital channels such as web chat, email, WhatsApp, and SMS using artificial intelligence, primarily large language models (LLMs). Unlike simple chatbots that rely on pre-written scripts or decision trees, AI agents use natural language understanding (NLU), contextual memory, and retrieval-augmented generation (RAG) to engage in meaningful, dynamic conversations.

These agents are capable of recognizing user intent, extracting relevant entities, retrieving data from integrated knowledge bases or APIs, and delivering accurate, human-like responses—all in real time. Whether assisting a customer with order tracking, resetting a password, or resolving billing disputes, AI agents are designed to simulate the experience of speaking with a trained human agent.

Business owners often ask, “Can I use GPT-based AI to automate my customer service?” The answer is yes—but only if it’s implemented with clear objectives, proper data sources, and structured fallback logic. Modern AI support agents can deflect up to 70% of Tier-1 and Tier-2 queries when properly trained and deployed.

AI Agents vs Traditional Chatbots

To understand the magnitude of this transformation, it’s important to distinguish AI agents from the earlier generation of customer support tools—namely, traditional chatbots. While both serve the goal of automating interactions, their underlying architecture, capabilities, and outcomes are fundamentally different.

Traditional chatbots operate on predefined rules, decision trees, or keyword triggers. If you’ve ever typed a question and received a frustrating “Sorry, I didn’t understand that” response, you’ve experienced the limitations of a rule-based bot. These systems can only respond to inputs that precisely match their scripted logic. They struggle with ambiguous phrasing, don’t retain context across multiple turns, and fail when the conversation veers off the expected path.

In contrast, AI agents—especially those powered by large language models—are dynamic, adaptive, and capable of true natural language understanding. Instead of rigid scripts, they rely on intent recognition and semantic similarity to interpret user inputs. They maintain context across multiple exchanges, understand compound queries, and retrieve accurate answers from integrated knowledge bases, CRMs, or APIs in real time.

For example, when a customer types, “I want to cancel my plan and get a refund,” a rule-based bot may not handle this properly because it involves two separate intents. An AI agent, on the other hand, can process both actions in a single interaction, confirming the cancellation and initiating the refund while keeping the user informed throughout.

Moreover, traditional chatbots are often limited to a single channel, such as a website widget. AI agents are designed for omnichannel environments—they can operate across web chat, mobile apps, WhatsApp, SMS, and even voice platforms. This flexibility makes them suitable for businesses that aim to support users on their preferred channels without compromising on consistency or speed.

Another key difference lies in adaptability. Traditional bots are static and require manual updates to evolve. AI agents, by contrast, improve over time through data-driven refinements and supervised learning. They can be updated quickly with new product information or FAQs and fine-tuned to adapt to changing business rules or language patterns.

In short, traditional chatbots are rule followers. AI agents are problem solvers. And as customer expectations shift toward real-time, intelligent, and personalized experiences, only the latter can keep up with the scale and complexity of modern support demands.

The Strategic Importance of Automating Tier-1 and Tier-2 Support

Customer service teams are chronically overwhelmed. In a typical SaaS or eCommerce environment, Tier-1 tickets (basic inquiries) can account for roughly 60–80% of total volume: questions like “Where’s my order?” or “How do I reset my password?” Tier-2 tickets (moderately complex issues) may involve refund eligibility, subscription downgrades, or product troubleshooting.

Hiring humans to manage these repetitive interactions is costly, inconsistent, and unsustainable at scale. According to McKinsey, companies that implement AI support systems can reduce customer service costs by up to 30% while improving first response time and customer satisfaction scores (CSAT).

By automating Tier-1 and Tier-2 support:

- Businesses reduce response time from hours to seconds

- Agents are freed to focus on complex, high-empathy issues

- Support capacity scales without linear cost increase

- Customers receive instant, accurate resolutions 24/7

Many companies wonder, “Which support queries should be automated first?” Start with high-frequency, low-complexity requests like tracking orders, modifying subscriptions, account verification, and general FAQs—these deliver the fastest ROI with minimal risk.

LLMs: A Breakthrough in Conversation Quality

The rise of Large Language Models (LLMs)—such as OpenAI’s GPT-4o, Anthropic’s Claude, and Google’s Gemini—has radically changed what’s possible in customer service automation.

Unlike older NLP systems that required extensive training on narrow intent libraries, LLMs:

- Understand user input in natural, conversational phrasing

- Can generate answers dynamically instead of selecting from canned responses

- Can pick up new tasks from a handful of in-prompt examples (few-shot prompting), often without any fine-tuning

- Handle multi-turn, branching logic without hardcoded flows

For example, a GPT-4-powered agent can generate empathetic responses like:

“I completely understand how frustrating that must be. Let me check your delivery status right now…”

This is a far cry from the robotic tone of most bots. Additionally, LLMs can use vector search and RAG techniques to retrieve precise answers from product manuals, knowledge bases, and FAQs—creating a hybrid system that balances generative flexibility with grounded accuracy.

An emerging concern is: “Can LLMs hallucinate?” Yes—and this is where structured prompt engineering, guardrails, and retrieval-based answering systems play a critical role. A well-architected AI agent does not rely solely on generation; it verifies responses against known data sources before responding.

Why Most Customer Support is Ripe for AI Disruption

The global business environment is undergoing a paradigm shift. Customers now expect 24/7 availability, instant replies, and personalized resolutions. But most support teams struggle to keep up due to:

- Increasing interaction volumes across web, app, and messaging

- Labor shortages and agent attrition

- Inconsistent service quality across time zones

- Rising operational costs in scaling human teams

AI agents are emerging as the only sustainable answer. Gartner estimates that by 2026, over 80% of customer interactions will be handled by AI—either entirely or through hybrid models. Companies that delay adoption risk falling behind on both cost efficiency and customer satisfaction.

Consider the real-world case of Klarna, the fintech giant. According to the company's February 2024 announcement, its OpenAI-powered AI assistant handled two-thirds of Klarna's customer service chats in its first month. It cut average resolution time from 11 minutes to under 2 minutes and matched human agents on customer satisfaction.

The implications are clear: AI agents aren’t just support tools—they are becoming core infrastructure for customer-centric businesses.

2. Market Size, Growth, and Industry Trends

Global Market Outlook for AI in Customer Service

The global AI for customer service market is valued at USD 12.06 billion in 2024. According to a MarketsandMarkets report, it is projected to reach USD 47.82 billion by 2030, a compound annual growth rate (CAGR) of 25.8% over the 2024–2030 forecast period.
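The quoted growth rate can be sanity-checked with the standard CAGR formula, (end value / start value)^(1 / years) - 1. Below is a minimal Python sketch that plugs in the report's 2024 and 2030 figures, treating 2024 to 2030 as six compounding years.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market size figures quoted above, in USD billions.
rate = cagr(12.06, 47.82, 2030 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 25.8%
```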

Key Drivers Behind the Growth of AI-Powered Support

Several macro and microeconomic factors are converging to make AI support agents indispensable across industries. Below are the most significant drivers accelerating this shift:

1. Rising Customer Expectations for 24/7 Support

Modern consumers expect instant, around-the-clock responses across every digital touchpoint: web, app, WhatsApp, email, or even SMS. According to Salesforce’s State of the Connected Customer report, 78% of consumers expect consistent interactions regardless of time or channel. For global companies, hiring 24/7 human support across time zones is logistically complex and prohibitively expensive. AI agents solve this by offering real-time availability at a fraction of the cost.

2. Explosion in Customer Interaction Volume

As digital channels multiply, so does customer engagement volume. A single user may message a brand on Instagram, file a ticket on Zendesk, and follow up via WhatsApp—all within hours. Businesses are now handling tens of thousands of micro-interactions daily. Manual triage and human response systems simply cannot scale to meet this volume without automation.

3. Labor Cost Pressures in Tier-1 Support

Tier-1 support typically handles repetitive inquiries like account access, shipping status, returns, or basic how-to questions. These roles are labor-intensive but deliver little value per interaction. By automating Tier-1 workflows, companies reduce dependency on large agent teams, improve response time, and optimize cost per ticket. In high-cost geographies like the U.S. and EU, the ROI of deploying AI agents can become evident within weeks.

4. Maturity of LLM-Based Tools and Frameworks

Several developments have made it far easier for companies to build intelligent, personalized AI agents:

- LLM APIs (such as OpenAI’s GPT-4o)
- Agent frameworks (such as LangChain and AutoGen)
- RAG-based architectures

These tools allow for hybrid workflows that combine generative conversation with structured logic and real-time API lookups. The previous barrier to entry (needing a full NLP team) has largely been replaced by accessible developer tools and hosted platforms.

Industry Adoption Trends by Sector

Early adoption patterns indicate a clear sequence of industries moving into AI-first support models.

1. SaaS and eCommerce

These sectors were among the first to automate repetitive support. Subscription changes, onboarding, plan upgrades, and order management are ideal use cases. Shopify, Intercom, and Drift have all launched LLM-powered agents to reduce churn and scale customer engagement.

2. Fintech and Travel

With high ticket volumes and real-time urgency, fintech apps and online travel agencies are leaning into AI support to handle KYC queries, transaction disputes, itinerary changes, and booking issues. Companies like Klarna and MakeMyTrip have already demonstrated significant gains using GPT-powered agents.

3. Healthcare, Insurance, and Telecom

Historically slower to adopt automation due to compliance requirements and complexity, these industries are now entering the AI support space:

- In healthcare, AI agents assist with appointment reminders and pre-authorizations.
- In insurance, they manage claims status and policy queries.
- Telecoms are automating SIM activation, plan upgrades, and outage reporting with AI.

For decision-makers wondering “Is my industry too complex for AI support?”—the trend suggests no. Even regulation-heavy sectors are now safely adopting AI agents by building guardrails into design and enforcing fallback-to-human mechanisms.

AI Agents’ Role in 2030 Customer Service Models

By the end of this decade, AI agents are expected to become the default first responder in customer support stacks.

- Analysts predict that by 2030, AI support agents will handle up to 70% of Tier-1 and Tier-2 queries in mid to large enterprises.

- This automation is forecasted to reduce the average cost per resolution by 60–80%, according to a report by Deloitte.

- AI agents will not only triage and resolve tickets—they’ll also personalize support by referencing CRM data, analyzing past behaviors, and applying customer segmentation logic in real time.

What was once a chatbot-driven FAQ experience will evolve into a full-service AI concierge, capable of resolving complex tasks like subscription negotiations, returns, proactive upselling, and compliance disclosures—without human intervention.

The Strategic Imperative: Automate or Lag Behind

The question for modern enterprises is no longer “Should we use AI for support?”—but rather “What’s the cost of not using it?”

Firms that fail to integrate AI into their support operations risk:

- Slower resolution times and higher customer churn

- Poor CSAT/NPS due to inconsistent service levels

- Rising operational costs with diminishing returns

- Brand erosion from inefficient or unavailable support

Meanwhile, AI-first companies will outpace competitors by delivering scalable, 24/7, personalized service—at a fraction of the cost.

In an era where customer support is a core brand differentiator, building and deploying an AI agent is no longer optional. It’s a strategic imperative.

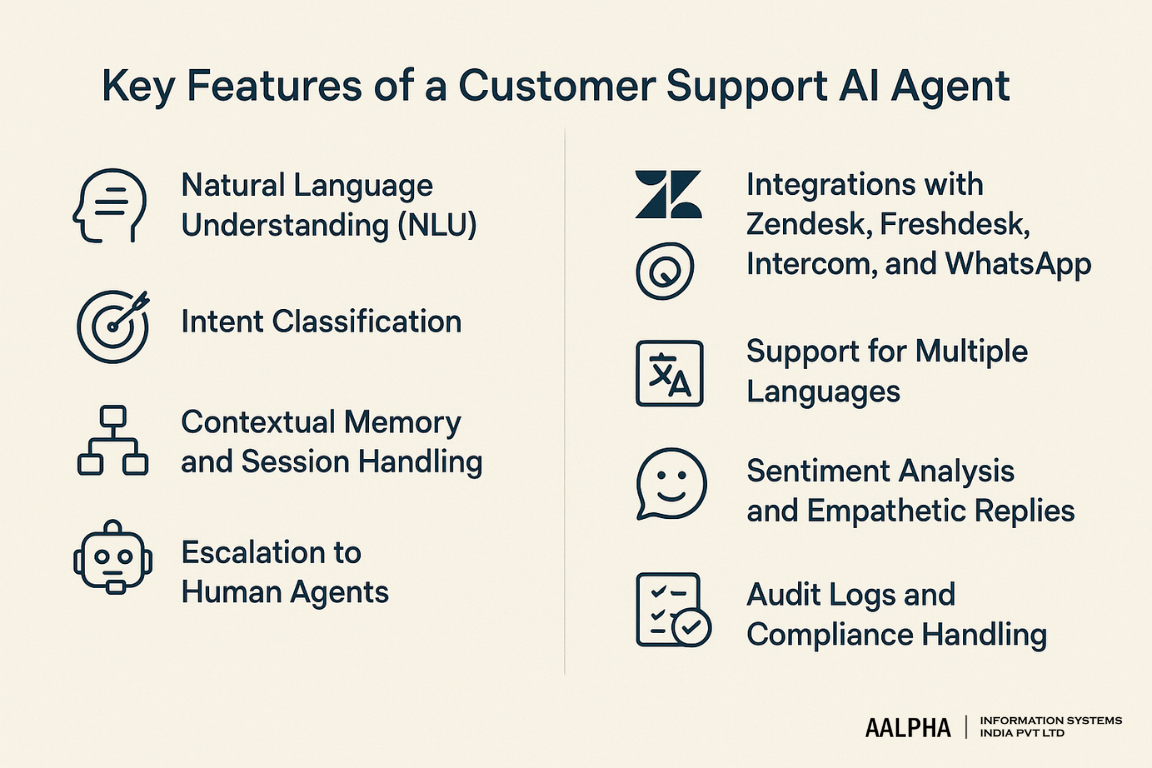

3. Key Features of a Customer Support AI Agent

As businesses begin to transition from human-centric to AI-augmented support systems, understanding the technical foundation of an effective AI support agent becomes essential. While some companies attempt to deploy rudimentary chatbots, modern customer expectations demand far more: intelligent, context-aware, multichannel agents that resolve issues in real time while preserving tone, accuracy, and compliance.

Below are the essential capabilities that every serious AI support agent must offer—regardless of industry or channel.

1. Natural Language Understanding (NLU)

At the heart of any AI support agent lies natural language understanding (NLU)—the ability to accurately interpret user inputs expressed in conversational language. Customers rarely phrase their issues like database queries. Instead, they write or speak informally, with slang, abbreviations, or incomplete sentences.

A robust NLU system deciphers the underlying meaning of user statements. For example:

- “Hey, I need help with my bill from last month—it looks off.”

- “My order still hasn’t arrived. What’s the deal?”

- “Can you cancel the blue T-shirt but keep the rest?”

In each case, the AI must extract actionable meaning: identify the intent (billing issue, delivery status, partial order cancellation), pull relevant entities (dates, product types), and route the request accordingly.

LLMs like GPT-4o, Claude 3, or open-source alternatives like Mistral and LLaMA 3 have made NLU significantly more powerful by enabling semantic parsing without rigid keyword matching. This allows agents to understand nuance, tone, and mixed intents with much higher accuracy.

2. Intent Classification

Intent classification is the process of identifying what a customer wants to accomplish. While NLU parses the input, intent classification categorizes it into predefined support categories like:

- Request refund

- Track shipment

- Reset password

- Cancel subscription

- Upgrade plan

- Report issue

Well-designed support agents use multi-intent classification to handle complex or compound inputs. For instance, if a customer types, “I want to cancel my order and get a refund,” the agent should recognize and trigger both cancellation and refund workflows.

Intent classifiers are often built using transformer models fine-tuned on industry-specific support data, augmented with rule-based validation for higher precision. Tools like LangChain, Rasa NLU, and Dialogflow CX support custom intent classification pipelines.

When companies ask, “How do I make sure the AI handles the right query?”—this is the layer where most of that accuracy lives.
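The combination described above can be sketched in Python: a model proposes intents, and a rule-based validation layer accepts them. The sketch assumes the LLM has been prompted to return JSON of the form `{"intents": [{"name": ..., "confidence": ...}]}`. The intent catalogue, the JSON shape, and the 0.7 threshold are all hypothetical. The function only validates that output; it does not call any model.

```python
import json

# Hypothetical intent catalogue; substitute your own support categories.
ALLOWED_INTENTS = {
    "request_refund", "track_shipment", "reset_password",
    "cancel_subscription", "cancel_order", "upgrade_plan", "report_issue",
}


def validate_intents(raw_model_output: str, min_confidence: float = 0.7) -> list:
    """Keep only known, sufficiently confident intents from a model's JSON output."""
    try:
        payload = json.loads(raw_model_output)
    except json.JSONDecodeError:
        return []  # unparseable output: treat as "no intent" and use the fallback flow
    intents = payload.get("intents") if isinstance(payload, dict) else None
    if not isinstance(intents, list):
        return []
    accepted = []
    for item in intents:
        if not isinstance(item, dict):
            continue
        name = item.get("name")
        confidence = item.get("confidence", 0.0)
        # Rule-based validation: reject unknown labels, low scores, and duplicates.
        if (
            isinstance(name, str)
            and name in ALLOWED_INTENTS
            and isinstance(confidence, (int, float))
            and confidence >= min_confidence
            and name not in accepted
        ):
            accepted.append(name)
    return accepted


# A compound request ("cancel my order and get a refund") should yield two intents.
model_output = (
    '{"intents": [{"name": "cancel_order", "confidence": 0.94},'
    ' {"name": "request_refund", "confidence": 0.88},'
    ' {"name": "order_pizza", "confidence": 0.99}]}'
)
print(validate_intents(model_output))  # ['cancel_order', 'request_refund']
```

Keeping validation outside the model means an unexpected label (here `order_pizza`) can never trigger a workflow, however confident the model claims to be.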

3. Contextual Memory and Session Handling

True customer support requires contextual continuity. That means the AI agent should remember what was said earlier in the conversation and respond appropriately without forcing the user to repeat themselves.

For example:

- Customer: “I’m still waiting for my return label.”

- AI Agent: “I see we sent it on July 8. Let me resend it now.”

To achieve this, the agent must:

- Store key entities and decisions throughout the session

- Handle pronouns, ellipses, and implied references

- Resume interrupted conversations seamlessly

Modern frameworks use short-term session memory and long-term user memory, often stored in Redis, PostgreSQL, or vector databases, to maintain context. Session handling is especially critical in asynchronous channels like WhatsApp or SMS, where a user may return hours later expecting continuity.
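Below is a minimal Python sketch of short-term session memory with idle expiry. A plain dictionary stands in for Redis here; the sketch does not use any Redis client API. The six-hour TTL and the `wa:` user-ID prefix are hypothetical choices for illustration.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Session:
    last_seen: float
    entities: dict = field(default_factory=dict)  # e.g. {"order_id": "1234"}
    turns: list = field(default_factory=list)     # (role, text) pairs


class SessionStore:
    """In-memory stand-in for a Redis-style session cache with idle expiry."""

    def __init__(self, ttl_seconds: float = 24 * 3600, clock=time.time):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested deterministically
        self._sessions = {}

    def get(self, user_id: str) -> Session:
        now = self.clock()
        session = self._sessions.get(user_id)
        if session is None or now - session.last_seen > self.ttl:
            session = Session(last_seen=now)  # new or expired: start a fresh context
            self._sessions[user_id] = session
        return session

    def record_turn(self, user_id: str, role: str, text: str, **entities) -> Session:
        session = self.get(user_id)
        session.turns.append((role, text))
        session.entities.update(entities)  # newer values overwrite older ones
        session.last_seen = self.clock()
        return session


fake_now = [0.0]
store = SessionStore(ttl_seconds=6 * 3600, clock=lambda: fake_now[0])
store.record_turn("wa:+15550100", "user", "I'm still waiting for my return label.", order_id="1234")
fake_now[0] = 3 * 3600   # user replies on WhatsApp three hours later
print(store.get("wa:+15550100").entities)  # {'order_id': '1234'}
fake_now[0] = 10 * 3600  # ten hours after the last turn: beyond the 6-hour TTL
print(store.get("wa:+15550100").entities)  # {}
```

The TTL is the main design lever. If it is too short, WhatsApp and SMS users lose context between replies. If it is too long, stale entities leak into unrelated conversations.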

4. Escalation to Human Agents

Even the best AI agents will encounter edge cases—especially for emotional, legal, or highly technical issues. In these moments, the agent must gracefully hand off the session to a human without losing the conversation context.

A seamless escalation flow includes:

- Triggering based on confidence threshold or sentiment score

- Transferring chat history and context to the live agent

- Notifying human reps via tools like Zendesk, Freshchat, Intercom

- Offering the customer clear information about the escalation status

It’s common for users to ask, “Can I talk to someone real?” Your AI agent should recognize such intents and respond without frustration or deflection. Human fallback is not a weakness—it’s a trust-preserving mechanism and a critical feature for enterprise-grade AI deployment.
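The escalation triggers listed above can be combined into one decision function. The Python sketch below is a minimal version. It assumes the upstream classifiers supply an intent confidence in [0, 1] and a sentiment score in [-1, 1]. The thresholds and handoff phrases are hypothetical and would need tuning against your own transcripts.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrases that signal an explicit request for a human.
HANDOFF_PHRASES = ("talk to a human", "real person", "someone real", "live agent")


@dataclass
class TurnSignals:
    intent_confidence: float  # 0..1, from the intent classifier
    sentiment: float          # -1 (very negative) .. 1 (very positive)
    user_text: str
    failed_attempts: int = 0  # consecutive turns the agent could not resolve


def escalation_reason(s: TurnSignals, min_confidence: float = 0.6,
                      min_sentiment: float = -0.5, max_failures: int = 2) -> Optional[str]:
    """Return why the turn should go to a human, or None to let the agent continue."""
    text = s.user_text.lower()
    if any(phrase in text for phrase in HANDOFF_PHRASES):
        return "explicit_request"      # never deflect a direct ask for a person
    if s.sentiment <= min_sentiment:
        return "negative_sentiment"
    if s.intent_confidence < min_confidence:
        return "low_confidence"
    if s.failed_attempts >= max_failures:
        return "repeated_failure"
    return None


print(escalation_reason(TurnSignals(0.9, 0.1, "Can I talk to someone real?")))  # explicit_request
print(escalation_reason(TurnSignals(0.92, 0.0, "Where is my order?")))          # None
```

Returning a reason rather than a boolean lets the handoff message to Zendesk, Freshchat, or Intercom state why the conversation was transferred.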

5. Integrations with Zendesk, Freshdesk, Intercom, and WhatsApp

To function in real-world operations, AI agents must plug into the existing customer support infrastructure. This includes:

- Helpdesk platforms (Zendesk, Freshdesk, Zoho Desk)

- CRM tools (HubSpot, Salesforce, Pipedrive)

- Communication channels (WhatsApp Business API, web chat, in-app messaging)

- Order management systems (Shopify, WooCommerce, Magento)

Integrations enable the agent to retrieve tickets, update orders, send refund confirmations, or create support cases without requiring human assistance. For example:

- A customer says, “Cancel order #1234,”

- The AI connects to Shopify or WooCommerce via API, checks status, initiates cancellation, and sends confirmation.
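The cancel-order flow above can be sketched in Python. The agent extracts the order number, checks the status, and only then acts. `OrderClient` is a hypothetical adapter interface, not the Shopify or WooCommerce API. A real adapter would wrap your platform's authenticated endpoints. The rule that only unshipped orders can be cancelled is also an assumption.

```python
import re
from typing import Optional, Protocol


class OrderClient(Protocol):
    """Hypothetical adapter over your commerce platform's order API."""

    def get_status(self, order_id: str) -> Optional[str]: ...
    def cancel(self, order_id: str) -> None: ...


# Assumption: only orders that have not shipped can be cancelled outright.
CANCELLABLE_STATUSES = {"pending", "unfulfilled"}


def handle_cancel_request(message: str, orders: OrderClient) -> str:
    match = re.search(r"#?(\d{3,})", message)  # naive order-number extraction
    if match is None:
        return "Could you share your order number so I can look it up?"
    order_id = match.group(1)
    status = orders.get_status(order_id)  # check status before acting
    if status is None:
        return f"I couldn't find order #{order_id}. Could you double-check the number?"
    if status not in CANCELLABLE_STATUSES:
        return f"Order #{order_id} is already {status}, so it can't be cancelled, but I can start a return."
    orders.cancel(order_id)
    return f"Order #{order_id} has been cancelled."


class FakeOrders:
    """In-memory stand-in used only for this example."""

    def __init__(self, statuses):
        self.statuses = dict(statuses)

    def get_status(self, order_id):
        return self.statuses.get(order_id)

    def cancel(self, order_id):
        self.statuses[order_id] = "cancelled"


orders = FakeOrders({"1234": "unfulfilled", "5678": "shipped"})
print(handle_cancel_request("Cancel order #1234", orders))  # Order #1234 has been cancelled.
```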

Multichannel integration also means users can start a conversation on WhatsApp and continue it on the web without starting over. This interoperability is central to omnichannel support strategies.

6. Support for Multiple Languages

In a global market, multilingual support is no longer optional. AI agents must handle user queries in the customer’s native language—whether it’s Spanish, Hindi, Arabic, French, or Tagalog—without degrading performance.

LLMs have significantly improved the ability to understand and respond in dozens of languages natively. For critical support use cases, it’s recommended to:

- Pair multilingual LLMs with localized content sources

- Set fallbacks for unsupported languages or unknown dialects

- Offer real-time translation when direct handling isn’t possible

Multilingual capability also increases inclusivity and accessibility, enabling businesses to serve underserved segments without hiring multi-language support teams.
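The fallback recommendations above amount to a small routing decision, sketched below in Python. The sketch assumes a language-detection step already returns an ISO 639-1 code and a confidence score. Both language sets and the 0.8 threshold are hypothetical configuration.

```python
from typing import Optional

# Hypothetical configuration: languages with localized knowledge-base content,
# and languages the real-time translation layer can cover.
NATIVE = {"en", "es", "fr", "hi", "ar"}
TRANSLATABLE = NATIVE | {"tl", "pt", "de"}


def choose_language_strategy(detected: Optional[str], confidence: float,
                             min_confidence: float = 0.8) -> str:
    """Decide how to answer: natively, via translation, or by falling back."""
    if detected is None or confidence < min_confidence:
        return "ask_preferred_language"      # unknown dialect or uncertain detection
    if detected in NATIVE:
        return "answer_natively"             # multilingual LLM + localized sources
    if detected in TRANSLATABLE:
        return "translate_via_pivot"         # answer from English sources, translate the reply
    return "handoff_or_default_language"     # unsupported language


print(choose_language_strategy("hi", 0.93))  # answer_natively
```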

7. Sentiment Analysis and Empathetic Replies

Support isn’t just about facts—it’s also about emotional intelligence. An effective AI agent recognizes when a user is frustrated, confused, or angry and adjusts its tone and behavior accordingly.

Sentiment analysis, powered by fine-tuned classification models, can detect:

- Negative emotion (“I’m really disappointed in your service”)

- Urgency (“This is urgent. My account was charged twice!”)

- Confusion (“I don’t understand what you mean by plan limit.”)

Based on these signals, the AI can:

- Use softer, more empathetic language

- Escalate faster to a human

- Prioritize follow-up actions

Empathy isn’t just a nice-to-have—it’s directly correlated with customer satisfaction (CSAT). A cold, robotic response—even if accurate—can alienate users and damage brand trust.

8. Audit Logs and Compliance Handling

For regulated industries like finance, healthcare, or insurance, compliance is non-negotiable. AI agents must maintain transparent logs of all conversations, actions taken, and data accessed.

A compliant AI support system includes:

- Audit trails for all automated interactions

- Data access logs and masking of sensitive fields

- Role-based access controls for human overrides

- Retention policies aligned with GDPR, HIPAA, or local data laws

For example, if a customer requests “Delete all my chat history,” the AI must not only comply but also log this action for audit review.

Additionally, many enterprises are now deploying policy guardrails, such as redaction filters and hallucination detection layers. These reduce the risk that agents generate inappropriate, biased, or non-factual content during support interactions.
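Two of the compliance pieces above, field masking and audit trails, can be sketched with Python's standard library. The regular expressions are deliberately simple assumptions. The card pattern masks any run of 13 to 19 digits (optionally space- or dash-separated) and keeps the last four. Production systems typically use a dedicated PII-detection service. The audit record format is hypothetical.

```python
import json
import re
from datetime import datetime, timezone

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# 13-19 digits, optionally separated by spaces or dashes; captures the last four.
CARD_RE = re.compile(r"\b(?:\d[ -]?){9,15}(\d{4})\b")


def redact(text: str) -> str:
    """Mask emails and card-like numbers before text reaches prompts or logs."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return CARD_RE.sub(lambda m: f"[CARD ****{m.group(1)}]", text)


def audit_entry(session_id: str, actor: str, action: str, detail: str) -> str:
    """Build one append-only audit record as a JSON line (hypothetical schema)."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "session": session_id,
        "actor": actor,             # "ai_agent" or a human agent ID
        "action": action,           # e.g. "delete_chat_history"
        "detail": redact(detail),   # never persist raw PII in the audit trail
    })


print(redact("Card 4111 1111 1111 1111, email jane.doe@example.com"))
# Card [CARD ****1111], email [EMAIL]
```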

An AI agent is more than just a chatbot with a fancy model. It’s a deeply integrated, context-aware system that operates across channels, handles real-world complexity, adapts to user emotion, and works within your compliance boundaries.

When companies ask, “What makes an AI agent production-ready?”—the answer lies in these eight pillars. If even one of them is missing, the system may fail to deliver the efficiency, trust, and consistency that modern customer support demands.

4. Common Use Cases for AI Support Agents

AI agents are now central to customer service operations across industries, from eCommerce and SaaS to healthcare and enterprise IT. While many companies start with FAQ automation, the most impactful gains come from agents handling operationally heavy, high-volume queries that would otherwise overwhelm human support teams. These real-world deployments highlight the most common use cases of AI agents, where they don’t just supplement human agents but take ownership of repetitive, time-sensitive, and scalable tasks.

Below are six of the most common and valuable use cases where AI support agents deliver measurable results—from cost savings to faster resolution times and improved customer satisfaction.

1. Order & Delivery Status (eCommerce and Logistics)

One of the most frequently asked questions in retail and logistics is: “Where is my order?” This query, while simple, accounts for a large share of support volume—especially during high-traffic periods like holiday sales or product launches.

An AI agent integrated with order management systems (Shopify, WooCommerce, or ERP platforms) can instantly:

- Retrieve order details based on email, phone, or tracking number

- Display delivery status with real-time carrier API integration (e.g., Shiprocket, FedEx, Delhivery)

- Notify customers about delays, rescheduling, or failed deliveries

- Escalate issues related to missing or damaged items

These agents eliminate the need for customers to wait in queues or manually search tracking portals, improving the post-purchase experience while reducing strain on Tier-1 support.

2. Technical Troubleshooting (SaaS, IT, and Consumer Electronics)

Support teams often receive vague, technical complaints like:

- “The app crashes when I try to export a PDF.”

- “My internet is connected, but the software says offline.”

- “How do I change my DNS settings on Mac?”

AI support agents trained on product documentation, help articles, and known bug repositories can:

- Guide users through multi-step troubleshooting workflows

- Ask clarifying questions to narrow down the issue

- Escalate only when user frustration or issue complexity exceeds thresholds

- Offer version-specific guidance by identifying the user’s device or software version

In SaaS, these agents can also check backend service status or log files to proactively detect and resolve known issues. For internal IT departments, agents integrated with ticketing systems (like Jira Service Management or Freshservice) can triage issues before assigning them to engineers.

When IT leaders ask, “Can AI handle our Tier-1 tech support?”—this is one of the strongest use cases to start with.

3. Billing, Refunds, and Subscription Management

In subscription-based businesses (SaaS, media, eCommerce), queries related to billing generate high interaction volume:

- “Why was I charged twice this month?”

- “Can I pause my subscription?”

- “I want a refund for last week’s purchase.”

An AI agent with access to billing systems like Stripe, Razorpay, Chargebee, or PayPal can:

- Retrieve invoices and payment histories

- Explain charges, renewal terms, and tax components

- Initiate refunds within business policy limits

- Assist with upgrades, downgrades, and cancellations

- Offer retention options like discounts or trial extensions

Support automation in billing not only reduces costs but also builds transparency and trust. Companies that proactively explain charges and allow instant self-service see lower churn and fewer payment disputes.
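"Within business policy limits" usually means a deterministic check that runs before any refund API is called. Below is a minimal Python sketch. The amount ceiling and 30-day window are hypothetical policy values, and the function only returns a decision; it does not call Stripe, Razorpay, or any other billing system.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class RefundPolicy:
    max_auto_amount: float = 100.0  # hypothetical: larger refunds need human approval
    window_days: int = 30           # hypothetical refund window


def refund_decision(amount: float, purchase_date: date, today: date,
                    already_refunded: bool, policy: RefundPolicy = RefundPolicy()) -> str:
    """Decide whether the agent may refund automatically."""
    if already_refunded:
        return "deny_already_refunded"
    if today - purchase_date > timedelta(days=policy.window_days):
        return "deny_outside_window"
    if amount > policy.max_auto_amount:
        return "escalate_for_approval"  # within policy, but above the automation ceiling
    return "auto_refund"


print(refund_decision(49.0, date(2024, 7, 1), date(2024, 7, 10), already_refunded=False))
# auto_refund
```

Keeping this check in code, rather than in the prompt, means the LLM can explain the decision but cannot override it.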

4. Appointment Rescheduling and Booking Changes

Industries like healthcare, education, and professional services handle a constant stream of appointment-related support:

- “I can’t make it on Monday—can I reschedule to Wednesday?”

- “Is Dr. Patel available this weekend?”

- “Cancel my therapy session for tomorrow.”

AI agents integrated with calendars (Google Calendar, Calendly, or custom booking systems) can:

- Check availability across time slots and providers

- Offer real-time rescheduling options

- Handle cancellations and apply no-show policies

- Send confirmation messages across WhatsApp, SMS, or email

For clinics or coaching businesses, these agents reduce no-shows, improve scheduling efficiency, and eliminate the back-and-forth that frustrates users.

When users ask, “Can I reschedule my appointment without calling?”—AI agents provide that instant self-service capability that today’s users expect.
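Before offering rescheduling options, the agent has to compute open slots from existing bookings. The Python sketch below assumes bookings have already been fetched from Google Calendar, Calendly, or an internal system as (start, end) pairs. It does not call those APIs, and the 30-minute slot length is a hypothetical default.

```python
from datetime import datetime, timedelta


def free_slots(day_start, day_end, booked, slot=timedelta(minutes=30)):
    """Return start times of open slots; booked is a list of (start, end) pairs."""
    open_slots = []
    t = day_start
    while t + slot <= day_end:
        # Half-open overlap test: [t, t + slot) vs [b_start, b_end)
        clashes = any(t < b_end and b_start < t + slot for b_start, b_end in booked)
        if not clashes:
            open_slots.append(t)
        t += slot
    return open_slots


day = datetime(2024, 7, 10)
booked = [(day.replace(hour=9, minute=30), day.replace(hour=10, minute=30))]
slots = free_slots(day.replace(hour=9), day.replace(hour=11), booked)
print([s.strftime("%H:%M") for s in slots])  # ['09:00', '10:30']
```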

5. Internal IT Helpdesk Automation (Enterprise Use)

Enterprises running internal support teams (IT, HR, Ops) often face repetitive questions like:

- “How do I reset my VPN password?”

- “Where can I download the latest onboarding forms?”

- “Is the server under maintenance right now?”

AI agents deployed internally via Slack, Microsoft Teams, or email can:

- Deflect common requests through instant answers or links

- Initiate automated workflows (e.g., password resets via Okta/AD)

- Log tickets in ITSM tools like ServiceNow or Jira

- Route unresolved issues to the right support queues

This internal support automation not only reduces MTTR (Mean Time to Resolution) but also improves employee productivity by removing friction from everyday technical or procedural tasks.

6. B2B Customer Onboarding and Training

For B2B products, onboarding is often complex—requiring the user to complete integrations, setup steps, or compliance checks. Customers frequently ask:

- “How do I connect our CRM to your platform?”

- “What’s the API key setup process?”

- “Where’s the user training module?”

AI support agents trained on product documentation, API guides, and video tutorials can:

- Walk users through onboarding workflows step by step

- Serve personalized help based on user role or account stage

- Provide contextual support inside dashboards via tooltips or chat widgets

- Recommend relevant training resources or upcoming webinars

The result is faster time to value (TTV), lower onboarding drop-off, and reduced load on CSMs and product specialists.

For teams asking, “Can AI reduce onboarding friction for new clients?”—this use case provides a clear path to measurable ROI.

These six use cases represent just the beginning. As AI agents continue to improve, they will take on more complex support workflows, including compliance verification, upsell interactions, and even proactive customer education. The key is to start with high-volume, low-complexity interactions that map cleanly to your support KPIs—and scale from there.

5. Building a Customer Support AI Agent – Step-by-Step Process

Building a high-performing AI agent for customer support is not just about plugging in a language model. It requires a structured approach that combines problem scoping, model selection, knowledge integration, real-world testing, and multi-system orchestration.

Below is a comprehensive, seven-step blueprint to guide you from ideation to deployment, optimized for teams building either in-house or through a service provider.

Step 1: Define Use Cases and Success Goals

Before writing a single line of code, start by clearly answering: What exactly should this AI agent do?

Many businesses fail by trying to build a generalist AI that does everything. The most successful agents are purpose-built for specific, high-volume support scenarios. Focus on Tier-1 and Tier-2 queries that are repetitive, easy to automate, and don’t require emotional nuance or legal decision-making.

Key activities:

- Analyze a representative sample of 100–500 historical support tickets

- Segment by category: billing, orders, troubleshooting, scheduling

- Estimate automation potential (e.g., “60% of refund requests are repeatable”)

- Define fallback conditions where a human should intervene

- Set measurable KPIs: CSAT score, resolution rate, time to first response, deflection percentage

For example, if 40% of your inbound tickets are about order tracking, that becomes the first intent you automate. Success here creates a solid base to expand into more complex use cases.
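The segmentation step can start as simple counting over ticket categories. The Python sketch below assumes each historical ticket has already been labeled with one category, manually or by a classifier. The 10% cut-off for "worth automating first" is a hypothetical threshold, and the ticket mix is illustrative, not real data.

```python
from collections import Counter


def automation_candidates(ticket_categories, min_share=0.10):
    """Rank categories by share of volume and keep those above min_share."""
    counts = Counter(ticket_categories)
    total = sum(counts.values())
    if total == 0:
        return []
    return [(cat, n / total) for cat, n in counts.most_common() if n / total >= min_share]


# Illustrative ticket mix only.
tickets = (["order_tracking"] * 40 + ["billing"] * 25 + ["troubleshooting"] * 20
           + ["scheduling"] * 10 + ["other"] * 5)
for category, share in automation_candidates(tickets):
    print(f"{category}: {share:.0%}")
# order_tracking: 40%
# billing: 25%
# troubleshooting: 20%
# scheduling: 10%
```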

Step 2: Select the Right LLM/NLP Stack

The core intelligence of your agent depends on the language model and orchestration stack you choose. This decision impacts accuracy, latency, cost, and extensibility.

LLM Options:

- OpenAI GPT-4o: High accuracy, strong reasoning, and multimodal capability

- Anthropic Claude 3: Long-context performance, with strong safety and tone control

- Google Gemini 1.5: Strong on retrieval and enterprise integration

- Open-source models: Mistral, LLaMA 3, DeepSeek, Falcon—ideal for cost control and private hosting

NLP Orchestration Tools:

- LangChain: Modular LLM agent architecture with memory, tools, RAG

- Rasa: Intent-based NLP engine with open-source flexibility

- AutoGen: Microsoft’s open-source framework for multi-agent coordination

- Haystack or LlamaIndex: RAG pipelines for document QA

- LangSmith or PromptLayer: Prompt monitoring and evaluation

When business leaders ask, “Which LLM is best for customer support?”—the answer depends on factors like latency tolerance, data privacy needs, budget, and control. For most companies, a hosted model like GPT-4o or Claude 3 is a safe and scalable starting point.

Step 3: Design Intents and Dialog Flows

At this stage, you begin to structure the conversation logic that governs how the agent understands and responds to user messages. This combines natural language understanding (NLU), entity extraction, and flow control.

Key tasks:

- Define primary intents: e.g., “Track Order,” “Reset Password,” “Cancel Subscription”

- Map required entities for each intent: e.g., Order ID, Email, Product Name

- Design fallback phrases: “I didn’t catch that. Could you clarify?”

- Create multi-turn flows: branching based on user answers or input gaps

- Add interrupt handling: if a user switches topic mid-flow

- Use hybrid approach: scripted flows for business-critical processes + LLM for freeform Q&A

Visual design tools like Voiceflow, Botpress, and Dialogflow CX can help non-technical teams collaborate on flow design.

Avoid overcomplicating your flows in v1. Start simple and expand as you observe real user behavior post-deployment.
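The intent-to-entity mapping and fallback phrasing above reduce to a slot-filling loop: ask for the first missing entity, and execute once all are present. Below is a minimal Python sketch. The intent names, entity names, and prompts are hypothetical examples based on the ones listed in this step.

```python
# Hypothetical intent -> required-entity mapping and clarification prompts.
REQUIRED_ENTITIES = {
    "track_order": ["order_id"],
    "reset_password": ["email"],
    "cancel_subscription": ["email", "product_name"],
}
PROMPTS = {
    "order_id": "What's your order number?",
    "email": "Which email address is the account registered to?",
    "product_name": "Which subscription would you like to cancel?",
}
FALLBACK = "I didn't catch that. Could you clarify?"


def next_action(intent, collected):
    """Return ("fallback" | "ask" | "execute", payload) for the current turn."""
    if intent not in REQUIRED_ENTITIES:
        return ("fallback", FALLBACK)
    for entity in REQUIRED_ENTITIES[intent]:
        if not collected.get(entity):
            return ("ask", PROMPTS[entity])  # fill one gap per turn
    return ("execute", intent)


print(next_action("track_order", {}))                    # ('ask', "What's your order number?")
print(next_action("track_order", {"order_id": "1234"}))  # ('execute', 'track_order')
```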

Step 4: Train the Agent on Knowledge Base and Documents (RAG)

To answer factual, policy-based, or procedural queries, your AI agent needs access to your organization’s internal knowledge—product guides, return policies, help docs, and troubleshooting workflows.

This is best achieved via retrieval-augmented generation (RAG), which allows the model to search documents and generate answers grounded in source material.

Implementation flow:

- Document ingestion: PDFs, HTML, Notion pages, Confluence docs, Zendesk articles

- Text chunking: Break large docs into semantically coherent sections (e.g., 500-token blocks)

- Embedding: Convert text chunks into vector format using OpenAI, HuggingFace, or Cohere embeddings

- Vector storage: Use Pinecone, Weaviate, Qdrant, or ChromaDB for scalable semantic search

- Query + retrieval: The retriever embeds the user’s query and returns the 3–5 most similar chunks

- Answer generation: LLM uses retrieved context to formulate answer

- Citation handling: Optionally return source links with responses
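The ingestion, chunking, embedding, and retrieval steps can be sketched in a few lines of Python. This is a toy built on stated assumptions:

- A bag-of-words vector stands in for a real embedding model.
- A plain list stands in for the vector database.
- Chunk size is counted in words rather than tokens.

The final prompt shows one possible way to hand the retrieved context to the LLM.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into overlapping windows of `size` words (a rough proxy for token-based chunks)."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    if not words:
        return []
    stride = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), stride)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. Replace with a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 3) -> List[Tuple[float, str]]:
    """Return the k chunks most similar to the query as (score, chunk), best first."""
    q = embed(query)
    scored = [(cosine(q, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, hits: List[Tuple[float, str]]) -> str:
    """Ground the LLM in the retrieved chunks only."""
    context = "\n---\n".join(chunk for _, chunk in hits)
    return (
        "Answer using only the context below. If the answer is not in it, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

In production, you would embed the chunks once at ingestion time and store them with source metadata. That way the citation step can return links alongside the answer.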

For teams asking, “How do I make sure the AI gives accurate answers?”, this is the most critical architectural piece. RAG reduces hallucinations and makes each answer traceable to the source documents it draws on.

Step 5: Integrate with Tools and Deployment Channels

An AI agent isn’t useful until it’s embedded into your support workflows and customer touchpoints.

Integration layers:

- Helpdesk systems: Zendesk, Freshdesk, Intercom, Gorgias

- CRM and billing: Salesforce, HubSpot, Chargebee, Stripe

- E-commerce platforms: Shopify, WooCommerce, Magento

- Calendars and schedulers: Google Calendar, Calendly, internal booking APIs

- Communication channels:

  - WhatsApp Business API

  - Web chat (Crisp, Drift, custom React widget)

  - SMS (Twilio, Gupshup)

  - In-app support SDKs (mobile and desktop)

Security considerations:

- Scope conversation memory to authenticated sessions identified by secure, expiring tokens

- Mask sensitive data in prompts and logs (emails, card numbers)

- Implement user authentication (OAuth2, JWT) before accessing private data
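For the masking item, here is a minimal sketch that uses regular expressions to redact email addresses and card-like numbers before text reaches the prompt or the logs. The patterns and placeholder labels are assumptions and will miss some formats. A Luhn checksum is applied so that ordinary long reference numbers are less likely to be redacted by mistake.

```python
import re

# Illustrative patterns only; they will not catch every format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")  # 13-19 digits, optional spaces/dashes


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def mask_pii(text: str) -> str:
    """Redact emails and Luhn-valid card numbers before prompting or logging."""
    text = EMAIL.sub("[EMAIL]", text)

    def _mask_card(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        return "[CARD]" if luhn_valid(digits) else match.group()

    return CARD.sub(_mask_card, text)
```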

For developers asking, “How do I connect my support bot to our tools?”—the answer lies in writing middleware APIs that allow the AI agent to query, write, or update records safely via authenticated endpoints.

Step 6: Test with Real User Scenarios

Before launch, your agent must be tested across edge cases, malformed queries, and operational stress loads.

Testing process:

- Simulate 100+ real customer queries across top intents

- Track metrics: confidence score, fallback rate, hallucination frequency, token usage

- Check multi-turn dialog continuity, especially on mobile channels

- Verify all API actions: refund requests, subscription changes, booking updates

- Run security audits: injection testing, output filtering

- Conduct usability testing with support agents or beta users
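The first two testing items can be partly automated with a small regression harness. It replays labeled queries and reports intent accuracy and fallback rate. The keyword classifier below is a hypothetical placeholder that only makes the example runnable. In practice you would pass in your agent’s real intent-detection call.

```python
from typing import Callable, Dict, List, Optional, Tuple

Case = Tuple[str, str]  # (user query, expected intent)


def evaluate(cases: List[Case], classify: Callable[[str], Optional[str]]) -> Dict[str, object]:
    """Replay labeled queries; a None prediction from `classify` counts as a fallback."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = fallbacks = 0
    failures = []
    for query, expected in cases:
        predicted = classify(query)
        if predicted is None:
            fallbacks += 1
        if predicted == expected:
            correct += 1
        else:
            failures.append((query, expected, predicted))
    return {
        "accuracy": correct / len(cases),
        "fallback_rate": fallbacks / len(cases),
        "failures": failures,  # review these before each release
    }


def keyword_classifier(text: str) -> Optional[str]:
    """Placeholder classifier used only to make the example runnable."""
    lowered = text.lower()
    if "order" in lowered:
        return "track_order"
    if "password" in lowered:
        return "reset_password"
    return None
```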

Tools like LangSmith, PromptLayer, or TestMyPrompt can help log, debug, and fine-tune LLM behavior across scenarios.

Plan for an internal alpha followed by a staged beta (5–10% traffic rollout) before full production launch.

Step 7: Launch, Monitor, and Continuously Improve

Going live is just the beginning. To keep your AI agent relevant, accurate, and safe, you must continuously observe and iterate.

Monitoring stack:

- Fallback logs: Identify unhandled intents or repeated clarifications

- Prompt monitoring: Evaluate output consistency and tone

- Session logs: Track average session length, resolution rate, sentiment shifts

- Feedback collection: Let users rate helpfulness or flag incorrect answers

- Analytics: CSAT, containment rate, time to resolution, escalation frequency
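Several of these analytics can be computed directly from session records. The sketch below assumes a hypothetical `Session` record and one common set of definitions. For example, it treats containment as "resolved without a human handoff". Adjust the definitions to match how your helpdesk labels outcomes.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Session:
    resolved: bool               # issue closed by the end of the session
    escalated: bool              # handed off to a human agent
    duration_s: float            # time from first message to resolution or exit
    csat: Optional[int] = None   # 1-5 rating, if the user left one


def summarize(sessions: List[Session]) -> Dict[str, Optional[float]]:
    """Containment, escalation, time-to-resolution, and CSAT over a batch of sessions."""
    if not sessions:
        raise ValueError("no sessions to summarize")
    n = len(sessions)
    contained = [s for s in sessions if s.resolved and not s.escalated]
    ratings = [s.csat for s in sessions if s.csat is not None]
    return {
        "containment_rate": len(contained) / n,
        "escalation_rate": sum(s.escalated for s in sessions) / n,
        "avg_time_to_resolution_s": mean(s.duration_s for s in contained) if contained else None,
        "avg_csat": mean(ratings) if ratings else None,
    }
```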

Improvement loop:

- Weekly review of failed sessions and misunderstood intents

- Add training examples or update prompt structure

- Enrich knowledge base with missing content

- Tweak prompt instructions for tone or verbosity

- Re-embed and re-index content in the vector DB as new material is added or existing docs change

Successful AI teams treat their agents like products—iterating based on data, expanding scope gradually, and maintaining a backlog of improvements.

Building a customer support AI agent isn’t just about connecting an LLM to a chat widget. It’s about orchestrating multiple systems—conversation logic, memory, retrieval, tooling, and monitoring—into a seamless experience that meets real business objectives.

Whether you’re a startup founder asking, “How can I automate our Tier-1 support with AI?” or an enterprise leader exploring LLM adoption, following these seven steps provides a practical roadmap to build a production-grade AI agent from scratch.

6. Tools & Platforms to Build AI Support Agents

The modern AI support agent isn’t built from a single tool—it’s a stack. At the core is a powerful language model (LLM), but true production-grade performance depends on orchestration layers, retrieval infrastructure, deployment channels, and automation logic. Selecting the right tools at each layer is essential for building a scalable, maintainable, and secure support automation system.

This section breaks down the top tools used by leading teams to develop, deploy, and manage customer support AI agents in 2025.

Large Language Models (LLMs)

The LLM is the brain of your support agent. Choosing the right one affects reasoning ability, latency, cost, tone, and contextual accuracy.

1. GPT-4o by OpenAI

- Strengths: World-class reasoning, multi-modal input/output, real-time performance

- Use Case Fit: High-quality customer interactions, dynamic Q&A, empathetic replies

- Pricing: Pay-per-token API pricing; optimized for production-scale workloads

- Notes: Ideal for teams looking for quick deployment with powerful generalization

2. Claude 3 by Anthropic

- Strengths: Long context window (200K tokens), strong alignment and tone control

- Use Case Fit: Knowledge-intensive workflows, RAG pipelines, sensitive brand voice

- Pricing: Comparable to OpenAI; separate models for performance tiers

- Notes: Claude is preferred for use cases requiring precision and guardrails

3. Gemini 1.5 by Google

- Strengths: Very long context window (1M+ tokens), good grounding, Google Workspace integration

- Use Case Fit: Enterprise deployments, internal support agents, multilingual support

- Pricing: Tied to Google Cloud usage; strong SLAs for enterprise teams

- Notes: Gemini integrates well with Google APIs for calendar, Gmail, Docs, and Drive

If you’re asking, “Which LLM is best for support agents?”—start with GPT-4o for general usage, use Claude 3 if tone and accuracy matter most, and consider Gemini if your systems are already on Google Cloud.

Agent Frameworks & Orchestration Tools

Once you’ve selected an LLM, you’ll need an orchestration layer to manage memory, tools, state handling, and external actions.

1. LangChain

- Purpose: Agent orchestration, tool calling, and memory

- Use Case Fit: Complex multi-step agents that interact with APIs, databases, and logic chains

- Ecosystem: Integrates with OpenAI, Pinecone, Chroma, custom tools, LangSmith

- Strengths: Modular, open source, widely adopted in production agents

- Ideal For: Teams building custom multi-agent systems with full control

2. LlamaIndex

- Purpose: Indexing and querying private data sources for RAG

- Use Case Fit: Document-based support agents (knowledge base, policy, SOP)

- Strengths: Built-in support for embeddings, query engines, and multi-source fusion

- Ideal For: Enterprises wanting explainable AI responses backed by citations

3. Rasa

- Purpose: NLU engine and conversation flow management

- Use Case Fit: Intent classification, deterministic logic, open-source flexibility

- Strengths: Built for traditional chatbots; now supports hybrid LLM + rule-based flows

- Ideal For: Enterprises in regulated sectors or with on-prem deployment needs

Agent Builders and UI Platforms

These tools simplify building, testing, and launching support agents, especially for teams without full-stack AI infrastructure.

1. Botpress

- What it does: Visual agent builder with LLM support, integrations, flow designer

- Strengths: Multilingual support, real-time deployment, hosted or self-hosted options

- Use Case Fit: Customer support bots that require rich UI/UX with hybrid LLM logic

- Ideal For: Product teams that want control without deep NLP engineering

2. Flowise

- What it does: Visual interface for LangChain workflows and prompt chains

- Strengths: Easy prototyping of LLM pipelines, vector search, and tool use

- Use Case Fit: MVPs, internal agents, or proof-of-concept deployments

- Ideal For: Early-stage teams or agencies delivering quick AI support prototypes

3. CustomGPT

- What it does: Hosted platform to ingest business docs and auto-generate GPT-based agents

- Strengths: Fast onboarding, no-code interface, easy chat widget embedding

- Use Case Fit: Businesses needing quick AI support for FAQs and document Q&A

- Ideal For: SMBs without technical teams

Frontend Deployment Tools

Once your agent is functional, you’ll need to deliver it to users via web, app, or messaging interfaces.

1. Vercel

- Use Case: Deploying frontend apps (React, Next.js) that host web chat interfaces

- Strengths: Lightning-fast CDN, global edge network, serverless functions

- Best For: Frontend-heavy AI chat apps, customer portals with embedded agents

2. WhatsApp Cloud API

- Use Case: Delivering support agents on WhatsApp (the most used support channel in many regions)

- Strengths: Rich media support, session-based logic, verified business messaging

- Best For: Clinics, eCommerce brands, and logistics companies serving mobile-first audiences

- Notes: Requires Meta Business verification; outside the 24-hour customer service window, businesses can only send pre-approved template messages

Businesses often ask, “Can I deploy AI support on WhatsApp?”—yes, via the official Cloud API with backend session logic and security layers.

Vector Databases (for RAG Search)

Retrieval-augmented generation (RAG) relies on a vector store to fetch semantically relevant documents for the LLM.

1. Pinecone

- Fully managed vector DB with high availability and fast similarity search

- Supports filtering, metadata tagging, and hybrid search (text + vector)

- Scales with multi-tenant workloads, production ready

2. Weaviate

- Open-source vector DB with GraphQL query support

- Native support for hybrid search and schema enforcement

- Strong for self-hosted or privacy-sensitive deployments

LLM developers often ask, “How do I feed my knowledge base into the agent?”—vector databases like Pinecone and Weaviate are the backbone of that architecture.

Middleware Automation: Make.com, n8n, Zapier

To bridge your AI agent with CRMs, billing systems, or internal APIs, middleware tools handle orchestration without full backend builds.

1. Make.com

- Visual low-code interface to automate workflows

- Supports 1,000+ SaaS integrations

- Ideal for building refund workflows, ticket creation, status updates

2. n8n

- Open-source alternative with full backend logic customization

- Excellent for teams with moderate technical skill and data sensitivity

- Preferred for on-prem or hybrid AI agent deployment

3. Zapier

- Fastest to set up but less flexible for complex flows

- Best for MVPs or lightweight support bots that need minimal integrations

When companies ask, “How do I automate workflows behind the AI agent?”—these tools provide the integration glue between front-end conversations and backend actions.

Choosing the right tools to build your AI support agent isn’t about chasing the most hyped platform. It’s about assembling an AI agent tech stack that fits your support volume, infrastructure maturity, and business goals.

From LLMs and retrieval pipelines to frontend delivery and automation logic, every piece plays a role. The best agents are built not by chance, but by deliberate integration of proven tools working in harmony.

7. Real-World Examples and Case Studies

As AI support agents move from concept to competitive advantage, many leading companies have already proven the model at scale. These real-world examples demonstrate how customer support AI is delivering operational efficiency, faster response times, and measurable gains in customer satisfaction.

Whether you’re a startup wondering, “How are other companies using GPT-powered agents?” or an enterprise evaluating LLM deployment, the following case studies offer actionable insights into how AI agents are being built and what results they can deliver.

1. Klarna: Two-Thirds of Customer Chats Handled by AI

Problem

As a fast-growing fintech platform serving millions of users across Europe and the U.S., Klarna faced high volumes of repetitive customer service requests, from payment confirmations and delivery tracking to dispute resolution. With millions of support conversations each month, maintaining human-only operations was increasingly unsustainable.

Solution

Klarna implemented an AI support agent powered by OpenAI’s GPT-4, integrated across its website and app interfaces. The agent was trained to handle a wide range of structured queries while using internal APIs to fetch transaction data and delivery status.

The system employed retrieval-augmented generation (RAG) for policy references and used business logic rules to determine when to escalate to human agents.

Results

- About two-thirds of customer service chats handled by the AI assistant in its first month

- Average time to resolve an errand cut from about 11 minutes to under 2

- 25% fewer repeat inquiries, reflecting more accurate first-contact resolution

- Customer satisfaction scores on par with human agents

Tech Stack

- LLM: OpenAI GPT-4

- Orchestration: LangChain + custom middleware

- Deployment: Klarna app and web chat

- Integration: Internal CRM, payment APIs, logistics platforms

2. Intercom Fin: GPT-4-Powered Tier-1 Support

Problem

Intercom, a customer messaging platform, wanted to offer automated Tier-1 support to its SaaS customers without degrading the tone, speed, or helpfulness that its live agents were known for.

Solution

Intercom launched “Fin,” a GPT-4-powered support agent that sits natively inside its own chat platform. It combines semantic search over the company’s knowledge base with real-time conversation handling.

Fin uses retrieval-augmented generation (RAG) to answer technical questions, interpret user context, and deliver answers backed by documentation. It also integrates with Intercom’s ticketing and inbox system to ensure seamless handoffs.

Results

- Resolution rate of 70% for high-frequency questions

- Average response time reduced to less than 3 seconds

- Improved agent efficiency by reducing workload on human reps by 50%

- Used out of the box by thousands of Intercom’s paying customers

Tech Stack

- LLM: GPT-4 via OpenAI API

- Search: Internal KB + custom vector embeddings

- Frontend: Native Intercom widget

- Monitoring: Fin dashboard with fallback tracking

3. Shopify: Automating Seller Support at Scale

Problem

Shopify, powering millions of online stores, receives massive volumes of merchant support requests ranging from setup and product uploads to billing and returns. As its seller base scaled, so did demand on its human support teams.

Solution

Shopify deployed an AI agent trained to assist sellers with onboarding, store setup, app integrations, and product catalog issues. The agent used both scripted flows for deterministic tasks and LLMs for freeform queries.

It integrated with Shopify’s backend to provide contextual support—showing sellers their store analytics, shipping settings, or pending payouts—all within the chat interface.

Results

- Over 60% of common support queries deflected

- Reduced merchant onboarding time by up to 30%

- Helped new users become “active” faster, improving seller retention

- Human agents were reassigned to high-impact partner success roles

Tech Stack

- LLM: Custom fine-tuned GPT + internal classifiers

- Integrations: Shopify store APIs, order data, shipping modules

- Channels: In-dashboard chat, email replies

- Automation logic: Conditional flows + LLM fallback

4. Freshdesk Freddy AI: Contextual GPT Integration

Problem

Freshworks wanted to differentiate its customer support suite, Freshdesk, by embedding AI into the agent workflow to assist both customers and internal support staff with contextual replies and actions.

Solution

They built Freddy AI, a support automation layer powered by GPT-style LLMs that works behind the scenes in Freshdesk. Freddy surfaces relevant KB articles, auto-suggests replies, and even drafts responses during live chat or email conversations.

For end users, Freddy AI automates interactions like status checks, ticket creation, and FAQ resolution. For agents, it provides recommended next actions and response templates based on historical data.

Results

- 25–40% improvement in agent productivity

- Time-to-resolution cut by 20% for support teams using Freddy

- Customers receive context-aware answers without navigating long articles

- Freddy is now deployed across multiple Freshworks modules including CRM and ITSM

Tech Stack

- LLM: GPT-based custom model

- Deployment: Embedded in Freshdesk and Freshchat

- Integration: CRM, ticketing, analytics modules

- Support flow: Agent-assist + end-user chatbot

From fintech and SaaS to eCommerce and CX platforms, these examples prove that AI agents are not speculative—they are delivering production-ready results at scale. Common elements across all success stories include:

- Clear problem scoping

- Thoughtful LLM integration

- RAG or knowledge retrieval pipelines

- Seamless fallback to human agents

- Continuous feedback and performance monitoring

These case studies offer a blueprint for any company asking, “Will an AI agent actually work in our support stack?” The evidence says yes, when the agent is built and deployed correctly.

8. Technical Challenges and Solutions

Even the most advanced AI agents encounter practical limitations during real-world deployment. From hallucinations and context loss to security vulnerabilities and escalation gaps, these challenges can undermine user trust if left unaddressed. Understanding these risks—and implementing robust solutions—is essential for building reliable, scalable customer support automation.

Here are six of the most pressing technical challenges teams face when launching AI agents, along with proven mitigation strategies.

1. Hallucinations: Preventing Inaccurate or Fabricated Answers

The Problem:

AI agents powered by large language models (LLMs) like GPT-4 or Claude can generate fluent, confident responses—even when the underlying facts are incorrect or fabricated. In customer support, this can lead to dangerous situations: an agent might misstate return policies, provide the wrong refund amount, or invent a nonexistent troubleshooting step.

The Solution:

To prevent hallucinations, most teams now deploy retrieval-augmented generation (RAG). This approach grounds the AI’s responses in actual documentation or databases, reducing the risk of fabricated content.

Best practices:

- Use a vector database (Pinecone, Weaviate) to store product manuals, FAQs, and policy docs

- Include clear instructions in every prompt: “Only answer if relevant context is found”

- Set up fallback behavior: “I wasn’t able to find a verified answer to that”

- Implement confidence thresholds: when the retrieval similarity score (or another confidence signal, such as an intent classifier’s score) falls below a set level, the agent asks for clarification or escalates to a human

If your team is asking, “How do we make sure our AI agent doesn’t say the wrong thing?”—implementing RAG with strict retrieval gating is the first step.
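One way to implement strict retrieval gating is to drop retrieved chunks whose similarity score falls below a threshold. When nothing survives, the agent returns the fallback message, so the LLM is never asked to answer without context. The threshold value and the `generate` callable (standing in for your LLM call) are assumptions. Scores depend on the embedding model, so tune the cutoff on real queries.

```python
from typing import Callable, List, Tuple

FALLBACK = "I wasn't able to find a verified answer to that."


def gated_answer(
    hits: List[Tuple[float, str]],
    generate: Callable[[List[str]], str],
    threshold: float = 0.75,
) -> Tuple[str, bool]:
    """Return (reply, grounded). Only chunks scoring >= threshold reach the LLM."""
    context = [chunk for score, chunk in hits if score >= threshold]
    if not context:
        # Nothing relevant enough: fall back (and optionally escalate) instead of guessing.
        return FALLBACK, False
    return generate(context), True
```

The boolean flag lets the caller count ungrounded turns, which can feed escalation logic or fallback tracking.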

2. Escalation Failures: Missed or Delayed Handoffs to Humans

The Problem:

One of the most common user frustrations is when an AI agent doesn’t recognize that it’s failing—or worse, loops endlessly without offering human help. Poor escalation logic erodes trust and can result in unresolved complaints, churn, or legal exposure.

The Solution:

Build structured, multi-condition escalation logic that recognizes:

- Confidence score below a defined threshold

- Sentiment turning negative or angry

- Explicit escalation phrases: “talk to a person,” “this isn’t helping,” “I need support”

- Multi-turn failures (3 or more failed intents or clarifications)

Once triggered, the agent should:

- Transfer the full conversation history to a live agent (in Zendesk, Freshdesk, Intercom, etc.)

- Provide the customer with estimated wait time and confirmation of escalation

- Pause the bot to avoid further automated messages

A robust fallback design ensures that AI augments—not replaces—human support where it matters most.
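The trigger conditions for escalation can be combined into a single check that runs after every turn. In this sketch, the thresholds, trigger phrases, and the `Turn` record are assumptions. The check separates two confidence levels:

- A hard confidence floor escalates immediately.
- A softer floor escalates only after three consecutive weak turns, matching the multi-turn failure rule.

The handoff itself (transferring history, pausing the bot) would happen in your helpdesk integration once the function returns a non-empty list.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical trigger phrases; extend them from your own transcripts.
ESCALATION_PHRASES = ("talk to a person", "this isn't helping", "i need support", "speak to a human")


@dataclass
class Turn:
    text: str
    confidence: float  # e.g. intent-classifier or retrieval score, 0..1
    sentiment: float   # from your sentiment model, -1 (angry) .. 1 (happy)


def escalation_reasons(
    turns: List[Turn],
    hard_floor: float = 0.2,
    soft_floor: float = 0.5,
    max_failures: int = 3,
    anger: float = -0.5,
) -> List[str]:
    """Return why the latest turn should go to a human; an empty list means keep automating."""
    latest = turns[-1]
    reasons = []
    if latest.confidence < hard_floor:
        reasons.append("confidence_below_floor")
    if latest.sentiment <= anger:
        reasons.append("negative_sentiment")
    if any(phrase in latest.text.lower() for phrase in ESCALATION_PHRASES):
        reasons.append("explicit_request")
    # Count consecutive low-confidence turns (failed intents or clarifications).
    streak = 0
    for turn in reversed(turns):
        if turn.confidence >= soft_floor:
            break
        streak += 1
    if streak >= max_failures:
        reasons.append("repeated_failures")
    return reasons
```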

3. Maintaining Session Integrity and Context

The Problem:

LLMs are stateless by default. They don’t automatically remember previous interactions unless explicitly engineered to do so. This creates issues in support flows where multi-turn logic or user identity is important. Example:

- User: “What’s the status of my last order?”

- AI: “What’s your email address?”

- User: “john@example.com”

- User (later): “Can I cancel it?”

If session memory isn’t properly managed, the AI won’t know what “it” refers to.

The Solution:

Use session memory and user-level context tracking to persist important entities and conversation variables.

Techniques:

- Store key user attributes (email, order ID, language preference) in a session cache (e.g., Redis or Postgres)

- Use structured memory in frameworks like LangChain or Rasa

- Associate unique user sessions via JWT or OAuth tokens in web/WhatsApp flows

- Allow sessions to resume across asynchronous channels (e.g., returning to WhatsApp after 1 hour)

Session integrity is especially critical in mobile-first and WhatsApp-first deployments, where users expect fluid, on-demand continuity.
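Here is a minimal sketch of the session-cache idea. An in-memory dictionary stands in for Redis or Postgres. Redis can expire keys natively, which plays the same role as the TTL here. The session key format, entity names, and pronoun rule are hypothetical. A production agent would more likely pass the stored entities to the LLM as context than pattern-match on "it".

```python
import re
import time
from typing import Callable, Dict, Optional


class SessionStore:
    """In-memory stand-in for a Redis/Postgres session cache; expiry resets on each update."""

    def __init__(self, ttl_seconds: float = 3600, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._data: Dict[str, tuple] = {}  # session_id -> (expires_at, entities)

    def get(self, session_id: str) -> Dict[str, str]:
        entry = self._data.get(session_id)
        if entry is None or entry[0] < self.clock():
            self._data.pop(session_id, None)  # expired or unknown: start fresh
            return {}
        return dict(entry[1])

    def remember(self, session_id: str, **entities: str) -> None:
        merged = self.get(session_id)
        merged.update(entities)
        self._data[session_id] = (self.clock() + self.ttl, merged)


def resolve_order_reference(store: SessionStore, session_id: str, message: str) -> Optional[str]:
    """Map 'it' / 'that order' in a follow-up message to the order discussed earlier."""
    entities = store.get(session_id)
    if re.search(r"\b(it|that order)\b", message.lower()):
        return entities.get("last_order_id")
    return None
```

With a one-hour TTL, a user who returns to WhatsApp within the hour can say "Can I cancel it?" and the agent still knows which order is meant.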

4. Security and Access Control

The Problem:

Support AI agents often handle sensitive data—order details, user profiles, payment references. If not properly secured, they can become vectors for data leaks, privacy violations, or API abuse.

The Solution:

Treat your AI agent like any other user-facing application with access to internal systems. Implement the following controls:

- Authentication middleware: Require login tokens before accessing private user data

- Role-based access control (RBAC): Only expose APIs and actions based on user roles or permission levels

- Input sanitization: Protect against prompt injection and payload manipulation

- Data masking: Redact sensitive data (credit cards, passwords, IDs) from prompts and logs

- Audit trails: Log every interaction and backend call for forensic review
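Role-based access control and audit trails can be enforced in the middleware layer, before any tool call the LLM requests reaches a backend API. In this sketch, the roles, tool names, and ownership rule are hypothetical. The point is that the check runs in your code, not in the prompt. A prompt-injected request therefore still cannot reach an action the user isn't entitled to.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set

# Hypothetical role -> allowed-tool mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "anonymous": {"search_kb"},
    "customer": {"search_kb", "get_order_status", "cancel_order"},
    "support_agent": {"search_kb", "get_order_status", "cancel_order", "issue_refund"},
}


@dataclass(frozen=True)
class Caller:
    user_id: Optional[str]  # None until the user has authenticated
    role: str


def is_authorized(caller: Caller, tool: str, audit_log: List[dict],
                  resource_owner: Optional[str] = None) -> bool:
    """Check role permissions (and record ownership for customers), logging every decision."""
    allowed = tool in ROLE_PERMISSIONS.get(caller.role, set())
    if allowed and caller.role == "customer" and resource_owner is not None:
        # Customers may only act on records they own.
        allowed = resource_owner == caller.user_id
    audit_log.append({"user": caller.user_id, "role": caller.role, "tool": tool, "allowed": allowed})
    return allowed
```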

For regulated sectors (healthcare, fintech), ensure the system complies with GDPR, HIPAA, or applicable standards. If a user says, “Delete my chat history,” the agent should trigger your verified data-deletion workflow and confirm once the deletion is complete.

5. Multi-Language Support Accuracy

The Problem:

AI agents that serve global audiences often fail when handling queries in non-English languages. Even when the model technically supports multilingual input, performance may degrade in terms of tone, grammar, or intent recognition.

The Solution:

Use LLMs with strong multilingual capabilities (Claude 3, Gemini 1.5, GPT-4o) and test performance across languages critical to your user base.

Tactics:

- Fine-tune intent classifiers on multilingual datasets

- Build language detection logic (e.g., LangDetect) into the first step of every flow

- Route users to localized knowledge bases or translated documents via RAG

- Provide a manual language selection fallback: “Would you prefer to continue in Spanish?”

For brands operating in LATAM, India, Southeast Asia, or the Middle East, investing in proper multilingual support is key to accessibility and scale.

6. Live Monitoring and Human Override Systems

The Problem:

AI agents operate autonomously, but without proper oversight, you can’t detect when something breaks—or worse, when a user is receiving incorrect or harmful information.

The Solution:

Implement real-time observability and override systems.

Monitoring stack:

- Prompt monitoring: Use LangSmith, PromptLayer, or custom logs to inspect inputs and outputs

- Fallback tracking: Measure the percentage of queries left unresolved and flag recurring failure patterns for review

- Sentiment dashboards: Alert on spikes in negative tone or user dissatisfaction

- Manual override mode: Allow support managers to take over active sessions or inject corrections into LLM behavior

Some companies now deploy shadow human reviewers for live monitoring of early-stage agents. This hybrid model ensures that even as automation increases, quality control remains human-guided.

The technical success of a customer support AI agent depends on more than just prompt engineering or LLM selection. It requires a holistic architecture that proactively addresses issues of trust, context, data safety, and operational edge cases.

By understanding these six core challenges and implementing the recommended solutions, you can build an AI agent that isn’t just intelligent—but reliable, compliant, and ready for scale.

9. Costs and Timelines

Building a customer support AI agent involves multiple cost layers—model usage, infrastructure, integration, and long-term tuning. While AI APIs make development faster than ever, the true investment comes in orchestration, maintenance, and ensuring the system performs well in real-world customer conversations.

Whether you’re a startup founder asking, “How much does it cost to build an AI agent?” or a CTO evaluating whether to build or buy, this section breaks down the components you need to consider.

Initial Development (Team and Timeline)

A functional, production-ready AI support agent typically takes 6–8 weeks to develop with a small cross-functional team:

Recommended Team:

- 1 LLM Engineer / AI Developer – handles prompt engineering, API calls, and orchestration

- 1 Full-Stack Developer – integrates the agent with CRMs, helpdesks, and web/WhatsApp interfaces

- 1 Product Owner or UX Lead – defines user flows, test cases, and fallback logic

Estimated Timeline:

| Phase | Timeframe |
| --- | --- |
| Scoping & intent design | 5–7 days |
| LLM + RAG setup | 10–14 days |
| API integrations | 10–14 days |
| Frontend deployment (web, WhatsApp, etc.) | 7–10 days |
| Testing, security, and optimization | 7–10 days |

Most teams can launch an MVP in under two months. More advanced versions—multi-agent workflows, enterprise-level compliance, multilingual handling—may stretch to 10–12 weeks.

GPT API Usage vs Open-Source Hosting

Option 1: Using GPT-4o or Claude 3 APIs

- Costs are based on token usage, with input and output tokens billed at different rates (output costs more). For GPT-4o, that works out to a blended rate of roughly $0.005–0.01 per 1,000 tokens, depending on the input/output mix and model version.

- A typical customer support conversation may consume 800–2,000 tokens, or roughly $0.004–0.02 per session.

- For 10,000 monthly sessions, that translates to about $40–200/month in API costs.

- Add-on costs for sentiment analysis, embeddings, or third-party middleware may increase monthly spend by 15–20%.

Pros: Fast setup, minimal infra overhead, access to cutting-edge LLMs

Cons: Recurring cost, limited control, sensitive data leaves your environment
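The token arithmetic above is easy to rerun with your own numbers. This sketch uses a single blended price per 1,000 tokens. That is a simplification, since providers bill input and output tokens at different rates. The values in the usage lines are this section's example figures, not current list prices.

```python
def monthly_api_cost(
    sessions: int,
    tokens_per_session: int,
    usd_per_1k_tokens: float,
    overhead: float = 0.0,
) -> float:
    """Estimate monthly LLM spend: sessions x tokens x blended price, plus add-on overhead (0.2 = 20%)."""
    base = sessions * tokens_per_session / 1000 * usd_per_1k_tokens
    return base * (1 + overhead)


low = monthly_api_cost(10_000, 800, 0.005)    # short sessions at the low rate
high = monthly_api_cost(10_000, 2_000, 0.01)  # long sessions at the high rate
print(f"${low:,.0f} to ${high:,.0f} per month")  # prints: $40 to $200 per month
print(f"with 20% add-ons: ${monthly_api_cost(10_000, 2_000, 0.01, overhead=0.2):,.0f}")
```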

Option 2: Open-Source Self-Hosted Models (Mistral, LLaMA 3)

- No API fees, but compute costs are significant

- Requires GPU server hosting (AWS EC2, Paperspace, or private on-prem hardware)

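- Hardware: a single A100-class GPU is a common choice for smooth inference on mid-sized models (smaller 7–8B models can run on cheaper GPUs), at roughly $2–3/hour, or about $1,460–2,190/month if it runs around the clock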

- Add $200–500/month for devops, monitoring, patching, and uptime management

Pros: Full control, better for data privacy, no vendor lock-in

Cons: High setup and maintenance burden, requires ML ops expertise
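To compare the two options, convert the GPU's hourly rate into a monthly figure. Then ask how many API-billed sessions that same money would buy. This sketch makes two simplifying assumptions: the GPU runs around the clock (about 730 hours per month), and throughput limits are ignored. Treat the result as a rough break-even point rather than a capacity plan.

```python
HOURS_PER_MONTH = 730  # 24 h x 365 days / 12 months, rounded


def self_hosted_monthly(gpu_usd_per_hour: float, ops_usd_per_month: float, gpus: int = 1) -> float:
    """Always-on GPU rental plus fixed devops/monitoring spend."""
    return gpus * gpu_usd_per_hour * HOURS_PER_MONTH + ops_usd_per_month


def break_even_sessions(monthly_budget: float, tokens_per_session: int, usd_per_1k_tokens: float) -> float:
    """Sessions per month at which API spend equals the self-hosted budget."""
    cost_per_session = tokens_per_session / 1000 * usd_per_1k_tokens
    return monthly_budget / cost_per_session


low = self_hosted_monthly(2.0, 200)   # GPU at $2/hour plus $200 ops
high = self_hosted_monthly(3.0, 500)  # GPU at $3/hour plus $500 ops
print(f"self-hosted: ${low:,.0f} to ${high:,.0f} per month")
print(f"break-even at ~{break_even_sessions(low, 2_000, 0.01):,.0f} sessions/month")
```

In this simple model, the hosted API stays cheaper until monthly volume reaches tens of thousands of long sessions. Below that, the case for self-hosting rests mainly on privacy and control rather than cost.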

Vector DB and Hosting Costs

If your agent uses Retrieval-Augmented Generation (RAG)—which it should for accurate support—you’ll need a vector database to store and retrieve your internal knowledge.

Common Options:

| Platform | Starting Cost | Scaling Factor |
| --- | --- | --- |
| Pinecone | ~$50/month for 100K vectors | Based on read/write ops and storage |
| Weaviate (self-hosted) | Free (infra only) | Requires devops and backend setup |
| Chroma (lightweight) | Free, local or server | Ideal for MVPs and internal tools |

In addition, your infrastructure stack may include:

- Hosting (Vercel, AWS, Render): $20–100/month

- Database/API Gateway: $25–50/month

- WhatsApp Cloud API: roughly $0.005–0.07 per billable message or conversation, varying by country and category (if deployed on WhatsApp)

Total recurring infra costs for a small-to-medium deployment: $100–300/month

Maintenance and Continuous Tuning

AI agents are not “set and forget.” You must invest in ongoing maintenance to handle:

- New intents and customer use cases

- Prompt optimizations to reduce errors

- Periodic vector re-indexing for updated knowledge

- System upgrades and fallback tuning

Recommended minimum:

- 8–12 hours/month of prompt tuning and monitoring

- Monthly error review from chat logs and escalation reports

- Quarterly updates to KB, policies, and product workflows

Estimated cost:

- In-house: $500–1,000/month (1 part-time developer)

- Outsourced: $1,000–2,500/month for full-service AI ops & tuning agency

When to Build In-House vs Outsource

Build In-House If:

- You have a dev team with AI/LLM experience

- Your support stack is complex or heavily customized

- Data privacy requires full ownership of infra and workflows

- You want long-term flexibility and scalability

Outsource If:

- You want a fast go-to-market with minimal internal lift

- You have a small team and no in-house AI specialists

- You prefer fixed costs, faster iteration cycles, and SLA-backed delivery

- You’re running an initial pilot and need expert guidance on architecture, LLM selection, and deployment

Aalpha Information Systems offers specialized AI agent development services for businesses looking to automate customer support. With deep expertise in GPT-based agents, RAG pipelines, vector DB integration, and omnichannel delivery (including WhatsApp and web), Aalpha can help you go from idea to production in a matter of weeks—without the burden of hiring, training, or managing an in-house AI team.

Typical outsourcing cost for a full-stack AI support agent (including design, development, LLM integration, testing, and hosting):

- $6,000–12,000 one-time development fee

- $1,000–2,500/month for managed services, monitoring, and continuous tuning

Outsourcing to a trusted partner like Aalpha lets you focus on business outcomes while leaving the technical complexity to a team that does this every day.

An AI support agent doesn’t have to cost six figures. With modern tools and hosted LLM APIs, even lean teams can deploy production-ready systems for under $10,000 in initial build cost and $100–500/month in operational spend.

The key is aligning the build scope with support priorities and choosing between in-house vs outsourced implementation based on your internal capabilities and growth stage.

10. Best Practices for Long-Term Success

Building and launching a customer support AI agent is only the first milestone. The real value emerges through consistent monitoring, iteration, and alignment with user needs over time. Without a post-launch strategy, even the most well-engineered agent will begin to underperform as customer expectations evolve, new edge cases emerge, and your business offerings change.

Here are five best practices that high-performing companies follow to ensure their AI support agents stay accurate, safe, and effective long after deployment.

1. Retrain from User Feedback and Unresolved Sessions

No matter how thorough your initial training, real users will surprise you. They’ll use unexpected phrasing, raise new issues, or introduce corner cases that weren’t in your original intent list or knowledge base.

To keep your agent learning:

- Review chat transcripts from fallback interactions and low-CSAT sessions weekly

- Cluster failed conversations to detect new intents or missing knowledge

- Use real user queries to update training data or expand vector indexes

- Retrain classifiers (if applicable) every 2–4 weeks based on new patterns

Many product teams ask, “How do we make the agent smarter over time?” The answer is simple: listen to what your customers are already telling it—and treat those signals as your most valuable training data.

2. Don’t Over-Rely on Generative Answers

While large language models are excellent at generating fluent, natural-sounding responses, they are not inherently fact-checking machines. Relying solely on generative output from GPT or Claude without context grounding can lead to hallucinations or inconsistent information.

Best practice:

- Use retrieval-augmented generation (RAG) for all factual queries: return policies, warranty conditions, pricing rules, etc.

- Constrain the model through prompt instructions that limit freeform responses: “Answer only if data is found in the provided context.”

- For critical workflows (like refund eligibility or cancellation policy), consider using deterministic logic or hard-coded responses.

Balance flexibility with control. Use generative AI where it adds value—empathy, tone, summarization—but anchor critical answers in your approved content.
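A minimal sketch of that split, assuming a hypothetical refund policy (30-day window, unopened items only) and retrieved passages supplied by your RAG layer. Refund eligibility is decided by plain code, while open-ended questions get a prompt that restricts the model to the supplied context. The policy values, function names, and prompt wording are illustrative assumptions, not any vendor’s API.

```python
from datetime import date
from typing import Optional

# Hypothetical policy: refunds within 30 days for unopened items only.
REFUND_WINDOW_DAYS = 30

NO_ANSWER = "I couldn't find that in our help articles. Let me connect you with a teammate."


def refund_eligible(purchase_date: date, today: date, opened: bool) -> bool:
    """Deterministic rule: no LLM involved, so the same inputs always give the same answer."""
    return (today - purchase_date).days <= REFUND_WINDOW_DAYS and not opened


def build_grounded_prompt(question: str, passages: list[str]) -> Optional[str]:
    """Build a prompt that restricts the model to retrieved context.

    Returns None when retrieval found nothing, so the caller can fall back
    (e.g. send NO_ANSWER or escalate) instead of letting the model improvise.
    """
    if not passages:
        return None
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the customer using ONLY the context below. "
        f'If the answer is not in the context, reply exactly: "{NO_ANSWER}"\n\n'
        f"Context:\n{context}\n\n"
        f"Customer question: {question}"
    )
```

Keeping the refund decision in code means a policy change is a one-line edit that can be unit-tested, while the LLM can still phrase the final reply with the right tone.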

3. Always Offer a Human Fallback

No matter how advanced your AI agent becomes, there will always be edge cases, emotionally sensitive situations, or legal queries that require human intervention. Forcing users through an endless AI loop without a way out is one of the fastest ways to lose trust—and customers.

To maintain user confidence:

- Detect escalation signals like repeated failures, negative sentiment, or explicit handoff phrases (“I want to talk to someone”)

- Provide seamless transfer to human agents with full conversation history

- Let users choose early: “Would you prefer to speak to a human?”

- During off-hours, clearly inform users of expected wait times or alternative contact options

Think of fallback as a feature, not a failure. Smart escalation protects your brand while preserving the efficiency gains of automation.
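The escalation signals listed above can be combined into a simple rule. This sketch assumes each turn carries a hypothetical `sentiment` score from -1.0 (very negative) to 1.0, produced by whatever sentiment classifier you use, plus a flag marking whether the agent fell back on that turn. The phrases and thresholds are placeholders to tune against your own transcripts.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder phrases and thresholds; tune them against real transcripts.
HANDOFF_PHRASES = ("talk to someone", "speak to a human", "real person", "human agent")
MAX_CONSECUTIVE_FALLBACKS = 2
NEGATIVE_SENTIMENT = -0.6  # on an assumed -1.0 (very negative) to 1.0 scale


@dataclass
class Turn:
    user_text: str
    sentiment: float        # assumed output of an external sentiment classifier
    agent_fell_back: bool   # True if the agent could not answer this turn


def escalation_reason(turns: list[Turn]) -> Optional[str]:
    """Return why the conversation should go to a human, or None to keep going."""
    if not turns:
        return None
    last = turns[-1]
    if any(phrase in last.user_text.lower() for phrase in HANDOFF_PHRASES):
        return "explicit_request"
    if last.sentiment <= NEGATIVE_SENTIMENT:
        return "negative_sentiment"
    # Count consecutive fallbacks at the end of the conversation.
    streak = 0
    for turn in reversed(turns):
        if not turn.agent_fell_back:
            break
        streak += 1
    if streak >= MAX_CONSECUTIVE_FALLBACKS:
        return "repeated_failures"
    return None
```

When a reason is returned, the handoff should carry the full `turns` list so the human agent sees the whole conversation instead of asking the customer to repeat themselves.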

4. Use Observability Tools to Monitor and Tune

Without visibility into how your AI agent performs in the wild, you’re flying blind. Errors may go unnoticed, hallucinations may repeat, and user frustration may build before anyone on your team is aware.

To avoid that, implement a robust observability stack:

- LangSmith: Logs and analyzes prompts, inputs, outputs, and token usage

- PromptLayer: Tracks prompt changes, model behavior, and rollback history

- Session analytics: Track success rate, fallback ratio, average handle time, and CSAT

- Escalation heatmaps: Identify where and when users exit the AI flow

- Live transcript reviews: Weekly audit of 20–50 sessions to catch quality issues

These tools help answer critical questions like:

- “Why did the agent hallucinate here?”

- “Which intent fails most often?”

- “What’s the average cost per resolved session?”

Monitoring isn’t just about error catching—it enables continuous improvement at scale.
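As a rough illustration of how logged sessions answer two of those questions, the sketch below aggregates hypothetical session records to find the intent that escalates most often and the average LLM cost per resolved session. The record fields and per-token prices are assumptions; in practice the token counts would come from your tracing tool’s logs and the prices from your model provider’s current rate card.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Placeholder prices in USD per 1,000 tokens; substitute your model's actual rates.
PRICE_PER_1K_INPUT = 0.005
PRICE_PER_1K_OUTPUT = 0.015


@dataclass
class Session:
    intent: str
    escalated: bool
    resolved: bool
    input_tokens: int
    output_tokens: int


def session_cost(s: Session) -> float:
    """LLM spend for one session, based on the placeholder token prices."""
    return (s.input_tokens / 1000) * PRICE_PER_1K_INPUT + (s.output_tokens / 1000) * PRICE_PER_1K_OUTPUT


def most_failing_intent(sessions: list[Session]) -> Optional[tuple[str, int]]:
    """Intent with the most escalations (the hottest cell of an escalation heatmap)."""
    counts = Counter(s.intent for s in sessions if s.escalated)
    return counts.most_common(1)[0] if counts else None


def cost_per_resolved_session(sessions: list[Session]) -> float:
    """Total LLM spend divided by the number of sessions the agent resolved.

    Unresolved sessions still cost money, so they stay in the numerator.
    """
    resolved = sum(1 for s in sessions if s.resolved)
    if resolved == 0:
        return float("inf")
    return sum(session_cost(s) for s in sessions) / resolved
```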

5. Define a Roadmap for V2 and V3 Upgrades

A common mistake is treating AI agent development as a one-time project. In reality, the most successful teams roadmap their agents like software products, with feature rollouts, feedback loops, and future-state planning.

Your post-launch roadmap might include:

- V2: Adding new channels (e.g., WhatsApp, SMS, voice), multilingual support, improved escalation logic

- V3: Integrating with billing, CRM, or shipping APIs to support transactional actions (refunds, updates, order lookups)

- V4+: Multi-agent collaboration, proactive support agents, upsell/cross-sell workflows

Plan quarterly reviews of agent performance, business alignment, and new technical capabilities (e.g., newer GPT or Claude model releases). Budget for ongoing development, just as you would for any mission-critical software product.

Launching an AI support agent is not the end—it’s the beginning of a long-term automation strategy. Treat your agent like a digital team member: onboard it with real data, evaluate its performance regularly, and help it grow over time.

The companies that get this right are the ones that don’t just reduce support costs—they transform support into a brand asset.

11. Future of Customer Support with AI

The AI support agents of today are already capable of handling routine tasks, reducing wait times, and deflecting Tier-1 queries—but what’s coming next will fundamentally reshape the role of customer service in every industry. The future lies in systems that are not only intelligent and scalable but collaborative, emotionally aware, and fully integrated with business operations.

Here are four key shifts shaping the future of AI-driven customer support.

1. Multi-Agent Systems for Layered Support

The next evolution of customer support automation is not a single agent—it’s a network of AI agents, each specialized in a specific domain: billing, technical issues, onboarding, compliance, etc. These agents will collaborate in real time, handing off context to each other like departments in a support team.

Using frameworks like AutoGen, CrewAI, or custom orchestrations in LangGraph, teams can design:

- Tiered agent hierarchies (Level 1 → Level 2)

- Specialist agents that handle API calls, document search, or escalation logic

- Agents that reason about other agents’ outputs before responding

This layered design creates a support system that mirrors human workflows—except faster, available 24/7, and able to scale without hiring more staff.
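Framework APIs differ, so rather than guess at AutoGen, CrewAI, or LangGraph signatures, here is a framework-free sketch of the core idea. A Level 1 triage step routes a conversation to a Level 2 specialist, and every handoff passes the same shared context so nothing is lost. The keyword routing table and the specialist functions are hypothetical placeholders; in a real system each specialist would wrap an LLM call or an API client.

```python
from typing import Any, Callable

Context = dict[str, Any]  # shared conversation state passed between agents


def billing_agent(ctx: Context) -> str:
    ctx["handled_by"].append("billing")
    return f"Billing specialist reviewing the invoice for {ctx['customer_id']}."


def technical_agent(ctx: Context) -> str:
    ctx["handled_by"].append("technical")
    return "Technical specialist checking the error logs."


def human_escalation(ctx: Context) -> str:
    ctx["handled_by"].append("human")
    return "Transferring you to a human agent with the full conversation."


# Level 1 routing table: keyword -> Level 2 specialist (illustrative only).
ROUTES: dict[str, Callable[[Context], str]] = {
    "invoice": billing_agent,
    "refund": billing_agent,
    "error": technical_agent,
    "crash": technical_agent,
}


def triage_agent(message: str, ctx: Context) -> str:
    """Level 1: record the message, pick a specialist, hand off the shared context."""
    ctx["history"].append(message)
    ctx["handled_by"].append("triage")
    for keyword, specialist in ROUTES.items():
        if keyword in message.lower():
            return specialist(ctx)
    return human_escalation(ctx)  # no specialist matched
```

The part worth copying is the shared context object: whichever agent answers sees the same history, which is what lets handoffs feel seamless to the customer.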

2. Sentiment-Aware Agents that Understand Emotion

AI agents are getting better not just at understanding language but also at detecting tone, frustration, and urgency. Sentiment-aware agents will play a critical role in reducing churn and improving customer satisfaction.

They will:

- Detect negative sentiment early and soften responses proactively

- Escalate conversations when emotion exceeds acceptable thresholds

- Adjust tone dynamically based on emotional cues (e.g., “I’m really upset right now”)

- Deliver more human-like empathy without the cost of human staffing

As customers increasingly interact with machines, the ability to maintain emotional intelligence at scale will separate commodity bots from brand-defining experiences.

3. Seamless Integration with CRM, RPA, and Voice Interfaces

The future of support is not just text—it’s full operational integration across all customer-facing systems.

- AI agents will plug directly into CRMs (like Salesforce or HubSpot) to personalize answers using customer history, segmentation, and lifecycle stage.

- They’ll trigger RPA workflows to automate backend processes like updating subscriptions, processing refunds, or running KYC compliance checks without human input.

- And with voice AI improving rapidly (Whisper, Deepgram, ElevenLabs), agents will shift from chat-only to conversational voice assistants—replacing traditional IVR trees with intelligent, spoken interactions.

The result: true end-to-end automation where the customer experience is consistent across chat, email, and phone.

4. From Reactive Support to Proactive Service

Perhaps the biggest shift is that AI will move support from something users request to something that anticipates user needs. Future agents will:

- Monitor product usage and send help before users even ask

- Trigger support based on events—failed transactions, API errors, overdue invoices

- Offer educational nudges (“Want help setting up your dashboard?”) based on behavioral cues

- Recommend upsells or retention actions based on predicted churn risk

This evolution—from reactive problem-solving to proactive service delivery—will fundamentally reposition support as a growth driver, not just a cost center.
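Event-triggered support can start as a simple mapping from business events to outreach messages. In this sketch the event names, payload fields, and message templates are hypothetical; a production system would consume events from a queue or webhook and send the result through the customer’s preferred channel.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    kind: str           # hypothetical event names, e.g. "payment_failed"
    customer_name: str
    detail: str


# One outreach template per event type (illustrative wording).
TEMPLATES = {
    "payment_failed": "Hi {name}, your payment didn't go through ({detail}). Want help updating your card?",
    "invoice_overdue": "Hi {name}, invoice {detail} is overdue. Can I help you settle it or set up a plan?",
    "api_error_spike": "Hi {name}, we noticed repeated API errors ({detail}). Here's a troubleshooting guide.",
}


def proactive_message(event: Event) -> Optional[str]:
    """Turn a business event into an outreach message, or None if no rule applies."""
    template = TEMPLATES.get(event.kind)
    if template is None:
        return None
    return template.format(name=event.customer_name, detail=event.detail)
```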

AI agents will soon operate more like proactive team members than passive tools. With multi-agent collaboration, emotional intelligence, deep backend integrations, and predictive behaviors, the customer support function will become smarter, faster, and more valuable than ever before.

Companies that embrace this future early will not only save costs—they’ll redefine what it means to deliver great service.

12. FAQs on AI Customer Support Agents

What is an AI customer support agent?

An AI customer support agent is a software-based system powered by artificial intelligence—usually large language models (LLMs)—that autonomously handles customer queries. These agents can understand natural language, retrieve data from knowledge bases, execute backend actions, and maintain conversational context. Unlike rule-based chatbots, AI agents are capable of multi-turn dialogues, contextual memory, and dynamic problem-solving across channels like web chat, email, and WhatsApp.

Can AI replace human agents?

AI can automate up to 70% of Tier-1 and Tier-2 support queries, but it doesn’t fully replace human agents. Instead, AI agents handle routine and repetitive tasks—like password resets, order tracking, or policy inquiries—so human teams can focus on complex, emotionally nuanced, or edge-case issues. The most effective support systems use a hybrid model with AI as the first line of response and seamless escalation to live agents when needed.

What’s the best tool to build a support bot?

The best tool depends on your goals and technical resources:

- For quick deployment: hosted LLM APIs such as OpenAI GPT-4o or Anthropic Claude 3

- For full control and orchestration: LangChain or Rasa

- For no-code/low-code prototyping: Botpress, Flowise, or CustomGPT

- For WhatsApp support: Meta WhatsApp Cloud API + LangChain integration

Each tool has trade-offs in flexibility, cost, and time to deploy. Startups often begin with hosted APIs, while enterprises opt for modular frameworks and custom integrations.

How do I train a support bot with my knowledge base?

To ground a support bot in your knowledge base (this uses retrieval rather than retraining the model):

- Ingest content from docs, FAQs, SOPs, or help articles, splitting long documents into smaller chunks

- Embed each chunk into semantic vectors using embedding models from providers like OpenAI, Cohere, or Hugging Face

- Store the vectors in a vector database (like Pinecone or Weaviate), which serves as the retrieval layer of a retrieval-augmented generation (RAG) pipeline

- During a live query, the bot retrieves the most relevant chunks and feeds them into the LLM for grounded response generation

- Continuously update the knowledge base and reindex for freshness

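This approach keeps answers anchored to verified content and reduces hallucinations. It does not eliminate them, so pair it with prompt instructions that tell the model to answer only from the retrieved context.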
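The chunk, embed, store, and retrieve steps can be sketched end to end without external services. To keep it self-contained, this version swaps the embedding model for a bag-of-words count vector and the vector database for an in-memory list; both are stand-ins, and a real pipeline would call an embedding API and a store such as Pinecone or Weaviate instead. The chunk size, score cutoff, and sample articles are illustrative assumptions.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Stand-in embedding: word counts. Replace with a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Stand-in for a vector database: stores (chunk, vector) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add_document(self, text: str) -> None:
        for piece in chunk(text):
            self.items.append((piece, embed(piece)))

    def search(self, query: str, k: int = 2, min_score: float = 0.1) -> list[str]:
        """Return up to k chunks most similar to the query, dropping weak matches."""
        q = embed(query)
        scored = sorted(((cosine(q, vec), piece) for piece, vec in self.items), reverse=True)
        return [piece for score, piece in scored[:k] if score >= min_score]


if __name__ == "__main__":
    index = InMemoryIndex()
    index.add_document("Returns are accepted within 30 days of delivery for unused items.")
    index.add_document("Standard shipping takes 3 to 5 business days.")
    print(index.search("what is the return window for unused items"))
```

The retrieved chunks are what gets passed to the LLM as context. If `search` returns an empty list, the bot should fall back or escalate rather than let the model answer unsupported.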

How much does it cost to build an AI agent?

Initial development costs typically range from $6,000 to $12,000, depending on complexity. Ongoing monthly costs include:

- LLM API usage: $50–$300/month

- Hosting & vector DB: $100–$500/month

- Maintenance & tuning: $500–$2,000/month
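Adding up the ranges above gives the total running cost. The quick calculation below sums the low and high ends of each line item as stated in this section and adds twelve months to the quoted build cost; it is arithmetic on these figures, not a quote.

```python
# Ranges quoted in this section, in USD: (low, high)
BUILD_COST = (6_000, 12_000)
MONTHLY_COSTS = {
    "LLM API usage": (50, 300),
    "Hosting & vector DB": (100, 500),
    "Maintenance & tuning": (500, 2_000),
}

monthly_low = sum(low for low, _ in MONTHLY_COSTS.values())
monthly_high = sum(high for _, high in MONTHLY_COSTS.values())
first_year_low = BUILD_COST[0] + 12 * monthly_low
first_year_high = BUILD_COST[1] + 12 * monthly_high

print(f"Monthly running cost: ${monthly_low:,} to ${monthly_high:,}")    # $650 to $2,800
print(f"First-year total: ${first_year_low:,} to ${first_year_high:,}")  # $13,800 to $45,600
```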

You can reduce costs using open-source models and self-hosted infrastructure, but that requires in-house expertise. Outsourcing to a provider like Aalpha Information Systems offers a predictable setup fee and managed service option.

Is GPT-4 good enough for customer support?

Yes. GPT-4o, a newer model in OpenAI’s GPT-4 line, is one of the most capable widely available models for customer support. It handles complex queries, follows tone guidelines, supports multi-turn conversations, and can integrate with structured flows using LangChain or other orchestration layers. For support use cases, it’s best paired with RAG to ensure factual consistency and with guardrails to handle edge cases safely.

How do I connect my support AI to WhatsApp or web chat?

To deploy your support AI on WhatsApp:

- Apply for WhatsApp Business API access via Meta or partners like Twilio

- Set up webhook handlers for session messages

- Connect the agent logic (e.g., via LangChain or a backend API) to respond dynamically

- Manage session state and authentication with JWT or OAuth

- Ensure fallbacks for agent downtime or policy compliance

For web chat, build a React chat widget (deployed on a host such as Vercel) or use platforms like Intercom or Crisp, and connect it to your AI backend over REST or WebSocket.
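A minimal, framework-free sketch of the WhatsApp webhook side, based on the payload shapes Meta documents for the WhatsApp Cloud API: the subscription handshake (`hub.mode`, `hub.verify_token`, `hub.challenge`), an HMAC-SHA256 check of the `X-Hub-Signature-256` header, and extraction of incoming text messages. Verify field names against Meta’s current documentation. `VERIFY_TOKEN`, `APP_SECRET`, and the helper names are placeholders, and the HTTP server and the actual send call are left out.

```python
import hashlib
import hmac
import json
from typing import Optional

VERIFY_TOKEN = "replace-with-your-verify-token"  # placeholder
APP_SECRET = b"replace-with-your-app-secret"     # placeholder


def verify_subscription(params: dict[str, str]) -> Optional[str]:
    """GET handshake: echo hub.challenge only if the verify token matches."""
    if params.get("hub.mode") == "subscribe" and params.get("hub.verify_token") == VERIFY_TOKEN:
        return params.get("hub.challenge")
    return None


def signature_valid(raw_body: bytes, header_value: str) -> bool:
    """Check the X-Hub-Signature-256 header, formatted as "sha256=<hex digest>"."""
    expected = "sha256=" + hmac.new(APP_SECRET, raw_body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, header_value)


def extract_text_messages(raw_body: bytes) -> list[tuple[str, str]]:
    """Return (sender phone number, text) pairs from a webhook POST body."""
    payload = json.loads(raw_body)
    messages = []
    for entry in payload.get("entry", []):
        for change in entry.get("changes", []):
            for msg in change.get("value", {}).get("messages", []):
                if msg.get("type") == "text":
                    messages.append((msg["from"], msg["text"]["body"]))
    return messages


def build_text_reply(to: str, body: str) -> dict:
    """JSON body for a text reply, to be POSTed to the Cloud API messages endpoint."""
    return {"messaging_product": "whatsapp", "to": to, "type": "text", "text": {"body": body}}
```

In the POST handler, reject the request if `signature_valid` fails, pass each extracted text to your agent, and send the reply built by `build_text_reply`, keeping session state keyed by the sender’s number.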

How do I measure the performance of an AI support agent?

Performance metrics for AI agents include:

- Containment Rate: % of queries fully resolved by AI

- Fallback Rate: % of conversations escalated to humans

- First Response Time: Time from user input to initial reply

- Resolution Time: Average duration to issue resolution

- CSAT Score: Customer satisfaction rating after interaction

- Hallucination Rate: Frequency of inaccurate or fabricated responses

Use tools like LangSmith, PromptLayer, or custom dashboards to log, analyze, and refine performance over time.
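Most of these metrics reduce to simple ratios and averages over session logs. The sketch below assumes a hypothetical per-session record: `flagged_hallucination` presumes a review process (such as a weekly transcript audit) marks sessions containing inaccurate answers, so hallucination rate is measured per session rather than per response here, and `csat` is None when the customer skipped the survey.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class SessionRecord:
    resolved_by_ai: bool
    escalated: bool
    first_response_s: float        # seconds from first user message to first reply
    resolution_s: Optional[float]  # seconds to resolution; None if never resolved
    csat: Optional[int]            # 1-5 survey score; None if not answered
    flagged_hallucination: bool    # set during transcript review


def support_metrics(sessions: list[SessionRecord]) -> dict[str, float]:
    """Compute the headline support metrics over a batch of sessions."""
    if not sessions:
        raise ValueError("need at least one session")
    n = len(sessions)
    resolution_times = [s.resolution_s for s in sessions if s.resolution_s is not None]
    scores = [s.csat for s in sessions if s.csat is not None]
    return {
        "containment_rate": sum(s.resolved_by_ai for s in sessions) / n,
        "fallback_rate": sum(s.escalated for s in sessions) / n,
        "avg_first_response_s": mean(s.first_response_s for s in sessions),
        "avg_resolution_s": mean(resolution_times) if resolution_times else float("nan"),
        "avg_csat": mean(scores) if scores else float("nan"),
        "hallucination_rate": sum(s.flagged_hallucination for s in sessions) / n,
    }
```

Containment and fallback rates need not sum to 100%, because some sessions end with neither a resolution nor a handoff (for example, the customer simply leaves).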

13. Conclusion and Next Steps