What is monetization of AI agents?

Monetizing AI agents refers to the process of turning intelligent, autonomous systems—powered by large language models (LLMs) or multi-agent frameworks—into commercially viable products or services. These agents are not limited to chatbots; they execute tasks, make contextual decisions, and interact with software ecosystems to drive outcomes. Examples include autonomous sales assistants, report-generating medical agents, support ticket triage bots, and scheduling coordinators.

The monetization process involves defining the value the agent delivers (e.g., time saved, conversions generated, tasks automated), identifying who pays for that value (B2B, B2C, internal stakeholders), and designing a business model that captures revenue sustainably. This includes direct user billing, usage-based pricing, subscription tiers, pay-per-outcome models, or integrations with platform marketplaces (e.g., GPT Store, Salesforce AppExchange).

Why does AI agent monetization matter?

For startups, monetization is not just about revenue—it’s about product-market fit. A well-monetized agent validates that its core function addresses a pressing user need. Agents that automate routine, high-frequency tasks—like triaging inbound customer support or handling compliance workflows—represent immediate revenue opportunities.

For CTOs and product managers, monetizing agents is a systems-level concern. Agent behavior, observability, billing logic, API limits, and uptime directly impact user satisfaction and profitability. Leaders must decide: How do we define a “unit of value”? Is it per task, per token, per minute, or per business outcome?

AI engineers must consider not only the technical feasibility of agents but also their economic viability. This includes optimizing inference costs, ensuring accuracy to reduce manual overrides, and building instrumentation for usage tracking.

The growing adoption of LLMs means AI agents are being embedded into enterprise workflows. According to Grand View Research, the global AI agent market is projected to exceed $50 billion by 2030, fueled by the rise of verticalized AI, plug-and-play agent frameworks, and APIs that integrate seamlessly with enterprise systems.

Overview of Monetization Models

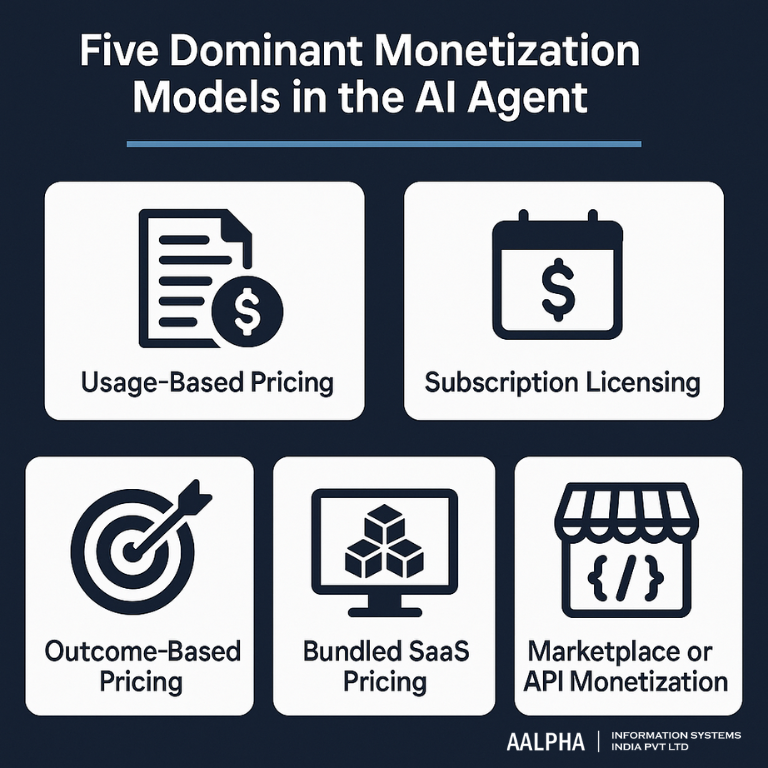

There are five dominant monetization models in the AI agent landscape:

- Usage-Based Pricing

Customers are charged based on tokens, API calls, execution time, or action frequency. This model mirrors cloud and SaaS pricing, appealing to technical buyers who align cost with volume.

- Subscription Licensing

Fixed monthly or annual pricing tiers based on number of users, agents, or features. This is ideal for predictable revenue streams and customer retention.

- Outcome-Based Pricing

Clients pay for measurable success—e.g., leads generated, support tickets resolved, or hours saved. Though harder to instrument, this model aligns cost with ROI and is gaining traction.

- Bundled SaaS Pricing

Agents are embedded as features inside existing SaaS products (e.g., CRM, ERP). Monetization is indirect but powerful—agents boost product stickiness and expansion revenue.

- Marketplace or API Monetization

Agents are sold via marketplaces (e.g., GPT Store, Zapier, Salesforce) or exposed via APIs. Developers charge per integration or execution.

Each of these models requires precise alignment between value creation, cost structure, and user willingness to pay.

This guide explores these models in detail, provides real-world examples, and presents a blueprint for building, pricing, and scaling AI agents as monetizable assets. Whether you’re building a vertical AI SaaS product or embedding agents into your enterprise stack, understanding how to turn intelligence into income is now a core competency.

2. Market Size & Growth Projections

The monetization of AI agents is emerging as one of the most financially significant trends in artificial intelligence. As organizations shift from simple automation toward context-aware, task-executing systems, AI agents are poised to transform how digital labor is bought, sold, and scaled. Quantifying this opportunity requires an examination of current market valuation, future projections, geographic dynamics, and investment inflows.

Global Market Size: 2024–2035 Outlook

As of early 2025, the global AI agent market—including autonomous agents, task-specific LLM deployments, and agentic middleware—is estimated to be valued between $5.3 billion and $5.4 billion. This figure is based on compiled insights from multiple sources including Grand View Research, Growth Unhinged, and GeekWire.

Projections for the coming decade vary significantly depending on scope:

- Modest projections estimate the market will reach $7.6 billion by 2025, growing at a compound annual growth rate (CAGR) of 25–30%.

- Aggressive estimates, particularly those factoring in embedded agents across SaaS, enterprise automation, and personal AI assistants, project a market size of $50–216 billion by 2030–2035.

This divergence reflects both definitional boundaries (narrow vs. broad AI agent scope) and uncertainty around LLM cost curves, regulatory barriers, and monetization viability across verticals.
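The gap between these scenarios is easier to see as implied growth rates. The sketch below applies the standard formula CAGR = (end / start)^(1 / years) - 1. It assumes a 2024 base of $5.4 billion (the upper end of the estimate above); the base year and helper names are illustrative assumptions. Under that assumption, reaching $50 billion by 2030 implies roughly 45% annual growth, and reaching $216 billion by 2035 implies roughly 40%. Both are well above the 25–30% of the modest scenario.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by moving from start_value to end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding at a constant annual rate."""
    return start_value * (1 + rate) ** years

BASE_2024 = 5.4  # USD billions; assumed base year, for illustration only

print(f"$50B by 2030:  {cagr(BASE_2024, 50, 2030 - 2024):.1%}")
print(f"$216B by 2035: {cagr(BASE_2024, 216, 2035 - 2024):.1%}")
print(f"27.5% CAGR to 2030: ${project(BASE_2024, 0.275, 6):.1f}B")
```

If you compound the modest scenario's midpoint (27.5%) from the same base, you land near $23 billion in 2030. Most of the spread between forecasts therefore comes from the growth-rate assumption, not the starting point.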

Key Growth Drivers

- NLP Maturity and LLM Accessibility

The increasing accuracy and multimodality of large language models such as GPT-4o, Claude 3, and Gemini 1.5 Pro have catalyzed the shift from static chatbots to autonomous agents. These models support reasoning, planning, retrieval-augmented generation (RAG), and API orchestration, which makes them commercially deployable at scale. The resulting lower barrier to entry lets startups build agents with minimal custom modeling.

- Cloud Infrastructure and API Standardization

Cloud-native platforms like Amazon Bedrock, Azure OpenAI, and LangChain Cloud enable real-time deployment of agentic architectures without bespoke infrastructure. APIs and frameworks such as OpenAI’s function calling, Google’s Vertex AI Agent Builder, and Microsoft’s AutoGen make agent development more modular and scalable.

- Enterprise Automation Demand

Enterprises increasingly seek “autonomous digital workers” to handle sales, support, compliance, and analytics. Rather than building full applications, companies embed agents inside existing systems (e.g., Salesforce, Notion, Jira) to automate workflows. This reduces integration costs and accelerates time to value.

- Hyper-Personalization in Consumer Tools

AI agents are now being embedded in consumer-facing products—from personal finance apps to mental health coaches—offering 1:1 services at scale. Examples include Replika AI, Character.AI, and Pi.ai.

Regional Outlook

- North America currently dominates the AI agent landscape, accounting for roughly 40% of global market share. It is led by U.S.-based companies such as OpenAI, Microsoft, and Google, and by emerging agent infrastructure startups like LangChain and Cognosys.

- Asia-Pacific (APAC) is projected to be the fastest-growing region due to rapid digitization in India, China, Singapore, and South Korea. The Indian AI market alone is projected to reach $17 billion by 2027, up from ~$8 billion in 2025.

- Europe is focusing on highly regulated sectors (healthcare, legal, govtech), with strong emphasis on data residency, GDPR compliance, and sovereign AI initiatives.

Investment Trends

Investor appetite for AI agents has accelerated significantly. In 2024 alone:

- Over $3.8 billion was raised by AI agent startups globally, including infrastructure (e.g., LangChain, ReworkAI), vertical agents (e.g., Jasper, AutoCloud), and agent marketplaces (e.g., Cognosys, MindStudio).

- Valuations for pre-revenue AI agent startups have soared, reflecting the long-term market belief in agent-led automation.

Examples:

- LangChain secured $40 million in Series B to build composable agent frameworks.

- Sakana AI (Japan) raised $30 million for LLM-aligned agent models.

- Cognosys and Superagent are building marketplace-first agent platforms with monetization baked into the deployment layer.

The shift from “LLM-as-a-feature” to “agent-as-a-service” business models is becoming the dominant monetization strategy across funded AI startups.

- The AI agent market is currently valued at $5.3–5.4 billion, with forecasts reaching up to $216 billion by 2035 depending on vertical uptake and cost structure optimization.

- Growth is driven by the convergence of LLM availability, cloud scalability, API integration, and enterprise automation needs.

- North America leads in platform innovation, but APAC is rapidly emerging as the next frontier, especially in healthcare and finance sectors.

- Startups are receiving significant investment to build infrastructure, vertical agents, and monetization-first agent layers.

This trajectory signals a multi-billion-dollar opportunity not just to build AI agents—but to build sustainable businesses around them. The next sections will explore how.

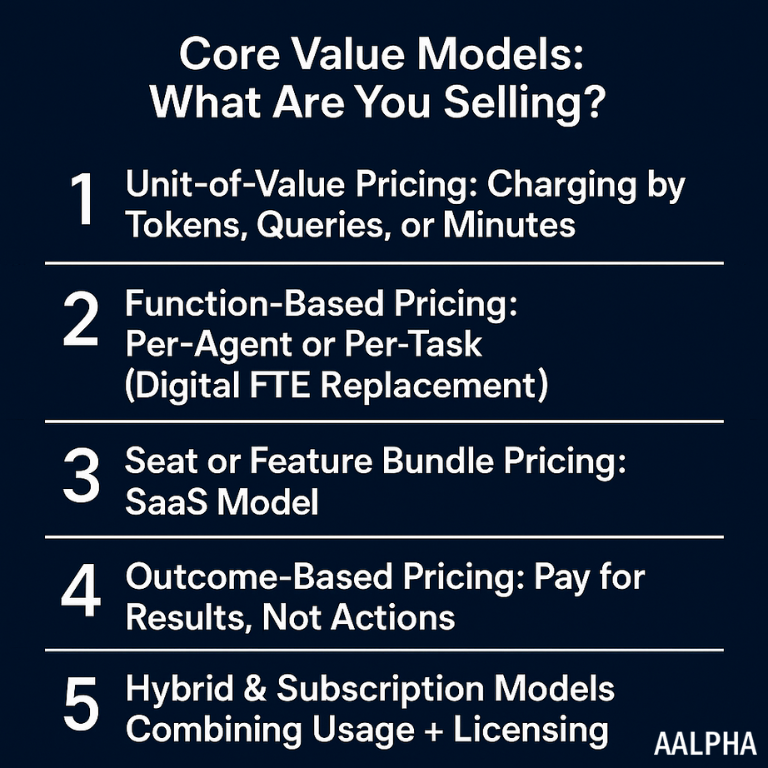

3. Core Value Models: What Are You Selling?

Monetizing AI agents begins with a fundamental question: what exactly is being sold? Unlike traditional software, AI agents are not static tools—they’re dynamic, context-aware systems that perform tasks on behalf of users. This changes the economics of value delivery and forces teams to rethink pricing, packaging, and unit economics.

Depending on the context—B2B, B2C, or internal enterprise use—different models of monetization are suitable. This section breaks down the five dominant pricing strategies for AI agents, their pros and cons, and real-world implementation examples. Each model aligns value creation with revenue capture, and can be mixed or adapted depending on scale, industry, and customer maturity.

1. Unit-of-Value Pricing: Charging by Tokens, Queries, or Minutes

Unit-of-value pricing refers to monetizing AI agents based on their actual consumption of compute, API calls, or inference volume. This mirrors cloud pricing (e.g., AWS Lambda, OpenAI API), making it familiar to technical buyers.

- Tokens: Charge based on LLM tokens processed (input + output). This is the default metric for most GPT-based agent platforms.

- Queries or Tasks: Counted actions (e.g., document parsed, lead scored, report generated).

- Minutes: Useful for voice agents or real-time interactions (e.g., AI receptionist or customer support agent).

“How do I charge for AI agents that use GPT?”

You can bill customers based on the number of tokens their agents consume per month. For example, 1M tokens may equal $1.20 in backend cost and $10 in customer value.
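Here is the arithmetic behind this example as a short Python sketch. The $1.20 cost and $10 price per million tokens are the illustrative figures from the text, not real vendor rates, and the sketch treats the $10 as the price charged. The monthly volume and function names are hypothetical. `Decimal` keeps currency rounding predictable.

```python
from decimal import Decimal, ROUND_HALF_UP

# Illustrative rates from the example above (USD per 1M tokens); not real vendor prices.
BACKEND_COST_PER_M = Decimal("1.20")
CUSTOMER_PRICE_PER_M = Decimal("10.00")

def token_charge(tokens: int, rate_per_million: Decimal) -> Decimal:
    """Amount for a token count at a per-million-token rate, rounded to cents."""
    amount = Decimal(tokens) * rate_per_million / Decimal(1_000_000)
    return amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

def gross_margin(revenue: Decimal, cost: Decimal) -> Decimal:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost) / revenue

monthly_tokens = 3_500_000  # hypothetical usage for one customer
revenue = token_charge(monthly_tokens, CUSTOMER_PRICE_PER_M)
cost = token_charge(monthly_tokens, BACKEND_COST_PER_M)
print(revenue, cost, f"{gross_margin(revenue, cost):.0%}")
```

At these illustrative rates, a customer who uses 3.5 million tokens is billed $35.00 against $4.20 of backend cost, which is an 88% gross margin.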

Pros:

- Transparent for technical users

- Easy to align with backend costs

- Scales directly with usage

Cons:

- Hard for non-technical customers to understand

- Revenue is volatile if usage fluctuates

- Difficult to estimate bills in advance

2. Function-Based Pricing: Per-Agent or Per-Task (Digital FTE Replacement)

This model charges based on the function or “job” the agent performs—essentially pricing the agent as a digital employee. It fits especially well when the agent is replacing or augmenting a human worker.

“Can I price an AI agent like a virtual employee?”

Yes. If your agent handles a full-time job like sales outreach, scheduling, or compliance review, you can price it as a per-agent subscription—similar to hiring an FTE.

For example, a sales agent that sends 100 outreach emails per day and qualifies leads can be priced at $300–$800/month depending on volume and ROI. This model aligns particularly well with vertical SaaS tools embedding autonomous agents (e.g., lawtech, medtech).

Pros:

- Easy for customers to conceptualize

- Tied to specific business function (HR, sales, finance)

- Recurring revenue predictability

Cons:

- Must prove performance equal to or better than that of human workers

- Requires strong analytics and SLA guarantees

3. Seat or Feature Bundle Pricing: SaaS Model

This model treats AI agents as part of a broader SaaS platform. Customers pay based on:

- Number of human users (seats) interacting with or overseeing agents

- Features unlocked (e.g., adding multi-agent workflows, analytics dashboards)

It’s ideal for platforms embedding agents within core workflows (e.g., support desks, CRMs, project management tools). The agent is part of the experience but not priced in isolation.

“How do I include AI agents in my SaaS pricing?”

Add agent capabilities as upsell features or include them in higher-tier bundles, increasing ACV (annual contract value) without fragmenting pricing.

Pros:

- Familiar SaaS economics

- High ARPU through bundling

- Agents boost platform retention

Cons:

- Hard to isolate agent ROI

- Seat-based pricing may feel misaligned for autonomous features

4. Outcome-Based Pricing: Pay for Results, Not Actions

In this performance-aligned model, customers pay based on specific measurable outcomes:

- Number of qualified leads

- Support tickets resolved autonomously

- Reduction in human labor hours

- Increased conversion or revenue

This is especially powerful for AI agents in revenue-generating or cost-saving roles—sales, marketing, support, operations. However, it requires robust attribution and trust.

“Can I charge only when my AI agent delivers value?”

Yes, if you can tie usage to outcomes (e.g., cost savings or revenue gains), performance-based pricing ensures alignment with customer ROI.

Pros:

- Strong alignment with client value

- Encourages customer adoption

- Supports premium pricing

Cons:

- Hard to instrument and enforce

- Risk of disputes around attribution

- Requires access to customer KPIs

5. Hybrid & Subscription Models: Combining Usage + Licensing

Many AI SaaS products combine the models above:

- Base Subscription (e.g., $99/month) + Usage overages (e.g., $0.005 per 1,000 tokens)

- Tiered Bundles with increasing agent functionality

- Custom enterprise pricing for multi-agent orchestration

Hybrid models allow for predictable baseline revenue while also capturing upside from high-usage customers. They offer flexibility and reduce bill shock.

“Should I offer both fixed pricing and usage-based add-ons?”

Yes. Hybrid models allow small customers to start with a flat plan, while power users pay more as they scale usage.
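A hybrid invoice comes down to a base fee plus metered overage. The sketch below reuses the $99 base fee from the example above. The 5 million included tokens, the $0.01-per-1,000-token overage rate, and the class and function names are hypothetical placeholders.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class HybridPlan:
    base_fee: Decimal          # flat monthly subscription
    included_tokens: int       # tokens covered by the base fee
    overage_per_1k: Decimal    # price per 1,000 tokens beyond the allowance

def monthly_invoice(plan: HybridPlan, tokens_used: int) -> Decimal:
    """Base fee plus overage, billed per started block of 1,000 excess tokens."""
    excess = max(0, tokens_used - plan.included_tokens)
    blocks = -(-excess // 1000)  # ceiling division using integers only
    return plan.base_fee + plan.overage_per_1k * blocks

# Hypothetical plan: $99/month including 5M tokens, then $0.01 per 1,000 tokens.
pro = HybridPlan(Decimal("99"), 5_000_000, Decimal("0.01"))
print(monthly_invoice(pro, 2_000_000))   # light user pays only the base fee
print(monthly_invoice(pro, 12_000_000))  # power user pays base fee plus overage
```

Billing per started 1,000-token block is one design choice. Prorating per token is equally valid, and it is slightly friendlier to customers who only just exceed their allowance.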

Pros:

- Balanced risk and reward

- Easy to upsell

- Works well with API-based platforms

Cons:

- Requires billing sophistication

- Needs real-time usage monitoring

Align Pricing With Perceived Value

Monetizing AI agents successfully is not just about covering compute costs—it’s about aligning pricing with the perceived value your agent delivers. Whether you charge per token, per task, per user, or per outcome, the key is clarity, fairness, and trust.

4. Implementation Blueprint for Startups & Enterprises

Monetizing AI agents requires more than pricing theory. It demands a concrete operational framework that integrates business strategy, technical execution, and ongoing optimization. Whether you’re a startup launching your first agent-powered product or an enterprise embedding agents into your SaaS suite, success hinges on how well you define your value metric, manage cost dynamics, and align billing with user expectations.

This blueprint walks through each phase of monetization implementation—from defining the unit of value to iterating your model based on real usage patterns.

Define Your Unit of Value

Your monetization model starts by answering a foundational question: What are you charging for? AI agents differ from traditional SaaS features because their “work” is dynamic—measured in tasks completed, content generated, or business outcomes delivered.

To monetize agents effectively, you must define a unit of value that satisfies three conditions:

- Correlates to backend costs (e.g., LLM tokens or compute time)

- Aligns with user-perceived value (e.g., tasks automated or hours saved)

- Is measurable and transparent

Common Unit Metrics:

- Tokens: Standard for LLM-driven agents. Easy to track, scalable.

- Queries / Executions: Discrete agent actions (e.g., document summary, sentiment analysis).

- Runtime (Minutes or Seconds): Suited for synchronous or voice-based agents.

- Tasks Completed: Useful for agents performing structured workflows.

- Outcomes: Leads generated, reports filed, tickets resolved—ideal for outcome-based pricing.

“How do I choose what to bill for in my AI agent product?”

Start by identifying what users value most—completed actions, saved time, or business outcomes—and map it to measurable system-level events like token usage or task completion.

Implementation Tips:

- Tag all agent interactions with metadata: token count, execution time, and task ID.

- Aggregate metrics per user/org level to support billing and analytics.

- Expose this data in a user-facing dashboard to build trust and reduce billing disputes.
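The tagging and aggregation tips above can be sketched as a small event record plus a roll-up function. The field names and sample events are illustrative assumptions, not a standard schema. In practice the events would come from your agent runtime's logging.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class UsageEvent:
    """One agent interaction tagged with billing metadata (field names are illustrative)."""
    org_id: str
    user_id: str
    task_id: str
    input_tokens: int
    output_tokens: int
    execution_ms: int

def aggregate_by_org(events: list[UsageEvent]) -> dict[str, dict[str, int]]:
    """Roll raw events up to per-organization totals for invoices and dashboards."""
    totals: dict[str, dict[str, int]] = defaultdict(
        lambda: {"tokens": 0, "execution_ms": 0, "tasks": 0})
    task_ids: dict[str, set[str]] = defaultdict(set)
    for e in events:
        org = totals[e.org_id]
        org["tokens"] += e.input_tokens + e.output_tokens
        org["execution_ms"] += e.execution_ms
        task_ids[e.org_id].add(e.task_id)
    for org_id, ids in task_ids.items():
        # A single task may span several LLM calls, so count distinct task IDs.
        totals[org_id]["tasks"] = len(ids)
    return dict(totals)

events = [
    UsageEvent("acme", "u1", "t1", 1200, 300, 850),
    UsageEvent("acme", "u2", "t1", 400, 100, 300),   # second LLM call within the same task
    UsageEvent("globex", "u9", "t7", 900, 600, 1200),
]
print(aggregate_by_org(events))
```

Keeping tasks separate from raw calls matters if you bill per task but pay your LLM provider per call.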

Cost & Margin Analysis

Behind every monetized agent is a cost structure primarily driven by:

- LLM inference costs (e.g., OpenAI’s GPT-4o, which has been priced at roughly $2.50–$5 per million input tokens and $10–$15 per million output tokens)

- Cloud infrastructure (serverless functions, vector databases, storage)

- Third-party API fees (retrieval, payments, notifications)

To maintain healthy margins, pricing must cover variable costs and allow room for scaling and customer acquisition.

Cost Mapping Strategy:

- Calculate average cost per user session or agent interaction.

- Identify break-even points per pricing tier.

- Bake a 60–80% gross margin into each plan to allow for support, R&D, and unexpected spikes.
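Gross margin is measured against price, not cost. A 60% margin therefore means charging cost / 0.4 (a 2.5x markup), and 80% means cost / 0.2 (5x). The sketch below turns that into a pricing check. The $0.04 average cost per interaction and the $500 of fixed monthly costs per plan are hypothetical inputs.

```python
def price_for_margin(unit_cost: float, target_margin: float) -> float:
    """Price yielding the target gross margin, where margin = (price - cost) / price."""
    if not 0 <= target_margin < 1:
        raise ValueError("target_margin must be in [0, 1)")
    return unit_cost / (1 - target_margin)

def break_even_volume(fixed_monthly_cost: float, price: float, unit_cost: float) -> float:
    """Interactions per month needed for per-unit contribution to cover a plan's fixed costs."""
    contribution = price - unit_cost
    if contribution <= 0:
        raise ValueError("price must exceed unit cost")
    return fixed_monthly_cost / contribution

avg_cost = 0.04  # hypothetical blended cost per agent interaction (USD)
for margin in (0.60, 0.80):
    p = price_for_margin(avg_cost, margin)
    print(f"{margin:.0%} margin -> ${p:.2f} per interaction, "
          f"break-even at {break_even_volume(500, p, avg_cost):,.0f} interactions/month")
```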

Mitigation Tactics for LLM Cost Volatility:

- Use model fallback strategies: route simpler query types to cheaper models (e.g., GPT-3.5 instead of GPT-4o).

- Enable RAG pipelines or custom instructions to reduce token usage.

- Offer customers options: “High Accuracy Mode” (more costly) vs “Fast & Lean Mode.”
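A routing layer that combines the first and third tactics might look like the following sketch. The model identifiers, the set of "simple" task types, and the 8,000-token threshold are placeholders, not recommendations. No provider API is called here; the function only decides which model a request should go to.

```python
from enum import Enum

class Mode(Enum):
    FAST_AND_LEAN = "fast"
    HIGH_ACCURACY = "accurate"

# Placeholder identifiers; substitute the models your provider actually offers.
CHEAP_MODEL = "cheap-model"
PREMIUM_MODEL = "premium-model"

# Hypothetical task categories considered simple enough for the cheaper model.
SIMPLE_TASKS = {"classify", "extract", "summarize_short"}

def choose_model(task_type: str, prompt_tokens: int, mode: Mode,
                 long_prompt_threshold: int = 8_000) -> str:
    """Route each request to the cheapest model expected to handle it."""
    if mode is Mode.HIGH_ACCURACY:
        return PREMIUM_MODEL   # customer opted into the costlier mode
    if task_type in SIMPLE_TASKS and prompt_tokens < long_prompt_threshold:
        return CHEAP_MODEL     # routine, short requests
    return PREMIUM_MODEL       # complex or long-context work

print(choose_model("classify", 500, Mode.FAST_AND_LEAN))
```

Exposing `Mode` to customers turns the cost trade-off into a product choice they make knowingly, and billing can then price each mode differently.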

Pricing Architecture

Once your value unit and costs are clear, design a pricing structure that balances simplicity, scalability, and upsell potential. For AI agent products, three primary architectures are common:

A. Flat Subscriptions

- Fixed monthly fee for access to a single agent or basic feature set.

- Works well for early-stage products or low-complexity use cases.

B. User-Based (Seat) Pricing

- Charge based on the number of human users who benefit from or supervise agents.

- Common in enterprise SaaS settings where AI augments teams.

C. Usage-Based or Credit Systems

- Sell credits that customers redeem for agent activity (e.g., 10,000 tokens, 1,000 tasks).

- Good for dev tools or low-frequency B2B use cases.
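A credit system is essentially a prepaid ledger. The sketch below assumes whole-number credits, and the class and method names are illustrative. What one credit buys (a task, 1,000 tokens, and so on) is a pricing decision made elsewhere.

```python
from dataclasses import dataclass, field

class InsufficientCredits(Exception):
    """Raised when a redemption would overdraw the account."""

@dataclass
class CreditAccount:
    """Prepaid credit wallet with a simple history of purchases and redemptions."""
    balance: int = 0
    ledger: list[tuple[str, int]] = field(default_factory=list)

    def purchase(self, credits: int) -> None:
        if credits <= 0:
            raise ValueError("purchase must be positive")
        self.balance += credits
        self.ledger.append(("purchase", credits))

    def redeem(self, credits: int, reason: str) -> None:
        """Debit credits for an agent action; refuse rather than go negative."""
        if credits <= 0:
            raise ValueError("redemption must be positive")
        if credits > self.balance:
            raise InsufficientCredits(f"need {credits}, have {self.balance}")
        self.balance -= credits
        self.ledger.append((reason, -credits))

account = CreditAccount()
account.purchase(1_000)
account.redeem(3, "report_generated")
print(account.balance, account.ledger)
```

A production ledger would persist entries and debit atomically (for example, inside a database transaction) so that concurrent agent runs cannot double-spend the same credits.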

“Should I offer monthly pricing or pay-as-you-go?”

Offer both. A base subscription ensures recurring revenue, while usage-based pricing allows scaling with customer success.

Tiering Strategy:

- Create feature-based tiers (e.g., Starter, Pro, Enterprise) based on:

  - Number of agents deployed

  - Complexity of workflows supported

  - Support level (community, SLA-backed, dedicated AM)

- Include volume multipliers: higher plans offer bulk credits or discounted overages.
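Tiers like these are often encoded as a configuration table that the product checks before granting a capability. In the sketch below, every limit, discount, and name is a placeholder. It shows agent-count limits, a feature flag for multi-agent workflows, and a volume discount on overages.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tier:
    name: str
    max_agents: int
    multi_agent_workflows: bool
    support: str
    overage_discount: float  # fraction off the list overage rate (volume multiplier)

# Illustrative tier table; every value is a placeholder.
TIERS = {
    "starter": Tier("Starter", 1, False, "community", 0.00),
    "pro": Tier("Pro", 5, True, "SLA-backed", 0.10),
    "enterprise": Tier("Enterprise", 50, True, "dedicated AM", 0.25),
}

def can_deploy_agent(tier_key: str, agents_deployed: int) -> bool:
    """Entitlement check to run before provisioning another agent."""
    return agents_deployed < TIERS[tier_key].max_agents

def can_use_multi_agent(tier_key: str) -> bool:
    return TIERS[tier_key].multi_agent_workflows

def overage_rate(tier_key: str, list_rate: float) -> float:
    """Apply the tier's volume discount to the list overage rate."""
    return list_rate * (1 - TIERS[tier_key].overage_discount)

print(TIERS["pro"].support, can_deploy_agent("pro", 4), overage_rate("pro", 0.02))
```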

Outcome-Based Model Design

Outcome-based pricing aligns what customers pay with measurable success—ideal for performance-critical agents in sales, customer service, or operations.

To implement:

- Define clear success metrics (e.g., support ticket deflection, leads converted, tasks automated).

- Build instrumentation to capture outcome data: tags, webhook events, analytics pipelines.

- Create business logic to associate outcomes with billing events.

Example: An AI sales agent that books qualified meetings can charge per booked call rather than per message sent.

Backend Requirements:

- Real-time event tracking

- Secure integration with customer systems (CRM, support, analytics)

- Auditable logs for every outcome trigger
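The backend requirements above can be sketched as a small outcome biller. It follows the booked-call example: each webhook event is processed exactly once (webhooks are commonly retried), and every event is written to an audit log. The event type names and prices are hypothetical. Webhook signature verification and durable storage are omitted; the in-memory set and lists stand in for a database.

```python
import hashlib
import json
from dataclasses import dataclass, field
from decimal import Decimal

# Hypothetical price list; event type names are illustrative, not a standard schema.
OUTCOME_PRICES = {
    "meeting.booked": Decimal("25.00"),
    "ticket.resolved_autonomously": Decimal("1.50"),
}

@dataclass
class OutcomeBiller:
    charges: dict[str, Decimal] = field(default_factory=dict)  # org_id -> amount owed
    audit_log: list[dict] = field(default_factory=list)
    processed_ids: set[str] = field(default_factory=set)

    def handle_event(self, event: dict) -> bool:
        """Record an outcome event exactly once; return True if it created a charge."""
        if event["id"] in self.processed_ids:
            return False  # webhook retries must not double-bill
        self.processed_ids.add(event["id"])
        price = OUTCOME_PRICES.get(event["type"])
        # Hash the raw payload so each audit entry can later be checked against the source system.
        digest = hashlib.sha256(json.dumps(event, sort_keys=True).encode()).hexdigest()
        self.audit_log.append({"id": event["id"], "type": event["type"],
                               "billable": price is not None, "sha256": digest})
        if price is None:
            return False
        org = event["org_id"]
        self.charges[org] = self.charges.get(org, Decimal("0")) + price
        return True

biller = OutcomeBiller()
booked = {"id": "evt_1", "type": "meeting.booked", "org_id": "acme"}
print(biller.handle_event(booked))  # first delivery is billed
print(biller.handle_event(booked))  # retry of the same event is ignored
print(biller.handle_event({"id": "evt_2", "type": "email.sent", "org_id": "acme"}))
print(biller.charges)
```

Only the booked meeting is charged. The sent email is logged for auditability but is not billable, which matches the "per booked call rather than per message sent" idea.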

Customer Success Layer:

- Train CS teams to communicate how outcomes are defined and tracked.

- Offer ROI calculators and quarterly value review sessions.

Usage Tracking, Billing & Transparency

Modern users demand real-time visibility into their consumption. Lack of transparency is a common driver of churn in AI products.

Build a Usage Layer That Includes:

- Token and task usage breakdown

- Overages and thresholds

- Cost previews or real-time estimators

- Exportable billing reports

Integrate this data into both your billing system and product UI. Allow customers to self-manage their plans, set limits, and receive proactive notifications.
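Two pieces of such a usage layer, a cost preview and proactive threshold alerts, can be sketched as follows. The linear run-rate projection is the simplest possible estimator and ignores growth or seasonality. The 50/80/100% thresholds and the sample figures are hypothetical.

```python
import calendar
from datetime import date

def projected_month_end_spend(spend_to_date: float, today: date) -> float:
    """Linear run-rate estimate: assumes the rest of the month looks like the days so far."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return spend_to_date / today.day * days_in_month

def alerts_due(spend_to_date: float, limit: float,
               thresholds: tuple[float, ...] = (0.5, 0.8, 1.0),
               already_sent: frozenset[float] = frozenset()) -> list[float]:
    """Budget thresholds the customer has crossed but not yet been notified about."""
    used = spend_to_date / limit
    return [t for t in thresholds if used >= t and t not in already_sent]

today = date(2025, 6, 10)
print(projected_month_end_spend(120.0, today))  # run-rate forecast for June
print(alerts_due(120.0, limit=200.0))           # 60% of the limit used so far
```

Tracking `already_sent` keeps notifications from repeating on every usage update, which would otherwise erode the trust the dashboard is meant to build.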

“How do I help users understand what they’re paying for?”

Build transparent dashboards showing usage metrics, cost trends, and outcome logs—similar to what cloud platforms offer.

A/B Testing & Iteration

Once live, your pricing and packaging must evolve with user behavior and market feedback. Treat monetization as a living system.

A/B Test Variables:

- Price points (e.g., $29 vs $39)

- Value units (task vs token vs time)

- Tiered feature sets

- Free trial vs freemium

Track KPIs like:

- Conversion rates

- Average revenue per user (ARPU)

- Usage intensity per plan

- Churn vs satisfaction correlation
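For the conversion-rate KPI, a pooled two-proportion z-test is one common way to check whether a difference between price variants is likely to be real. The sign-up counts below are invented for illustration. In this made-up example, the $39 arm converts less often but earns more per visitor, and the conversion difference (p ≈ 0.08) would not clear a conventional 0.05 significance threshold.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test on conversion rates; returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value

def revenue_per_visitor(conversions: int, visitors: int, price: float) -> float:
    return conversions / visitors * price

# Hypothetical test: $29 vs $39 plan, 2,000 trial sign-ups per arm.
z, p = two_proportion_z_test(120, 2000, 95, 2000)
print(f"z = {z:.2f}, p = {p:.3f}")
print(f"RPV: ${revenue_per_visitor(120, 2000, 29):.4f} vs ${revenue_per_visitor(95, 2000, 39):.4f}")
```

Fix the sample size before the test starts. Stopping as soon as a result looks significant inflates false positives.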

Elasticity of Demand:

Analyze how sensitive customers are to price increases or volume limits. Use this data to refine plans and upsell strategies. If increasing price by 10% drops usage by only 2%, you’ve discovered price inelasticity—a margin opportunity.
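Here is the example from the paragraph above, worked through. Elasticity is the percentage change in usage divided by the percentage change in price. Its absolute value here is 0.2, below 1, so demand is inelastic, and revenue still rises by about 7.8% (1.10 × 0.98 = 1.078). The function names are illustrative. If costs scale with usage, the 2% drop in usage also lowers variable cost, so margin improves by more than revenue does.

```python
def price_elasticity(pct_change_quantity: float, pct_change_price: float) -> float:
    """Simple elasticity: percentage change in usage divided by percentage change in price."""
    return pct_change_quantity / pct_change_price

def revenue_factor(pct_change_price: float, pct_change_quantity: float) -> float:
    """Multiplier on revenue when price and usage move together."""
    return (1 + pct_change_price) * (1 + pct_change_quantity)

e = price_elasticity(-0.02, 0.10)  # +10% price, -2% usage
print(f"elasticity {e:.2f}, inelastic: {abs(e) < 1}")
print(f"revenue changes by {revenue_factor(0.10, -0.02) - 1:+.1%}")
```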

Feedback Loops:

Use customer interviews, sales objections, and support tickets to refine pricing language and packaging logic.

Implementing a monetization model for AI agents isn’t just a finance or product task—it’s an ongoing cross-functional effort involving engineering, growth, design, and customer success. You’re not just selling compute—you’re selling intelligent labor at scale.

To recap, successful implementation requires:

- A clearly defined, measurable unit of value

- Strong margin visibility and LLM cost controls

- Adaptive pricing architecture that matches buyer expectations

- Outcome tracking for high-value, performance-aligned use cases

- Transparent billing and real-time usage dashboards

- Ongoing testing to optimize revenue per user

This blueprint ensures that as your AI agents grow more powerful, your business model grows more sustainable.

5. Go-to-Market Strategy & Pricing Playbooks

Monetizing AI agents at scale depends not only on the technical and economic design of the product, but also on how you position, package, and distribute it. Without a precise go-to-market (GTM) strategy, even the most advanced agents risk underperformance. This section outlines how to clearly articulate value, package your pricing, choose the right distribution channels, and tailor monetization approaches based on your product category.

Positioning & Messaging

Your messaging must answer one question concisely: “What does this agent do, and why is it worth paying for?” In a crowded AI landscape, vague or overly technical descriptions will be ignored. Effective positioning is not just about features—it’s about clearly communicating outcomes and business value.

Principles for Effective Positioning:

- Lead with outcomes, not features. E.g., “Books qualified meetings autonomously” instead of “Uses GPT-4 to generate email copy.”

- Anchor to existing budgets. Agents should map to cost centers like sales ops, customer support, compliance—not R&D or “innovation.”

- Avoid overpromising intelligence. Frame the agent as reliable and focused, not omniscient or human-like.

“What value does this agent deliver to a customer support team?”

It reduces ticket resolution time by 45%, triages inbound queries autonomously, and saves 30–50 hours of support work per month.

Messaging Formula:

[Agent Type] that [Performs Function] so you can [Achieve Outcome]

- “An autonomous sales agent that books qualified calls, so your team spends more time closing, not chasing.”

Back this up with quantified results from internal usage or early adopters—conversion boosts, time savings, deflection rates.

Sales & Packaging

Whether you’re a SaaS company embedding agents into your platform or a startup selling agent-as-a-service tools, the packaging and sales narrative must align with how buyers think—in terms of people and budgets.

Headcount Replacement vs Augmentation:

Frame agents as either:

- FTE replacements (“This agent replaces 1–2 SDRs at 20% of the cost”), or

- Team multipliers (“This agent allows your team to handle 3x the workload”).

In both cases, pricing should be benchmarked against total cost of ownership (TCO) for equivalent human labor, including salary, tools, and time.

Direct Sales Script Tips:

- Open with a pain point: “What’s your current monthly cost per lead?” or “How long does it take to resolve a support ticket?”

- Connect agent capability to quantifiable ROI: “Our agent qualifies leads at $3 each vs your $22 SDR cost.”

- Objection handling: Emphasize control, audit logs, override capabilities, and integration with existing workflows.
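The ROI line in the script can be backed by a simple cost-per-lead comparison. The sketch assumes a hypothetical SDR with an $8,800 fully loaded monthly cost (salary, tools, and time) producing 400 qualified leads, which reproduces the $22 figure. The $3 agent price is the one quoted above.

```python
def cost_per_lead(monthly_cost: float, leads_per_month: int) -> float:
    """Fully loaded monthly cost (salary, tools, time) divided by qualified leads."""
    return monthly_cost / leads_per_month

def monthly_savings(human_cpl: float, agent_cpl: float, leads_per_month: int) -> float:
    return (human_cpl - agent_cpl) * leads_per_month

# Hypothetical SDR: $8,800/month fully loaded, 400 qualified leads/month -> $22/lead.
sdr_cpl = cost_per_lead(8_800, 400)
agent_cpl = 3.0  # per-lead agent price from the script above
print(sdr_cpl, f"{agent_cpl / sdr_cpl:.0%} of human cost",
      monthly_savings(sdr_cpl, agent_cpl, 400))
```

Plugging in the prospect's own cost and lead numbers during the call makes the comparison concrete without relying on generic benchmarks.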

“How do I sell an AI agent to a mid-size enterprise?”

Emphasize savings on headcount, increased output, and seamless integration. Map it to existing roles and KPIs rather than pitching it as experimental tech.

API-First Distribution & Partnerships:

If you’re not going full-stack, adopt an API-first GTM model. This allows partners to embed your agent into their workflow. Offer:

- Pre-built wrappers (e.g., for Slack, Gmail, Salesforce)

- SDKs or Zapier-compatible integrations

- Volume-based pricing for B2B resellers

Strategic partnerships—especially with platforms lacking their own agent layer—create leverage. Examples include developer tools bundling agents for test generation, or CRMs offering AI triage for inbound leads.

Distribution Channels

The success of monetizing agents hinges on finding the right buyers in the right places. There are four major GTM channels for agent products:

1. Agent Marketplaces

AI agent stores (e.g., OpenAI’s GPT Store) promise reach, but are still immature in monetization and discovery. As Wired noted, most agents in the GPT Store remain unmonetized due to:

- Lack of subscription infrastructure

- Inability to differentiate deeply (limited customization)

- Limited business-focused discovery

Workarounds:

- Build parallel landing pages with Stripe integration

- Use “bring-your-own-key” models to monetize via APIs

- Offer a freemium GPT app that funnels to a paid SaaS product

2. Enterprise Integrations

Mid-market and enterprise buyers prefer native integrations into their existing tools. This means your agent should plug into:

- CRMs (Salesforce, HubSpot)

- Project tools (Asana, Jira, Notion)

- Helpdesk systems (Zendesk, Freshdesk)

Bundle integrations into pricing tiers or offer them as add-ons.

3. White-Label & OEM Licensing

Offer white-label versions of your agent to SaaS vendors looking to enhance their own offerings. You maintain the backend; they own the customer relationship. This is common in:

- Healthcare SaaS (e.g., agents for medical note summarization)

- Finance tech (e.g., compliance review agents)

- Legal software (e.g., document parsing agents)

“Can I sell my AI agent to other software vendors?”

Yes—offer white-label options or API licensing so platforms can integrate your agent under their brand while you earn recurring revenue.

4. Product-Led Growth (PLG)

If your agent product is self-serve, PLG is a viable channel. Offer a free tier with usage limits and gradually introduce upsell points:

- More executions

- Advanced reporting

- Team collaboration

Promote usage-based triggers for upgrades (e.g., tokens used, tasks completed).
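Usage-based upgrade triggers can be as simple as comparing consumption against free-tier allowances. The limits, the 80% nudge point, and the returned message keys in this sketch are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class FreeTierLimits:
    executions: int = 100     # placeholder monthly allowances
    tokens: int = 200_000

def upgrade_prompt(executions: int, tokens: int,
                   limits: FreeTierLimits = FreeTierLimits(),
                   nudge_at: float = 0.8) -> Optional[str]:
    """Pick an in-product upsell message based on the most-consumed free-tier allowance."""
    utilization = max(executions / limits.executions, tokens / limits.tokens)
    if utilization >= 1.0:
        return "limit_reached"       # block further runs and show upgrade options
    if utilization >= nudge_at:
        return "approaching_limit"   # soft nudge before the customer hits the wall
    return None

print(upgrade_prompt(executions=85, tokens=40_000))
```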

Monetization by Product Type

Not all agents are monetized the same way. Your pricing and GTM should reflect the nature of your agent and its target market.

A. B2B SaaS Agents

- Sales, support, finance, compliance

- Monetization: seat-based + usage

- Distribution: direct sales, integrations, channel partners

- Key: map agent ROI to operational KPIs (leads, resolution time, costs)

B. B2C Agents

- Productivity, wellness, personal finance, education

- Monetization: freemium → premium, flat monthly fee

- Distribution: app stores, GPT Store, social virality

- Key: emotional UX, trust, retention loops

C. Vertical AI Agents

- Healthcare, legal, real estate, logistics

- Monetization: outcome-based or per-task

- Distribution: white-label, compliance-first sales

- Key: regulatory credibility, interoperability, auditability

D. Infrastructure Agents

- Agent orchestration frameworks, copilots, SDKs

- Monetization: API usage + enterprise licensing

- Distribution: dev communities, GitHub, partnerships

- Key: DevEx, documentation, observability

“How should I monetize a personal AI agent vs a B2B one?”

Consumer agents typically follow a freemium or subscription model, while B2B agents rely on usage-based or outcome-aligned pricing tied to operational savings or revenue generation.

A successful GTM for AI agents combines clear value communication, intelligent pricing alignment, and strategic distribution. You’re not just offering AI—you’re offering automated labor with measurable ROI. Treat your agents like products that solve real business problems, not technical showcases.

6. Risk & Compliance Considerations

While the potential for monetizing AI agents is significant, so are the risks. Deploying autonomous systems that make decisions, access sensitive data, or interact with external systems introduces critical liabilities for developers and businesses. Startups and enterprises alike must implement controls to manage cost exposure, comply with regional regulations, and mitigate legal and operational risks. This section outlines the four major risk categories and prescribes practical safeguards for each.

Cost Overruns & Usage Unpredictability

One of the most immediate risks of running AI agents, especially those powered by large language models, is unpredictable backend costs. Traditional software has relatively predictable compute requirements. Agents, by contrast, may consume variable amounts of resources depending on prompt length, user interaction depth, or external API calls.

Common Scenarios:

- Token sprawl: poorly designed prompts or unbounded loops drive up token usage

- User abuse: customers unknowingly (or maliciously) trigger high-cost actions repeatedly

- System triggers: agents recursively calling sub-agents or APIs in ways not anticipated

“How can I prevent my AI agent from racking up unexpected charges?”

Set strict usage limits, implement circuit breakers, and display real-time usage dashboards to your customers.

Mitigation Strategies:

- Token and time limits per task: Hard cap on maximum tokens or execution duration.

- Per-user quotas: Restrict volume by user or organization tier.

- Rate limiting and retry throttles: Prevent high-frequency API execution.

- Cost analytics dashboards: Offer transparent usage views for internal teams and customers.
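The first three safeguards can be sketched as a token-bucket rate limiter plus a pre-execution budget check. The bucket counts "permits" to avoid confusion with LLM tokens. All limits are placeholders, and `estimated_tokens` is assumed to come from your own estimate of a task's size. A hard cap should also be enforced at call time through the output-length limit your model provider offers.

```python
import time
from typing import Callable

class TokenBucket:
    """Token-bucket rate limiter: refills `rate` permits per second, up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.permits = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        self.permits = min(self.capacity, self.permits + (now - self.last) * self.rate)
        self.last = now
        if self.permits >= 1:
            self.permits -= 1
            return True
        return False

class BudgetExceeded(Exception):
    pass

def check_budget(estimated_tokens: int, org_tokens_used: int, *,
                 max_tokens_per_task: int, org_monthly_quota: int) -> None:
    """Refuse a task before it runs if it would break the per-task cap or the org quota."""
    if estimated_tokens > max_tokens_per_task:
        raise BudgetExceeded("per-task token cap exceeded")
    if org_tokens_used + estimated_tokens > org_monthly_quota:
        raise BudgetExceeded("organization monthly quota exhausted")

limiter = TokenBucket(rate=2.0, capacity=5)  # about 2 agent runs/second, bursts of 5
if limiter.allow():
    check_budget(6_000, org_tokens_used=990_000,
                 max_tokens_per_task=8_000, org_monthly_quota=1_000_000)
```

Injecting `clock` keeps the limiter testable without sleeping. In a multi-instance deployment, the bucket state would live in a shared store rather than in process memory.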

Integrate anomaly detection to flag cost spikes in real time. Build alerts for your DevOps team and pause execution on hitting threshold limits.
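One simple form of anomaly detection is a z-score against a trailing window of spend, with execution paused when a spike appears. The threshold of 3 standard deviations, the 7-point minimum history, and the sample data are hypothetical. Other detectors (percentile bands, seasonal baselines) may suit bursty workloads better.

```python
from statistics import mean, stdev

def is_cost_spike(history: list[float], current: float,
                  z_threshold: float = 3.0, min_history: int = 7) -> bool:
    """Flag `current` spend if it sits more than z_threshold standard deviations above the window."""
    if len(history) < min_history:
        return False                 # too little data to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current > mu          # perfectly flat baseline: any rise is unusual
    return (current - mu) / sigma > z_threshold

class SpendGuard:
    """Pauses new agent runs when a spike is detected, until someone resumes them."""

    def __init__(self) -> None:
        self.paused = False

    def observe(self, history: list[float], current: float) -> None:
        if is_cost_spike(history, current):
            self.paused = True       # also the place to alert the DevOps on-call

hourly_spend = [10, 11, 9, 10, 12, 10, 11]  # hypothetical trailing hourly spend (USD)
guard = SpendGuard()
guard.observe(hourly_spend, 30)
print(guard.paused)
```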

Data Privacy & Regulatory Compliance

AI agents frequently handle personal, financial, or operational data—placing them squarely under the purview of privacy laws such as:

- GDPR (EU)

- DPDP Act (India)

- HIPAA (USA, for healthcare)

- CCPA (California)

These frameworks emphasize data minimization, consent, purpose limitation, and auditability—each of which can be easily violated by generative agents unless designed carefully.

Key Privacy Risks:

- Inadvertent retention or processing of PII in LLM memory or logs

- Use of non-compliant third-party LLM APIs that store or train on user data

- Lack of clear opt-in/opt-out flows

“Is my AI agent compliant with GDPR or Indian DPDP law?”

Only if it avoids storing or transmitting PII without a valid legal basis (such as explicit consent), uses processors that are contractually bound to protect data, and provides clear mechanisms for data access and deletion.

Best Practices:

- Use zero-retention LLM endpoints where possible (e.g., OpenAI’s Zero Data Retention option, available to eligible API customers).

- Clearly disclose how data is processed, including in agent UI or API documentation.

- Implement data segregation per tenant or user.

- For high-risk verticals, consider on-premise or private deployments, either of open-weight models such as Llama and Mistral or of commercial models offered for private hosting, such as Cohere’s.

In India, the DPDP Act (2023) requires a clearly defined purpose for processing personal data and places accountability obligations on data fiduciaries. Enterprise deployments in India should consider local hosting or sovereign cloud solutions to avoid cross-border data issues.

Liability & Error Handling

Unlike static code, agents act dynamically—retrieving content, taking actions, or giving advice. This creates shared liability between the platform provider and the user, especially if the agent makes decisions that lead to tangible loss or harm.

Example Risk Areas:

- A medical agent gives incorrect dosage recommendations

- A financial agent misclassifies a compliance alert

- A scheduling agent double-books critical meetings

In all cases, the line between tool and decision-maker blurs. Regulators and courts have not yet settled how liability applies to AI agents, but responsibility is likely to fall largely on the developer or platform.

“Who is responsible if an AI agent makes a mistake?”

Your platform could be held liable, especially in regulated industries or when the agent acts autonomously without review. Strong disclaimers and override mechanisms reduce that exposure, but they do not eliminate it.

Recommendations:

- Disclaimers and warnings: Every agent interaction should include context-specific disclaimers, especially for health, legal, or financial advice.

- Audit trails: Store logs of agent reasoning, actions taken, and human overrides.

- Fail-safes and human-in-the-loop: For critical workflows, build checkpoints that require human validation before execution.

- Liability clauses in Terms of Service: Specify usage limits, disclaim warranties, and define where responsibility lies in the event of errors.

For enterprises, contracts should include indemnification limits and insurance-backed protections.
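The fail-safe and audit-trail recommendations can be combined in a single checkpoint that every agent action passes through. This is a minimal sketch, and several names in it are hypothetical: the `HIGH_RISK_ACTIONS` set, the `ActionGate` class, and the `approver` callback. The callback stands in for a human review queue.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List

# Hypothetical set of actions that always need human sign-off.
HIGH_RISK_ACTIONS = {"send_payment", "update_dosage", "delete_record"}


class ActionRejected(Exception):
    """Raised when a human reviewer declines a proposed agent action."""


@dataclass
class ProposedAction:
    name: str
    params: Dict[str, Any]
    rationale: str                      # agent's stated reasoning, kept for audits


@dataclass
class ActionGate:
    """Human-in-the-loop checkpoint that also writes an audit trail."""
    approver: Callable[[ProposedAction], bool]     # e.g., a review-queue UI
    audit_log: List[Dict[str, Any]] = field(default_factory=list)

    def execute(self, action: ProposedAction,
                run: Callable[[Dict[str, Any]], Any]) -> Any:
        needs_review = action.name in HIGH_RISK_ACTIONS
        approved = self.approver(action) if needs_review else True
        # Every attempt is logged, including rejected ones.
        self.audit_log.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "action": action.name,
            "params": action.params,
            "rationale": action.rationale,
            "human_reviewed": needs_review,
            "approved": approved,
        })
        if not approved:
            raise ActionRejected(f"{action.name} was declined by a reviewer")
        return run(action.params)
```

Low-risk actions pass straight through but are still logged. The audit trail therefore covers both automated and human-reviewed decisions.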

Security, Access Control, and Auditability

As AI agents connect to third-party APIs, CRMs, internal databases, or cloud storage, they become attack surfaces. Agents that are over-privileged, poorly validated, or improperly sandboxed can act as vectors for unauthorized data access or even privilege escalation.

Key Attack Vectors:

- Prompt injection: Malicious input manipulates the agent’s behavior

- Overbroad API scopes: Agent has access to functions it shouldn’t invoke

- No authentication/authorization: Unverified users triggering agent actions

- Log leakage: Sensitive data stored or exposed in plaintext

“Can AI agents be hacked or exploited?”

Yes—if you don’t properly sandbox their behavior, secure their API access, and validate inputs, they can be manipulated or misused.

Security Protocols:

- OAuth-based access control for third-party services

- Role-based agent permissions to restrict actions per user

- Tokenized, expiring credentials for sensitive endpoints

- Prompt sanitization and context checks to prevent malicious input

- Encrypted agent logs with restricted access
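Role-based permissions and short-lived credentials can be sketched with Python’s standard `hmac` module. This is an illustrative assumption, not a production auth scheme. The role-to-tool map, the `user|tool|expiry|signature` token format, and the function names are all hypothetical, and user IDs are assumed not to contain `|`. Real deployments would typically rely on OAuth access tokens or signed JWTs issued by an identity provider.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical role-to-tool mapping: each role may invoke only these agent tools.
ROLE_TOOLS = {
    "viewer": {"search_crm"},
    "sales_rep": {"search_crm", "draft_email"},
    "admin": {"search_crm", "draft_email", "export_contacts"},
}

SECRET = b"replace-with-a-key-from-your-secrets-manager"  # placeholder only


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_credential(user_id: str, tool: str, ttl_s: int = 300,
                     now: Optional[float] = None) -> str:
    """Mint a short-lived credential scoped to one user and one tool."""
    issued = time.time() if now is None else now
    payload = f"{user_id}|{tool}|{int(issued + ttl_s)}"
    return f"{payload}|{_sign(payload)}"


def authorize(role: str, token: str, tool: str,
              now: Optional[float] = None) -> bool:
    """Allow a tool call only if the role permits it and the credential is valid."""
    if tool not in ROLE_TOOLS.get(role, set()):
        return False                    # role-based restriction
    parts = token.split("|")
    if len(parts) != 4:
        return False
    user_id, scoped_tool, expires, sig = parts
    expected = _sign(f"{user_id}|{scoped_tool}|{expires}")
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return False                    # forged or tampered credential
    current = time.time() if now is None else now
    return scoped_tool == tool and current < int(expires)
```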

Auditability is equally important. For enterprise sales, customers will ask:

- “Can we see what actions the agent took?”

- “Is there an immutable record of interactions?”

- “Can we disable, pause, or rollback agent actions?”

Build dashboards that answer these questions clearly.
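The “immutable record” question can be addressed with a hash-chained log. Each entry includes the hash of the previous entry, so any later edit becomes detectable. The sketch below assumes an in-memory list and SHA-256, and the class name and entry fields are hypothetical. Hash chaining gives tamper evidence, not tamper prevention. For stronger guarantees, pair it with write-once storage or publish the latest hash externally.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


class HashChainedAuditLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(entry: Dict[str, Any]) -> str:
        # Canonical JSON (sorted keys) so the hash is reproducible on verify.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, agent_id: str, action: str, detail: Dict[str, Any]) -> Dict[str, Any]:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "action": action,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or deleted entry breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```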

The path to monetizing AI agents is not without friction. Each new capability introduced—task execution, data access, workflow automation—comes with legal, financial, and reputational risks. However, these can be effectively mitigated with thoughtful architecture, robust compliance frameworks, and user-transparent controls.

Startups must build risk mitigation into the product from day one. Enterprises should treat AI agents as regulated systems—subject to audits, access controls, and legal review. By operationalizing these principles, companies can confidently scale agentic products into complex, high-stakes environments without compromising trust or compliance.

7. Emerging Models & Ecosystem Trends

The commercialization of AI agents is not static—it’s evolving in parallel with foundational shifts in infrastructure, platform dynamics, and governance. As agent usage scales across verticals and user types, the surrounding ecosystem is adapting. Emerging models are not just improving the capabilities of agents themselves, but reshaping how they are distributed, paid for, and governed.

This section outlines four pivotal developments: the rise of agentic commerce infrastructure, the restructuring of platforms around agent middleware, the emergence of responsible agent frameworks, and the central role product managers play in maintaining and optimizing agent behavior.

Infrastructure for Agentic Commerce

Traditional SaaS billing, authentication, and licensing systems are inadequate for monetizing autonomous, API-driven agents. Monetization now requires a new class of infrastructure purpose-built for agentic commerce—the orchestration of identity, payments, provisioning, and accountability across agents that function dynamically and contextually.

Key Features of Emerging Infrastructure:

- Persistent Agent Identity: Each agent (not just the user) has a unique, verifiable ID and associated capabilities. This supports reputation systems, credential management, and agent-level analytics.

- Programmable Payment Flows: Agents need access to financial instruments (wallets, metered billing, subscriptions) so they can trigger transactions—either on behalf of a user or autonomously.

- Fine-Grained Entitlements: Systems must control not just user access, but which agents can execute which functions under which conditions.

- Usage Attribution: Multi-agent architectures need mechanisms to track which agent initiated a task or used tokens, to allocate cost and value precisely.
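In a multi-agent system, usage attribution largely comes down to tagging every LLM call with the calling agent and its parent, then rolling costs up the call tree. The sketch below assumes a simple event shape (`UsageEvent`) and placeholder per-million-token prices. Neither reflects a specific provider’s API or pricing.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class UsageEvent:
    agent_id: str
    parent_agent_id: Optional[str]      # None for the agent the user invoked directly
    input_tokens: int
    output_tokens: int


# Placeholder prices in USD per million tokens; use your provider's actual rates.
PRICE_PER_M = {"input": 3.00, "output": 15.00}


def event_cost(e: UsageEvent) -> float:
    return (e.input_tokens * PRICE_PER_M["input"]
            + e.output_tokens * PRICE_PER_M["output"]) / 1_000_000


def attribute_costs(events: List[UsageEvent]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Return (direct cost per agent, rolled-up cost per root agent).

    Assumes agent IDs are unique within one task tree and the tree has no cycles.
    """
    direct: Dict[str, float] = defaultdict(float)
    parent_of: Dict[str, Optional[str]] = {}
    for e in events:
        direct[e.agent_id] += event_cost(e)
        parent_of[e.agent_id] = e.parent_agent_id
    rolled_up: Dict[str, float] = defaultdict(float)
    for agent, cost in direct.items():
        root = agent
        while parent_of.get(root) is not None:   # walk up to the initiating agent
            root = parent_of[root]
        rolled_up[root] += cost
    return dict(direct), dict(rolled_up)
```

Direct costs show which sub-agent is expensive. Rolled-up costs show what the user-facing task actually cost to serve, which is the figure pricing decisions need.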

“Do AI agents need their own identities and payment systems?”

Yes—especially as agents become persistent entities that transact or operate independently, they require a digital wallet, usage quota, and verifiable identity.

We are seeing early examples in frameworks like LangChain (with session/agent IDs), toolkits enabling AI wallets, and identity protocols for agents built on decentralized ID standards. Future billing platforms may resemble Stripe for Agents: supporting multi-agent billing, tokenized payments, and cross-agent reconciliation.

Superplatform Disruption: The Rise of Agentic Middle Layers

One of the most important but underexplored dynamics in the AI economy is the shift from attention-based platforms (e.g., Google Search, Facebook) to agentic platforms—where value is mediated not through ads or clicks, but through task automation and outcome delivery.

This gives rise to agentic middle layers—infrastructures or orchestration systems that sit between users and services, mediating interactions through AI agents.

Implications:

- Platforms may lose control over user journeys as agents automate discovery, filtering, and decision-making.

- Interface primacy declines; what matters is which agent is executing the task, not which site or UI the user sees.

- New entrants (e.g., Cognosys, Superagent) can build value by controlling the agent routing and orchestration layer—even if they don’t own the foundation models or APIs.

“Will AI agents replace apps and search engines?”

Not directly—but as agents handle more decisions, they’ll mediate access to services, reducing direct user interaction with traditional interfaces.

This is analogous to what AWS Lambda did for backends, decoupling application code from server management. In this case, agents decouple user outcomes from dependence on any particular interface. As more enterprises adopt API-first models, the orchestration layer becomes the new distribution channel.

Responsible AI Agent Frameworks

As AI agents transition from “experiments” to production tools, there’s a growing demand for responsible deployment frameworks. Unlike static models, agents act, adapt, and influence systems—raising questions about control, bias, safety, and accountability.

Emerging best practices now include:

- Intent tracing: Tracking what goal the agent pursued, how it chose tools, and why it took a given action

- Behavioral constraints: Guardrails that limit scope, execution frequency, or access based on predefined risk profiles

- Transparency & Explainability: Allowing humans to inspect agent reasoning, especially for regulated industries like finance or healthcare

- Governance APIs: Interfaces to review, halt, or override agent behavior remotely

“How can I make sure my AI agent doesn’t behave unpredictably?”

Use intent tracing, tool access control, and real-time override systems to monitor and govern autonomous actions.
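Intent tracing, tool access control, and a remote override can share one execution wrapper. The following is a minimal sketch under stated assumptions. `GovernedAgent`, `IntentTrace`, and the outcome labels are hypothetical names. The `halt()` and `resume()` methods stand in for an authenticated governance API.

```python
import threading
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


@dataclass
class IntentTrace:
    """Records the goal plus every step the agent attempted and why."""
    goal: str
    steps: List[Dict[str, Any]] = field(default_factory=list)


class GovernedAgent:
    """Executes tools under a behavioral allowlist and a remote halt switch."""

    def __init__(self, allowed_tools: Set[str],
                 tools: Dict[str, Callable[..., Any]]) -> None:
        self.agent_id = str(uuid.uuid4())
        self.allowed_tools = allowed_tools
        self.tools = tools
        self._halted = threading.Event()   # thread-safe flag an operator can flip

    # Governance hooks; in production these would sit behind an authenticated API.
    def halt(self) -> None:
        self._halted.set()

    def resume(self) -> None:
        self._halted.clear()

    def step(self, trace: IntentTrace, tool: str, reason: str,
             args: Dict[str, Any]) -> Any:
        if self._halted.is_set():
            outcome = "halted"
        elif tool not in self.allowed_tools:
            outcome = "blocked"
        else:
            outcome = "executed"
        # Log the attempt before acting, so blocked and halted steps are traceable too.
        trace.steps.append({"tool": tool, "reason": reason,
                            "args": args, "outcome": outcome})
        if outcome == "halted":
            raise RuntimeError(f"agent {self.agent_id} halted by operator")
        if outcome == "blocked":
            raise PermissionError(f"tool {tool!r} is outside this agent's allowlist")
        return self.tools[tool](**args)
```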

Frameworks like Microsoft’s Responsible Agents and academic proposals published on arXiv are exploring how to encode ethical principles, role-based permissions, and incident logging. Some of these ideas are already filtering into enterprise deployments, with internal policies requiring agent activity reports, third-party audits, and bias documentation.

In 2024–2025, multiple incidents (e.g., hallucinated medical summaries, agent-led social engineering) have accelerated the push for these frameworks. Expect to see responsibility by design become a prerequisite for enterprise-scale adoption.

Role of Product Managers in Feedback Loops

AI agents are not static features—they are systems that learn, fail, and iterate. The role of product managers (PMs) in AI agent teams is becoming more operational, analytical, and continuous. PMs must now function as:

- Behavioral curators, adjusting prompts, tool access, and fallback strategies based on usage logs

- Instrumentation architects, defining what should be logged, how user feedback is captured, and where interventions happen

- Cross-functional translators, collaborating across engineering, data science, and compliance to iterate safely

Key Responsibilities for PMs:

- Define KPIs for agent success beyond accuracy: user trust, time saved, error rate

- Monitor feedback loops: human override frequency, support tickets, dissatisfaction signals

- A/B test agent behavior: tone, verbosity, strategy for multi-step tasks

- Manage user escalation paths: when agents fail or underperform, how are users supported?
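Two of these responsibilities, A/B testing and KPI monitoring, are straightforward to instrument from interaction logs. The sketch assumes a hypothetical log schema with the fields `variant`, `overridden`, `escalated`, and `minutes_saved`. It uses hash-based bucketing so that a user sees the same variant across sessions.

```python
import hashlib
from typing import Any, Dict, List, Sequence


def assign_variant(user_id: str, experiment: str,
                   variants: Sequence[str] = ("control", "concise")) -> str:
    """Deterministically bucket a user so they see the same variant every session."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def agent_kpis(interactions: List[Dict[str, Any]]) -> Dict[str, Dict[str, float]]:
    """Per-variant override rate, escalation rate, and average minutes saved."""
    by_variant: Dict[str, Dict[str, float]] = {}
    for row in interactions:
        s = by_variant.setdefault(row["variant"],
                                  {"n": 0, "overrides": 0, "escalations": 0, "minutes": 0.0})
        s["n"] += 1
        s["overrides"] += row["overridden"]      # bools count as 0 or 1
        s["escalations"] += row["escalated"]
        s["minutes"] += row["minutes_saved"]
    return {
        v: {
            "override_rate": s["overrides"] / s["n"],
            "escalation_rate": s["escalations"] / s["n"],
            "avg_minutes_saved": s["minutes"] / s["n"],
        }
        for v, s in by_variant.items()
    }
```

Rates computed from small samples are noisy. Compare variants with a significance test before promoting one to the default.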

“What does a product manager do for an AI agent?”

They monitor how the agent performs, tune its behavior, manage user feedback loops, and ensure the agent continuously improves while staying aligned with user goals and compliance constraints.

By codifying agent behavior as product logic—just like UI decisions or feature toggles—PMs play a key role in keeping agents safe, useful, and market-ready.

The agent ecosystem is rapidly maturing—from single-task chatbots to autonomous workflows, from hardcoded agents to programmable orchestration, and from opaque interactions to governed behavior. The emergence of agentic commerce infrastructure, the disruption of traditional platforms by AI middle layers, and the institutionalization of responsibility frameworks are laying the foundation for long-term commercial viability.

Forward-looking teams are investing not only in building agents, but in surrounding them with systems for identity, governance, iteration, and monetization. As these models evolve, they will define the next generation of software—and how we interact with it.

8. Case Examples & In-Depth Use-Cases

Real-world implementations of AI agents reveal the practical nuances behind monetization, deployment, and performance measurement. This section explores four illustrative case studies, covering five companies, across consumer and enterprise contexts. These examples span subscription models, marketplace monetization constraints, VC-backed agent startups, and outcome-based enterprise integrations, demonstrating both the opportunities and the trade-offs inherent in AI agent commercialization.

GPT Store: Monetization Constraints and Creative Workarounds

When OpenAI launched the GPT Store in early 2024, it promised to democratize agent distribution by allowing anyone to publish, promote, and monetize GPT-powered agents. However, as detailed in Wired’s reporting, the monetization framework proved underwhelming.

Observed Limitations:

- No direct revenue share: early GPT Store iterations lacked a structured payout model for agent creators.

- Limited discovery tools: saturation made it difficult for niche or high-value agents to gain traction.

- No usage-based billing or subscriptions: creators had no built-in way to charge per use or offer subscription tiers.

Community Workarounds:

- Creators embedded Stripe paywalls on landing pages and redirected users from the GPT agent to full SaaS versions.

- Others built “freemium GPTs” that offered sample outputs and then upsold access to proprietary tools or templates outside the Store.

- A few leveraged email list building to convert free users into subscribers elsewhere.

“Can I make money from publishing in the GPT Store?”

Not directly yet. Most creators use it for lead gen and funnel users to monetized SaaS or consulting services outside the platform.

Implication:

The GPT Store serves as a top-of-funnel discovery layer, not a standalone monetization platform. Until OpenAI introduces formal monetization tools (e.g., subscriptions, pay-per-use), GPT creators must rely on external channels to capture value.

Consensus GPT: Research Aggregation Agent with Marketing-Led Growth

Consensus GPT is a research-focused agent that summarizes scientific literature using LLMs and retrieval-augmented generation (RAG). It targets professionals, researchers, and students seeking fact-based answers grounded in peer-reviewed papers.

Business Model:

- Freemium SaaS: Basic question-answering is free, but premium tiers offer citation tracing, advanced filtering, and access to proprietary ranking models.

- VC-funded growth strategy: The company raised over $3 million to invest in infrastructure, marketing, and institutional partnerships.

Marketing & Monetization Highlights:

- Built virality through LLM-integrated branding: e.g., “Ask Consensus instead of ChatGPT for science-backed answers.”

- Ran high-conversion campaigns targeting academic LinkedIn and Twitter audiences

- Achieved a CAC-to-LTV ratio of 1:7 within six months post-launch

“How do I monetize an AI agent for research or education?”

Offer a freemium experience, then upsell advanced filters, citation tracking, or API access to professionals and institutions.

Lessons Learned:

- Trust and explainability are critical in factual domains.

- Academic and B2B audiences prefer usage predictability—freemium with soft limits works better than hard paywalls.

- Clear attribution to real sources (PDFs, DOIs) increases credibility and perceived value.

Salesforce: Embedded Agent Monetization via Outcome-Based Upselling

Salesforce has aggressively embedded LLM-powered agents across its ecosystem via Einstein Copilot (since rebranded as Agentforce). These agents assist users with sales forecasting, email generation, support ticket triage, and pipeline updates without leaving the core CRM interface.

Monetization Strategy:

- Bundled agent access into enterprise pricing tiers

- Outcome-aligned upsells: Features like predictive lead scoring or next-best action suggestions are paywalled behind higher plans

“How does a platform like Salesforce monetize its AI agents?”

By embedding agents in core workflows and charging more for tiers that unlock outcome-boosting capabilities like deal scoring or workflow acceleration.

Why It Works:

- Salesforce customers are accustomed to tiered SaaS pricing and are willing to pay for measurable performance improvements.

- Agent functionality improves time-to-close, forecast accuracy, and sales team efficiency—all tied to revenue metrics.

Takeaway:

Don’t always sell the agent itself—sell the outcome the agent improves, and package that within broader platform upgrades.

Zendesk & Microsoft: Feedback Loops and PM-Led Agent Tuning

Zendesk has integrated agents into its helpdesk suite under the “AI-powered ticket resolution” label. These agents auto-categorize tickets, suggest resolutions, and escalate when needed.

Monetization Mechanism:

- Feature gating: Basic AI suggestions included in standard tiers; full automation (e.g., autonomous ticket replies) requires Enterprise licenses

- Volume-based billing: Some plans include a quota of AI-assisted tickets, with overage charges applied
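The quota-plus-overage mechanism reduces to simple arithmetic. The sketch below uses made-up figures purely to illustrate the calculation: a $500 base fee, 1,000 included AI-assisted tickets, and $1.50 per extra ticket. These are not Zendesk’s actual prices. `Decimal` avoids floating-point rounding errors on invoices.

```python
from decimal import ROUND_HALF_UP, Decimal
from typing import Dict, Union


def monthly_invoice(base_fee: Decimal, included_tickets: int, used_tickets: int,
                    overage_rate: Decimal) -> Dict[str, Union[Decimal, int]]:
    """Base subscription plus per-ticket overage beyond the included AI quota."""
    overage_units = max(0, used_tickets - included_tickets)
    overage = (overage_rate * overage_units).quantize(Decimal("0.01"), ROUND_HALF_UP)
    return {"base": base_fee, "overage_units": overage_units,
            "overage": overage, "total": base_fee + overage}
```

For example, `monthly_invoice(Decimal("500.00"), 1000, 1250, Decimal("1.50"))` yields 250 overage tickets, a $375.00 overage charge, and an $875.00 total.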

Microsoft, in contrast, emphasizes the product management feedback loop in agent deployment. PMs at Microsoft working on Copilot agents are responsible for:

- Monitoring human override rates

- Analyzing task completion accuracy

- Running prompt A/B tests

- Mapping errors to training data gaps or design flaws

“What does it take to maintain a high-performance enterprise AI agent?”

Continuous monitoring, prompt refinement, customer feedback analysis, and a product manager who treats the agent like a dynamic system—not a static feature.

Shared Insight:

- PM ownership of the agent lifecycle leads to faster iteration and safer scaling.

- Enterprise buyers care deeply about auditability, override capability, and compliance traceability.

These examples illustrate that monetizing AI agents is not a one-size-fits-all exercise. The strategy must align with the use case, audience, integration depth, and performance measurability. Whether through direct subscriptions, embedded upsells, or freemium funnels, the path to sustainable agent revenue lies in delivering clear, repeatable value—measured not in features, but in outcomes.

9. Go-to-Scale & Roadmap

Building and monetizing an AI agent is only the beginning. Scaling that agent into a durable business requires operational discipline, infrastructure resilience, strategic market expansion, and continuous product evolution. This section outlines a pragmatic roadmap for startups and enterprises to grow their AI agent platforms sustainably—across verticals, markets, and distribution models.

Scaling Infrastructure and Cost Control

As usage grows, the backend cost curve becomes one of the most critical drivers of profitability. AI agents, especially those built on large language models (LLMs), incur costs that do not scale neatly with user count: input lengths are unpredictable, concurrency spikes, and task complexity keeps expanding.

Key Priorities for Scalable Infrastructure:

- Model optimization: Use smaller, lower-cost models (e.g., GPT-4o mini, Claude Haiku) for non-critical tasks and reserve frontier-class models for high-stakes workflows.

- Token compression strategies: Prune prompt history, use embeddings for context recall, and limit verbose output formatting.

- Orchestration and agent routing: Implement intelligent switches to delegate tasks between agents or tools based on task complexity and cost profiles.

- Observability stack: Log and monitor every agent decision, token count, and third-party API call to trace both errors and cost drivers.
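Model routing can begin as a simple rules-based dispatcher and later graduate to a learned classifier. In this sketch, the tier names, prices, and keyword heuristic are all placeholders chosen for illustration. They are not real model identifiers or rates.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelTier:
    name: str                  # hypothetical label, not a real model ID
    cost_per_1k_tokens: float  # placeholder price
    max_complexity: int        # highest complexity score this tier should handle


# Ordered cheapest first.
TIERS: List[ModelTier] = [
    ModelTier("small-fast", 0.0005, 3),
    ModelTier("mid", 0.003, 6),
    ModelTier("frontier", 0.015, 10),
]


def complexity_score(task: str, high_stakes: bool) -> int:
    """Crude heuristic: longer, multi-step, or high-stakes tasks score higher."""
    if high_stakes:
        return 10              # always send regulated or critical work to the top tier
    score = min(len(task) // 200, 4)
    keywords = ("analyze", "plan", "compare", "multi-step")
    score += 2 * sum(kw in task.lower() for kw in keywords)
    return min(score, 10)


def route(task: str, high_stakes: bool = False) -> ModelTier:
    """Pick the cheapest tier whose ceiling covers the task's complexity."""
    score = complexity_score(task, high_stakes)
    for tier in TIERS:
        if score <= tier.max_complexity:
            return tier
    return TIERS[-1]
```

Logging the chosen tier alongside token counts lets the observability stack show whether routing is actually shifting volume to cheaper models.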

“How do I keep AI agent costs under control as I scale?”

Use smart routing across models, limit token usage through compression, and continuously track agent-level cost analytics to optimize for margins.

Additionally, cache common queries and their responses where possible to avoid redundant LLM calls, and precompute agent logic for frequent workflows so they do not need a fresh model call every time.
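A response cache for repeated queries can be as simple as an LRU map with a time-to-live. The sketch below assumes a hypothetical `call_llm` callable and normalizes prompts by case and whitespace. Cache only responses that are neither personalized nor time-sensitive. Otherwise, two users asking the same question will receive the same stored answer.

```python
import hashlib
import time
from collections import OrderedDict
from typing import Callable, Tuple


class ResponseCache:
    """LRU cache with a TTL for LLM responses, keyed on a normalized prompt."""

    def __init__(self, max_items: int = 10_000, ttl_s: float = 3600,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_items = max_items
        self.ttl_s = ttl_s
        self.clock = clock
        self._store: "OrderedDict[str, Tuple[float, str]]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        normalized = " ".join(prompt.lower().split())   # collapse case and whitespace
        return hashlib.sha256(f"{model}\x00{normalized}".encode()).hexdigest()

    def get_or_call(self, model: str, prompt: str,
                    call_llm: Callable[[str], str]) -> str:
        key = self._key(model, prompt)
        entry = self._store.get(key)
        if entry is not None and self.clock() - entry[0] < self.ttl_s:
            self._store.move_to_end(key)        # mark as recently used
            self.hits += 1
            return entry[1]
        self.misses += 1
        response = call_llm(prompt)
        self._store[key] = (self.clock(), response)
        self._store.move_to_end(key)
        if len(self._store) > self.max_items:
            self._store.popitem(last=False)     # evict the least recently used entry
        return response
```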

Expanding to New Verticals and Hybrid Products

One of the highest-leverage growth strategies is extending successful agent models into adjacent verticals or bundling them into hybrid product suites.

Expansion Tactics:

- Vertical cloning: Adapt an agent originally built for sales (e.g., Alta) to real estate, legal prospecting, or fundraising outreach.

- Workflow generalization: Convert specific functions (e.g., “triage support tickets”) into abstracted modules usable across industries.

- Hybrid product bundling: Combine agents with dashboards, analytics, or workflow builders to increase value and upsell potential.

This modular approach enables the reuse of your underlying orchestration layer and foundation models, reducing development overhead while unlocking new revenue streams.

Example: A compliance-checking agent in fintech can be repurposed for legal tech by swapping its domain-specific prompt templates and APIs.

“Can I reuse my AI agent in different industries?”

Yes, if you modularize its core logic. You can then adapt the agent to a new vertical by changing mainly its data sources, prompt templates, language models, and outcome metrics.

Global Expansion: Capturing Growth in Emerging Markets

AI adoption is accelerating globally, particularly in fast-growing regions like Asia and the Middle East. India, for instance, is projected to grow from an $8 billion AI market in 2025 to $17 billion by 2027, with government initiatives and private investment accelerating enterprise AI adoption in sectors like healthcare, education, fintech, and logistics.

Localization Strategy:

- Language support: Enable multilingual prompts, local LLM routing, and region-specific tone adaptations.

- Data residency compliance: Use regionally hosted LLM endpoints or deploy private open-source models to comply with data localization rules (especially under India’s DPDP Act).

- Channel alignment: Leverage WhatsApp, SMS, and mobile-first interfaces for agent deployment in markets where email is underused.

Pricing Adjustments:

- Implement tiered regional pricing based on purchasing power parity (PPP).

- Offer freemium or usage-capped tiers to encourage initial adoption.
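PPP-based regional pricing amounts to a multiplication with a floor. The factors and prices below are hypothetical placeholders, not real PPP values. The floor prevents a discounted price from falling below what it costs to serve a customer.

```python
from decimal import Decimal
from typing import Dict, Optional

BASE_PRICE_USD = Decimal("49.00")   # hypothetical list price for a "Pro" tier
FLOOR_USD = Decimal("9.00")         # hypothetical floor: unit cost plus minimum margin

# Hypothetical PPP adjustment factors (1.00 = base market). Derive real ones from
# published PPP data and review them with finance before launch.
PPP_FACTORS: Dict[str, Decimal] = {
    "US": Decimal("1.00"),
    "IN": Decimal("0.35"),
    "ID": Decimal("0.40"),
}


def regional_price(country: str,
                   factors: Optional[Dict[str, Decimal]] = None) -> Decimal:
    """Scale the base price by a PPP factor, never dropping below the cost floor."""
    table = PPP_FACTORS if factors is None else factors
    factor = table.get(country, Decimal("1.00"))   # unknown markets pay list price
    return max(BASE_PRICE_USD * factor, FLOOR_USD).quantize(Decimal("0.01"))
```

With these placeholder values, India prices at $17.15 and Indonesia at $19.60, and any unlisted market pays the $49.00 list price.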

“How can I launch my AI agent product in India or Southeast Asia?”

Localize the language and interface, ensure compliance with data residency laws, and offer pricing that reflects regional income levels.

Licensing, Marketplaces, and Ecosystem Play

Beyond direct sales and subscriptions, scaling an AI agent business increasingly depends on platform plays—licensing, marketplaces, and ecosystem integration.

Licensing Models:

- White-label offerings: Let SaaS vendors embed your agent as a co-branded or invisible layer in their product. They handle sales and UX; you power the backend.

- API-based monetization: Offer agent functionality as a service (Agent-as-a-Platform), with pay-per-task or credit-based billing.

Marketplace Distribution:

- Publish agents on GPT Store, Zapier, Salesforce AppExchange, or Microsoft Teams Marketplace to gain discovery and credibility.

- Use these channels as lead-generation funnels, not core revenue drivers.

Ecosystem Playbook:

- Integrate with best-in-class SaaS tools (Notion, Jira, Slack, Trello) to reduce onboarding friction.

- Build a partner program for resellers, consultants, and vertical SaaS platforms that want to embed or resell your agent capabilities.

“What are the best ways to scale AI agents without hiring a large sales team?”

Use white-label partnerships, marketplaces, and developer APIs to embed your agent into platforms that already own the customer relationship.

Scaling an AI agent product is not just a technical challenge—it’s a business systems challenge. The winning teams are those that:

- Optimize infrastructure and contain costs

- Modularize agent design for vertical reuse

- Localize aggressively to capture emerging markets

- Embed into ecosystems rather than compete against them

The next generation of software companies will not just build agents—they will build the platforms that power agent-driven economies, across countries, verticals, and cloud stacks.

10. Conclusion & Strategic Checklist

Successfully monetizing AI agents is about more than deploying intelligent systems—it’s about designing a business model that aligns value delivery, cost structure, pricing logic, and go-to-market execution. Whether you’re launching a vertical SaaS product powered by agents or embedding task-based autonomy into an existing platform, the foundations of monetization remain the same: clarity, control, and continuous iteration.

Across this guide, we’ve broken down every major component of the agent monetization lifecycle—from defining the unit of value to deploying agents across verticals and geographies. The most successful agent-based businesses are those that:

- Price based on outcomes users care about—not just features or compute

- Maintain transparent cost controls tied to token, task, or execution metrics

- Offer flexible pricing tiers that allow customers to start small and scale up

- Embed their agents into ecosystems through partnerships, APIs, and marketplaces

- Build for trust and governance, especially in regulated markets and enterprise environments

10-Point Monetization Readiness Checklist

Use this checklist to assess whether your AI agent is ready for scalable monetization:

- Defined Unit of Value: Have you clearly identified what users are paying for (e.g., tokens, tasks, minutes, outcomes)?

- Cost Visibility & Margin Model: Can you map your backend costs (e.g., LLM inference, cloud compute) to user pricing tiers?

- Usage Tracking & Billing Infrastructure: Do you have real-time dashboards, overage alerts, and granular usage logs for billing accuracy?

- Outcome Alignment: Are you measuring success in terms of outcomes (e.g., leads booked, tickets resolved) rather than just actions?

- Transparent Pricing Architecture: Is your pricing simple to understand, with clear upgrade paths and value-based tiering?

- Scalable Infrastructure: Have you implemented token compression, model switching, and rate limiting to manage cost at scale?

- Vertical Reusability: Is your agent modular enough to be repurposed for other industries or use cases?

- Localization & Compliance Readiness: Are you ready to deploy in markets like India or the EU, with appropriate data handling and pricing?

- Ecosystem Integrations: Have you integrated your agent into major platforms (e.g., Salesforce, Slack, Zapier) or marketplaces?

- Governance & Responsible AI Practices: Do you have safeguards, audit trails, and override mechanisms in place to minimize risk?

“How do I know if my AI agent is ready for monetization?”

Start with this checklist. If you can clearly explain what your agent does, how it delivers value, what it costs to run, and how customers can pay for it—then you’re ready to monetize. If not, go back and refine your product’s pricing logic, infrastructure, and go-to-market alignment.

Back to You!

AI agents are more than a technological novelty—they’re the new operational layer of software. But intelligence alone isn’t enough. The winners in this space will be those who build viable, scalable, and accountable business models around autonomy.

If you’re building such systems, now is the time to move from experimentation to execution. With the right foundation, your agent can become not just a product—but a profit center.

Build Monetizable AI Agents with Aalpha

At Aalpha Information Systems, we specialize in AI development solutions, helping startups and enterprises turn AI agents into scalable, revenue-generating products. Whether you’re building a vertical SaaS agent, deploying enterprise-grade automation, or integrating agent capabilities into your existing platform, our team brings the technical depth and product expertise to get you from concept to commercialization.

From defining your unit of value and designing cost-efficient infrastructure, to setting up usage-based billing and ensuring global compliance—we partner with you at every stage of the monetization journey.

- Need help with architecture, pricing models, or go-to-market strategy?

- Want to launch an agent with enterprise-grade governance and observability?

- Looking to embed agents into your SaaS product with white-label or API-first strategies?

Consult Aalpha to build, launch, and monetize your AI agents—responsibly and profitably. Contact Us to get started or schedule a free consultation.