Artificial intelligence (AI) has shifted from being an experimental technology to a mission-critical component of modern business. From predictive analytics in finance to recommendation engines in eCommerce and diagnostic tools in healthcare, AI is embedded in day-to-day operations across industries. However, deploying an AI model into production is only the beginning of its journey. Much like physical machinery requires regular servicing, AI systems demand ongoing maintenance and support to remain reliable, accurate, and secure. Without consistent oversight, even the most advanced models degrade in performance, introducing risks that can undermine business outcomes, regulatory compliance, and customer trust.

Why AI Systems Require Ongoing Maintenance

AI models are not static; they are deeply dependent on data quality, system integration, and environmental stability. Over time, real-world data changes. For example, a fraud detection model in banking may become less effective as criminal tactics evolve, or a medical diagnostic model may struggle as new variants of diseases emerge. This phenomenon, known as “model drift,” gradually reduces predictive accuracy and reliability. Maintenance ensures that models are regularly monitored, retrained with fresh data, and recalibrated to reflect current conditions.

In addition, the infrastructure supporting AI systems must be continually optimized. Cloud environments evolve, APIs change, and hardware requirements shift as workloads increase. Maintenance ensures that the underlying architecture scales with demand, remains cost-effective, and is aligned with the latest security standards. Just as importantly, AI systems must remain transparent and ethical, requiring regular audits for bias, fairness, and compliance with regulations such as GDPR or HIPAA. Maintenance is therefore not only a technical necessity but also a business safeguard.

Difference Between AI Development and AI Maintenance

AI development and AI maintenance are often mistakenly viewed as interchangeable. Development focuses on creating, training, and deploying AI models. It involves data collection, feature engineering, model training, validation, and initial deployment into production environments. Once the system goes live, however, the challenges change dramatically. Development teams may celebrate the launch, but the true test begins afterward.

AI maintenance, in contrast, is about ensuring the system’s ongoing effectiveness. It involves continuous monitoring, updating data pipelines, patching infrastructure, fixing errors, managing performance at scale, and handling compliance requirements. For example, while a data science team might spend six months developing a machine learning model, it could require years of active support to keep the system relevant and accurate. In other words, development creates the engine, while maintenance keeps it running smoothly under real-world conditions. Organizations that ignore this distinction often over-invest in initial AI build-outs without preparing for the long-term operational costs.

Real-World Consequences of Neglecting AI Upkeep

The consequences of inadequate AI maintenance can be severe. Performance decay is the most visible effect. A customer service chatbot that once resolved 80% of queries accurately may decline to 50% or lower if left unmonitored, leading to frustrated users and higher support costs. In high-stakes industries such as healthcare, finance, or aviation, the risks escalate dramatically. A diagnostic model misclassifying patient scans due to outdated training data can result in misdiagnosis, delayed treatments, and legal liabilities. A trading algorithm that no longer reflects current market conditions could trigger significant financial losses within minutes.

Neglecting maintenance also exposes organizations to compliance failures. Regulations governing AI are tightening worldwide, with mandates for explainability, data governance, and fairness. An AI system that drifts from its intended design without oversight could violate these rules, resulting in fines or reputational damage. Security is another overlooked dimension. Without active support, AI systems may rely on outdated libraries, leaving them vulnerable to exploitation and cyberattacks. In industries handling sensitive data, such vulnerabilities can lead to breaches that compromise thousands—or even millions—of users.

Finally, failure to invest in AI maintenance undermines return on investment. AI projects are often costly, requiring significant time and resources to develop. Allowing systems to degrade after deployment wastes that investment, leaving organizations with ineffective technology and sunk costs. Forward-thinking businesses recognize that AI success is measured not at launch, but in its sustained ability to deliver value over months and years. Maintenance and support transform AI from a one-time project into a living system that continuously adapts to real-world challenges.

Understanding AI Maintenance and Support

Artificial intelligence has quickly evolved from an experimental tool to an enterprise-critical technology. However, unlike traditional software systems that operate within relatively fixed parameters, AI is inherently dynamic. It learns from data, adapts to environments, and interacts with unpredictable human behavior. This makes ongoing maintenance and support not just beneficial, but essential for sustaining reliable outcomes. To understand the role of AI maintenance, it is useful to examine its technical, operational, and ethical dimensions, trace the lifecycle of AI solutions after deployment, and compare it to conventional IT support models.

What AI Maintenance Means: Technical, Operational, and Ethical Aspects

Technical Aspects

At its core, AI maintenance is about preserving and enhancing the technical performance of models and systems. This includes monitoring algorithms for accuracy, detecting data drift, retraining models with updated datasets, and optimizing system resources. For instance, a natural language processing model may initially perform well but gradually misinterpret user intent as slang, terminology, or customer expectations change. Without retraining, accuracy erodes. Technical maintenance also involves patching dependencies, updating APIs, scaling infrastructure, and ensuring interoperability across platforms.

Operational Aspects

AI systems rarely operate in isolation; they are embedded within workflows, business processes, and user-facing applications. Operational maintenance ensures these systems continue to integrate seamlessly into day-to-day activities. This includes monitoring latency, minimizing downtime, and optimizing response times to meet service-level agreements (SLAs). For example, a recommendation engine in eCommerce must provide suggestions within milliseconds—delays can directly reduce conversion rates. Operational support also includes setting up monitoring dashboards, incident response protocols, and continuous integration/continuous deployment (CI/CD) pipelines that allow models to be updated efficiently without interrupting services.
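To make the SLA check concrete, here is a minimal Python sketch. It computes a 95th-percentile (p95) latency from recorded request timings and compares it with a latency budget. A high percentile is used rather than the mean because averages hide slow tail requests. The choice of p95, the sample timings, and the 50 ms budget are assumptions for illustration. Real SLAs define their own percentile and target.

```python
import statistics


def p95_latency_ms(samples_ms):
    """Return the 95th-percentile latency of a batch of request timings (milliseconds)."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    # method="inclusive" treats the batch as the full population of observed requests.
    return statistics.quantiles(samples_ms, n=100, method="inclusive")[94]


def meets_sla(samples_ms, budget_ms):
    """True when the p95 latency stays within the latency budget."""
    return p95_latency_ms(samples_ms) <= budget_ms


if __name__ == "__main__":
    # Hypothetical timings from a recommendation endpoint.
    timings = [12.0, 15.5, 18.2, 22.9, 30.1, 41.7, 48.3, 55.0, 61.2, 120.4]
    print(f"p95 = {p95_latency_ms(timings):.1f} ms, within 50 ms budget: {meets_sla(timings, 50.0)}")
```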

Ethical Aspects

AI maintenance extends beyond performance and uptime to encompass ethical responsibilities. Models must be continuously audited for bias, fairness, and compliance with evolving regulations such as GDPR, HIPAA, and the EU AI Act. Consider a hiring algorithm that inadvertently favors one demographic group due to skewed historical data. Without active ethical oversight, this bias could persist, exposing organizations to legal liabilities and reputational harm. Ethical maintenance also includes explainability—ensuring decisions remain transparent and justifiable to regulators, stakeholders, and end users. This is especially critical as public scrutiny of AI systems grows.

The Lifecycle of AI Solutions After Deployment

AI solutions are often mistakenly viewed as one-time deliverables, but in reality they operate within a lifecycle that continues long after deployment. This post-deployment lifecycle typically includes the following stages:

- Initial Deployment: The AI system is integrated into production environments. At this stage, it is expected to meet predefined benchmarks and business objectives.

- Performance Monitoring: Continuous monitoring begins immediately after deployment. Metrics such as accuracy, precision, recall, latency, and user satisfaction are tracked to identify deviations from expected behavior.

- Model Drift Detection: Over time, data distributions shift. This could be due to seasonality, emerging trends, or external shocks such as a pandemic. Drift detection mechanisms signal when a model’s predictions no longer align with reality.

- Retraining and Updating: Once drift is detected, retraining becomes necessary. Updated datasets are fed into the model, and performance is revalidated. Sometimes new features are engineered, or entirely new architectures are adopted.

- Infrastructure Scaling: As adoption grows, the system must scale to handle increased demand. This requires adjustments in cloud resources, storage, and load-balancing mechanisms.

- Governance and Compliance Checks: Throughout its lifecycle, the AI system is subjected to audits for transparency, fairness, and compliance with industry-specific regulations. Documentation and version control are maintained for accountability.

- Retirement or Replacement: Eventually, some AI systems become obsolete. They may be replaced with more advanced models, integrated into broader platforms, or phased out entirely. Maintenance includes planning for graceful retirement while ensuring continuity of service.

This lifecycle emphasizes that AI is not a static product but a continuously evolving service requiring active management.
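Drift detection, one of the lifecycle stages above, is often implemented by comparing a feature's distribution in production with its distribution in the training data. One widely used statistic is the Population Stability Index (PSI):

PSI = Σ (a_i − e_i) · ln(a_i / e_i)

In this formula, e_i is the share of training values in bin i and a_i is the share of production values in the same bin.

The sketch below is a minimal standard-library version that uses equal-width bins over the training range. The bin count, the epsilon for empty bins, and the 0.25 alert level are assumptions. Practitioners often quote 0.25 as a rule of thumb for "significant" shift, but it is a convention, not a standard. Dedicated monitoring tools usually offer several drift tests beyond PSI.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a production sample.

    Bin edges are equal-width over the reference (training) range; production values
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant feature

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    training = [i / 100 for i in range(1000)]   # hypothetical feature values at training time
    production = [x + 3.0 for x in training]    # the same feature after a shift
    score = psi(training, production)
    print(f"PSI = {score:.3f}", "-> investigate / retrain" if score > 0.25 else "-> stable")
```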

Key Differences Between Traditional IT Support and AI Support

At first glance, AI maintenance may appear similar to IT support. Both involve monitoring systems, addressing errors, and ensuring uptime. However, there are fundamental differences that set AI apart.

- Data Dependence vs. Code Dependence: Traditional IT systems primarily rely on deterministic code. Once bugs are fixed, the system often remains stable unless new features are added. AI systems, by contrast, are probabilistic and data-driven. Their performance is directly tied to the quality and relevance of training data. As data changes, so too must the model.

- Dynamic Performance vs. Static Stability: Conventional IT systems tend to degrade slowly, often due to hardware failures or unpatched vulnerabilities. AI systems can degrade much faster because of model drift. A predictive model might lose significant accuracy in weeks if exposed to rapidly shifting environments.

- Continuous Learning vs. One-Time Deployment: In IT, software updates are episodic, released in versions or patches. AI requires continuous retraining, testing, and redeployment to remain effective. Support teams must be equipped with MLOps pipelines to automate this process.

- Ethical Oversight vs. Technical Oversight: Traditional IT support emphasizes uptime, security, and performance. AI support must also address fairness, transparency, and accountability. The potential for bias, discriminatory outputs, or opaque decision-making adds layers of complexity.

- Multidisciplinary Teams vs. IT Specialists: IT support is typically managed by infrastructure and security teams. AI support demands collaboration among data scientists, machine learning engineers, ethicists, and domain experts. This multidisciplinary nature reflects the diverse challenges of maintaining AI responsibly.

Organizations that treat AI like conventional IT systems often underestimate the resources required for upkeep. They may underinvest in monitoring tools, neglect retraining cycles, or fail to establish governance frameworks. This leads to performance decay, compliance risks, and eroded trust among users. By recognizing the unique demands of AI support, businesses can allocate appropriate budgets, build specialized teams, and design processes that sustain AI performance over the long term.

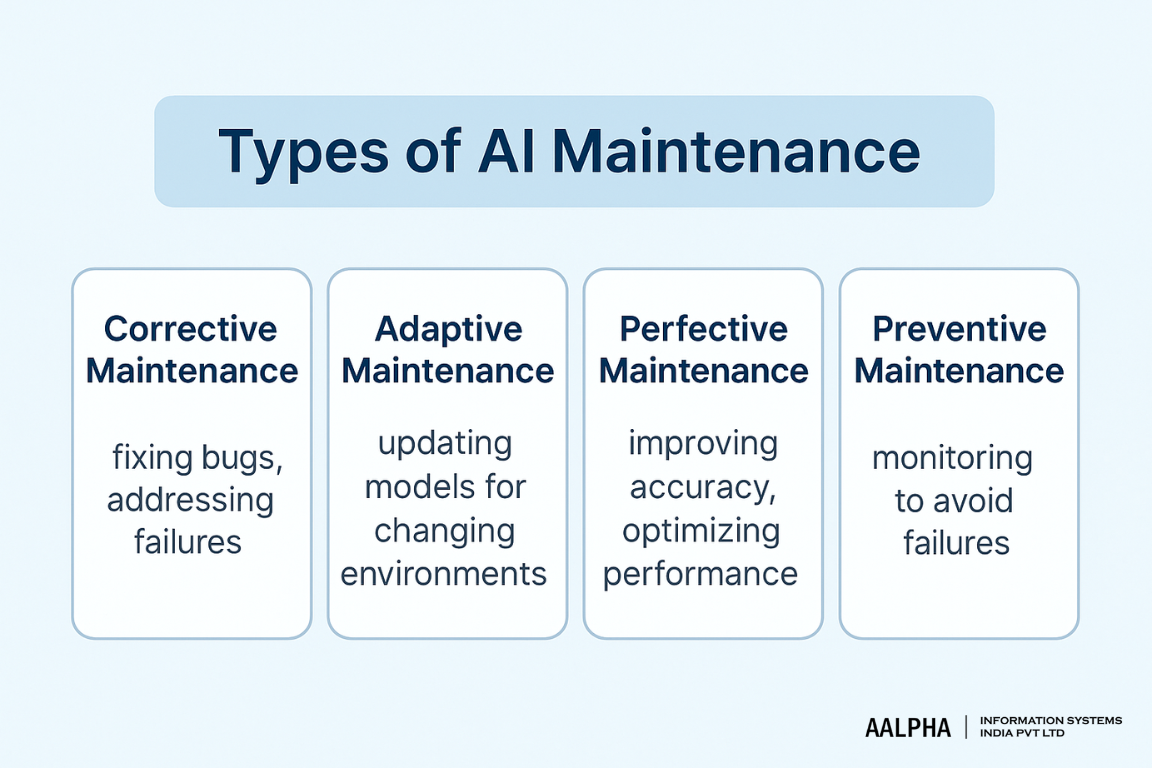

Types of AI Maintenance

AI systems, much like traditional software, require different categories of maintenance to remain reliable. However, because AI models operate probabilistically and depend heavily on data, the scope of maintenance is broader and more nuanced. Maintenance activities are generally grouped into four categories: corrective, adaptive, perfective, and preventive. Each plays a distinct role in ensuring long-term performance, accuracy, and compliance.

Corrective Maintenance – Fixing Bugs and Addressing Failures

Corrective maintenance focuses on identifying and resolving issues that impair the functionality of AI systems. These issues may be technical bugs in the codebase, misconfigurations in infrastructure, or failures in data pipelines that feed the model.

Examples in practice:

- A chatbot deployed for customer service may suddenly stop responding to certain queries due to an error in its natural language processing pipeline. Corrective maintenance would involve debugging the model, repairing the pipeline, and restoring service.

- A computer vision model used in manufacturing may misclassify defective products after an API update introduces unexpected changes. Engineers must trace the issue back to the code and deploy a patch.

Corrective maintenance often requires incident response protocols similar to those in traditional IT environments, but with additional layers of complexity. AI bugs are not always deterministic; sometimes the model produces inconsistent outputs due to data drift or unanticipated edge cases. This requires diagnostic techniques that go beyond code inspection, including error analysis of the training data, validation checks, and root-cause investigation across the AI lifecycle.

While corrective maintenance is reactive by nature, well-structured logging and monitoring systems allow organizations to detect failures faster, reducing downtime. The goal is to minimize disruption and restore system performance to acceptable levels as quickly as possible.
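Structured logging makes this kind of fast failure detection straightforward to automate. Below is a minimal Python sketch of a sliding-window error-rate alert over JSON log lines. Several details are illustrative assumptions rather than a standard log schema: the `status` field, its `"ok"`/`"error"` values, the window size, and the threshold.

```python
import json
from collections import deque


class ErrorRateMonitor:
    """Sliding-window error rate over structured inference logs.

    Assumes each log line is a JSON object with a hypothetical "status" field.
    """

    def __init__(self, window=100, threshold=0.05):
        self.recent = deque(maxlen=window)  # True for a failed request
        self.threshold = threshold

    def ingest(self, log_line):
        """Parse one log line and return True if an incident should be raised."""
        record = json.loads(log_line)
        self.recent.append(record.get("status") == "error")
        return self.should_alert()

    def error_rate(self):
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def should_alert(self):
        # Wait until the window is full so a single early failure does not page anyone.
        window_full = len(self.recent) == self.recent.maxlen
        return window_full and self.error_rate() > self.threshold


if __name__ == "__main__":
    monitor = ErrorRateMonitor(window=10, threshold=0.2)
    lines = ['{"status": "ok"}'] * 10 + ['{"status": "error"}'] * 3
    for line in lines:
        if monitor.ingest(line):
            print(f"incident: error rate {monitor.error_rate():.0%} over last {monitor.recent.maxlen} requests")
```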

Adaptive Maintenance – Updating Models for Changing Environments

Adaptive maintenance ensures AI systems continue to perform effectively in evolving environments. Unlike static software, AI models can lose accuracy over time when exposed to new data distributions—a phenomenon known as model drift. Adaptive maintenance addresses this by updating, retraining, or fine-tuning models.

Key drivers of adaptive maintenance include:

- Changing user behavior: For instance, eCommerce recommendation engines must adapt to shifting consumer trends, seasonal shopping patterns, or emerging product categories.

- External shocks: A financial fraud detection system may become ineffective when fraudsters adopt new techniques. Adaptive updates ensure the model recognizes new fraudulent patterns.

- Regulatory changes: New laws, such as stricter data privacy requirements, may necessitate altering how AI systems process and store personal data.

Practical example: During the COVID-19 pandemic, many predictive models in healthcare and logistics quickly became obsolete because the underlying assumptions no longer reflected reality. Adaptive maintenance required retraining models with updated datasets to account for changes in patient care, supply chain demand, and workforce availability.

Adaptive maintenance highlights the importance of MLOps (Machine Learning Operations) practices. Automated retraining pipelines, data versioning, and validation frameworks allow organizations to update models without extensive manual intervention. This not only preserves accuracy but also reduces operational risks from outdated predictions.
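The validation step of such a pipeline can be expressed as a promotion gate. A retrained candidate replaces the serving model only if it does measurably better on held-out data. The Python sketch below assumes models are plain callables. Its "training" step is a toy one-feature threshold classifier, used purely for illustration. The `min_gain` margin is an assumed policy, not a standard value.

```python
def accuracy(model, holdout):
    """Share of (x, y) pairs in the holdout set that the model predicts correctly."""
    return sum(model(x) == y for x, y in holdout) / len(holdout)


def fit_threshold(train):
    """Toy training step: pick the cut-off on one feature that best separates labels 0 and 1."""
    candidates = sorted({x for x, _ in train})
    best = max(candidates, key=lambda t: sum(int(x >= t) == y for x, y in train))
    return lambda x: int(x >= best)


def promote_if_better(current, candidate, holdout, min_gain=0.01):
    """Validation gate: return (model_to_serve, its_holdout_accuracy)."""
    current_acc = accuracy(current, holdout)
    candidate_acc = accuracy(candidate, holdout)
    if candidate_acc >= current_acc + min_gain:
        return candidate, candidate_acc
    return current, current_acc  # keep the existing model if the gain is too small


if __name__ == "__main__":
    old_model = lambda x: int(x >= 5)                          # trained before behavior shifted
    fresh = [(x, int(x >= 7)) for x in range(15)]              # recent labeled data
    holdout = [(x + 0.5, int(x + 0.5 >= 7)) for x in range(15)]
    serving, acc = promote_if_better(old_model, fit_threshold(fresh), holdout)
    print("promoted" if serving is not old_model else "kept old model", f"(holdout accuracy {acc:.2f})")
```

Keeping the gate separate from the training step means the same check can guard any retraining method, whether it is triggered by a schedule or by a drift alert.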

Perfective Maintenance – Improving Accuracy and Optimizing Performance

Perfective maintenance goes beyond fixing problems or adapting to changes. It aims to enhance system performance, accuracy, and efficiency over time. The focus here is on refining the AI system to better meet user needs and business objectives.

Common activities in perfective maintenance include:

- Improving model accuracy: Adding new features, refining training datasets, or experimenting with advanced architectures to increase predictive performance.

- Enhancing user experience: Adjusting response styles in chatbots, improving personalization in recommendation engines, or reducing latency in real-time systems.

- Optimizing infrastructure: Reducing computational costs by compressing models (e.g., through pruning or quantization) or by adopting more efficient deployment frameworks.

Example in practice:

A healthcare provider may initially deploy a diagnostic imaging model with 85% accuracy. Through perfective maintenance, engineers may fine-tune the model with additional labeled data, raising accuracy to 92% while simultaneously reducing false positives. Similarly, an enterprise might optimize a large language model to run more efficiently on cloud infrastructure, reducing inference costs by 30%.
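Quantization, listed above as a compression technique, can be illustrated with its simplest variant: symmetric per-tensor int8 quantization. Each float weight is stored as an 8-bit integer, and the whole tensor shares one scale factor. This pure-Python sketch shows only the arithmetic, and the weights are hypothetical. Frameworks such as PyTorch or TensorFlow Lite add calibration, per-channel scales, and optimized kernels. Actual savings depend on the model and hardware.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ≈ scale * q with q an integer in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.88, -0.31]   # hypothetical layer weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    # float32 uses 4 bytes per weight; int8 uses 1 byte (plus one shared scale).
    print(f"q={q}, scale={scale:.5f}, max abs error={worst:.5f}")
```

Rounding keeps each weight within half a scale step of its original value. That bounded error is what makes the roughly fourfold storage reduction acceptable for many models.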

Perfective maintenance is typically proactive and tied to long-term strategic goals. Unlike corrective maintenance, which is about restoring function, or adaptive maintenance, which responds to external changes, perfective maintenance represents continuous improvement. It ensures AI systems not only remain functional but also deliver growing value as technology and user expectations evolve.

Preventive Maintenance – Monitoring to Avoid Failures

Preventive maintenance focuses on detecting potential problems before they cause system failures. In AI, this involves setting up monitoring systems, implementing alert mechanisms, and analyzing logs to identify anomalies early.

Preventive activities include:

- Drift monitoring: Continuously tracking model inputs and outputs to detect when distributions deviate from training data.

- Bias detection: Running regular audits to ensure outputs remain fair and free from unintended discrimination.

- Security monitoring: Identifying vulnerabilities in APIs, data pipelines, or third-party libraries before they are exploited.

- Stress testing: Simulating high-traffic scenarios to verify system resilience under heavy loads.

Example in practice:

A banking institution may use drift detection tools to identify when a credit scoring model begins producing risk assessments inconsistent with historical benchmarks. Early intervention prevents the model from misclassifying applicants, avoiding reputational harm and regulatory breaches. In another case, preventive security checks might reveal vulnerabilities in an open-source AI library, allowing engineers to patch systems before attackers exploit them.
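A basic version of this preventive security check compares installed package versions against published advisories. The Python sketch below uses the standard `importlib.metadata` module to list installed distributions. The advisory table and its version numbers are hypothetical placeholders, and the version parser is deliberately naive: it ignores pre-release tags. In practice, teams usually rely on a dedicated scanner such as pip-audit, backed by a real vulnerability database.

```python
import re
from importlib import metadata


def parse_version(version):
    """Naive numeric parse, e.g. '2.31.0' -> (2, 31, 0); stops at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if not match:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def installed_versions():
    """Map lower-cased distribution name -> installed version for the current environment."""
    found = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:
            found[name.lower()] = dist.version
    return found


def find_vulnerable(installed, advisories):
    """Return packages whose installed version is older than the first fixed version."""
    return sorted(
        name
        for name, first_fixed in advisories.items()
        if name in installed and parse_version(installed[name]) < parse_version(first_fixed)
    )


if __name__ == "__main__":
    # Hypothetical advisory data: package name -> first version containing the fix.
    advisories = {"example-ml-lib": "2.4.1", "example-serving": "1.0.0"}
    print(find_vulnerable(installed_versions(), advisories))
```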

Preventive maintenance is increasingly automated through specialized monitoring platforms such as Arize AI, Fiddler AI, or Evidently AI. These tools provide dashboards, alerts, and diagnostics to continuously track performance metrics, reducing the reliance on manual oversight. By prioritizing prevention, organizations avoid costly downtime, compliance issues, and the erosion of trust among users.

Interdependence of the Four Maintenance Types

While each type of maintenance has distinct objectives, they are interdependent in practice. Corrective maintenance often reveals opportunities for perfective improvements. Adaptive maintenance is closely linked with preventive monitoring, as drift detection triggers retraining cycles. Organizations that silo these categories risk inefficiency; the most effective AI support strategies integrate all four into a cohesive framework.

For example, a self-driving car system may require corrective maintenance to address sensor failures, adaptive updates to handle new traffic regulations, perfective tuning to improve pedestrian recognition accuracy, and preventive monitoring to anticipate system anomalies. Together, these maintenance approaches ensure not just safety but also public confidence in the technology.

Categorizing AI maintenance into corrective, adaptive, perfective, and preventive allows organizations to plan resources more effectively. It clarifies priorities: urgent failures demand corrective action, while long-term improvement projects fall under perfective maintenance. Preventive monitoring helps reduce the frequency of corrective incidents, and adaptive retraining ensures relevance in dynamic environments. By structuring maintenance this way, businesses can balance immediate firefighting with sustainable growth, ensuring their AI investments continue delivering value over the long term.

Core Components of AI Support Services

AI maintenance is not a single task but a collection of interdependent practices that ensure systems remain accurate, secure, and scalable long after deployment. While the previous section outlined the categories of maintenance, this section breaks down the essential components that every AI support service must address. These include monitoring models, maintaining data pipelines, managing infrastructure, safeguarding compliance and privacy, and incorporating human oversight into feedback loops. Together, they form the backbone of a sustainable AI operations strategy.

Model Monitoring and Performance Tracking

The foundation of AI support lies in continuous monitoring of models to ensure they perform as intended in real-world settings. Unlike traditional software, AI systems do not behave deterministically; their performance depends on data quality, evolving user behavior, and external factors that may shift over time. Without proper monitoring, models risk drifting into irrelevance or even harmful decision-making.

Key aspects of model monitoring include:

- Accuracy and precision tracking: Regularly evaluating outputs against benchmarks to detect degradation.

- Drift detection: Identifying changes in input distributions or output patterns that signal misalignment with training data.

- Latency and throughput analysis: Ensuring predictions are delivered quickly enough to support business processes.

- Explainability monitoring: Using interpretability tools to confirm that model reasoning aligns with expectations and is defensible under scrutiny.

Example: A fraud detection model may initially flag suspicious transactions with 95% accuracy. Over time, new fraud techniques emerge, lowering accuracy to 80%. Continuous monitoring allows detection of this decline, triggering retraining before significant financial losses occur.
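A minimal version of this monitoring loop keeps a rolling window of predictions whose true outcomes are now known. It raises a retraining signal when accuracy falls too far below the launch baseline. In this Python sketch the window size and tolerance are assumptions. For an imbalanced problem like fraud, teams would usually track precision and recall rather than raw accuracy. Confirmed fraud labels also tend to arrive with a delay.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Compare recent accuracy with a baseline as ground-truth labels arrive."""

    def __init__(self, baseline, tolerance=0.05, window=500):
        self.baseline = baseline        # accuracy measured at launch
        self.tolerance = tolerance      # allowed drop before signaling retraining
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, label):
        self.outcomes.append(prediction == label)

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_retraining(self):
        # Only judge once the window is full, so early noise does not trigger retraining.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance


if __name__ == "__main__":
    monitor = RollingAccuracyMonitor(baseline=0.95, tolerance=0.05, window=100)
    # Simulated period after new fraud tactics appear: 80 of 100 predictions correct.
    for i in range(100):
        monitor.record(prediction=1, label=1 if i < 80 else 0)
    print(monitor.accuracy(), monitor.needs_retraining())
```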

Modern monitoring platforms such as Arize AI and Evidently AI automate much of this process, offering dashboards and alerts. These tools make it easier to track multiple models across environments, ensuring that AI systems remain accountable and dependable.

Data Pipeline Maintenance

AI systems are only as reliable as the data that fuels them. A well-designed model can quickly lose relevance if its underlying data pipelines are poorly maintained. Data pipeline maintenance focuses on ensuring that raw inputs are collected, cleaned, transformed, and delivered consistently to models in production.

Core tasks include:

- Data quality checks: Detecting missing values, anomalies, or corrupted records before they reach the model.

- ETL (extract, transform, load) process reliability: Ensuring seamless integration of multiple data sources without duplication or conflict.

- Schema validation: Tracking changes in data formats or sources to prevent pipeline failures.

- Versioning and lineage tracking: Keeping detailed records of where data originates, how it has been modified, and which models it supports.

Example: In healthcare, an AI diagnostic tool may rely on imaging data from multiple hospitals. If one source changes its file format or alters metadata conventions, the pipeline could break, resulting in incomplete datasets. Maintenance teams must detect and adapt to these changes before they compromise the model’s reliability.
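A lightweight guard against this failure mode is to validate every incoming record against the schema the model expects. Anything that does not match is quarantined instead of reaching the model. The field names below are hypothetical, chosen to echo the imaging example. Dedicated validation libraries offer richer checks, but the core idea fits in a few lines of Python.

```python
# Hypothetical schema for per-image metadata arriving from partner hospitals.
EXPECTED_SCHEMA = {"patient_id": str, "modality": str, "pixel_spacing_mm": float}


def validate_record(record, schema=EXPECTED_SCHEMA):
    """Return a list of problems; an empty list means the record matches the schema."""
    problems = []
    for field, expected_type in schema.items():
        if record.get(field) is None:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {type(record[field]).__name__}"
            )
    for field in sorted(record.keys() - schema.keys()):
        problems.append(f"unexpected field: {field}")
    return problems


def split_batch(records):
    """Separate clean records from ones that should be quarantined for review."""
    valid, quarantined = [], []
    for record in records:
        (quarantined if validate_record(record) else valid).append(record)
    return valid, quarantined


if __name__ == "__main__":
    good = {"patient_id": "p-001", "modality": "CT", "pixel_spacing_mm": 0.7}
    renamed = {"patient_id": "p-002", "modality": "CT", "pixel_spacing": "0.7"}  # source changed its format
    print(validate_record(renamed))
```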

Orchestrators such as Apache Airflow and Prefect schedule and run data pipelines, dbt manages SQL transformations inside the data warehouse, and monitoring solutions can automatically validate incoming data. Well-maintained pipelines not only improve accuracy but also reduce the cost and time associated with retraining models.
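Versioning and lineage tracking can start simply. For each training dataset, record a content hash together with where the data came from and which model it fed. The Python sketch below illustrates the idea using only the standard library (`hashlib`, `json`, `datetime`). The field names in the lineage entry and the sample values are hypothetical. Purpose-built tools provide far more complete lineage graphs.

```python
import hashlib
import json
from datetime import datetime, timezone


def dataset_fingerprint(records):
    """SHA-256 over the records in order; key order inside each record does not matter."""
    digest = hashlib.sha256()
    for record in records:
        digest.update(json.dumps(record, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")  # separator so record boundaries affect the hash
    return digest.hexdigest()


def lineage_entry(records, source, transform, model_version):
    """One lineage record: which data, from where, processed how, feeding which model."""
    return {
        "dataset_sha256": dataset_fingerprint(records),
        "source": source,
        "transform": transform,
        "model_version": model_version,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    rows = [{"id": 1, "value": 2.5}, {"id": 2, "value": 3.0}]  # hypothetical training rows
    print(lineage_entry(rows, source="hospital-a-export", transform="dedupe_v2", model_version="diag-v3"))
```

If the data a model was trained on is ever questioned, recomputing the fingerprint shows whether the stored dataset is byte-for-byte the one recorded at training time.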

Infrastructure Management (Cloud, On-Prem, Hybrid)

The infrastructure supporting AI systems determines their scalability, efficiency, and cost-effectiveness. Unlike traditional applications, AI workloads are computationally intensive, requiring specialized hardware such as GPUs or TPUs, large-scale storage solutions, and orchestration frameworks. Infrastructure management ensures these resources are provisioned, optimized, and secure.

Infrastructure considerations include:

- Cloud-based AI: Offers scalability and flexibility, allowing organizations to quickly spin up resources as workloads expand. Cloud-native MLOps platforms simplify deployment but require careful cost management.

- On-premises AI: Preferred by organizations with strict data sovereignty requirements, such as those in healthcare or defense. Maintenance here focuses on hardware lifecycle management, cooling, and physical security.

- Hybrid approaches: Combine the scalability of cloud with the control of on-prem systems. Maintenance requires balancing workloads across environments while ensuring data flows securely between them.

Example: A global eCommerce platform may run recommendation models in the cloud for scalability but maintain sensitive customer data on-premises for compliance. Infrastructure management ensures seamless communication between these environments, with redundancy and failover mechanisms to avoid downtime.

Docker (for packaging models and their dependencies into containers) and Kubernetes (for orchestrating those containers) remain the backbone of containerized AI deployment, while cloud providers like AWS, Google Cloud, and Azure offer AI-specific services. Maintenance teams must continuously optimize costs, prevent resource bottlenecks, and ensure high availability.

Security, Compliance, and Privacy Management

AI systems operate at the intersection of technology, regulation, and ethics. Maintenance must include a robust framework for securing systems, ensuring compliance with legal requirements, and protecting user privacy. Failure in any of these areas can result in not only technical failures but also severe legal and reputational consequences.

Security maintenance tasks include:

- Patching vulnerabilities in AI libraries and dependencies.

- Protecting data pipelines against unauthorized access or tampering.

- Implementing encryption for sensitive data in transit and at rest.

- Monitoring for adversarial attacks designed to manipulate AI outputs.

Compliance and privacy maintenance tasks include:

- Auditing datasets for alignment with regulations like GDPR, HIPAA, or the EU AI Act.

- Documenting model decisions for explainability requirements.

- Managing consent frameworks for user data usage.

- Updating policies as new laws emerge across different jurisdictions.

Example: A financial institution running AI-driven credit scoring must ensure its models do not unintentionally discriminate against certain demographic groups. Compliance checks involve regular bias testing, transparency reports, and governance documentation.
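One simple bias test that a support team can automate compares approval rates across groups. The Python sketch below computes the ratio of the lowest group approval rate to the highest. The 0.8 cut-off in the usage line comes from the "four-fifths rule" in US employment-selection guidelines. Applying it to credit decisions is an assumption here: it serves as a screening heuristic, not a legal standard. A full fairness review would examine several metrics. The decision data is hypothetical.

```python
def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals, approvals = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + int(bool(approved))
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical monthly decisions for two applicant groups.
    decisions = [("A", 1)] * 50 + [("A", 0)] * 50 + [("B", 1)] * 30 + [("B", 0)] * 70
    ratio = disparate_impact_ratio(decisions)
    print(f"ratio = {ratio:.2f}", "-> flag for review" if ratio < 0.8 else "-> within heuristic")
```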

Support teams often employ tools such as IBM Watson OpenScale or Fiddler AI to provide bias detection and explainability. Maintenance in this area is not optional: regulators increasingly mandate it, and consumers expect ethical stewardship of their data.

Human-in-the-Loop Systems and Feedback Cycles

Despite advances in automation, humans remain essential in maintaining AI systems. Human-in-the-loop (HITL) systems integrate expert oversight into AI workflows, enabling organizations to validate outputs, retrain models effectively, and ensure ethical use.

Functions of human-in-the-loop systems include:

- Error correction: Subject matter experts review AI errors to identify patterns and feed corrections back into training datasets.

- Bias detection: Humans can flag biased outputs that automated monitoring may overlook.

- Complex decision-making: AI systems often struggle with ambiguous cases. Humans provide judgment where data-driven models fall short.

- Continuous feedback cycles: End-users provide feedback on AI outputs, which can be used to retrain models and improve relevance.

Example: In medical imaging, an AI system may flag potential tumors, but radiologists review the results to confirm diagnoses. Their feedback not only improves patient safety but also creates labeled data for retraining the model, enhancing future performance.
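In the imaging example, every result is reviewed. Many other human-in-the-loop deployments instead route only low-confidence predictions to people. The Python sketch below shows that routing pattern. Confident predictions are accepted automatically, and the rest wait for an expert. Each expert verdict is stored as a labeled example for the next retraining cycle. The threshold value and data layout are assumptions. In a clinical setting, the acceptance policy would be set by the care team and applicable regulation, not by a default parameter.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewQueue:
    """Route low-confidence predictions to human reviewers and keep their verdicts."""

    threshold: float = 0.9                       # assumed auto-accept confidence level
    pending: list = field(default_factory=list)  # (item_id, model_prediction) awaiting review
    labeled: list = field(default_factory=list)  # expert verdicts, reusable as training data

    def route(self, item_id, prediction, confidence):
        """Return "auto" for confident predictions, otherwise queue the item for review."""
        if confidence >= self.threshold:
            return "auto"
        self.pending.append((item_id, prediction))
        return "review"

    def submit_review(self, item_id, human_label):
        """Store the expert's label and note whether it corrected the model."""
        for i, (pending_id, prediction) in enumerate(self.pending):
            if pending_id == item_id:
                del self.pending[i]
                self.labeled.append(
                    {"item_id": item_id, "model": prediction, "label": human_label,
                     "corrected": prediction != human_label}
                )
                return
        raise KeyError(item_id)


if __name__ == "__main__":
    queue = ReviewQueue(threshold=0.9)
    print(queue.route("scan-1", "tumor", 0.97), queue.route("scan-2", "benign", 0.61))
    queue.submit_review("scan-2", "tumor")  # the expert overrides the model
    print(queue.labeled)
```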

HITL approaches also strengthen trust. When users know humans are supervising and refining AI systems, they are more likely to adopt them confidently. This balance of automation and oversight is critical to achieving both scalability and accountability.

Integration of Core Components

Each of these components—monitoring, pipeline maintenance, infrastructure, compliance, and human oversight—interacts with the others. A robust data pipeline feeds accurate information into models; monitoring detects when performance drops; infrastructure scales to handle workload; compliance frameworks ensure decisions remain ethical; and human reviewers provide judgment where algorithms falter. Ignoring any one of these pillars risks undermining the entire AI ecosystem.

For instance, a retail recommendation engine may have well-optimized infrastructure but fail if data pipelines introduce corrupted inputs. Similarly, a healthcare diagnostic model with perfect accuracy may still be unusable if compliance standards are not met. By treating these core components as interdependent rather than siloed, organizations can establish AI support systems that are resilient, adaptable, and trusted.

Challenges in AI Maintenance

Maintaining AI systems is not a simple extension of IT support; it introduces new complexities driven by the probabilistic, dynamic, and data-dependent nature of machine learning. Even with strong monitoring and infrastructure, organizations encounter persistent obstacles that can reduce effectiveness, inflate costs, or introduce risk. The most common challenges include model drift and data decay, bias and fairness concerns, scaling infrastructure costs, integration with legacy systems, and keeping up with regulatory updates. Understanding these challenges is essential for businesses to allocate resources wisely and design resilient AI support strategies.

Model Drift and Data Decay

Model drift refers to the gradual decline in performance as the data a model sees in production, or the relationship between that data and the outcomes it predicts, changes over time. Unlike software bugs, which remain static until fixed, model drift emerges because real-world data evolves. A customer support chatbot, for example, may initially interpret queries with high accuracy but lose effectiveness as new slang, product names, or service categories emerge. Similarly, a demand forecasting model in retail may mispredict sales when consumer behavior shifts due to global events, new competitors, or economic cycles.

Types of drift include:

- Data drift: Input data distributions change. For example, an insurance model may see different customer demographics over time.

- Concept drift: The relationship between inputs and outputs changes. For instance, fraud patterns evolve as criminals adopt new tactics.

- Label drift: The distribution of the target labels shifts, such as a change in the share of positive diagnoses in healthcare or the appearance of new categories in sentiment analysis.

Data decay compounds this problem. Data pipelines that once delivered high-quality inputs may degrade as sources change or become incomplete. Missing records, altered formats, or reduced quality of sensor readings can directly affect predictions.

Consequence: If left unchecked, drift and decay lead to declining accuracy, eroded trust among users, and potentially costly decisions. For high-stakes industries like finance or healthcare, the consequences may include regulatory violations or patient harm.

-

Bias and Fairness Issues Over Time

AI systems inherit biases from training data, and these biases can intensify if not actively monitored. Even when initially well-calibrated, models can become less fair as populations, behaviors, and data contexts change. A hiring algorithm that was balanced at launch may become biased if future applicant pools skew toward one demographic, reinforcing historical imbalances. Similarly, a credit scoring system might inadvertently penalize minority groups if economic shifts disproportionately affect their data representation.

Key challenges include:

- Detecting hidden bias: Bias is not always visible in accuracy metrics. Models may perform well overall but fail disproportionately for specific subgroups.

- Maintaining fairness over time: As data distributions change, fairness achieved at launch may erode.

- Balancing accuracy and fairness: Improving fairness sometimes reduces predictive accuracy, forcing trade-offs between business goals and ethical responsibility.
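Because hidden bias does not appear in aggregate accuracy, fairness monitoring usually breaks metrics down by subgroup. The sketch below computes per-group accuracy and the largest gap between groups. The group labels, sample predictions, and 0.05 tolerance are hypothetical, and accuracy is only one fairness-relevant metric; per-group error rates or selection rates are common alternatives.

```python
from collections import defaultdict


def accuracy_by_group(y_true: list, y_pred: list, groups: list) -> dict:
    """Return {group: accuracy} for parallel lists of labels, predictions, and group tags."""
    correct: dict = defaultdict(int)
    total: dict = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group: dict) -> float:
    """Largest accuracy difference between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation data for two subgroups, A and B.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(f"overall accuracy: {overall:.2f}")  # 0.70, which looks acceptable on its own
print(per_group)                           # {'A': 1.0, 'B': 0.4}
if max_accuracy_gap(per_group) > 0.05:     # hypothetical tolerance
    print("Fairness alert: accuracy differs materially across groups")
```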

Example: In healthcare, diagnostic AI may show strong performance overall but underperform in diagnosing conditions for underrepresented populations. Without fairness monitoring, these disparities go unnoticed, resulting in unequal access to quality care.

Consequence: Bias undermines trust and exposes organizations to reputational damage, lawsuits, or regulatory penalties. As AI governance frameworks spread, fairness audits are increasingly required rather than optional in regulated sectors and jurisdictions.

-

Scaling Infrastructure Costs

AI workloads are computationally expensive. Training and retraining large models demand powerful GPUs, storage capacity, and bandwidth, while inference at scale requires low-latency, high-availability infrastructure. Maintenance must address not only performance but also cost-effectiveness.

Cost drivers include:

- Retraining frequency: Frequent retraining improves accuracy but drives up compute and storage expenses.

- Model size: Large models, such as transformer-based architectures, require vast resources for both training and deployment.

- Scaling demand: As more users rely on AI systems, workloads scale, necessitating larger clusters or more cloud resources.

- Cloud pricing complexity: Usage-based pricing in cloud environments can lead to unpredictable bills, particularly if workloads spike unexpectedly.

Example: An eCommerce platform deploying personalized recommendations may initially manage with modest infrastructure. As user adoption grows, requests multiply from thousands to millions per day, significantly raising inference costs. Retraining models weekly to capture new shopping trends further inflates resource consumption.
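A rough cost model makes this trade-off concrete. The sketch below estimates monthly spend from request volume and retraining frequency; every price in it is a hypothetical placeholder rather than a quote from any cloud provider, and real bills also include storage, network egress, and idle capacity.

```python
def monthly_cost(
    requests_per_day: int,
    cost_per_1k_requests: float,
    retrains_per_month: int,
    cost_per_retrain: float,
) -> dict:
    """Split an estimated monthly bill into inference and retraining components."""
    inference = requests_per_day * 30 / 1000 * cost_per_1k_requests  # assumes a 30-day month
    retraining = retrains_per_month * cost_per_retrain
    return {"inference": inference, "retraining": retraining, "total": inference + retraining}


# Hypothetical prices: $0.02 per 1,000 inferences and $400 per retraining run.
launch = monthly_cost(10_000, 0.02, retrains_per_month=1, cost_per_retrain=400.0)
at_scale = monthly_cost(2_000_000, 0.02, retrains_per_month=4, cost_per_retrain=400.0)
print(launch)    # inference about $6, one monthly retrain $400
print(at_scale)  # inference about $1,200, weekly retraining $1,600
```

Even with invented prices, the structure shows why both levers matter: inference cost scales with traffic, while retraining cost scales with how often the model is refreshed.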

Consequence: Without careful optimization, infrastructure costs can spiral, eroding return on investment. Businesses may also face trade-offs between accuracy and affordability, forcing difficult decisions about model complexity and retraining cycles.

-

Integration Challenges with Legacy Systems

AI systems rarely operate in isolation; they must integrate with existing IT infrastructure, applications, and workflows. Many enterprises still depend on legacy systems built decades ago, which were never designed to accommodate modern AI workloads.

Integration hurdles include:

- Data incompatibility: Legacy systems may store data in outdated formats or siloed databases, complicating ingestion into modern AI pipelines.

- Limited APIs: Older systems often lack robust APIs, making real-time communication with AI models difficult.

- Operational disruption: Retrofitting AI into established workflows can disrupt daily operations if not carefully managed.

- Skill gaps: Internal IT staff may lack the expertise to bridge modern AI frameworks with legacy architectures.

Example: A bank introducing AI-driven credit scoring must integrate it with decades-old mainframes used for customer account management. Without middleware or custom connectors, the AI system cannot access the data it needs or feed results back into core banking workflows.
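Much of this middleware amounts to translating legacy record formats into structures an AI pipeline can consume. The sketch below decodes a fixed-width account record from EBCDIC (Python's `cp037` codec) into typed fields. The record layout is entirely hypothetical; real layouts come from the system's own record specifications, and production connectors would also handle other code pages and packed-decimal fields.

```python
from decimal import Decimal

# Hypothetical fixed-width layout: (field name, start offset, length).
ACCOUNT_LAYOUT = [
    ("account_id", 0, 10),
    ("customer_name", 10, 20),
    ("balance_cents", 30, 12),
    ("status", 42, 1),
]


def parse_record(raw: bytes, encoding: str = "cp037") -> dict:
    """Decode one fixed-width record (EBCDIC code page 037 by default) into typed fields."""
    text = raw.decode(encoding)
    fields = {name: text[start:start + length].strip() for name, start, length in ACCOUNT_LAYOUT}
    return {
        "account_id": fields["account_id"],
        "customer_name": fields["customer_name"],
        # Decimal avoids floating-point rounding on monetary amounts.
        "balance": Decimal(int(fields["balance_cents"])) / 100,
        "active": fields["status"] == "A",
    }


# Simulate a record as a mainframe might emit it.
line = "0000123456" + "JANE DOE".ljust(20) + "000000150075" + "A"
record = parse_record(line.encode("cp037"))
print(record)
```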

Consequence: Poor integration reduces adoption and may limit AI’s value to isolated pilots rather than enterprise-wide transformation. In worst cases, integration failures lead to downtime, duplicated effort, or inconsistent decision-making.

-

Regulatory and Compliance Updates

AI regulations are evolving rapidly as governments and industry bodies respond to ethical concerns, security risks, and fairness issues. For organizations maintaining AI systems, compliance is not a one-time certification but a continuous responsibility.

Regulatory challenges include:

- Dynamic laws: Frameworks such as the EU AI Act, U.S. sector-specific guidelines, and Asia-Pacific regulations are still in flux, requiring constant updates.

- Jurisdictional variation: Multinational companies must comply with different, sometimes conflicting, regulations across regions.

- Documentation requirements: Regulators demand detailed records of training data, model versions, and decision-making processes.

- Auditability: Organizations must prove that AI systems are explainable, fair, and aligned with stated objectives.

Example: A healthcare AI application must comply with HIPAA in the United States, GDPR in Europe, and potentially stricter local laws in other jurisdictions. This requires ongoing maintenance of documentation, data handling protocols, and audit logs.
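Audit logs carry more weight with regulators when they are tamper-evident. One common technique is to chain entries by hash, so that altering any past entry invalidates the chain from that point on. The in-memory sketch below uses SHA-256 from the standard library; the event fields, model version, and dataset names are hypothetical, and a production system would write entries to append-only storage rather than a Python list.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log where each entry commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: list = []

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"timestamp": datetime.now(timezone.utc).isoformat(), "event": event, "prev_hash": prev_hash}
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; return False if any entry was altered or reordered."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: entry[k] for k in ("timestamp", "event", "prev_hash")}
            if entry["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"action": "model_deployed", "model_version": "triage-v7", "dataset": "ehr-2024-05"})
log.append({"action": "record_access", "user": "analyst-42", "purpose": "quality review"})
print(log.verify())  # True
log.entries[0]["event"]["model_version"] = "triage-v8"  # simulated tampering
print(log.verify())  # False
```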

Consequence: Failure to keep pace with compliance exposes businesses to fines, legal liabilities, and reputational harm. More importantly, non-compliance may bar organizations from deploying AI in certain markets altogether.

The Compounding Effect of Challenges

These challenges do not exist in isolation—they compound one another. For example, drift can exacerbate bias if underrepresented groups change behavior faster than the majority population. Integration difficulties with legacy systems can slow retraining, worsening drift and accuracy decline. Rising infrastructure costs may pressure organizations to retrain less frequently, indirectly harming fairness or compliance.

The complexity of maintaining AI lies in this interconnected web of challenges. Successful organizations adopt holistic strategies that monitor technical, operational, and ethical factors simultaneously, supported by multidisciplinary teams that combine data science, IT, legal, and domain expertise.

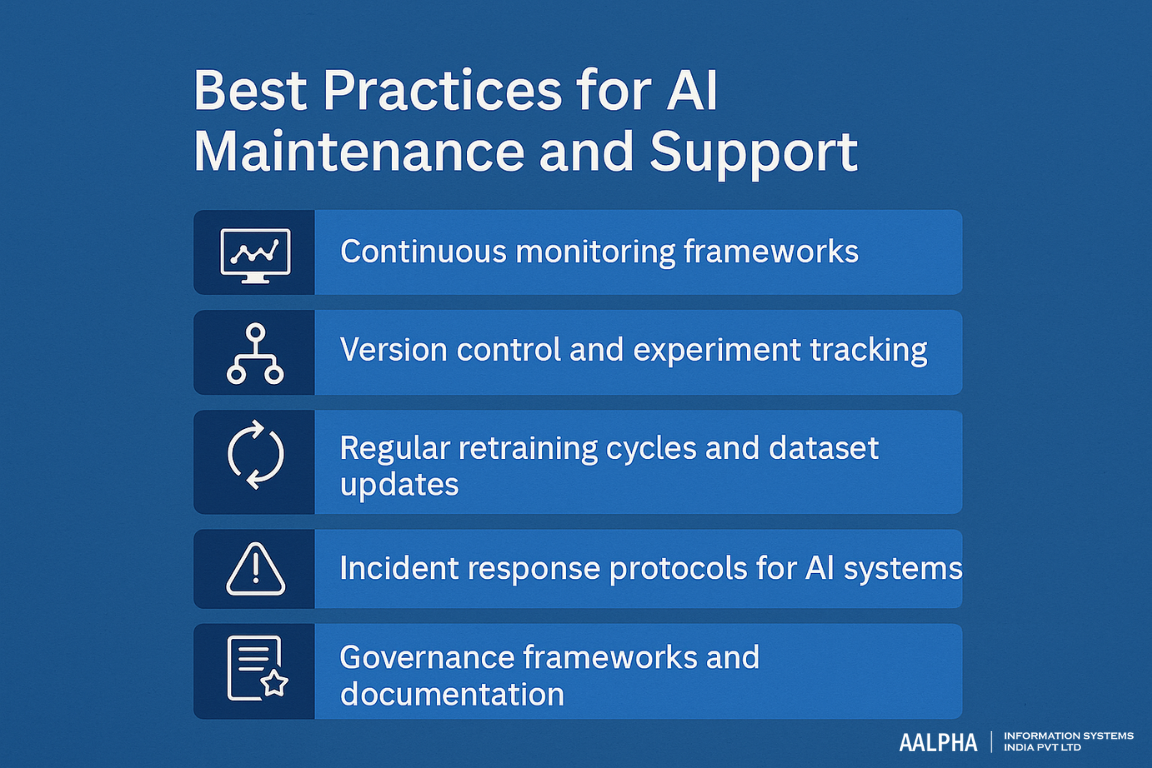

Best Practices for AI Maintenance and Support

As AI systems become core components of business operations, ensuring their stability, fairness, and adaptability is no longer optional. Organizations that treat AI as “deploy once and forget” risk facing declining accuracy, compliance violations, and reputational damage. To mitigate these risks, businesses must adopt structured best practices that keep AI models relevant and reliable over time. The most critical practices include implementing continuous monitoring frameworks, maintaining version control and experiment tracking, conducting regular retraining cycles, establishing incident response protocols, and enforcing governance with robust documentation.

-

Continuous Monitoring Frameworks

The first pillar of AI maintenance is continuous monitoring. Unlike static software, AI performance can degrade quickly as data distributions change, user behavior evolves, or external conditions shift. Continuous monitoring frameworks provide real-time visibility into system health and ensure problems are detected before they cause significant harm.

Core elements of monitoring include:

- Performance metrics: Tracking accuracy, precision, recall, and F1 scores across different datasets and user groups.

- Drift detection: Identifying data or concept drift by comparing live data distributions against training benchmarks.

- Latency and throughput: Measuring system responsiveness to ensure service-level agreements (SLAs) are met.

- Alert systems: Setting thresholds that trigger notifications when models deviate from expected behavior.
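The alerting element can start as a comparison of each monitored metric against a bound. A minimal sketch follows, assuming metrics have already been computed for a monitoring window; the metric names and threshold values are hypothetical and would normally be derived from each model's baseline and SLAs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    direction: str  # "min": alert if the value falls below; "max": alert if it rises above


# Hypothetical thresholds for a recommendation model.
THRESHOLDS = [
    Threshold("precision", 0.80, "min"),
    Threshold("recall", 0.70, "min"),
    Threshold("p95_latency_ms", 250.0, "max"),
    Threshold("psi_max", 0.20, "max"),
]


def evaluate(window_metrics: dict) -> list:
    """Return an alert message for every metric that is missing or breaches its threshold."""
    alerts = []
    for t in THRESHOLDS:
        value = window_metrics.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: missing from monitoring window")
        elif (t.direction == "min" and value < t.limit) or (t.direction == "max" and value > t.limit):
            alerts.append(f"{t.metric}={value} breaches {t.direction} limit {t.limit}")
    return alerts


window = {"precision": 0.83, "recall": 0.64, "p95_latency_ms": 310.0, "psi_max": 0.08}
for alert in evaluate(window):
    print(alert)  # in practice, forwarded to an on-call or paging system
```

Treating a missing metric as an alert is a deliberate choice: a silent monitoring pipeline is itself a failure worth surfacing.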

Example: A retail recommendation engine might perform well during standard shopping periods but experience performance drops during seasonal sales due to unusual purchasing behavior. Monitoring frameworks detect these anomalies in real time, prompting engineers to retrain or recalibrate models before customer experience suffers.

Modern monitoring tools such as Arize AI and Evidently AI automate drift detection and provide dashboards for multiple stakeholders, from data scientists to compliance officers. By embedding monitoring as a continuous process, organizations build resilience against unpredictable changes.

-

Version Control and Experiment Tracking

AI development is iterative. Multiple experiments are run to test new features, architectures, or datasets. Without systematic version control and experiment tracking, organizations risk confusion, reproducibility failures, and compliance gaps.

Best practices include:

- Model versioning: Assigning unique identifiers to every trained model, along with metadata on training data, hyperparameters, and performance benchmarks.

- Data versioning: Maintaining snapshots of datasets used in each experiment to ensure reproducibility and auditability.

- Experiment tracking systems: Recording results of each training run, including performance metrics and configuration details.

- Rollback mechanisms: Allowing teams to revert to previous models if new deployments underperform.
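These four practices come together in a model registry. The in-memory sketch below is hypothetical and is not the API of MLflow or any other tool: each registered version records a content hash of its training data, its hyperparameters, and its metrics, and the promotion history makes rollback a single call.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    data_sha256: str  # fingerprint of the exact training snapshot
    hyperparameters: dict
    metrics: dict


@dataclass
class ModelRegistry:
    versions: dict = field(default_factory=dict)
    history: list = field(default_factory=list)  # promotion order; last entry is in production

    def register(self, training_data: bytes, hyperparameters: dict, metrics: dict) -> ModelVersion:
        version = len(self.versions) + 1
        mv = ModelVersion(version, hashlib.sha256(training_data).hexdigest(), hyperparameters, metrics)
        self.versions[version] = mv
        return mv

    def promote(self, version: int) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown model version {version}")
        self.history.append(version)

    def production(self) -> ModelVersion:
        return self.versions[self.history[-1]]

    def rollback(self) -> ModelVersion:
        """Revert production to the previously promoted version."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.production()


registry = ModelRegistry()
v1 = registry.register(b"snapshot-2024-04", {"max_depth": 6}, {"auc": 0.91})
v2 = registry.register(b"snapshot-2024-05", {"max_depth": 8}, {"auc": 0.93})
registry.promote(v1.version)
registry.promote(v2.version)
print(registry.rollback().version)  # 1: v2 underperformed in production
```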

Example: A financial institution deploying credit scoring models must keep detailed records to demonstrate compliance with regulatory audits. If a regulator asks why a particular decision was made, the institution can trace it back to the exact model version and dataset used at the time.

Platforms such as MLflow, Weights & Biases, and DVC (Data Version Control) streamline this process, providing centralized repositories for tracking models and experiments. This not only supports compliance but also fosters collaboration across teams, ensuring transparency and consistency.

-

Regular Retraining Cycles and Dataset Updates

AI systems inevitably lose accuracy over time as new data diverges from historical patterns. Regular retraining cycles are essential to maintain relevance. This practice involves updating datasets, retraining models, validating results, and deploying refreshed versions systematically.

Key considerations for retraining include:

- Frequency: Determined by the pace of change in the domain. A fraud detection model may need weekly updates, while a climate forecasting model might be retrained seasonally.

- Data freshness: Ensuring retraining incorporates the most recent, high-quality data.

- Validation protocols: Comparing new models against baselines to ensure improvements in accuracy and fairness.

- Automation: Leveraging MLOps pipelines to automate retraining and deployment, reducing manual overhead.
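The validation protocol can be encoded as an explicit promotion gate in an automated pipeline. The sketch below compares a retrained challenger with the current production (champion) model on the same holdout data and promotes it only if accuracy improves by a minimum margin and the fairness gap does not widen beyond a tolerance. The metrics and both tolerances are hypothetical choices.

```python
from typing import NamedTuple


class EvalResult(NamedTuple):
    accuracy: float
    fairness_gap: float  # e.g. largest accuracy difference between subgroups


def should_promote(
    champion: EvalResult,
    challenger: EvalResult,
    min_accuracy_gain: float = 0.005,
    max_gap_increase: float = 0.01,
) -> tuple:
    """Decide whether a retrained model may replace the production model."""
    if challenger.accuracy < champion.accuracy + min_accuracy_gain:
        return False, "accuracy gain below minimum"
    if challenger.fairness_gap > champion.fairness_gap + max_gap_increase:
        return False, "fairness gap widened beyond tolerance"
    return True, "challenger passes all checks"


champion = EvalResult(accuracy=0.880, fairness_gap=0.030)
print(should_promote(champion, EvalResult(accuracy=0.902, fairness_gap=0.034)))  # (True, 'challenger passes all checks')
print(should_promote(champion, EvalResult(accuracy=0.910, fairness_gap=0.070)))  # (False, 'fairness gap widened beyond tolerance')
```

Checking fairness alongside accuracy prevents a retrain from quietly trading one for the other.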

Example: A voice recognition system may initially perform well but struggle as new slang or accents become common. Scheduled retraining cycles incorporating updated audio data help the model adapt to linguistic diversity, sustaining user satisfaction.

Organizations that fail to plan retraining cycles often find themselves reacting to performance crises. By scheduling proactive updates, they avoid sudden declines and ensure that AI systems remain aligned with business goals.

-

Incident Response Protocols for AI Systems

Even with strong monitoring and retraining practices, AI systems can encounter unexpected failures. These may include sudden spikes in error rates, biased outputs, or adversarial attacks. Incident response protocols ensure organizations react quickly and consistently when things go wrong.

Core steps in AI incident response include:

- Detection: Monitoring systems flag anomalies or failures.

- Diagnosis: Engineers investigate root causes, analyzing logs, inputs, and outputs.

- Containment: Faulty models are rolled back, throttled, or taken offline to minimize impact.

- Resolution: Models are patched, retrained, or replaced to restore functionality.

- Post-mortem review: Teams document the incident, identify preventive measures, and update protocols.
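Detection and containment can be partly automated. The sketch below wraps a model in a simple error-rate circuit breaker: once the share of failed or invalid predictions in a sliding window exceeds a threshold, traffic goes to a fallback (for example, a conservative default that routes cases to human review) until the incident is resolved. The triage model, fallback value, window size, and threshold are all hypothetical.

```python
from collections import deque
from typing import Callable, Optional


class ModelCircuitBreaker:
    """Serve a fallback once the primary model's recent error rate is too high."""

    def __init__(
        self,
        primary: Callable[[dict], Optional[float]],
        fallback: Callable[[dict], float],
        window: int = 100,
        max_error_rate: float = 0.2,
    ) -> None:
        self.primary = primary
        self.fallback = fallback
        self.outcomes: deque = deque(maxlen=window)  # True marks an error
        self.max_error_rate = max_error_rate
        self.tripped = False

    def predict(self, features: dict) -> float:
        if self.tripped:  # containment: the faulty model is out of the serving path
            return self.fallback(features)
        try:
            score = self.primary(features)
            # Detection: None, NaN, or out-of-range scores count as errors.
            error = score is None or not 0.0 <= score <= 1.0
        except Exception:
            score, error = None, True
        self.outcomes.append(error)
        window_full = len(self.outcomes) == self.outcomes.maxlen
        if window_full and sum(self.outcomes) / len(self.outcomes) > self.max_error_rate:
            self.tripped = True  # in practice, also page the on-call team here
        return self.fallback(features) if error else score

    def reset(self) -> None:
        """Re-enable the primary model after resolution and redeployment."""
        self.outcomes.clear()
        self.tripped = False


def triage_model(features: dict) -> Optional[float]:
    # Hypothetical model: corrupted inputs produce an invalid (NaN) score.
    return float("nan") if features.get("corrupted") else 0.4


breaker = ModelCircuitBreaker(triage_model, fallback=lambda f: 0.5, window=10, max_error_rate=0.3)
for i in range(10):
    breaker.predict({"corrupted": i >= 5})  # second half of the window has bad input
print(breaker.tripped)  # True
```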

Example: A healthcare AI system used for triaging patient cases may suddenly misclassify symptoms due to corrupted input data. An effective incident response protocol ensures the system is quickly rolled back, physicians are alerted, and retraining occurs before redeployment.

Incident management platforms such as PagerDuty or Opsgenie can be integrated into AI pipelines to ensure timely escalation. The goal is to minimize downtime and preserve trust in AI systems, particularly in industries where errors have high stakes.

-

Governance Frameworks and Documentation

AI maintenance is not solely technical—it also requires governance structures that ensure accountability, transparency, and compliance. Governance frameworks provide the policies, standards, and oversight needed to manage AI responsibly across its lifecycle.

Best practices in governance include:

- Policy frameworks: Defining rules for model deployment, retraining frequency, and decommissioning.

- Ethical oversight: Establishing review boards or committees to monitor fairness, bias, and explainability.

- Audit trails: Maintaining documentation of data sources, training methods, model versions, and decision-making processes.

- Stakeholder communication: Ensuring that both technical and non-technical stakeholders understand how AI systems are managed.

Example: Under the EU AI Act, providers of high-risk AI systems must maintain technical documentation describing how those systems are designed, trained, and intended to be used. Governance frameworks that include detailed audit trails and accountability structures make compliance more efficient and defensible.

Documentation is not just a regulatory requirement; it also supports internal learning and risk management. By codifying decisions, organizations avoid repeating mistakes and create institutional knowledge that accelerates future development.

-

Building a Culture of Continuous AI Care

Adopting these best practices is not a one-time exercise but a cultural shift. Organizations must treat AI as a living system that requires continuous care. This involves empowering multidisciplinary teams—including data scientists, IT professionals, compliance officers, and domain experts—to collaborate seamlessly. It also requires investment in tools that automate monitoring, versioning, and retraining, reducing reliance on ad-hoc fixes.

Ultimately, best practices ensure that AI systems remain accurate, ethical, and scalable. They allow businesses to extract sustained value from their AI investments while minimizing risks of failure, bias, and non-compliance. In a world where AI is increasingly mission-critical, these practices form the difference between organizations that thrive and those that falter under the weight of neglected systems.

Tools and Technologies for AI Maintenance

Best practices in AI maintenance cannot succeed without the right supporting technologies. The complexity of monitoring models, retraining them, managing infrastructure, and ensuring compliance requires a combination of specialized tools. These technologies have matured under the banner of MLOps (Machine Learning Operations), bringing automation and scalability to AI maintenance. The following categories—MLOps platforms, monitoring tools, infrastructure orchestration, retraining automation, and security/compliance toolkits—represent the backbone of modern AI support systems.

-

MLOps Platforms (Kubeflow, MLflow, and Others)

MLOps platforms provide the foundation for maintaining AI systems at scale. They automate the end-to-end lifecycle of machine learning, from data ingestion to deployment and retraining. These platforms bring practices from DevOps into the machine learning world, emphasizing reproducibility, scalability, and continuous integration.

Key capabilities of MLOps platforms include:

- Pipeline automation: Defining workflows for preprocessing, training, evaluation, and deployment.

- Experiment tracking: Capturing metadata, hyperparameters, and results for reproducibility.

- Model registry: Centralized repositories to manage multiple model versions.

- Continuous integration/continuous deployment (CI/CD): Automating the rollout of new models while allowing rollback if problems occur.

Examples:

- Kubeflow: An open-source platform built on Kubernetes, designed for large-scale machine learning pipelines. It provides tools for distributed training, model serving, and monitoring, making it popular for enterprises with complex AI systems.

- MLflow: A lightweight, open-source platform focused on experiment tracking, model packaging, and deployment. Its simplicity makes it ideal for teams beginning to scale AI operations.

- SageMaker (AWS) and Vertex AI (Google Cloud): Managed services that integrate MLOps pipelines with cloud infrastructure, reducing the burden of custom setup.

MLOps platforms serve as the control hub, ensuring AI systems can evolve while maintaining governance and reproducibility.

-

Monitoring Tools (Evidently AI and Arize)

Model monitoring tools are critical for detecting issues such as drift, bias, or performance degradation in real time. They allow teams to transition from reactive troubleshooting to proactive maintenance.

Key functions of monitoring tools include:

- Drift detection: Identifying changes in data distributions or model outputs that indicate declining accuracy.

- Bias and fairness analysis: Auditing models for unequal performance across demographic groups.

- Performance dashboards: Visualizing accuracy, precision, recall, and latency for ongoing oversight.

- Alerts and diagnostics: Automatically notifying teams when models deviate from expected thresholds.

Examples:

- Evidently AI: An open-source library that provides extensive drift detection, data validation, and performance monitoring. It integrates easily into existing pipelines, making it suitable for organizations seeking flexible and transparent monitoring.

- Arize AI: A commercial platform offering comprehensive monitoring, including explainability features, anomaly detection, and advanced visualizations. It is built for managing many models at scale, with views aimed at both engineers and business stakeholders.

By deploying these tools, organizations gain visibility into model health, ensuring they can act before small problems escalate into major failures.
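Monitoring libraries typically implement drift detection with standard statistical tests. To show what one such test computes, the sketch below implements the two-sample Kolmogorov-Smirnov statistic: the largest gap between the empirical cumulative distributions of a reference sample and a live sample. It is a standard-library sketch that reports only the statistic (no p-value); the sample data and the 0.1 alert threshold are hypothetical.

```python
from bisect import bisect_right


def ks_statistic(reference: list, live: list) -> float:
    """Two-sample KS statistic: max |F_ref(x) - F_live(x)| over all observed x."""
    ref, cur = sorted(reference), sorted(live)
    n, m = len(ref), len(cur)
    max_gap = 0.0
    for x in ref + cur:
        # Empirical CDF at x = share of the sample less than or equal to x.
        gap = abs(bisect_right(ref, x) / n - bisect_right(cur, x) / m)
        max_gap = max(max_gap, gap)
    return max_gap


reference = [0.1 * i for i in range(100)]      # hypothetical training-time feature values
shifted = [0.1 * i + 2.0 for i in range(100)]  # live values shifted upward
print(ks_statistic(reference, reference))  # 0.0
print(ks_statistic(reference, shifted))    # roughly 0.2
if ks_statistic(reference, shifted) > 0.1:  # hypothetical alert threshold
    print("Drift alert: live distribution differs from training baseline")
```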

-

Infrastructure Orchestration (Kubernetes and Docker)

AI systems demand significant computational resources, which must be provisioned and managed efficiently. Infrastructure orchestration tools like Kubernetes and Docker provide the flexibility and scalability needed to run AI workloads reliably.

Docker: A containerization platform that packages AI models and their dependencies into portable containers. This ensures models run consistently across environments—from local testing to cloud deployment.

Kubernetes: A container orchestration platform that automates deployment, scaling, and management of containerized applications. For AI, Kubernetes enables:

- Scalability: Automatically adjusting resources based on demand.

- Resilience: Restarting failed containers and distributing workloads across nodes.

- Flexibility: Running hybrid workloads across cloud and on-prem environments.

- Integration with GPUs/TPUs: Efficiently scheduling workloads that require specialized hardware.

Example: A financial institution running multiple fraud detection models can use Kubernetes to ensure high availability, scaling resources during transaction spikes and minimizing costs during off-peak periods.
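Kubernetes' Horizontal Pod Autoscaler bases its decisions on the ratio of the observed metric to its target, roughly desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that arithmetic for the fraud-detection example so the scaling behavior is easy to reason about. It ignores stabilization windows and scaling policies, and the replica bounds and per-pod request target are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 2,
    max_replicas: int = 50,
    tolerance: float = 0.1,
) -> int:
    """Replica count under the HPA ratio rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical target: each pod should average about 200 fraud-scoring requests per second.
print(desired_replicas(4, current_metric=520.0, target_metric=200.0))   # transaction spike: 11
print(desired_replicas(11, current_metric=40.0, target_metric=200.0))   # off-peak: 3
```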

Together, Docker and Kubernetes provide the backbone of AI infrastructure management, enabling organizations to run models at scale without manual intervention.

-

Automation for Retraining and Deployment

AI systems must be retrained regularly to combat model drift and adapt to new data. Manual retraining is time-consuming and error-prone, which makes automation essential.

Key elements of retraining automation include:

- Scheduled retraining pipelines: Automatically retraining models based on calendar intervals (e.g., weekly, monthly).

- Trigger-based retraining: Initiating updates when monitoring tools detect drift or declining performance.

- Automated validation: Testing retrained models against benchmarks to confirm accuracy before deployment.

- Canary deployments: Gradually rolling out updated models to subsets of users, reducing risk of system-wide errors.
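Canary deployments need a stable way to decide which users see the updated model. One common approach, sketched below, hashes each user ID into a bucket from 0 to 99 so the same user consistently lands on the same version while the canary percentage is raised step by step. The model names and user IDs are hypothetical.

```python
import hashlib


def assign_model(user_id: str, canary_percent: int, stable: str = "recs-v12", canary: str = "recs-v13") -> str:
    """Deterministically route a user to the stable or canary model (names are hypothetical)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # same user, same bucket, every time
    return canary if bucket < canary_percent else stable


users = [f"user-{i}" for i in range(10_000)]
for percent in (1, 10, 50):
    share = sum(assign_model(u, percent) == "recs-v13" for u in users) / len(users)
    print(f"canary at {percent}%: {share:.1%} of users on recs-v13")
```

Because the bucket depends only on the user ID, raising the percentage from 10 to 50 keeps the original 10% on the new model, so no user flips back and forth during the rollout.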

Example: An eCommerce recommendation system might automatically retrain weekly, incorporating the latest purchase data. Drift detection tools can trigger additional retraining cycles during unusual shopping events, such as Black Friday.
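Scheduled and trigger-based retraining can be combined in a single decision function. A minimal sketch, assuming the drift score comes from a monitoring tool (for instance, a PSI value); the seven-day interval, the 0.2 drift threshold, and the one-day cooldown that prevents back-to-back retrains during a volatile event are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional


def retraining_reason(
    now: datetime,
    last_trained: datetime,
    drift_score: float,
    interval: timedelta = timedelta(days=7),
    drift_threshold: float = 0.2,
    cooldown: timedelta = timedelta(days=1),
) -> Optional[str]:
    """Return why a retrain should start now, or None if it should not."""
    elapsed = now - last_trained
    if elapsed >= interval:
        return "scheduled"
    if drift_score > drift_threshold and elapsed >= cooldown:
        return "drift-triggered"
    return None


last = datetime(2024, 11, 25, 2, 0)
print(retraining_reason(datetime(2024, 12, 2, 2, 0), last, drift_score=0.05))    # scheduled
print(retraining_reason(datetime(2024, 11, 29, 9, 0), last, drift_score=0.35))   # drift-triggered
print(retraining_reason(datetime(2024, 11, 25, 12, 0), last, drift_score=0.35))  # None (cooldown)
```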

Platforms like Kubeflow Pipelines, Airflow, and cloud-native tools (e.g., SageMaker Pipelines) help orchestrate retraining workflows. Automated deployment ensures refreshed models move seamlessly into production, closing the loop between monitoring and maintenance.

-

Security and Compliance Toolkits

Security and compliance are inseparable from AI maintenance. As regulations evolve and cyber threats grow, organizations need toolkits that safeguard sensitive data, prevent adversarial attacks, and ensure regulatory adherence.

Key components of security and compliance tools include:

- Data encryption: Protecting sensitive data at rest and in transit.

- Access controls: Enforcing role-based permissions to limit exposure.

- Audit trails: Recording every model version, dataset, and decision for accountability.

- Bias detection and explainability: Ensuring fairness and transparency, which are increasingly mandated by laws such as GDPR and the EU AI Act.

- Adversarial defense tools: Detecting and mitigating malicious attempts to manipulate AI inputs.
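At its core, role-based access control maps roles to permitted actions and denies everything else. The sketch below is a deliberately small, deny-by-default illustration that also records each decision for the audit trail. The roles and permission names are hypothetical, and real deployments would rely on their platform's identity and access management features rather than a hand-rolled table.

```python
# Hypothetical role-to-permission mapping for an AI platform.
ROLE_PERMISSIONS: dict = {
    "data_scientist": frozenset({"read:features", "train:model", "read:metrics"}),
    "ml_engineer": frozenset({"read:metrics", "deploy:model", "rollback:model"}),
    "auditor": frozenset({"read:audit_log", "read:metrics"}),
}


def is_allowed(roles: set, permission: str) -> bool:
    """Deny by default: allow only if at least one known role grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)


def authorize(user: str, roles: set, permission: str, audit: list) -> bool:
    allowed = is_allowed(roles, permission)
    # Record every decision, granted or denied, for later audits.
    audit.append(f"{user} {permission} {'GRANTED' if allowed else 'DENIED'}")
    return allowed


audit_trail: list = []
print(authorize("alice", {"data_scientist"}, "deploy:model", audit_trail))  # False
print(authorize("bob", {"ml_engineer"}, "deploy:model", audit_trail))       # True
print(audit_trail)
```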

Examples:

- Fiddler AI: Provides explainability and bias detection, helping organizations maintain trust and comply with fairness requirements.

- IBM Watson OpenScale: Offers monitoring, explainability, and compliance features integrated with enterprise environments.

- Cloud-native security suites: Amazon GuardDuty, Microsoft Defender for Cloud (formerly Azure Security Center), and Google Cloud Security Command Center provide infrastructure-level protection for AI workloads.

Example in practice: A healthcare provider using AI for patient triage must comply with HIPAA in the U.S. and GDPR in Europe. Compliance toolkits help enforce data handling rules, generate audit logs, and detect potential violations before regulators intervene.

-

The Interplay of Tools in a Maintenance Ecosystem

No single tool addresses all AI maintenance needs. MLOps platforms provide the overarching structure, monitoring tools deliver visibility, orchestration frameworks manage infrastructure, retraining pipelines ensure adaptability, and compliance toolkits enforce governance. When integrated, these technologies form a resilient ecosystem that supports continuous AI operations.

For instance, a retail organization might use MLflow for version control, Evidently AI for drift detection, Kubernetes for scaling, automated pipelines for retraining, and Fiddler AI for compliance checks. Together, this toolchain ensures the organization’s recommendation engine stays accurate, fair, and trustworthy under dynamic market conditions.

Outsourcing vs In-House AI Support

Once AI systems are deployed, the question of who maintains and supports them becomes a strategic decision. Some organizations choose to build in-house teams, while others outsource to specialized providers. This decision often shapes long-term efficiency, scalability, and costs. Many also adopt hybrid models, blending internal oversight with external expertise. The right choice depends on company size, industry, budget, and risk tolerance. Evaluating the pros and cons of each approach, understanding the needs of startups versus enterprises, and weighing costs against long-term trade-offs are critical for sustaining AI value.

Pros and Cons of Outsourcing AI Support

Advantages of outsourcing:

- Specialized expertise: External vendors bring domain-specific skills in monitoring, retraining, and compliance that may be difficult to build internally.

- Faster implementation: Service providers often have prebuilt pipelines, monitoring frameworks, and toolchains that accelerate AI support setup.

- Cost efficiency in the short term: Businesses avoid the upfront investment of hiring data scientists, ML engineers, and compliance officers.

- Scalability: Outsourcing partners can ramp services up or down based on workload, supporting unpredictable demand without hiring surges.

- Access to the latest tools: Providers stay current with evolving MLOps technologies, offering solutions that might be expensive or complex to replicate in-house.

Drawbacks of outsourcing:

- Dependency risks: Organizations may become reliant on vendors, limiting internal knowledge and flexibility.

- Data security concerns: Sharing sensitive data with third parties increases the risk of breaches or compliance violations.

- Reduced customization: External providers may offer standardized processes that don’t fully align with unique business needs.

- Hidden costs: Vendors often charge premiums for custom integrations, fast response times, or additional compliance requirements.

- Potential misalignment: Third-party priorities may not always align with long-term business goals, especially if providers focus on short-term fixes.

Example: A retail startup might outsource AI monitoring to quickly stabilize its recommendation engine. However, as it grows, the lack of in-house expertise may hinder innovation and increase reliance on the vendor.

When Startups vs Enterprises Should Outsource

The decision to outsource depends heavily on organizational maturity.

Startups:

For early-stage companies, outsourcing AI support often makes sense. Startups typically lack the budget and talent pool to build specialized teams. Outsourcing provides access to experts and ready-made frameworks, allowing startups to focus on core business activities. For example, a healthtech startup deploying AI diagnostics can outsource compliance monitoring to avoid costly regulatory mistakes, while its internal team focuses on improving the product experience.

Enterprises:

Large organizations, in contrast, usually benefit from building in-house support. Enterprises have greater financial resources and can justify the long-term investment in dedicated teams. In-house support allows tighter integration with proprietary data, greater control over compliance, and alignment with strategic goals. However, enterprises may still outsource niche functions, such as explainability audits or security testing, when external providers have superior tools or expertise.

Example: A multinational bank may keep fraud detection support in-house to protect sensitive customer data but outsource fairness audits to specialized AI ethics firms.

Cost Comparisons and Hidden Trade-Offs

Outsourcing costs:

Outsourced support is typically billed as a subscription or service contract, with fees based on usage volume, number of models, or complexity. While upfront costs are lower than hiring a full team, long-term contracts can become expensive, especially as the number of supported models grows.

In-house costs:

Building internal teams requires significant investment in salaries, training, and infrastructure. A full team might include data engineers, ML engineers, DevOps specialists, and compliance officers. For large enterprises, annual costs can run into millions of dollars. However, these costs become more predictable over time and build internal capabilities that outsourcing cannot provide.

Hidden trade-offs:

- Knowledge retention: In-house teams build organizational knowledge, while outsourcing risks dependency.

- Compliance risk: Outsourcing may complicate audits if vendors fail to maintain proper documentation.

- Speed vs. control: Outsourcing accelerates deployment but reduces control, while in-house provides customization at the cost of slower implementation.

- Flexibility: Outsourcing contracts may lock organizations into specific tools or approaches, limiting agility.

Example: A logistics company outsourcing retraining to a managed service may pay less initially. But as its AI fleet expands to hundreds of models, per-model service fees balloon, making in-house teams more cost-effective in the long term.
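This logistics example can be framed as a break-even calculation: outsourced fees grow roughly linearly with the number of models, while an in-house team carries a large fixed cost plus a smaller marginal cost per model. The figures below are hypothetical placeholders, not market rates; what matters is the shape of the comparison.

```python
import math


def annual_outsourced(models: int, fee_per_model: float) -> float:
    return models * fee_per_model


def annual_in_house(models: int, team_cost: float, marginal_per_model: float) -> float:
    return team_cost + models * marginal_per_model


def break_even_models(fee_per_model: float, team_cost: float, marginal_per_model: float) -> int:
    """Smallest model count at which in-house support is no more expensive than outsourcing."""
    if fee_per_model <= marginal_per_model:
        raise ValueError("outsourcing is always cheaper under these assumptions")
    return math.ceil(team_cost / (fee_per_model - marginal_per_model))


# Hypothetical: $30k per model per year outsourced; a $1.2M team plus $5k per model in-house.
n = break_even_models(30_000, 1_200_000, 5_000)
print(n)  # 48
for models in (10, n, 200):
    print(models, annual_outsourced(models, 30_000), annual_in_house(models, 1_200_000, 5_000))
```

The calculation covers only direct costs; the non-monetary trade-offs listed above, such as knowledge retention and vendor lock-in, still need separate judgment.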

Building a Hybrid Model for Flexibility

Many organizations adopt a hybrid approach, combining the best of both worlds. In this model, core AI maintenance—such as compliance-sensitive monitoring, bias audits, and retraining for mission-critical systems—is handled internally, while specialized or resource-intensive tasks are outsourced.

Advantages of hybrid models include:

- Strategic control: Critical systems remain under direct oversight.

- Cost optimization: Routine tasks are outsourced to save costs, while high-value expertise is retained in-house.

- Access to external innovation: External providers supply tools and methods that complement internal teams.

- Scalability: Hybrid models allow organizations to handle peak workloads with vendor support without permanently expanding staff.

Example: An eCommerce enterprise might keep its personalization engine fully managed in-house for competitive advantage but outsource data labeling and bias audits to external firms. This ensures speed, compliance, and innovation without overburdening internal teams.

Strategic Considerations for Decision-Makers

When choosing between outsourcing, in-house, or hybrid approaches, organizations should ask:

- What is the sensitivity of the data and processes involved?

- Do we have the budget and talent to sustain long-term AI support internally?

- How critical is AI performance to our core business strategy?

- What level of flexibility and customization do we require?

- Can we negotiate vendor contracts that prevent long-term lock-in?

By answering these questions, businesses can align their AI maintenance strategy with broader organizational priorities.

Industry-Specific AI Maintenance Examples

While AI maintenance principles apply broadly, the way they manifest varies significantly across industries. Sector-specific regulations, data types, and operational priorities influence how organizations design and execute support services. Examining key industries—healthcare, finance, retail and eCommerce, manufacturing and logistics, and the legal and public sector—highlights the diverse requirements and unique challenges of maintaining AI in real-world settings.

Healthcare – Patient Data Privacy and Continuous Retraining with New Diagnostics

Healthcare is one of the most promising yet challenging fields for AI. From diagnostic imaging to personalized treatment recommendations, AI systems must operate with high accuracy while safeguarding sensitive patient data.

Maintenance priorities include:

- Patient data privacy: Compliance with HIPAA in the United States, GDPR in Europe, and emerging global regulations requires strict controls over how AI systems handle medical records. Maintenance teams must implement encryption, audit trails, and access controls, while monitoring for unauthorized access or data leaks.

- Continuous retraining: Medical science evolves rapidly. New diagnostic markers, treatment protocols, and disease variants necessitate frequent model retraining. For instance, AI tools that analyze radiology images must be updated when new imaging techniques or classifications are introduced.

- Bias monitoring: Healthcare data often underrepresents minority groups, leading to potential disparities in diagnosis or treatment recommendations. Maintenance involves fairness audits to ensure equitable outcomes.
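One common privacy control is pseudonymizing patient identifiers before records reach training or monitoring pipelines. The sketch below uses a keyed hash (HMAC-SHA256 from Python's `hmac` module) so the same patient always maps to the same token without the raw identifier being stored. The field names are hypothetical and key handling is simplified (in practice the key would come from a secrets manager). Note that pseudonymized data is generally still personal data under GDPR, so this complements other controls rather than replacing them.

```python
import hashlib
import hmac

# Assumption: loaded from a secrets manager in production, never hard-coded.
PSEUDONYM_KEY = b"replace-with-secret-from-key-vault"

DIRECT_IDENTIFIERS = {"name", "phone"}  # hypothetical fields dropped outright


def pseudonymize(record: dict) -> dict:
    """Replace the patient ID with a keyed token and drop direct identifiers."""
    token = hmac.new(PSEUDONYM_KEY, record["patient_id"].encode("utf-8"), hashlib.sha256).hexdigest()
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS and k != "patient_id"}
    cleaned["patient_token"] = token
    return cleaned


raw = {"patient_id": "MRN-004521", "name": "Jane Doe", "phone": "555-0100", "age": 57, "finding": "nodule"}
safe = pseudonymize(raw)
print(safe)  # age, finding, and a 64-character patient_token; no name, phone, or MRN
```

A keyed hash is used instead of a plain SHA-256 because identifiers such as record numbers come from a small, guessable space; without the secret key, an attacker cannot rebuild the mapping by hashing every possible ID.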

Example: During the COVID-19 pandemic, diagnostic AI models trained on pre-pandemic datasets underperformed when identifying new lung pathologies associated with the virus. Maintenance teams had to rapidly incorporate new imaging data, retrain models, and validate outputs with physician oversight to ensure safety.

Challenge: The combination of high regulatory scrutiny and life-or-death consequences makes healthcare AI maintenance one of the most resource-intensive undertakings, requiring multidisciplinary teams of engineers, clinicians, and compliance officers.

Finance – Fraud Detection Models and Compliance Updates

In finance, AI systems are deeply embedded in fraud detection, credit scoring, algorithmic trading, and risk management. These applications operate under strict regulatory environments and require both real-time performance and explainability.

Maintenance priorities include:

- Fraud detection updates: Fraud patterns evolve constantly as criminals adapt to detection methods. Adaptive maintenance involves retraining models on new data, incorporating signals from emerging fraud vectors, and recalibrating thresholds to reduce false positives.

- Regulatory compliance: Financial regulators demand transparency and fairness in AI-driven decisions. Maintenance involves generating audit logs, documenting model rationale, and updating systems to align with new laws or guidelines.

- Infrastructure scalability: Financial transactions occur at massive volumes, especially in payments and trading. Maintenance ensures low-latency predictions while keeping infrastructure costs under control.
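Recalibrating a fraud threshold to control false positives can be sketched as choosing the lowest score cutoff that keeps the flag rate on recently confirmed legitimate transactions at or below a target. This is a minimal illustration under stated assumptions: the model emits a score where higher means more suspicious, the labels of the recent transactions are trusted, and the function names and target value are hypothetical. It needs Python 3.9+ for `math.nextafter`.

```python
import math


def false_positive_rate(legit_scores, threshold):
    """Share of known-legitimate transactions that would be flagged at this threshold."""
    return sum(score >= threshold for score in legit_scores) / len(legit_scores)


def threshold_for_target_fpr(legit_scores, target_fpr):
    """Smallest threshold whose false positive rate on recent legitimate
    traffic does not exceed target_fpr.

    Candidates are the observed scores plus one value just above the maximum,
    which flags nothing, so a threshold is always found.
    """
    candidates = sorted(set(legit_scores))
    candidates.append(math.nextafter(candidates[-1], math.inf))
    for threshold in candidates:  # FPR can only fall as the threshold rises
        if false_positive_rate(legit_scores, threshold) <= target_fpr:
            return threshold


# Hypothetical model scores for transactions later confirmed as legitimate.
recent_legit = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
print(threshold_for_target_fpr(recent_legit, target_fpr=0.2))  # 0.9
```

Raising the threshold this way also lets more fraud through, so a recalibrated threshold should be checked against recall on labeled fraud cases before it goes live.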

Example: A global payments provider may deploy an AI fraud detection system that flags unusual spending patterns. As fraudsters innovate (e.g., synthetic identity fraud), the model must be retrained with new datasets and validated against updated regulatory standards. Failure to update can lead to financial losses and compliance breaches.

Challenge: Finance balances two competing demands—speed and compliance. Maintenance must ensure models detect fraud in milliseconds while providing explainability that satisfies regulators. This dual requirement makes maintenance complex and costly.

Retail & eCommerce – Recommendation Engines and Personalization Upkeep

Retail and eCommerce thrive on personalized user experiences. Recommendation engines, demand forecasting, and chatbots are central to customer engagement and revenue growth. However, these systems face constant pressure to remain relevant as consumer behavior shifts rapidly.

Maintenance priorities include:

- Recommendation engine updates: User preferences evolve daily. AI models must be retrained frequently to incorporate recent browsing and purchase data, especially during seasonal or promotional events.

- Data pipeline reliability: Retail data often comes from multiple sources, including web traffic, mobile apps, and supply chain systems. Maintaining data pipelines is critical to prevent broken recommendations or outdated personalization.

- Scalability: Traffic spikes during holidays or flash sales require scaling infrastructure without compromising response times.

- Bias monitoring: Maintenance includes auditing recommendations to ensure they don’t unfairly prioritize certain products or vendors because of skewed datasets.
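One simple way to keep recommendations current between full retraining runs is to weight interactions by recency, so a new trend can overtake an older favorite. The sketch below is a popularity baseline with exponential time decay, not a full recommendation model; the half-life parameter, item names, and function names are hypothetical.

```python
from collections import defaultdict


def decayed_popularity(events, now, half_life_days=7.0):
    """Score items by interaction counts, halving each event's weight every
    half_life_days so recent activity dominates.

    events: iterable of (item_id, day) pairs; now: current day on the same scale.
    """
    scores = defaultdict(float)
    for item, day in events:
        age = now - day
        scores[item] += 0.5 ** (age / half_life_days)
    return dict(scores)


def top_k(scores, k):
    """Highest-scoring items first; ties broken alphabetically for stable output."""
    return sorted(scores, key=lambda item: (-scores[item], item))[:k]


# Hypothetical purchase log: an older favorite versus a newly trending item.
events = [
    ("denim_jacket", 0), ("denim_jacket", 1), ("denim_jacket", 2),
    ("recycled_tote", 27), ("recycled_tote", 28),
]
scores = decayed_popularity(events, now=28)
print(top_k(scores, 2))  # ['recycled_tote', 'denim_jacket']
```

With raw counts the jacket (three purchases) would win; with a seven-day half-life the tote's two recent purchases outweigh purchases from four weeks earlier. A shorter half-life reacts faster to trends but is noisier.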

Example: An online fashion retailer may deploy AI to recommend clothing items. If a sudden trend in sustainable fashion emerges, the system must incorporate updated data to suggest relevant products. Without timely retraining, recommendations become outdated, leading to reduced conversion rates.

Challenge: The pace of consumer change requires retail AI maintenance to be almost continuous, supported by automated retraining and real-time monitoring. Neglect can quickly lead to lost revenue and customer dissatisfaction.

Manufacturing & Logistics – Predictive Maintenance for Machinery and Supply Chains

In manufacturing and logistics, AI plays a crucial role in predictive maintenance, supply chain optimization, and demand forecasting. These applications operate in environments where downtime is costly and data streams from sensors must be processed in real time.

Maintenance priorities include:

- Predictive maintenance updates: Models that forecast machine failures must be retrained regularly with new sensor data. Wear-and-tear patterns evolve, and anomalies must be detected early to avoid production halts.

- Integration with IoT systems: AI systems rely on Internet of Things (IoT) sensors, which themselves require calibration and updates. Maintenance ensures smooth data ingestion and processing.

- Supply chain adaptation: Global events such as pandemics, natural disasters, or geopolitical shifts affect supply chain patterns. AI models must adapt to maintain accuracy in forecasting and routing.

- Infrastructure resilience: Downtime in logistics can cause cascading delays. Maintenance ensures infrastructure redundancy and real-time failover mechanisms.
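Early anomaly detection on sensor streams can be as simple as comparing each reading with a rolling mean and standard deviation. The sketch below uses a rolling z-score; the window size, the 3.0 threshold, the warm-up length, and the class name are illustrative assumptions, and production predictive-maintenance models are usually richer (multiple sensors, learned failure signatures).

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flag a sensor reading that sits far from the recent rolling mean."""

    def __init__(self, window=50, threshold=3.0, min_history=10):
        self.history = deque(maxlen=window)  # only the last `window` readings
        self.threshold = threshold           # z-score above which we alert
        self.min_history = min_history       # readings needed before judging

    def update(self, value):
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        # Every reading joins the window, so a lasting shift gradually becomes
        # the new baseline; production systems may quarantine anomalies instead.
        self.history.append(value)
        return is_anomaly


# Hypothetical vibration readings (mm/s) ending in a sudden spike.
readings = [10.0, 10.2, 9.8] * 5 + [14.0]
detector = RollingZScoreDetector()
flags = [detector.update(r) for r in readings]
print(flags[-1])  # True
```

Because the baseline is recomputed from recent data, gradual wear shifts the window along with it; that is convenient for tracking normal drift but means slow degradation needs a separate trend check.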

Example: A logistics company may use AI to optimize delivery routes. During extreme weather events, the model must adapt quickly to reroute shipments and avoid disruptions. Maintenance involves feeding updated environmental and traffic data into the system to recalibrate predictions.

Challenge: The complexity of data sources—spanning sensors, suppliers, and external factors—requires robust pipeline maintenance. Failure in one component can ripple across entire manufacturing or logistics ecosystems.

Legal & Public Sector – Ethical Use and Regulatory Alignment

Legal and public-sector organizations deploy AI in areas such as case research, predictive policing, benefits eligibility assessments, and citizen services. These applications are particularly sensitive because they directly affect people’s rights and public trust.

Maintenance priorities include:

- Ethical oversight: AI systems must be regularly audited to prevent discriminatory or unfair outcomes, especially in policing, sentencing, or benefits allocation.

- Regulatory compliance: Governments are subject to strict transparency requirements. AI models must be explainable, with clear documentation of decision-making processes.

- Data governance: Sensitive legal and citizen data must be handled with the highest privacy standards. Maintenance ensures anonymization, encryption, and compliance with jurisdiction-specific rules.

- Human-in-the-loop validation: Many public-sector AI systems require human oversight to ensure accountability in high-stakes decisions.
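Human-in-the-loop validation is often implemented as a routing rule in front of the model's output. The sketch below assumes two hypothetical policies: any prediction below a confidence floor goes to a reviewer, and certain outcomes (here, a benefits denial) always do, whatever the confidence. The names, labels, and 0.9 floor are illustrative, not a regulatory requirement.

```python
from dataclasses import dataclass


@dataclass
class RoutedDecision:
    case_id: str
    label: str
    confidence: float
    route: str  # "auto" or "human_review"


def route_prediction(case_id, label, confidence, high_stakes_labels, min_confidence=0.9):
    """Send a model output to a human reviewer when the model is unsure or
    when the outcome is one a caseworker must always sign off on."""
    needs_review = confidence < min_confidence or label in high_stakes_labels
    route = "human_review" if needs_review else "auto"
    return RoutedDecision(case_id, label, confidence, route)


# Hypothetical benefits-eligibility outputs; denials always go to a person.
always_review = {"deny"}
for case in [("c1", "approve", 0.97), ("c2", "deny", 0.99), ("c3", "approve", 0.62)]:
    print(route_prediction(*case, high_stakes_labels=always_review).route)
# auto
# human_review
# human_review
```

Logging each `RoutedDecision`, together with the reviewer's final outcome, also produces the audit trail that transparency requirements call for.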

Example: A court system using AI for case prioritization must regularly audit models to ensure they don’t inadvertently disadvantage certain groups. Maintenance may involve retraining models with balanced datasets and providing judges with explainable outputs.

Challenge: In the public sector, the bar for fairness and accountability is higher than in commercial industries. Even small errors can lead to public backlash, erode trust, or trigger lawsuits. Maintenance therefore emphasizes transparency, ethics, and human oversight more than speed or scalability.

The Importance of Industry Context in AI Maintenance

These examples illustrate that AI maintenance is never one-size-fits-all. Healthcare emphasizes privacy and retraining for new diagnostics; finance prioritizes fraud adaptation and compliance; retail demands real-time personalization; manufacturing focuses on predictive reliability; and the legal and public sector requires ethical and transparent oversight.

While the underlying principles—monitoring, retraining, security, and compliance—are consistent, industry context shapes their execution. Organizations that tailor maintenance to their domain’s challenges and regulations are best positioned to sustain AI value and build trust with stakeholders.

Cost of AI Maintenance and Support

AI systems are rarely a “set-and-forget” investment. Once deployed, they demand continuous care to remain accurate, scalable, and compliant. This translates into ongoing costs that can sometimes rival or even exceed the initial development budget. For decision-makers, understanding the financial dimensions of AI maintenance is as important as understanding its technical requirements. Costs are shaped by complexity, scale, and industry regulations; they fall into both ongoing and one-time categories; and they typically cover monitoring, retraining, infrastructure, and compliance. Effective budgeting ensures AI investments continue to generate value rather than drain resources.


Factors Influencing Cost (Complexity, Scale, Industry)

Complexity of the model:

Simple models, such as linear regression or decision trees, require relatively little maintenance. Complex models like large language models (LLMs) or other deep neural networks, however, incur higher costs because they need advanced monitoring, extensive retraining, and specialized infrastructure.

Scale of deployment:

The more users, transactions, or predictions a system processes, the higher the maintenance cost. For instance, an eCommerce recommendation system serving millions of customers incurs far greater infrastructure and retraining expenses than a small internal HR chatbot.

Industry requirements:

Industries with stringent regulations, such as healthcare and finance, face higher costs due to compliance audits, documentation requirements, and data security measures. In contrast, sectors like retail or logistics may spend more on infrastructure scalability but less on regulatory overhead.

Integration complexity:

Systems that must interact with legacy IT platforms or ingest data from numerous sources require more extensive maintenance to ensure pipelines remain reliable. Integration challenges often translate into higher engineering costs.


Ongoing vs One-Time Expenses

AI maintenance involves both recurring and one-off expenditures.

Ongoing expenses:

- Monitoring: Subscription costs for monitoring platforms or salaries for dedicated staff to track performance.

- Retraining: Regular updates to datasets and models, which consume compute resources and staff time.

- Infrastructure: Continuous expenses for cloud resources, GPUs, or on-premises hardware.

- Compliance: Recurrent audits, reporting, and fairness assessments required by regulators.

One-time expenses:

- Initial tool setup: Implementing MLOps platforms, monitoring dashboards, or pipeline automation systems.

- Upgrades: Migrating to new infrastructure or adding capabilities like explainability tools.

- Custom integrations: Developing APIs or middleware to connect AI with legacy systems.

A useful analogy is owning a fleet of vehicles. The initial purchase (development) is just the start; fuel, servicing, insurance, and occasional upgrades (maintenance) determine the total cost of ownership.


Cost Breakdown: Monitoring, Retraining, Infrastructure, Compliance

Monitoring:

Monitoring costs depend on whether businesses use open-source tools or commercial platforms. Open-source libraries like Evidently AI are free to use but require in-house expertise to run. Commercial solutions like Arize AI or IBM Watson OpenScale charge subscription fees, often based on the number of models or transactions monitored. For large enterprises, annual costs can range from tens of thousands to millions of dollars.

Retraining:

Retraining is one of the most resource-intensive activities. Training small models may require only modest compute resources, but retraining large-scale models, especially LLMs or other deep learning systems, can cost hundreds of thousands of dollars per cycle. Frequency also matters: models retrained weekly incur far higher costs than those updated quarterly. Costs include compute (cloud GPUs/TPUs), storage for datasets, and personnel time for validation.
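The effect of retraining frequency is easiest to see as arithmetic: per-cycle cost (compute plus validation time) multiplied by cycles per year, plus storage. The sketch below uses a hypothetical function and purely illustrative dollar figures, not benchmarks.

```python
CYCLES_PER_YEAR = {"weekly": 52, "monthly": 12, "quarterly": 4}


def annual_retraining_cost(frequency, compute_per_cycle, staff_hours_per_cycle,
                           hourly_rate, storage_per_year):
    """Yearly retraining spend: (compute + validation labor) per cycle,
    times cycles per year, plus dataset storage."""
    per_cycle = compute_per_cycle + staff_hours_per_cycle * hourly_rate
    return CYCLES_PER_YEAR[frequency] * per_cycle + storage_per_year


# Illustrative inputs only: $2,000 of GPU time and 20 staff hours at $100/h per
# cycle, plus $5,000/year of dataset storage.
for frequency in ("weekly", "quarterly"):
    print(frequency, annual_retraining_cost(frequency, 2_000, 20, 100, 5_000))
# weekly 213000
# quarterly 21000
```

With identical per-cycle costs, moving from quarterly to weekly retraining multiplies the cycle-driven spend by 13, which is why drift-triggered retraining can be attractive.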

Infrastructure:

Cloud-based infrastructure offers flexibility but comes with variable costs tied to usage. On-demand GPU pricing, storage, and bandwidth quickly add up at scale. Enterprises with consistent workloads sometimes prefer on-premises infrastructure, which has higher upfront costs but lower long-term expenses. Annual infrastructure costs can represent 40–60% of total AI maintenance budgets in compute-heavy applications.
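The cloud versus on-premises trade-off can be framed as a breakeven calculation: on-premises hardware costs more upfront, so it pays off only once its lower monthly running cost has recovered that difference. The sketch below is a simplified model under stated assumptions (flat monthly costs, no hardware refresh, financing, or utilization changes); the function name and figures are hypothetical.

```python
import math


def breakeven_month(cloud_monthly, onprem_upfront, onprem_monthly):
    """First whole month in which cumulative on-prem spend is no higher than
    cumulative cloud spend, or None if on-prem never catches up."""
    monthly_savings = cloud_monthly - onprem_monthly
    if monthly_savings <= 0:
        return None
    # Cumulative costs are equal when upfront + onprem * m == cloud * m.
    return math.ceil(onprem_upfront / monthly_savings)


# Hypothetical figures: $30k/month on cloud GPUs versus $500k of hardware
# plus $10k/month for power, space, and operations.
print(breakeven_month(30_000, 500_000, 10_000))  # 25
```

If the workload is sporadic, the cloud bill shrinks and the breakeven point moves further out, which matches the observation that on-premises infrastructure suits consistent workloads.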

Compliance:

Compliance costs are often underestimated. They include legal reviews, third-party fairness audits, documentation maintenance, and regular system explainability testing. For high-risk industries like healthcare, finance, and government, compliance can account for 20–30% of maintenance budgets. Non-compliance, however, carries far higher costs in the form of fines or reputational damage.

How Businesses Can Budget Effectively

Adopt a total cost of ownership (TCO) perspective:

Organizations should view AI as an ongoing operational expense, not a one-time capital project. Budgeting should account for at least three to five years of continuous maintenance.
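A total cost of ownership view adds one-time costs to recurring costs summed over the planning horizon. The sketch below assumes hypothetical budget lines and an optional annual growth rate for recurring spend; the figures are illustrative, not benchmarks.

```python
def total_cost_of_ownership(one_time, annual_recurring, years, annual_growth=0.0):
    """One-time costs plus recurring costs over `years`, with an optional
    yearly growth rate for recurring spend (e.g. 0.1 for +10% per year)."""
    total = sum(one_time.values())
    yearly = sum(annual_recurring.values())
    for year in range(years):
        total += yearly * (1 + annual_growth) ** year
    return total


# Illustrative budget lines, not benchmarks.
one_time = {"mlops_setup": 80_000, "legacy_integration": 40_000}
annual = {"monitoring": 30_000, "retraining": 60_000,
          "infrastructure": 90_000, "compliance": 20_000}
for years in (3, 5):
    print(years, total_cost_of_ownership(one_time, annual, years))
# 3 720000.0
# 5 1120000.0
```

Over five years in this example, the one-time items account for only about a tenth of the total, which is the point of budgeting AI as an ongoing operational expense.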

Right-size infrastructure:

Avoid over-provisioning expensive GPU clusters if workloads are sporadic. Use auto-scaling cloud services where possible, and consider hybrid models that combine cloud flexibility with on-prem cost efficiency.
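Auto-scaling avoids paying for idle capacity by sizing the serving fleet to observed load. The sketch below mirrors the proportional rule described in the Kubernetes Horizontal Pod Autoscaler documentation (desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance). It is a standalone illustration, not a Kubernetes API call, and the min/max limits and 0.1 tolerance are assumptions chosen for the example.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Proportional scaling rule: grow or shrink the replica count by the
    ratio of observed to target utilization, clamped to [min, max]."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:  # close enough: avoid flapping
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 inference servers at 90% GPU utilization against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
```

The `max_replicas` cap doubles as a cost guardrail: during a traffic spike the fleet grows, but never beyond what the budget allows.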

Leverage open-source tools wisely:

Open-source frameworks like MLflow, Kubeflow, and Evidently AI can significantly reduce licensing costs. However, businesses must balance this with the internal staffing required to manage and maintain these tools.

Automate retraining pipelines:

Automating retraining reduces personnel costs and minimizes errors. MLOps frameworks streamline updates, enabling businesses to retrain only when drift is detected rather than on fixed schedules.
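Drift-triggered retraining needs a drift score and a cutoff. One widely used score is the population stability index, PSI = Σ (actualᵢ − expectedᵢ) · ln(actualᵢ / expectedᵢ), computed over binned feature values. The sketch below assumes equal-width bins over the training-time range and the commonly cited rule-of-thumb cutoff of 0.25; both are conventions, not standards, and the function names are hypothetical.

```python
import math


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """PSI between two samples of one feature, using equal-width bins over the
    baseline's range. Larger values mean a bigger distribution shift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins if hi > lo else 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def should_retrain(baseline, current, threshold=0.25):
    """Trigger retraining only when drift exceeds the threshold."""
    return population_stability_index(baseline, current) > threshold


baseline = [i / 100 for i in range(100)]          # training-time feature values
shifted = [0.9 + i / 1000 for i in range(100)]    # live values crowd the top bin
print(should_retrain(baseline, baseline), should_retrain(baseline, shifted))
# False True
```

In a pipeline, a check like this would run on a schedule for each monitored feature, and only a positive result would launch the (comparatively expensive) retraining job.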

Allocate compliance budgets upfront:

Rather than treating compliance as an afterthought, organizations should assign dedicated budgets for audits, documentation, and fairness testing. This prevents costly surprises during regulatory reviews.