What Is a Video Feedback Platform for Surveys?

A video feedback platform for surveys is a digital system that allows respondents to answer survey questions by recording short video responses instead of selecting predefined options or typing text. At its core, the platform combines survey logic with browser-based or mobile video recording, enabling organizations to collect qualitative feedback in a richer, more human format. Respondents are typically presented with structured questions, open-ended prompts, or dynamically generated follow-up questions and are asked to record brief videos that capture their thoughts in their own words.

Unlike traditional text-based or multiple-choice surveys, video feedback surveys do not force respondents to compress complex opinions into limited answer fields. Text surveys rely on written articulation, time investment, and interpretation, while video surveys allow people to speak naturally, often reducing effort and cognitive load. Modern platforms also support AI-assisted prompts, where follow-up questions adapt based on earlier responses, mimicking a lightweight interview experience at scale. From a data perspective, video feedback platforms collect not just spoken content but also metadata such as response length, pacing, and engagement signals, creating a multidimensional feedback dataset that goes far beyond static survey answers.

Why Text-Based Surveys Are Failing Modern Research

Text-based surveys are increasingly ineffective in capturing meaningful insights, primarily because they were designed for a different era of user behavior. Survey fatigue has become widespread as users are repeatedly asked to complete long forms, leading to rushed answers, skipped questions, or complete abandonment. Completion rates decline sharply when surveys exceed a few minutes, particularly in B2C contexts where respondents have little intrinsic motivation to provide detailed written feedback.

Even when surveys are completed, the quality of responses often falls short. Open-text fields frequently contain short, vague answers that lack context or emotional depth, while multiple-choice questions oversimplify complex opinions. This creates a significant interpretation gap, where researchers must infer sentiment, intent, and urgency from limited signals. In B2B research, stakeholders may provide politically safe or overly neutral responses in writing, masking real concerns. In consumer research, tone and emotional nuance are lost entirely, making it difficult to distinguish between mild dissatisfaction and serious frustration. As a result, organizations collect large volumes of data but struggle to extract insights that genuinely inform product, experience, or strategy decisions.

The Rise of Video-First Customer and User Research

Video-first feedback is gaining traction because it aligns with how people naturally communicate and how modern research teams need to understand users. Video captures tone of voice, facial expressions, pauses, and hesitation, all of which provide critical context that text cannot convey. A short video response can reveal confidence, uncertainty, enthusiasm, or frustration within seconds, enabling researchers to interpret feedback with far greater accuracy and empathy.

In product discovery, video feedback helps teams understand why users behave a certain way, not just what they do. Watching a user explain confusion around a feature often surfaces usability issues that analytics alone cannot detect. In UX research, video responses allow designers to observe emotional reactions to interfaces, workflows, and visual changes without conducting live interviews. Employee feedback programs benefit as well, since video responses often feel more personal and less bureaucratic, encouraging honesty and deeper reflection. For market validation and brand research, video enables teams to test messaging, concepts, and positioning while observing authentic reactions that written answers rarely capture.

As organizations seek faster, more human insight without the cost and logistics of live interviews, video feedback platforms bridge the gap between qualitative depth and quantitative scale. This shift reflects a broader recognition that understanding people requires seeing and hearing them, not just reading what they type.

Market Opportunity and Use Cases for Video Feedback Platforms

Market Size and Growth of Experience Research Platforms

The market for experience research platforms has expanded rapidly as organizations prioritize customer experience, user experience, and employee engagement as strategic differentiators. CX and UX research are no longer treated as occasional initiatives tied to product launches or redesigns. They are now continuous processes embedded into product development, marketing, and operations. This shift has driven sustained growth in feedback intelligence platforms that can capture, analyze, and operationalize user sentiment at scale.

Several structural trends are accelerating adoption. First, digital-first businesses generate constant interaction data but still lack qualitative context around why users behave the way they do. Second, distributed teams and remote work have reduced opportunities for in-person interviews, increasing demand for asynchronous research methods. Third, enterprises are investing heavily in AI-driven analytics to reduce manual analysis time and surface insights faster. Video feedback platforms sit at the intersection of these trends by combining qualitative depth with automation and scalability.

Enterprise adoption has been particularly strong in sectors such as SaaS, eCommerce, financial services, healthcare, and media, where understanding user trust, friction, and perception is critical. Large organizations are moving beyond traditional survey tools toward systems that support video, transcription, sentiment analysis, and automated insight generation. The result is a growing category of experience intelligence platforms that treat feedback not as static data, but as a continuous, decision-driving asset. Video feedback is increasingly viewed not as an experimental add-on, but as a core capability within modern research and insights stacks.

Key Use Cases Across Industries

Product discovery and validation is one of the most common and high-impact use cases for video feedback platforms. Teams use video surveys to understand unmet needs, validate problem statements, and test early concepts before investing in development. Typical questions include why users chose a particular solution, what frustrated them during a task, or how they currently solve a problem. Video performs better here because users can explain context, constraints, and emotions in their own words. A short video often reveals assumptions, workarounds, and expectations that would never surface in a written response.

In UX and usability testing, video feedback allows researchers to observe emotional reactions to interfaces and workflows without conducting live sessions. Users may be asked to describe what they expected a button to do, where they felt confused, or how confident they felt completing a task. Video captures hesitation, uncertainty, and tone, which are essential signals when evaluating usability. Designers can quickly identify friction points by watching a small set of responses rather than interpreting abstract usability scores.

Customer satisfaction and NPS follow-ups benefit significantly from video because they move beyond numeric ratings. Instead of only asking how likely a customer is to recommend a product, teams can ask why they gave that score and what influenced their perception. Video responses reveal whether satisfaction is driven by genuine loyalty, convenience, or lack of alternatives. They also make it easier to distinguish between minor issues and serious dissatisfaction, helping teams prioritize improvements more accurately.

In employee engagement and internal surveys, video feedback reduces the formality and emotional distance associated with traditional HR surveys. Employees may be asked how supported they feel, what challenges they face, or what changes would improve their work experience. Video encourages more thoughtful and candid responses, especially when paired with clear privacy assurances. Leaders gain a clearer understanding of morale, trust, and engagement by hearing employees speak directly rather than reading sanitized text.

For market research and brand perception, video feedback is used to test messaging, advertising concepts, and brand positioning. Participants might be asked how a message made them feel, what stood out, or whether a brand aligns with their values. Video captures instinctive reactions and emotional resonance, which are central to brand research but difficult to measure through text alone. This makes video particularly effective for early-stage concept testing and qualitative brand studies.

B2B vs B2C Video Feedback Requirements

While the core mechanics of video feedback platforms remain consistent, B2B and B2C use cases impose different requirements. In B2B contexts, responses are typically longer and more detailed, as participants are domain experts discussing workflows, decision criteria, or operational challenges. Moderation and review processes tend to be more rigorous, with an emphasis on tagging, categorization, and sharing insights across multiple stakeholders. Compliance expectations are also higher, particularly in regulated industries, requiring stricter access control, data retention policies, and auditability.

B2C video feedback places greater emphasis on ease of use, brevity, and incentives. Response lengths are usually shorter, and friction must be minimized to maintain participation. Incentives such as vouchers or rewards play a larger role, and platforms must support high volumes of responses with lightweight moderation. Reporting in B2C scenarios often focuses on patterns and trends rather than individual deep dives, requiring strong aggregation and summarization capabilities.

Understanding these differences is essential when designing or building a video feedback platform. A system optimized for enterprise B2B research will differ significantly from one designed for high-volume consumer feedback, even though both rely on the same foundational concept of video-driven insight.

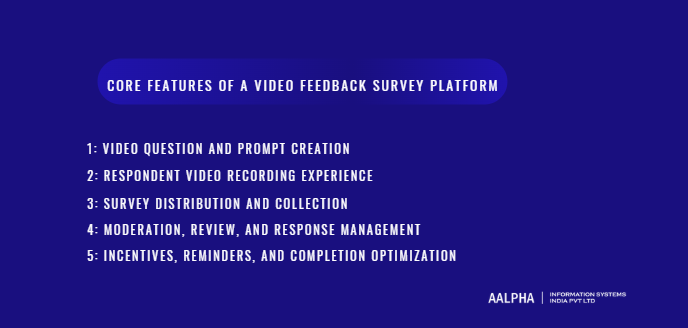

Core Features of a Video Feedback Survey Platform

Video Question and Prompt Creation

Video feedback quality is determined largely by how questions and prompts are designed. A strong platform must support both structured and open-ended prompts, as each serves a different research purpose. Structured prompts are typically used when teams want consistency across responses, such as asking users to explain why they chose a particular option or how they felt about a specific interaction. These prompts reduce ambiguity and make analysis easier across large datasets. Open-ended prompts, by contrast, are designed to encourage free expression and storytelling, allowing respondents to surface issues or insights that researchers may not have anticipated.

Advanced platforms increasingly support AI-generated follow-up questions that adapt in real time based on a respondent’s previous answer. For example, if a user mentions confusion during onboarding, the system can automatically ask a follow-up question to explore which step caused friction. This creates a semi-conversational experience that approximates a human interview while remaining asynchronous and scalable. Branching logic plays a critical role here, enabling different question paths based on responses, demographics, or behavior. When implemented correctly, branching reduces irrelevant questions and keeps respondents engaged.
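A minimal sketch of how a branching question graph might choose the next prompt, assuming each question holds an ordered list of rules that are evaluated against the transcript of the previous answer. The names `Question`, `Branch`, `next_question`, and `mentions` are hypothetical, and the keyword predicate is only a stand-in for the AI classifier that would drive follow-ups in a real platform.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Branch:
    # Predicate over the previous answer's transcript (hypothetical rule format).
    condition: Callable[[str], bool]
    next_question_id: str


@dataclass
class Question:
    qid: str
    prompt: str
    branches: List[Branch] = field(default_factory=list)
    default_next: Optional[str] = None


def mentions(*keywords: str) -> Callable[[str], bool]:
    """Case-insensitive keyword match; a placeholder for an AI intent classifier."""
    lowered = [k.lower() for k in keywords]
    return lambda text: any(k in text.lower() for k in lowered)


def next_question(question: Question, transcript: str) -> Optional[str]:
    """Return the next question id: the first matching branch wins, else the default path."""
    for branch in question.branches:
        if branch.condition(transcript):
            return branch.next_question_id
    return question.default_next


first_week = Question(
    qid="q1",
    prompt="Tell us about your first week with the product.",
    branches=[Branch(mentions("confusing", "hard to get started"), "q1_onboarding_followup")],
    default_next="q2",
)
print(next_question(first_week, "Setup was confusing at first"))  # q1_onboarding_followup
print(next_question(first_week, "It went smoothly"))  # q2
```

Evaluating branches in a fixed order keeps routing deterministic, so a given survey version always sends the same answer down the same path.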

Prompt design has a direct impact on response quality. Clear, concise prompts encourage focused answers, while overly broad or leading questions often result in unfocused responses. Video platforms must therefore provide guidance, examples, or templates that help researchers frame questions effectively. The ability to preview prompts from a respondent’s perspective is also essential, ensuring that the survey experience feels natural rather than interrogative.

Respondent Video Recording Experience

The respondent recording experience is the most critical factor influencing completion rates and response authenticity. Video recording must work seamlessly across modern browsers without requiring plugins or downloads. Browser-based recording using device cameras allows respondents to participate instantly, reducing friction and drop-off. Mobile support is equally essential, since many respondents prefer to record on their phones, where the camera and microphone are always at hand.

Time limits are a subtle but powerful design element. Short limits encourage concise, focused answers, while optional extended limits allow deeper explanations when needed. Platforms should also allow respondents to retry recordings, which reduces anxiety and improves confidence, especially for users unfamiliar with video feedback. At the same time, retry limits help prevent overproduction and maintain authenticity.
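The interplay between time limits and retry limits can be captured in a small per-respondent policy object. This is a sketch under assumed values: the 90-second default, 180-second extended limit, and two retries are illustrative placeholders, and `RecordingPolicy` and `RecordingSession` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RecordingPolicy:
    max_seconds: int = 90            # short default limit encourages focused answers
    extended_max_seconds: int = 180  # optional longer limit for deeper explanations
    max_retries: int = 2             # re-records allowed after the first take


@dataclass
class RecordingSession:
    policy: RecordingPolicy
    allow_extended: bool = False
    takes: int = 0

    def time_limit(self) -> int:
        return self.policy.extended_max_seconds if self.allow_extended else self.policy.max_seconds

    def can_record(self) -> bool:
        # One initial take plus the permitted number of retries.
        return self.takes < 1 + self.policy.max_retries

    def start_take(self) -> int:
        """Begin a new take and return its time limit in seconds."""
        if not self.can_record():
            raise RuntimeError("retry limit reached; submit an existing take")
        self.takes += 1
        return self.time_limit()


session = RecordingSession(RecordingPolicy())
limits = [session.start_take() for _ in range(3)]
print(limits, session.can_record())  # [90, 90, 90] False
```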

Accessibility is another core consideration. Captions, clear instructions, and compatibility with assistive technologies ensure broader participation. Upload optimization is equally important, particularly for users on slower connections. Techniques such as background uploads, compression, and progress indicators significantly improve the experience by reducing perceived wait times. Every element of the recording flow should be designed to minimize cognitive and technical friction, making video feedback feel easier than typing a long response.

Survey Distribution and Collection

Effective distribution is what turns a well-designed survey into a meaningful dataset. Video feedback platforms must support multiple distribution channels to match how organizations interact with users. Shareable links are the most common method, allowing surveys to be embedded in emails, messages, or documents. Email distribution remains essential for formal research and customer feedback, requiring features such as personalized invites, scheduling, and tracking.

In-app embeds enable contextual feedback collection, such as asking users to record a response immediately after completing a task or encountering an issue. QR codes are particularly useful in physical environments like retail locations, events, or training sessions, where users can scan and respond on their mobile devices. Platforms must also support both anonymous and authenticated responses. Anonymous surveys encourage honesty in sensitive contexts, while authenticated responses enable longitudinal analysis and follow-ups. Balancing these options allows organizations to tailor data collection to their specific research goals and trust requirements.
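One way to support several channels and both response modes from a single link format is sketched below, assuming a hypothetical host `surveys.example.com` and query parameters `s`, `ch`, and `t`. Anonymous links carry only the survey and channel; authenticated links carry an opaque random token that maps to the respondent on the server, so the respondent's identifier never appears in the URL. The same anonymous link could be encoded into a QR code.

```python
import secrets
from typing import Dict, Optional
from urllib.parse import parse_qs, urlencode, urlparse

BASE_URL = "https://surveys.example.com/r"  # hypothetical respondent-facing host
CHANNELS = {"link", "email", "in_app", "qr"}


def build_invite_link(survey_id: str, channel: str, token_store: Dict[str, str],
                      respondent_id: Optional[str] = None) -> str:
    """Build a channel-tagged survey link; authenticated invites carry an opaque token."""
    if channel not in CHANNELS:
        raise ValueError(f"unknown channel: {channel}")
    params = {"s": survey_id, "ch": channel}
    if respondent_id is not None:
        # The token maps to the respondent server-side, so the URL never exposes the id.
        token = secrets.token_urlsafe(16)
        token_store[token] = respondent_id
        params["t"] = token
    return f"{BASE_URL}?{urlencode(params)}"


tokens: Dict[str, str] = {}  # in production, a persistent server-side store
anonymous = build_invite_link("srv-42", "qr", tokens)
personal = build_invite_link("srv-42", "email", tokens, respondent_id="user-7")
print(anonymous)  # https://surveys.example.com/r?s=srv-42&ch=qr
token = parse_qs(urlparse(personal).query)["t"][0]
print(tokens[token])  # user-7
```

Tagging every link with its channel also makes it possible to compare completion rates by channel later.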

Moderation, Review, and Response Management

As video responses accumulate, moderation and management tools become essential for maintaining signal quality. A centralized dashboard allows researchers to review incoming responses, monitor participation, and track completion rates in real time. Tagging and labeling systems enable teams to categorize responses by theme, sentiment, or priority, creating structure within large qualitative datasets.

Filtering tools allow reviewers to focus on specific segments, such as responses from a particular user group or those flagged by AI for strong sentiment. Review queues help teams manage workflows by assigning responses for moderation, analysis, or escalation. Internal collaboration features, such as comments or shared annotations, allow multiple stakeholders to discuss insights directly within the platform. These capabilities transform raw video responses into an organized, actionable knowledge base rather than an unmanageable media archive.
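Filtering and review queues can be sketched in a few lines. The example assumes each response carries a segment label, a set of tags, and a boolean flag set by an upstream AI step; the round-robin assignment is one simple distribution strategy among many. `ResponseRecord`, `filter_responses`, and `assign_round_robin` are hypothetical names.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Dict, List, Optional, Set


@dataclass
class ResponseRecord:
    response_id: str
    segment: str                      # e.g. customer tier or user group
    tags: Set[str] = field(default_factory=set)
    strong_sentiment: bool = False    # set when AI flags strong sentiment


def filter_responses(responses: List[ResponseRecord], segment: Optional[str] = None,
                     tag: Optional[str] = None, flagged_only: bool = False) -> List[ResponseRecord]:
    """Keep responses matching every filter that was supplied."""
    return [
        r for r in responses
        if (segment is None or r.segment == segment)
        and (tag is None or tag in r.tags)
        and (not flagged_only or r.strong_sentiment)
    ]


def assign_round_robin(responses: List[ResponseRecord], reviewers: List[str]) -> Dict[str, List[str]]:
    """Spread responses across reviewers in turn; returns reviewer -> queued response ids."""
    queues: Dict[str, List[str]] = {name: [] for name in reviewers}
    for reviewer, response in zip(cycle(reviewers), responses):
        queues[reviewer].append(response.response_id)
    return queues


inbox = [
    ResponseRecord("r1", "enterprise", {"onboarding"}, strong_sentiment=True),
    ResponseRecord("r2", "smb", {"pricing"}),
    ResponseRecord("r3", "enterprise", {"onboarding"}),
    ResponseRecord("r4", "enterprise", {"pricing"}, strong_sentiment=True),
]
enterprise = filter_responses(inbox, segment="enterprise")
print(assign_round_robin(enterprise, ["ana", "ben"]))  # {'ana': ['r1', 'r4'], 'ben': ['r3']}
```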

Incentives, Reminders, and Completion Optimization

Completion optimization requires a combination of behavioral design and operational tooling. Gentle nudges, such as progress indicators or encouraging copy, help respondents understand the effort required and stay engaged. Automated reminders play a crucial role in improving completion rates, especially for surveys distributed via email. Timing and frequency must be carefully calibrated to avoid annoyance while maintaining visibility.
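Calibrating reminder timing and frequency usually comes down to a cap on the number of reminders, a minimum gap between them, and a hard stop once the respondent completes. A minimal sketch, assuming a two-reminder cap and a two-day gap as placeholder values; `reminder_schedule` and `next_reminder_due` are hypothetical names.

```python
from datetime import datetime, timedelta
from typing import List, Optional


def reminder_schedule(invited_at: datetime, deadline: datetime, max_reminders: int = 2,
                      min_gap: timedelta = timedelta(days=2)) -> List[datetime]:
    """Reminder times spaced min_gap apart, capped in number and kept strictly before the deadline."""
    times: List[datetime] = []
    next_time = invited_at + min_gap
    while len(times) < max_reminders and next_time < deadline:
        times.append(next_time)
        next_time += min_gap
    return times


def next_reminder_due(schedule: List[datetime], now: datetime, completed: bool,
                      sent_count: int) -> Optional[datetime]:
    """Return the next unsent reminder if its time has come; completed respondents get none."""
    if completed or sent_count >= len(schedule):
        return None
    candidate = schedule[sent_count]
    return candidate if candidate <= now else None


schedule = reminder_schedule(datetime(2024, 3, 1, 9, 0), deadline=datetime(2024, 3, 10))
print([t.isoformat() for t in schedule])  # ['2024-03-03T09:00:00', '2024-03-05T09:00:00']
```

Returning at most one due reminder per check avoids sending a burst of overdue messages if the scheduler was paused.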

Incentives are often used to motivate participation, particularly in B2C research. These may include gift cards, discounts, or access to exclusive content. Platforms must support incentive tracking and fulfillment without complicating the respondent experience. Compared to text surveys, video surveys often achieve higher engagement when incentives are aligned with effort expectations. Respondents are more willing to participate when they feel their input is valued and their time respected. Optimizing these elements ensures that video feedback remains scalable without sacrificing response quality.

User Roles and Platform Workflows

Admin and Researcher Workflows

Admin and researcher workflows determine whether a video feedback platform becomes a strategic research asset or a fragmented tool used inconsistently across teams. The workflow begins with survey creation, where researchers define objectives, select target audiences, and configure video prompts. This stage typically involves choosing between structured or exploratory questions, setting response limits, enabling branching logic, and deciding whether AI-generated follow-ups are allowed. A well-designed platform guides researchers through these decisions without requiring deep technical expertise, while still offering flexibility for advanced research scenarios.

Permissions and access control are central to governance, particularly in larger organizations. Admins must be able to define who can create surveys, edit prompts, view raw video responses, apply tags, or export insights. Role-based access ensures sensitive data is only visible to authorized users and supports compliance with internal policies and external regulations. In regulated environments, audit logs and activity tracking are often required to document who accessed or modified data and when.
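Role-based access and audit logging fit naturally into a single authorization check, so that every decision, allowed or denied, leaves a trace. The roles and action names below are hypothetical examples rather than a standard set, and the in-memory list stands in for an append-only audit store.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role model; each organization defines its own roles and actions.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "admin": {"create_survey", "edit_prompt", "view_raw_video", "tag", "export", "view_summary", "manage_roles"},
    "researcher": {"create_survey", "edit_prompt", "view_raw_video", "tag", "export", "view_summary"},
    "viewer": {"view_summary"},
}

# In production this would be an append-only store, not an in-memory list.
AUDIT_LOG: List[dict] = []


def authorize(user: str, role: str, action: str, resource: str) -> bool:
    """Check an action against the user's role and record the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed


print(authorize("dana", "viewer", "view_raw_video", "resp-17"))  # False
print(authorize("lee", "researcher", "tag", "resp-17"))  # True
```

Unknown roles fall back to an empty permission set, so access is denied by default.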

Collaboration is another critical aspect of researcher workflows. Multiple team members often contribute to the same research project, requiring shared workspaces, commenting, and version control for prompts and tags. As responses are collected, researchers review videos, apply thematic tags, and flag notable insights. AI-assisted tools may suggest themes or sentiment, but human validation remains essential for accuracy and context. Once analysis is complete, insights must be exported in formats suitable for different audiences, such as summaries, highlight clips, or structured reports. An effective workflow reduces manual overhead while preserving analytical rigor, enabling teams to run continuous research rather than one-off studies.

Respondent Experience and Journey

The respondent experience directly influences participation rates, response quality, and trust in the platform. The journey begins with the invitation, which must clearly explain why the respondent is being asked for feedback, how long it will take, and how their video will be used. Ambiguity at this stage often leads to drop-off or low-quality responses, particularly when video is involved.

Upon accessing the survey, respondents should encounter a clean, distraction-free interface that sets expectations upfront. Clear instructions, visible time estimates, and reassurance about privacy help reduce anxiety associated with recording oneself. The recording process itself should be intuitive, with browser-based or mobile-native capture that requires no setup or downloads. Features such as test recordings, re-record options, and visible progress indicators increase confidence and reduce friction, especially for first-time users.

Privacy and trust signals are essential throughout the journey. Respondents need to know whether their responses are anonymous, who will view them, and how long the data will be stored. Explicit consent mechanisms and transparent messaging build credibility and encourage honesty. After submission, a confirmation step reassures respondents that their input was successfully received and, where applicable, provides information about incentives or next steps. A respectful, well-designed journey signals that the respondent’s time and perspective are valued, which consistently leads to more thoughtful and authentic feedback.

Stakeholder and Viewer Access

Not all users of a video feedback platform are involved in research execution. Product managers, marketers, founders, and external clients typically engage with insights rather than raw data. For these stakeholders, the platform must translate qualitative depth into accessible, decision-ready outputs. This requires dedicated viewing experiences that surface key themes, trends, and representative examples without overwhelming users with hours of video.

Dashboards designed for stakeholders often emphasize summaries, sentiment distribution, and recurring topics, supported by short highlight clips that illustrate findings. Instead of reviewing every response, stakeholders can quickly understand what users are saying and why it matters. Search and filtering tools allow deeper exploration when needed, enabling viewers to drill into specific segments, questions, or themes without navigating the entire dataset.

Reporting and sharing capabilities are also critical at this level. Stakeholders may need to export insights for presentations, strategy documents, or executive reviews. Platforms that support shareable links, curated playlists, or pre-built summaries make it easier to disseminate findings across the organization. By separating analytical workflows from consumption experiences, a video feedback platform ensures that insights are widely accessible and actionable, transforming qualitative research from a specialist function into a shared organizational capability.

System Architecture and Technical Design

High-Level Architecture Overview

A video feedback survey platform should be architected as a modular system where survey logic, media handling, analytics, and AI processing are clearly separated but tightly coordinated. At a high level, the system consists of five primary layers: frontend applications, backend services, media infrastructure, analytics services, and an asynchronous AI processing pipeline.

The frontend layer typically includes two experiences. The respondent-facing interface handles question rendering, branching logic, video recording, upload progress, and submission confirmation. This interface must operate smoothly across browsers and mobile devices, managing camera and microphone permissions and handling recording without requiring plugins or downloads. The internal console is used by researchers and administrators to build surveys, distribute them, review responses, tag insights, and export findings.

The backend layer acts as the system’s control plane. It manages user identity, survey definitions, permissions, response metadata, and workflow orchestration. Importantly, the backend should not act as a proxy for large media uploads. Instead, it issues time-limited upload credentials so clients can upload video directly to the media storage layer. This design dramatically reduces backend load and improves reliability.
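The idea of time-limited upload credentials can be illustrated with an HMAC-signed token that binds a response, an object key, and an expiry time. This is a generic sketch, not any storage provider's API: managed object stores typically offer their own presigned or signed URL mechanism, which a production system would use instead. `SIGNING_KEY`, `issue_upload_token`, and `verify_upload_token` are hypothetical, and the `|` delimiter assumes ids and keys never contain that character.

```python
import hashlib
import hmac
import time
from typing import Optional, Tuple

SIGNING_KEY = b"load-this-from-a-secret-manager"  # hypothetical; never hard-code in practice


def _sign(payload: str) -> str:
    return hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_upload_token(response_id: str, object_key: str, ttl_seconds: int = 900,
                       now: Optional[float] = None) -> str:
    """Grant permission to upload one object until an expiry time (seconds since epoch)."""
    expires = int((time.time() if now is None else now) + ttl_seconds)
    payload = f"{response_id}|{object_key}|{expires}"
    return f"{payload}|{_sign(payload)}"


def verify_upload_token(token: str, now: Optional[float] = None) -> Optional[Tuple[str, str]]:
    """Return (response_id, object_key) if the token is authentic and unexpired, else None."""
    parts = token.split("|")
    if len(parts) != 4:
        return None
    response_id, object_key, expires, signature = parts
    # Constant-time comparison avoids leaking signature prefixes through timing.
    if not hmac.compare_digest(signature, _sign(f"{response_id}|{object_key}|{expires}")):
        return None
    if (time.time() if now is None else now) > int(expires):
        return None
    return response_id, object_key


token = issue_upload_token("resp-1", "raw/resp-1.webm", ttl_seconds=600, now=1_000_000)
print(verify_upload_token(token, now=1_000_100))  # ('resp-1', 'raw/resp-1.webm')
print(verify_upload_token(token, now=1_000_601))  # None
```

Because the backend only signs a short string, upload bandwidth flows between client and storage without touching application servers.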

Media services handle validation, encoding, thumbnail generation, and playback preparation. These processes are asynchronous and event-driven. The analytics layer captures behavioral signals such as completion rates, retries, drop-offs, and engagement metrics. Finally, the AI processing pipeline consumes transcripts and metadata to generate sentiment, themes, summaries, and searchable insight layers. This pipeline must be decoupled from the core workflow so that feedback remains usable even if AI processing is delayed.

Video Capture, Encoding, and Storage

Video capture begins on the client, where the platform requests camera and microphone access and records the media stream locally. For asynchronous survey responses, recording to a local buffer and uploading the completed file is usually the most stable and predictable approach. This avoids the complexity of live streaming infrastructure and simplifies error handling and retries.

Compression decisions directly affect user experience, cost, and downstream processing. Recording in a reasonably compressed format on the client reduces upload time and storage usage, but formats must be chosen carefully to ensure broad playback compatibility. Many platforms adopt a hybrid approach by recording in a standard format and then transcoding server-side into normalized playback renditions. These renditions support smooth playback across devices and network conditions. Some teams also add enhancement steps, such as a video upscaler for low-quality uploads, before responses reach researchers, but every extra step adds processing cost and leaves the spoken content, which drives most analysis, unchanged.
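Server-side transcoding usually starts by deciding which renditions to produce for a given upload. The sketch below assumes a placeholder ladder of short-side heights and never produces a rendition larger than the source; it also keeps portrait phone recordings in portrait orientation. `LADDER`, `scaled_dims`, and `plan_renditions` are hypothetical names, and the actual encoding would be handled by a separate transcoder.

```python
from typing import List, Tuple

# Placeholder ladder of short-side heights; real ladders are tuned per platform.
LADDER = [1080, 720, 480, 360]


def scaled_dims(width: int, height: int, target_short: int) -> Tuple[int, int]:
    """Scale so the shorter side equals target_short, preserving aspect ratio."""
    short, long_side = min(width, height), max(width, height)
    # Round the long side to an even number, as 4:2:0 chroma-subsampled encoders expect.
    new_long = round(long_side * target_short / short / 2) * 2
    return (new_long, target_short) if width >= height else (target_short, new_long)


def plan_renditions(width: int, height: int) -> List[Tuple[int, int]]:
    """Choose ladder rungs no larger than the source; fall back to the source size itself."""
    short = min(width, height)
    rungs = [s for s in LADDER if s <= short] or [short - short % 2]
    return [scaled_dims(width, height, s) for s in rungs]


print(plan_renditions(1080, 1920))  # portrait phone capture
# [(1080, 1920), (720, 1280), (480, 854), (360, 640)]
```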

Streaming is rarely required for survey use cases. Direct upload after recording provides better resilience, especially for users on unstable connections. Chunked uploads and resumable transfers are particularly important for mobile users. On the storage side, object storage is the natural choice for raw uploads, transcoded renditions, and thumbnails. Cost control is achieved through lifecycle rules, duration limits, automatic cleanup of intermediate files, and clear retention policies aligned with compliance requirements.
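Chunked, resumable uploads rest on a simple idea: split the file into byte ranges and, after an interruption, resend only the ranges the server has not acknowledged. A minimal sketch, assuming a placeholder 5 MiB chunk size; `Chunk`, `plan_chunks`, and `remaining_chunks` are hypothetical, and the transfer itself (HTTP requests, acknowledgements) is out of scope.

```python
from dataclasses import dataclass
from typing import List, Set

CHUNK_SIZE = 5 * 1024 * 1024  # placeholder 5 MiB; tune to the storage provider's part-size rules


@dataclass(frozen=True)
class Chunk:
    index: int
    start: int  # inclusive byte offset
    end: int    # exclusive byte offset


def plan_chunks(total_bytes: int, chunk_size: int = CHUNK_SIZE) -> List[Chunk]:
    """Split a recorded file into fixed-size byte ranges; the last chunk may be shorter."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        Chunk(i, start, min(start + chunk_size, total_bytes))
        for i, start in enumerate(range(0, total_bytes, chunk_size))
    ]


def remaining_chunks(plan: List[Chunk], acknowledged: Set[int]) -> List[Chunk]:
    """After a dropped connection, resend only the chunks the server has not acknowledged."""
    return [c for c in plan if c.index not in acknowledged]


plan = plan_chunks(12 * 1024 * 1024)
print([(c.start, c.end) for c in plan])
print([c.index for c in remaining_chunks(plan, acknowledged={0})])  # [1, 2]
```

On a mobile connection that drops after the first chunk, only the remaining two chunks are retransmitted rather than the whole recording.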

Effective platforms treat video storage and encoding costs as a predictable unit cost tied to response volume, rather than an uncontrolled infrastructure expense.

Backend Services and Data Models

A robust backend starts with explicit domain modeling. Surveys are versioned entities containing metadata, delivery rules, permissions, and a question graph that supports branching logic. Versioning is essential because prompt changes during an active study must not invalidate earlier responses.

Response objects store references to answers rather than embedding large payloads directly. For video responses, the backend stores pointers to media assets along with duration, size, encoding status, transcript availability, and processing state. Metadata such as timestamps, device type, and submission context supports analytics and debugging without exposing sensitive content unnecessarily.

Tagging and thematic classification should be modeled as structured entities rather than ad hoc labels. This allows consistent aggregation across studies and teams. Internal comments and annotations should be treated separately from tags, since they represent discussion rather than classification.
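The domain model described above can be sketched with plain dataclasses. This is an illustrative shape under stated assumptions, not a prescribed schema: survey versions are immutable and edits produce a new version, responses pin the version they answered, video answers store a storage pointer plus processing state, and tags and comments are separate entities. All class and field names are hypothetical.

```python
from dataclasses import dataclass, field, replace
from enum import Enum
from typing import Dict, List, Optional


class ProcessingState(Enum):
    UPLOADED = "uploaded"
    ENCODED = "encoded"
    TRANSCRIBED = "transcribed"
    FAILED = "failed"


@dataclass(frozen=True)
class SurveyVersion:
    survey_id: str
    version: int
    question_graph: Dict[str, List[str]]  # question id -> possible next question ids


def publish_revision(current: SurveyVersion, graph: Dict[str, List[str]]) -> SurveyVersion:
    """Edits create a new version instead of mutating the one earlier responses point to."""
    return replace(current, version=current.version + 1, question_graph=graph)


@dataclass
class VideoAnswer:
    question_id: str
    media_key: str               # pointer into object storage, never the video bytes
    duration_seconds: float
    size_bytes: int
    state: ProcessingState = ProcessingState.UPLOADED
    transcript_id: Optional[str] = None


@dataclass
class Response:
    response_id: str
    survey_id: str
    survey_version: int          # pins the response to the version it answered
    answers: List[VideoAnswer] = field(default_factory=list)
    metadata: Dict[str, str] = field(default_factory=dict)  # device type, channel, timestamps


@dataclass(frozen=True)
class Tag:
    """Shared taxonomy entry, reusable across studies so themes aggregate consistently."""
    tag_id: str
    name: str


@dataclass
class Comment:
    """Reviewer discussion, kept separate from classification tags."""
    response_id: str
    author: str
    body: str


v1 = SurveyVersion("srv-42", 1, {"q1": ["q2"], "q2": []})
v2 = publish_revision(v1, {"q1": ["q1b", "q2"], "q1b": ["q2"], "q2": []})
answer = VideoAnswer("q1", "raw/resp-1/q1.webm", duration_seconds=42.5, size_bytes=3_100_000)
response = Response("resp-1", "srv-42", survey_version=v1.version, answers=[answer])
```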

Database design is typically hybrid. Relational databases are well-suited for users, permissions, audit logs, and survey-response relationships that require strong consistency. Document stores or search indexes are often used for transcripts, AI outputs, and flexible metadata that evolves over time. This separation allows analytical capabilities to expand without destabilizing the core system of record.

Scalability, Performance, and Reliability

Scalability challenges in video feedback platforms are driven primarily by media concurrency. When surveys are distributed to large audiences, many respondents may record and upload videos simultaneously. Direct-to-storage uploads prevent application servers from becoming bottlenecks and allow the system to scale horizontally with minimal coordination.

Media processing must be fully asynchronous. Once a video upload completes, the platform emits an event that triggers encoding, thumbnail generation, and transcription. The respondent experience should not depend on these processes completing immediately. Responses should appear in the system as soon as the upload is confirmed, with enrichment added progressively.
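The event flow described above can be simulated in-process to show the key property: a response is visible as soon as the upload is confirmed, and enrichment jobs fill in later, with failures recorded rather than blocking access. The deque stands in for a message queue, the handlers return placeholder results, and `transcribe` deliberately raises to simulate an outage; all names are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Dict, Tuple

# Stand-ins for a message queue and the response store.
EVENTS: Deque[Tuple[str, str]] = deque()
RESPONSES: Dict[str, dict] = {}


def encode(response_id: str) -> dict:
    return {"renditions": ["720p", "360p"]}  # placeholder result


def thumbnail(response_id: str) -> dict:
    return {"thumbnail_key": f"thumbs/{response_id}.jpg"}  # placeholder result


def transcribe(response_id: str) -> dict:
    raise RuntimeError("speech-to-text service unavailable")  # simulates an outage


HANDLERS: Dict[str, Callable[[str], dict]] = {
    "encode": encode, "thumbnail": thumbnail, "transcribe": transcribe,
}


def on_upload_confirmed(response_id: str, media_key: str) -> None:
    """Make the response visible immediately, then queue enrichment jobs."""
    RESPONSES[response_id] = {"media_key": media_key, "enrichment": {}}
    for job in HANDLERS:
        EVENTS.append((job, response_id))


def run_worker() -> None:
    """Drain the queue; a failed job is recorded without affecting the raw response."""
    while EVENTS:
        job, response_id = EVENTS.popleft()
        try:
            result = HANDLERS[job](response_id)
        except Exception as exc:  # real workers would retry with backoff
            result = {"error": str(exc)}
        RESPONSES[response_id]["enrichment"][job] = result


on_upload_confirmed("resp-1", "raw/resp-1.webm")
print(RESPONSES["resp-1"]["enrichment"])  # prints {}; the response is already listed
run_worker()
print(sorted(RESPONSES["resp-1"]["enrichment"]))  # ['encode', 'thumbnail', 'transcribe']
```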

Global delivery is handled through geographically distributed storage and content delivery networks, ensuring fast playback regardless of viewer location. Reliability comes from idempotent operations and resumable workflows. Uploads must tolerate retries without creating duplicate records, and processing jobs must be safe to rerun. AI failures should never block access to raw responses. A resilient system treats AI as an enhancement layer, not a hard dependency.
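Idempotency for uploads and processing jobs can be sketched with two guards: an idempotency key supplied by the client (for example, one per recording) that maps retries onto the same record, and a completed-job set that skips reruns. This in-memory `ResponseStore` is hypothetical; a real system would enforce key uniqueness with a database constraint.

```python
import uuid
from typing import Callable, Dict, Set


class ResponseStore:
    """In-memory sketch; a real store would enforce uniqueness with a database constraint."""

    def __init__(self) -> None:
        self._by_key: Dict[str, str] = {}  # idempotency key -> response id
        self.records: Dict[str, dict] = {}
        self._completed_jobs: Set[str] = set()

    def register_upload(self, idempotency_key: str, media_key: str) -> str:
        """Retries with the same key return the existing record instead of creating a duplicate."""
        existing = self._by_key.get(idempotency_key)
        if existing is not None:
            return existing
        response_id = str(uuid.uuid4())
        self.records[response_id] = {"media_key": media_key}
        self._by_key[idempotency_key] = response_id
        return response_id

    def run_job_once(self, job_id: str, job: Callable[[], None]) -> bool:
        """Skip jobs already marked complete. A crash between job() and marking means a rerun,
        so the job itself must still be safe to repeat (e.g. overwrite outputs, never append)."""
        if job_id in self._completed_jobs:
            return False
        job()
        self._completed_jobs.add(job_id)
        return True


store = ResponseStore()
first = store.register_upload("client-key-123", "raw/a.webm")
retry = store.register_upload("client-key-123", "raw/a.webm")
print(first == retry, len(store.records))  # True 1
```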

Security, Authentication, and Access Control

Video feedback platforms handle sensitive, often personally identifiable data, making security a foundational requirement rather than an optional feature. All data must be encrypted in transit, and encryption at rest should be applied to both structured records and media assets. Authentication mechanisms should support both internal users and external respondents without exposing unnecessary system access.

Role-based access control is the standard approach for managing permissions. Different roles define who can create surveys, view raw videos, apply tags, export data, or administer settings. Media assets should be private by default, with access granted only through authenticated sessions or time-limited access tokens.

Secure media access is critical. Videos should never be publicly accessible unless explicitly intended. Temporary access links limit exposure and reduce risk if URLs are shared unintentionally. Audit logs provide accountability by recording access to sensitive data, permission changes, exports, and deletions. Together, encryption, strict access control, and comprehensive auditability create a security posture that supports enterprise adoption while preserving the agility required for continuous research.

AI and Analytics: Turning Video into Insights

Video Transcription and Speech-to-Text

Video transcription is the foundation of any analytics layer in a video feedback platform. Without reliable speech-to-text, downstream analysis such as sentiment detection, topic clustering, and search becomes fragile or misleading. The primary goal of transcription in this context is not perfect linguistic accuracy, but consistent, context-aware conversion of spoken responses into usable text aligned with the original video.

Transcription accuracy depends on several factors, including audio quality, background noise, speaker clarity, and language support. Survey responses are often recorded in uncontrolled environments, which makes noise handling and speaker normalization critical. Platforms must be able to handle accents, informal speech, pauses, and filler words without distorting meaning. Multilingual support is increasingly important as organizations collect feedback across regions. This includes not only major languages but also regional variations and mixed-language speech, where respondents naturally switch between languages within the same response.

Speaker diarization plays a smaller but still relevant role in survey contexts. While most responses involve a single speaker, diarization becomes useful in employee or group feedback scenarios where more than one person may appear in a video. Timestamp mapping is essential across all use cases. Each transcript segment should be aligned to precise timecodes in the video, enabling clickable text playback, quote extraction, and clip generation. Accurate timestamps allow analysts to move seamlessly between text and video, preserving the richness of the original response while enabling scalable analysis.

A well-designed transcription pipeline treats text as a structured artifact rather than a static paragraph. Sentences, pauses, and emphasis markers become analytical inputs, not just readable output.
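Treating a transcript as timestamped segments makes click-to-seek, quote clipping, and pause detection straightforward. A minimal sketch, assuming the speech-to-text step already returns sorted segments with start and end times in seconds; `Segment`, `segment_at`, `clip_for_quote`, and `long_pauses` are hypothetical names, and the 1.5-second pause threshold is a placeholder.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Segment:
    start: float  # seconds from the start of the video
    end: float
    text: str


def segment_at(segments: List[Segment], t: float) -> Optional[Segment]:
    """Segment playing at time t (segments sorted by start), for synced highlighting."""
    i = bisect_right([s.start for s in segments], t) - 1
    if i >= 0 and t < segments[i].end:
        return segments[i]
    return None


def clip_for_quote(segments: List[Segment], phrase: str,
                   padding: float = 0.5) -> Optional[Tuple[float, float]]:
    """Padded (start, end) bounds of the first segment containing phrase, for clip generation."""
    for s in segments:
        if phrase.lower() in s.text.lower():
            return max(0.0, s.start - padding), s.end + padding
    return None


def long_pauses(segments: List[Segment], min_gap: float = 1.5) -> List[Tuple[float, float]]:
    """Silences between segments; a possible hesitation signal for analysts, not a conclusion."""
    return [(a.end, b.start) for a, b in zip(segments, segments[1:]) if b.start - a.end >= min_gap]


transcript = [
    Segment(0.0, 4.2, "I liked the dashboard overall."),
    Segment(6.0, 11.5, "But the export step was confusing."),
    Segment(11.8, 15.0, "I gave up after two tries."),
]
print(segment_at(transcript, 7.3).text)           # But the export step was confusing.
print(clip_for_quote(transcript, "export step"))  # (5.5, 12.0)
print(long_pauses(transcript))                    # [(4.2, 6.0)]
```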

Sentiment and Emotion Analysis

Sentiment and emotion analysis adds interpretive depth to raw transcripts by answering a critical question: how does the respondent feel about what they are saying? Unlike text-only sentiment analysis, video feedback platforms can draw signals from multiple modalities, including spoken language, vocal characteristics, and visual cues.

Tone detection focuses on linguistic indicators such as word choice, phrasing, and polarity. This helps classify responses as positive, neutral, or negative. However, tone alone is often insufficient. Vocal stress analysis examines features such as pitch variation, speech rate, and volume changes to infer emotional intensity or uncertainty. A respondent who speaks quickly with rising pitch may convey frustration or urgency, even if their words appear neutral on paper.
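Vocal stress analysis usually works on the audio signal itself (pitch and volume), but speech rate can be approximated from the word timings a transcript already carries. The sketch below, with hypothetical function names and an illustrative threshold, compares each segment against the respondent's own median rate rather than a global norm, since some people simply speak faster than others.

```python
from statistics import median


def words_per_minute(text: str, start: float, end: float) -> float:
    """Speech rate of one timed transcript segment (start/end in seconds)."""
    duration = end - start
    if duration <= 0:
        raise ValueError("segment must have a positive duration")
    return len(text.split()) / duration * 60.0


def fast_segments(segments: list[tuple[float, float, str]], ratio: float = 1.3) -> list[int]:
    """Indices of segments spoken noticeably faster than the speaker's own median rate.

    The 1.3 ratio is an illustrative assumption, not a validated cutoff.
    """
    rates = [words_per_minute(text, start, end) for start, end, text in segments]
    baseline = median(rates)
    return [i for i, rate in enumerate(rates) if rate > baseline * ratio]


response = [
    (0.0, 4.0, "The dashboard looks clean and easy to read"),         # 120 wpm
    (4.0, 8.0, "and the weekly reports are genuinely useful to me"),  # 135 wpm
    (8.0, 10.0, "but the export keeps failing every single time"),    # 240 wpm
]
print(fast_segments(response))  # [2]
```

A flagged segment is only a prompt to look closer at that moment; on its own, faster speech does not establish frustration.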

Facial cues introduce another layer of complexity. Expressions, eye movement, and micro-expressions can signal confusion, hesitation, enthusiasm, or discomfort. While these signals can enhance interpretation, they must be handled carefully. Facial analysis is probabilistic and context-dependent, and it can be affected by lighting, camera quality, and cultural differences in expression.

There are important limitations and ethical considerations in this area. Emotion detection is not mind reading, and platforms must avoid presenting inferred emotions as objective facts. Transparency about how sentiment and emotion scores are generated is essential to prevent misinterpretation. Many organizations choose to use these signals as directional indicators rather than definitive judgments. Ethical implementations emphasize assistive insight rather than automated evaluation of individuals. When applied responsibly, sentiment and emotion analysis helps teams prioritize responses and identify areas of concern without replacing human judgment.
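One way to keep emotion scores directional rather than definitive is to gate them on model confidence and to phrase the output as a tendency. The sketch below assumes a model that returns a sentiment score in [-1, 1] and a confidence in [0, 1]. The function name, the 0.6 confidence floor, and the 0.2 dead zone are illustrative assumptions.

```python
def directional_label(score: float, confidence: float,
                      min_confidence: float = 0.6, dead_zone: float = 0.2) -> str:
    """Map a sentiment score in [-1, 1] and a confidence in [0, 1] to a hedged label.

    Low-confidence outputs are routed to human review instead of being labeled,
    and scores near zero are reported as mixed rather than forced to one side.
    """
    if not (-1.0 <= score <= 1.0 and 0.0 <= confidence <= 1.0):
        raise ValueError("score must be in [-1, 1] and confidence in [0, 1]")
    if confidence < min_confidence:
        return "unclear (needs human review)"
    if score <= -dead_zone:
        return "leans negative"
    if score >= dead_zone:
        return "leans positive"
    return "mixed or neutral"


for score, confidence in [(-0.7, 0.9), (-0.7, 0.4), (0.1, 0.95)]:
    print(score, confidence, "->", directional_label(score, confidence))
```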

-

Topic Extraction and Thematic Clustering

As video feedback scales, the challenge shifts from collection to sense-making. Topic extraction and thematic clustering address this by identifying patterns across large volumes of responses. The objective is to surface recurring themes that reflect what respondents collectively care about, rather than treating each video as an isolated data point.

Topic extraction begins with analyzing transcripts to identify key concepts, phrases, and semantic relationships. Instead of relying solely on keyword frequency, modern approaches compare meaning, typically by representing each phrase as a semantic embedding (a numeric vector) and measuring how close phrases are to one another. For example, responses that mention “confusing setup,” “hard to get started,” and “onboarding took too long” may be grouped under a broader onboarding friction theme even though the wording differs. This semantic grouping is essential for capturing insight at scale.
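Semantic grouping of this kind is commonly implemented by comparing embedding vectors with cosine similarity. The sketch below assumes embeddings already exist; the three-dimensional vectors and theme centroids are hypothetical toy values standing in for the output of a real sentence-embedding model, and `assign_theme` with its 0.8 cutoff is illustrative.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; values near 1.0 indicate vectors pointing the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def assign_theme(vec: list[float], themes: dict[str, list[float]],
                 min_sim: float = 0.8) -> str | None:
    """Return the most similar theme, or None if no theme reaches min_sim."""
    best_name, best_sim = None, min_sim
    for name, centroid in themes.items():
        sim = cosine(vec, centroid)
        if sim >= best_sim:
            best_name, best_sim = name, sim
    return best_name


# Toy vectors standing in for real sentence embeddings.
themes = {
    "onboarding friction": [1.0, 0.0, 0.1],
    "pricing concerns": [0.0, 1.0, 0.0],
}
phrases = {
    "confusing setup": [0.9, 0.1, 0.2],
    "hard to get started": [0.8, 0.0, 0.1],
    "onboarding took too long": [0.95, 0.05, 0.3],
    "too expensive for us": [0.1, 0.9, 0.0],
    "app crashes on export": [0.05, 0.0, 1.0],
}
for phrase, vec in phrases.items():
    print(f"{phrase!r} -> {assign_theme(vec, themes)}")
```

The three differently worded onboarding complaints land on the same theme, while the export crash matches nothing and is left for a new theme or human review.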

Thematic clustering organizes these extracted topics into coherent groups. Each cluster represents a shared idea or concern expressed across multiple responses. Effective clustering balances granularity and clarity. Too few clusters oversimplify the data, while too many create noise. Human oversight remains important, especially in early iterations, to validate that clusters align with real research objectives rather than algorithmic artifacts.
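The granularity trade-off shows up even in a very simple algorithm. The sketch below uses single-pass "leader" clustering, a deliberately simple stand-in for the agglomerative or density-based methods a production system might use, on hypothetical two-dimensional embeddings. The similarity threshold is the knob: raising it yields more, finer clusters.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


def leader_cluster(vectors, threshold):
    """Single-pass leader clustering.

    Each vector joins the first cluster whose leader (first member) is at least
    `threshold` similar; otherwise it starts a new cluster. Returns lists of indices.
    """
    leaders, clusters = [], []
    for i, vec in enumerate(vectors):
        for k, leader in enumerate(leaders):
            if cosine(vec, leader) >= threshold:
                clusters[k].append(i)
                break
        else:  # no sufficiently similar leader: start a new cluster
            leaders.append(vec)
            clusters.append([i])
    return clusters


# Hypothetical 2-D embeddings of five responses.
vectors = [(1.0, 0.0), (0.98, 0.2), (0.7, 0.7), (0.2, 0.98), (0.0, 1.0)]
print(leader_cluster(vectors, threshold=0.5))   # [[0, 1, 2], [3, 4]]
print(leader_cluster(vectors, threshold=0.95))  # [[0, 1], [2], [3, 4]]
```

Leader clustering also depends on input order, which is one more reason its output needs the human validation described above before clusters are treated as themes.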

Once established, themes become a powerful analytical layer. Researchers can quantify how frequently a theme appears, observe how it varies across segments, and track changes over time. Clusters can also be linked back to representative video clips, preserving qualitative richness. This approach allows teams to move from anecdotal feedback to evidence-backed insight without losing the human voice that makes video feedback valuable.
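Quantifying a theme usually means computing the share of responses that mention it within each group. This sketch assumes each response already carries a set of theme labels plus fields such as `segment` or `period`; those field names and the sample data are illustrative.

```python
from collections import Counter, defaultdict


def theme_share(responses: list[dict], group_key: str) -> dict[str, dict[str, float]]:
    """For each group (e.g. segment or period), the fraction of responses mentioning each theme."""
    totals: Counter = Counter()
    hits: defaultdict = defaultdict(Counter)
    for r in responses:
        group = r[group_key]
        totals[group] += 1
        for theme in r["themes"]:
            hits[group][theme] += 1
    return {
        group: {theme: n / totals[group] for theme, n in hits[group].items()}
        for group in totals
    }


responses = [
    {"segment": "enterprise", "period": "Q1", "themes": {"onboarding friction"}},
    {"segment": "enterprise", "period": "Q2", "themes": {"onboarding friction", "pricing"}},
    {"segment": "smb", "period": "Q1", "themes": {"pricing"}},
    {"segment": "smb", "period": "Q2", "themes": {"pricing"}},
]
print(theme_share(responses, "segment"))  # enterprise: onboarding 1.0, pricing 0.5; smb: pricing 1.0
print(theme_share(responses, "period"))   # Q1: both 0.5; Q2: onboarding 0.5, pricing 1.0
```

The same function answers both "how does this vary across segments" and "how is this changing over time", depending on which field is passed as `group_key`.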

-

Automated Summaries and Highlight Reels

Automated summaries bridge the gap between deep qualitative data and executive decision-making. Most stakeholders do not have the time to watch dozens of videos or read long transcripts. They need concise, trustworthy overviews that capture what matters.

Text-based summaries condense large sets of responses into key points, recurring themes, and notable deviations. These summaries often follow a structured format, highlighting what respondents liked, what caused friction, and what suggestions emerged. High-quality summaries preserve nuance by avoiding overgeneralization and by referencing the strength or prevalence of each theme.
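A structured summary with prevalence can be assembled from tagged responses before any language model writes prose around it. In this sketch the (respondent, category, theme) triples and the three category names are assumptions. Each respondent is counted once per theme, so one repetitive speaker cannot inflate a theme's apparent strength.

```python
from collections import Counter


def structured_summary(tagged: list[tuple[str, str, str]], total_respondents: int) -> str:
    """Summarize (respondent_id, category, theme) triples with prevalence per theme."""
    unique = set(tagged)   # count each respondent once per (category, theme)
    counts = Counter((category, theme) for _, category, theme in unique)
    lines = []
    for category in ("liked", "friction", "suggestion"):
        items = sorted(((theme, n) for (cat, theme), n in counts.items() if cat == category),
                       key=lambda item: (-item[1], item[0]))
        lines.append(f"{category.capitalize()}:")
        for theme, n in items:
            pct = round(100 * n / total_respondents)
            lines.append(f"  - {theme}: {n} of {total_respondents} respondents ({pct}%)")
    return "\n".join(lines)


tagged = [
    ("r1", "liked", "clean dashboard"),
    ("r2", "liked", "clean dashboard"),
    ("r1", "friction", "export failures"),
    ("r1", "friction", "export failures"),   # repeated by the same person: counted once
    ("r3", "friction", "export failures"),
    ("r3", "suggestion", "Slack integration"),
]
print(structured_summary(tagged, total_respondents=3))
```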

Highlight reels extend this concept into video form. By leveraging transcript timestamps and thematic tags, platforms can automatically generate short clips that illustrate specific insights. For example, a reel might show several respondents describing the same onboarding issue in their own words. This format is particularly effective for leadership reviews because it combines brevity with emotional impact.
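Generating a reel largely reduces to turning tagged, timestamped transcript segments into clip ranges. The sketch below assumes a simple dictionary schema for segments (hypothetical field names). It pads each segment slightly so clips do not start mid-word, merges ranges that overlap within the same video, and caps clip length. It does not know each video's duration, so the end padding should be clamped by whatever tool cuts the clips.

```python
def clip_ranges(segments: list[dict], theme: str, pad: float = 0.5,
                max_len: float = 20.0) -> list[tuple[str, float, float]]:
    """Turn theme-tagged transcript segments into padded, merged (video_id, start, end) clips."""
    by_video: dict[str, list[tuple[float, float]]] = {}
    for s in segments:
        if theme in s["themes"]:
            by_video.setdefault(s["video_id"], []).append(
                (max(0.0, s["start"] - pad), s["end"] + pad))
    clips = []
    for video_id, ranges in by_video.items():
        ranges.sort()
        merged = [list(ranges[0])]
        for start, end in ranges[1:]:
            if start <= merged[-1][1]:          # overlaps the previous clip: extend it
                merged[-1][1] = max(merged[-1][1], end)
            else:
                merged.append([start, end])
        for start, end in merged:
            clips.append((video_id, start, min(end, start + max_len)))  # keep clips short
    return clips


segments = [
    {"video_id": "v1", "start": 2.0, "end": 6.0, "themes": {"onboarding"}},
    {"video_id": "v1", "start": 6.4, "end": 9.0, "themes": {"onboarding"}},
    {"video_id": "v1", "start": 30.0, "end": 33.0, "themes": {"pricing"}},
    {"video_id": "v2", "start": 0.2, "end": 4.0, "themes": {"onboarding"}},
]
print(clip_ranges(segments, "onboarding"))  # [('v1', 1.5, 9.5), ('v2', 0.0, 4.5)]
```

The resulting tuples could then be handed to a cutting tool such as ffmpeg, for example through its `-ss` and `-to` options, and concatenated into a reel.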

Key quote extraction further supports storytelling. Quotations anchored to video timestamps allow teams to cite authentic user voices in presentations and reports. The goal of automation here is not to replace human synthesis, but to accelerate it. When summaries and reels are generated reliably, researchers can focus their time on interpretation and action rather than manual compilation.

-

Search, Filters, and Insight Exploration

Search and exploration tools determine whether insights remain accessible as datasets grow. A mature video feedback platform treats transcripts, tags, and AI outputs as a searchable knowledge base rather than a static archive.

Natural-language search allows users to ask questions in plain language, such as what users disliked about pricing or how new users felt during onboarding. The system interprets intent and retrieves relevant responses, even when the exact wording differs. This reduces dependence on predefined filters and empowers non-researchers to explore insights independently.
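Natural-language search of this kind is often built as embedding retrieval: the query and every transcript segment are embedded with the same model, and segments are ranked by cosine similarity. In the sketch below the embedding vectors are hypothetical toy values (a real system would obtain them from a sentence-embedding model and usually store them in a vector index), and the `search` function is illustrative.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, index, k=2):
    """Rank indexed transcript segments by similarity to the query; return the top k.

    index: (video_id, start_seconds, text, embedding) tuples. Results keep the
    timestamp so each hit can open the video at the matching moment.
    """
    scored = ((cosine(query_vec, emb), vid, start, text) for vid, start, text, emb in index)
    return heapq.nlargest(k, scored)


index = [
    ("v1", 12.0, "The price jumped after the trial", [0.1, 0.9, 0.1]),
    ("v2", 3.5, "Honestly too expensive for a small team", [0.0, 1.0, 0.2]),
    ("v3", 40.0, "Setup took me an afternoon", [0.9, 0.1, 0.0]),
]
# Hypothetical embedding of "What did users dislike about pricing?"
query = [0.0, 0.95, 0.1]
for score, vid, start, text in search(query, index):
    print(f"{score:.2f} {vid}@{start}s {text}")
```

Neither matching segment contains the word "pricing", which is the point of ranking by meaning rather than by keyword.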

Filters provide structured exploration paths. Common filters include sentiment category, emotion intensity, theme, demographic attributes, survey version, and time range. Combining filters allows teams to isolate specific cohorts, such as dissatisfied enterprise users or first-time customers in a particular region. This capability is especially valuable for comparative analysis and hypothesis testing.
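Combining filters is simplest when every criterion is optional and unset criteria are ignored. The sketch below assumes a hypothetical `Response` record; its field names stand in for whatever sentiment, intensity, theme, demographic, version, and date attributes a given platform stores.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class Response:
    """Hypothetical response record; field names are illustrative."""
    id: str
    sentiment: str              # "negative", "neutral", or "positive"
    intensity: float            # emotion intensity in [0, 1]
    plan: str                   # demographic/firmographic attribute, e.g. "enterprise"
    region: str
    survey_version: int
    submitted: date
    themes: set[str] = field(default_factory=set)


def filter_responses(responses, *, sentiment=None, min_intensity=None, theme=None,
                     plan=None, region=None, survey_version=None,
                     since=None, until=None):
    """Return responses matching every filter that is set; None means 'any'."""
    def keep(r: Response) -> bool:
        return ((sentiment is None or r.sentiment == sentiment)
                and (min_intensity is None or r.intensity >= min_intensity)
                and (theme is None or theme in r.themes)
                and (plan is None or r.plan == plan)
                and (region is None or r.region == region)
                and (survey_version is None or r.survey_version == survey_version)
                and (since is None or r.submitted >= since)
                and (until is None or r.submitted <= until))
    return [r for r in responses if keep(r)]


responses = [
    Response("a", "negative", 0.8, "enterprise", "EU", 2, date(2024, 3, 1), {"pricing"}),
    Response("b", "positive", 0.4, "enterprise", "US", 2, date(2024, 3, 5), {"onboarding"}),
    Response("c", "negative", 0.6, "starter", "EU", 1, date(2024, 2, 20), {"onboarding"}),
]
# "Dissatisfied enterprise users", then "strongly felt onboarding feedback".
print([r.id for r in filter_responses(responses, sentiment="negative", plan="enterprise")])  # ['a']
print([r.id for r in filter_responses(responses, theme="onboarding", min_intensity=0.5)])    # ['c']
```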

Effective exploration tools maintain a tight connection between text and video. Search results should link directly to relevant video moments, not just full recordings. This preserves context and prevents misinterpretation. Over time, the platform evolves into an institutional memory of user insight, where past feedback remains discoverable and relevant. When search and analytics are designed well, video feedback becomes a continuously usable asset rather than a one-time research output.

Monetization and Pricing Models

-

SaaS Pricing Models for Video Feedback Platforms

Monetization strategy plays a direct role in how a video feedback platform is positioned, adopted, and scaled. Unlike text survey tools, video platforms incur variable infrastructure costs tied to media storage and processing, which makes pricing design especially important. The most common SaaS pricing models reflect different buyer priorities and usage patterns.

Seat-based pricing charges customers based on the number of internal users who can create surveys, review responses, or access insights. This model works well in research-driven organizations where access is limited to defined teams. It offers predictable revenue and simple forecasting but can discourage broader adoption across departments if seats become a bottleneck.

Response-based pricing ties cost to the number of video responses collected. This aligns pricing closely with value received, particularly for market research teams running discrete studies. It is intuitive for customers but requires careful calibration to avoid penalizing success. Clear response limits and overage rules help maintain transparency and trust.

Usage-based pricing extends beyond response count to include factors such as video duration, transcription volume, or AI analysis depth. This model reflects actual infrastructure consumption and scales naturally with customer growth. However, it requires strong usage visibility so customers can anticipate costs.

Enterprise licensing typically combines elements of all these approaches into a custom agreement. Enterprises often prioritize predictable billing, compliance assurances, and dedicated support over granular usage optimization, making bundled pricing with negotiated limits a common choice.
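A quick way to see how these models create different incentives is to price the same month of usage three ways. Every price below is an illustrative assumption, not a benchmark; the point is the shape of each formula, including the included-allowance-plus-overage structure that keeps response-based pricing transparent.

```python
def seat_price(seats: int, per_seat: float = 50.0) -> float:
    """Seat-based: pay per internal user, regardless of volume."""
    return seats * per_seat


def response_price(responses: int, base: float = 200.0, included: int = 100,
                   overage: float = 1.5) -> float:
    """Response-based: flat plan with an included allowance plus per-response overage."""
    return base + max(0, responses - included) * overage


def usage_price(video_minutes: float, capture_per_min: float = 0.10,
                ai_per_min: float = 0.15) -> float:
    """Usage-based: charge per recorded minute plus per minute of transcription/AI analysis."""
    return video_minutes * (capture_per_min + ai_per_min)


# Hypothetical month: 5 seats, 300 responses averaging 1.5 minutes (450 minutes).
print(seat_price(5), response_price(300), usage_price(450))  # 250.0 500.0 112.5
```

Under these assumptions the response-based plan charges the most for a successful study unless the allowance and overage rate are calibrated, while the usage-based bill follows infrastructure consumption most closely.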

-

Free Trials, Freemium, and Enterprise Sales

Go-to-market strategy influences how pricing models are applied in practice. Free trials are the most common entry point for self-serve customers, allowing teams to experience the platform’s value before committing. Effective trials are time-bound or usage-bound and focus on guiding users to a clear “aha” moment, such as collecting their first set of video responses and viewing automated insights.

Freemium models offer a permanently free tier with strict limitations, often on response count, video length, or analytics features. This approach can drive awareness and word-of-mouth, particularly among startups and small teams. However, freemium only works when upgrade paths are obvious and compelling. If free tiers are too generous, conversion suffers; if too restrictive, users disengage before seeing value.
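The limits described for trials and free tiers typically end up as a small piece of entitlement configuration plus a check at recording time. This sketch is hypothetical throughout: the tier names, limits, 14-day trial length, and 50-response trial cap are placeholders to be tuned from real conversion data.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Tier:
    max_responses_per_month: int
    max_video_seconds: int
    ai_analytics: bool


# Placeholder limits: a tight free tier with a visible upgrade path.
TIERS = {
    "free": Tier(max_responses_per_month=25, max_video_seconds=60, ai_analytics=False),
    "pro": Tier(max_responses_per_month=1000, max_video_seconds=300, ai_analytics=True),
}


def trial_active(started: date, today: date, responses_used: int,
                 trial_days: int = 14, response_cap: int = 50) -> bool:
    """A trial ends at whichever limit is hit first: time or usage."""
    return today < started + timedelta(days=trial_days) and responses_used < response_cap


def can_accept_response(tier_name: str, responses_this_month: int, video_seconds: int) -> bool:
    """Check a new recording against the tier's monthly count and length limits."""
    tier = TIERS[tier_name]
    return (responses_this_month < tier.max_responses_per_month
            and video_seconds <= tier.max_video_seconds)


print(trial_active(date(2024, 5, 1), date(2024, 5, 10), responses_used=12))   # True
print(trial_active(date(2024, 5, 1), date(2024, 5, 10), responses_used=50))   # False
print(can_accept_response("free", responses_this_month=25, video_seconds=45))  # False
```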

Enterprise sales follow a fundamentally different motion. Buying decisions involve multiple stakeholders, longer evaluation cycles, and deeper scrutiny of security, compliance, and scalability. Pricing discussions are typically anchored around annual contracts rather than monthly subscriptions. In this context, value is framed in terms of insight velocity, decision quality, and operational efficiency rather than per-response cost. Successful platforms support both self-serve adoption for smaller teams and high-touch sales for enterprise customers without fragmenting the product experience.

-

Cost Drivers and Unit Economics

Understanding cost drivers is essential for sustainable pricing. Video storage is a primary expense, influenced by response volume, average duration, and retention policies. Efficient encoding, lifecycle management, and tiered storage strategies help control these costs. AI processing introduces another variable cost layer. Transcription, sentiment analysis, and summarization all consume compute resources, with costs scaling alongside usage and language complexity.
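A back-of-the-envelope model makes these drivers explicit. The sketch below estimates the variable cost of a single response; the bitrate, storage price, transcription price, and per-response analysis charge are all illustrative assumptions rather than vendor quotes, and sizes use decimal gigabytes.

```python
def cost_per_response(duration_s: float, bitrate_mbps: float = 2.0,
                      retention_months: int = 12, storage_per_gb_month: float = 0.02,
                      transcription_per_min: float = 0.006,
                      analysis_per_response: float = 0.01) -> dict[str, float]:
    """Rough variable cost (USD) of one video response over its retention period.

    All default prices and the bitrate are illustrative assumptions.
    """
    size_gb = bitrate_mbps * duration_s / 8 / 1000   # megabits -> megabytes -> GB (decimal)
    storage = size_gb * storage_per_gb_month * retention_months
    ai = duration_s / 60 * transcription_per_min + analysis_per_response
    return {"size_gb": size_gb, "storage": storage, "ai": ai, "total": storage + ai}


for months in (12, 36):
    c = cost_per_response(90, retention_months=months)
    print(months, {k: round(v, 4) for k, v in c.items()})
```

With these assumptions a 90-second response is about 0.0225 GB and costs roughly $0.024 over 12 months, mostly from AI processing. Longer retention or a higher bitrate scales only the storage term, which is why encoding and retention policy are the main storage levers.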

Margin management depends on aligning pricing units with these cost drivers. Platforms that charge per response or per minute of video can directly offset infrastructure expenses, while seat-based models require careful monitoring to ensure heavy usage does not erode margins. Over time, optimizing AI pipelines, encouraging concise responses, and enforcing retention limits improve unit economics. When pricing, cost structure, and usage incentives are aligned, video feedback platforms can scale profitably while continuing to deliver high-quality insight.
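The margin risk of seat-based pricing under heavy usage fits in a few lines. This sketch assumes a flat, illustrative variable cost of $0.25 per response and hypothetical prices, and compares a five-seat plan with a per-response plan as response volume grows.

```python
COST_PER_RESPONSE = 0.25   # illustrative all-in variable cost (storage + AI), USD


def gross_margin(revenue: float, variable_cost: float) -> float:
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - variable_cost) / revenue


def seat_plan_margin(responses: int, seats: int = 5, per_seat: float = 50.0) -> float:
    """Revenue is fixed by seats, so margin falls as response volume rises."""
    return gross_margin(seats * per_seat, responses * COST_PER_RESPONSE)


def per_response_margin(responses: int, price_per_response: float = 1.0) -> float:
    """Revenue and cost both scale with responses, so margin stays constant."""
    return gross_margin(responses * price_per_response, responses * COST_PER_RESPONSE)


for n in (200, 800):
    print(n, round(seat_plan_margin(n), 2), round(per_response_margin(n), 2))
```

At 200 responses the seat plan keeps an 80% gross margin, but at 800 responses it falls to 20%, while the per-response plan stays at 75% regardless of volume.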

MVP vs Enterprise Platform: What to Build First

-

Defining a Strong MVP Scope

A strong MVP for a video feedback platform is defined less by feature breadth and more by its ability to deliver a complete insight loop with minimal friction. The core objective of an MVP is to validate that users are willing to record video feedback and that teams can extract value from it quickly. To achieve this, the MVP must focus on a small set of must-have capabilities that enable end-to-end usage without operational complexity.

At minimum, an MVP should allow researchers to create a survey with video prompts, distribute it via a shareable link, collect video responses reliably, and review those responses in a centralized dashboard. Basic tagging and note-taking are essential so teams can organize feedback and identify patterns manually. Video recording must be stable across browsers and mobile devices, and uploads must be resilient to network interruptions. A simple export or sharing mechanism, such as downloading clips or generating a summary view, helps teams turn feedback into action.
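Resilient uploads usually mean sending the recording in chunks and retrying only the chunk that failed, with exponential backoff. The Python sketch below illustrates that logic; in practice it would live in the browser or mobile client, or be delegated to a resumable-upload protocol such as tus. `send_chunk` is a hypothetical transport callback, and the chunk size and retry limits are illustrative.

```python
import random
import time


class UploadError(Exception):
    """Raised when a chunk still fails after all retries."""


def upload_resumable(data: bytes, send_chunk, chunk_size: int = 256 * 1024,
                     max_retries: int = 5, base_delay: float = 0.5, sleep=time.sleep) -> int:
    """Send `data` in chunks; on a dropped connection retry only the failed chunk.

    send_chunk(offset, chunk) is a hypothetical transport callback that raises
    ConnectionError on failure. Progress is tracked by byte offset, so the upload
    continues from the failed chunk instead of restarting the whole recording.
    """
    offset = 0
    while offset < len(data):
        chunk = data[offset:offset + chunk_size]
        for attempt in range(max_retries + 1):
            try:
                send_chunk(offset, chunk)
                break
            except ConnectionError:
                if attempt == max_retries:
                    raise UploadError(f"gave up at byte {offset}") from None
                # Exponential backoff with jitter: waits of up to 0.5s, 1s, 2s, ...
                sleep(base_delay * 2 ** attempt * random.uniform(0.5, 1.0))
        offset += len(chunk)
    return offset


# Simulated flaky network: every third call drops the connection.
received: dict[int, bytes] = {}
calls = 0


def flaky_send(offset: int, chunk: bytes) -> None:
    global calls
    calls += 1
    if calls % 3 == 0:
        raise ConnectionError("network dropped")
    received[offset] = chunk


video = bytes(range(256)) * 4000   # ~1 MB of stand-in video bytes
sent = upload_resumable(video, flaky_send, sleep=lambda seconds: None)
print(sent == len(video), b"".join(received[k] for k in sorted(received)) == video)  # True True
```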

Just as important is what the MVP intentionally excludes. Advanced AI analytics, complex sentiment scoring, and automated summaries can wait. Early-stage platforms should avoid deep customization, multi-level permissions, and enterprise-grade compliance features unless they are required by the initial target customers. The goal is not to build a scaled research suite, but to prove that video feedback solves a real problem better than text surveys. By keeping scope tight, teams can iterate faster, limit technical debt, and validate demand before committing to heavier infrastructure and analytics investments.

-

Common Mistakes in Early-Stage Builds

One of the most common mistakes in early-stage builds is over-engineering the platform before real usage data exists. Teams often attempt to design for every conceivable enterprise scenario, resulting in bloated workflows, complex configuration screens, and fragile systems. This slows development and obscures the core value proposition for early users.

Premature investment in AI is another frequent pitfall. While AI-driven insights are a major differentiator in mature platforms, implementing advanced sentiment analysis or clustering too early can backfire. Without sufficient data volume and user feedback, these systems often produce low-confidence results that undermine trust. Early users are typically more interested in seeing authentic video responses than in receiving automated interpretations.

Poor UX decisions also derail many MVPs. Video recording introduces natural hesitation, and any additional friction, such as unclear instructions, aggressive time limits, or unreliable uploads, dramatically reduces participation. Focusing on internal dashboards while neglecting the respondent experience is particularly damaging. If respondents struggle or feel uncomfortable, response quality drops and the entire system fails to deliver value. Early-stage success depends on obsessing over simplicity, clarity, and reliability rather than feature sophistication.

-

Scaling from MVP to Full Platform

Scaling from an MVP to an enterprise-ready platform is an incremental process driven by real customer behavior. Feature expansion should follow demonstrated demand, not speculative roadmaps. Common next steps include introducing AI-assisted transcription, search, and tagging to reduce manual analysis effort, followed by sentiment indicators and thematic clustering once sufficient data volume exists.

Infrastructure evolution is equally important. As usage grows, direct-to-storage uploads, asynchronous processing pipelines, and better observability become necessary to maintain performance and reliability. At this stage, teams often formalize retention policies, introduce role-based access control, and improve auditability to meet enterprise expectations.
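Asynchronous processing means the upload request returns immediately while transcription and analysis run in background stages connected by queues. The sketch below shows that staging with `asyncio.Queue`. `transcribe` and `analyze` are hypothetical placeholders for calls to speech-to-text and analysis services, and the in-memory queues stand in for the durable job queue or message broker a production system would need so that work survives restarts.

```python
import asyncio


async def transcribe(video_id: str) -> str:
    await asyncio.sleep(0.01)   # placeholder for a speech-to-text service call
    return f"transcript of {video_id}"


async def analyze(transcript: str) -> dict:
    await asyncio.sleep(0.01)   # placeholder for sentiment/theme analysis
    return {"transcript": transcript, "themes": []}


async def worker(inbox: asyncio.Queue, outbox: asyncio.Queue, handler, failures: list) -> None:
    """Take items from inbox, process them, and pass results to the next stage."""
    while True:
        item = await inbox.get()
        try:
            await outbox.put(await handler(item))
        except Exception as exc:   # keep the worker alive; park failures for retry
            failures.append((item, exc))
        finally:
            inbox.task_done()


async def run_pipeline(video_ids: list[str], workers_per_stage: int = 2):
    uploaded, transcribed, results = asyncio.Queue(), asyncio.Queue(), asyncio.Queue()
    failures: list = []
    tasks = [asyncio.create_task(worker(uploaded, transcribed, transcribe, failures))
             for _ in range(workers_per_stage)]
    tasks += [asyncio.create_task(worker(transcribed, results, analyze, failures))
              for _ in range(workers_per_stage)]
    for video_id in video_ids:
        await uploaded.put(video_id)   # in production: fired by an "upload complete" event
    await uploaded.join()              # all videos transcribed (or failed)...
    await transcribed.join()           # ...and all transcripts analyzed (or failed)
    for task in tasks:
        task.cancel()
    await asyncio.gather(*tasks, return_exceptions=True)
    return [results.get_nowait() for _ in range(results.qsize())], failures


results, failures = asyncio.run(run_pipeline(["v1", "v2", "v3"]))
print(sorted(r["transcript"] for r in results), failures)
```

Because each stage has its own queue and worker pool, a slow analysis step backs up only its own queue, and the number of workers per stage can be tuned independently.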

Customer feedback loops guide this transition. Early customers provide invaluable insight into which features drive adoption and which create friction. Regular interviews, usage analytics, and support interactions should directly inform prioritization. The most successful platforms treat the MVP not as a disposable prototype, but as the foundation of the final product. By scaling deliberately, aligning features with proven needs, and maintaining a strong focus on user experience, teams can evolve from a focused MVP into a robust, enterprise-grade video feedback platform without losing product clarity.

Why Aalpha: A Proven Partner for Building Video Feedback Platforms at Scale

Building a video feedback and survey platform is not a typical SaaS development effort. It requires deep expertise across video infrastructure, AI-driven analytics, scalable backend systems, and research-focused user experience design. Aalpha is well positioned in this space because it has already designed and built production-grade platforms that operate at the same intersection of video, AI, and insight generation that modern feedback systems demand.

Aalpha approaches video feedback platforms as long-term products, not one-off builds. The team understands that success in this category depends on reliability under real-world conditions, including inconsistent network quality, high-volume media uploads, diverse user devices, and strict expectations around privacy and security. Architecture decisions are made with scale in mind from the start, ensuring that platforms can grow from early MVP usage to enterprise-level adoption without fundamental rewrites.

A key proof point of this capability is CuerateAI, a video-first feedback and sentiment intelligence platform built by Aalpha. CuerateAI enables organizations to collect video responses, transcribe and analyze them using AI, and convert qualitative feedback into structured, actionable insights. The platform reflects Aalpha’s hands-on experience with the exact challenges involved in video-based research systems, including transcription accuracy across accents, AI-assisted sentiment and theme extraction, scalable video storage, and insight presentation tailored for decision-makers.

Having built CuerateAI, Aalpha brings practical, battle-tested knowledge into every client engagement. This includes understanding where AI genuinely adds value and where human-in-the-loop workflows are essential, how to manage video and AI costs without degrading user experience, and how to design respondent journeys that feel comfortable and trustworthy. These are not theoretical considerations; they are lessons learned from building and operating a real video feedback product.

From a delivery standpoint, Aalpha supports the full lifecycle of video feedback platform development. This spans MVP scoping, system architecture, frontend and backend implementation, AI and analytics integration, security and access control, and readiness for enterprise deployment. The team works closely with founders and product leaders to align technical decisions with business goals, monetization models, and go-to-market strategy.

For companies looking to build a serious video feedback or survey intelligence platform, Aalpha offers a rare combination of custom product engineering expertise and direct experience building platforms like CuerateAI. This ensures faster execution, lower risk, and a platform designed not just to function, but to compete and scale in the evolving experience research market.

Future Trends in Video Feedback and Research Platforms

-

AI-Generated Interviewers and Avatars

One of the most significant emerging trends in video feedback platforms is the use of AI-generated interviewers and avatars to guide respondents through surveys. Instead of static text prompts, respondents are presented with a virtual interviewer that asks questions using natural speech and visual presence. This approach reduces the psychological distance between researcher and respondent, making the interaction feel closer to a human conversation rather than a form.

AI interviewers can adapt tone, pacing, and follow-up questions based on the respondent’s answers, encouraging more complete and reflective responses. For example, if a respondent hesitates or provides a short answer, the interviewer can prompt for clarification or reassurance. Avatars also help standardize the interview experience at scale, ensuring every participant receives the same quality of interaction while still benefiting from dynamic questioning. As generative AI improves, these interviewers are likely to become more context-aware, capable of referencing earlier responses and maintaining coherent conversational flow across multiple questions.
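The adaptive prompting described above can be prototyped with simple rules before a generative model is involved. This sketch is a rule-based stand-in, not how any particular AI interviewer works: the word-count and pause thresholds and the probe wording are illustrative assumptions, and a production interviewer would more likely have a language model generate the follow-up.

```python
from __future__ import annotations


def choose_follow_up(answer: str, longest_pause_s: float, min_words: int = 12,
                     hesitation_pause_s: float = 3.0) -> str | None:
    """Return a follow-up probe for an empty, hesitant, or very short answer, else None."""
    words = answer.split()
    if not words:
        return "No problem if you'd rather skip this one. Would you like to try again or move on?"
    if longest_pause_s >= hesitation_pause_s:
        return "Take your time, there are no wrong answers. What was going through your mind?"
    if len(words) < min_words:
        return "Could you say a bit more about that? A specific example would really help."
    return None


print(choose_follow_up("It was fine", longest_pause_s=0.8))
print(choose_follow_up("The setup wizard skipped the step where I connect my calendar, "
                       "so I had to go back and redo it", longest_pause_s=1.2))  # None
```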

-

Real-Time Feedback and Conversational Surveys

Another important trend is the shift toward real-time feedback and conversational survey experiences. Traditional surveys, even video-based ones, are often asynchronous and linear. Conversational surveys blur this boundary by allowing respondents to engage in a back-and-forth flow that feels more like messaging or live chat, while still remaining automated.

Real-time feedback systems can react immediately to user input, adjusting questions, offering clarification, or narrowing in on specific issues as they arise. This is particularly valuable in usability testing and customer support research, where immediate context matters. For example, a user encountering friction in a product can be prompted to explain the issue in the moment, capturing fresh emotional signals that are often lost in delayed surveys. As infrastructure and AI latency improve, real-time conversational feedback will enable richer insights without requiring synchronous human moderation.

-

Deeper Integration with Product and Analytics Stacks

Video feedback platforms are increasingly becoming integrated components of broader product and analytics ecosystems. Rather than existing as standalone research tools, they are being embedded directly into product workflows, customer experience platforms, and business intelligence systems. This integration allows qualitative insights to be correlated with quantitative data such as usage metrics, conversion funnels, and retention patterns.

Deeper integration enables teams to trigger video feedback based on specific events, such as feature usage, churn risk signals, or support interactions. Insights can then flow back into product management tools, analytics dashboards, and reporting systems, ensuring feedback informs decisions continuously rather than episodically. Over time, video feedback becomes part of an organization’s operational data fabric. This shift transforms research from a periodic activity into an always-on insight layer, positioning video feedback as a core input to product strategy, experience optimization, and long-term customer understanding.
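Event-triggered feedback generally comes down to matching incoming product events against rules and suppressing prompts for users who were asked recently. The event names, rule conditions, survey ids, and 14-day cooldown below are hypothetical; in practice the events would arrive from a product analytics, support, or CRM integration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Event:
    user_id: str
    name: str                    # e.g. "feature_used", "support_ticket_closed"
    at: datetime
    properties: dict = field(default_factory=dict)


# Hypothetical rules: (event name, condition on event properties, survey to send).
RULES = [
    ("feature_used", lambda p: p.get("feature") == "export" and p.get("count") == 1,
     "first-export-video"),
    ("support_ticket_closed", lambda p: True, "support-experience-video"),
    ("churn_risk_scored", lambda p: p.get("score", 0.0) >= 0.7, "at-risk-check-in-video"),
]


def surveys_to_trigger(event: Event, last_prompted: dict[str, datetime],
                       cooldown: timedelta = timedelta(days=14)) -> list[str]:
    """Survey ids to send for this event, skipping users prompted within the cooldown."""
    previous = last_prompted.get(event.user_id)
    if previous is not None and event.at - previous < cooldown:
        return []
    return [survey for name, condition, survey in RULES
            if name == event.name and condition(event.properties)]


event = Event("u42", "churn_risk_scored", datetime(2024, 6, 1), {"score": 0.82})
print(surveys_to_trigger(event, last_prompted={}))                              # ['at-risk-check-in-video']
print(surveys_to_trigger(event, last_prompted={"u42": datetime(2024, 5, 25)}))  # []
```

The cooldown matters as much as the rules: always-on feedback only works if respondents are not asked so often that the prompts themselves cause fatigue.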

Conclusion

Video feedback platforms deliver real value when they are built with a clear focus on usability, reliability, and insight generation rather than feature volume. The most successful platforms make it easy for respondents to share authentic feedback, handle video and data at scale without friction, and present insights in a way that supports confident decision-making. Tools that ignore these fundamentals often struggle to move beyond experimentation.

Sustainable platforms strike the right balance between human insight and AI assistance, manage infrastructure and media costs deliberately, and evolve based on real customer usage. They are designed to grow from focused MVPs into robust systems that integrate naturally into product, research, and analytics workflows.

If you are planning to build a video feedback or survey intelligence platform, working with an experienced product engineering partner can significantly reduce risk and time to market. Connect with Aalpha to discuss your idea, architecture, and roadmap. With proven experience in building scalable, video-first, AI-enabled platforms, Aalpha can help you turn a strong concept into a production-ready product that delivers lasting insight and business value.