1. Introduction to Agent-as-a-Service (AaaS)

What is Agent-as-a-Service?

Agent-as-a-Service (AaaS) refers to the delivery of intelligent, autonomous agents via APIs or modular software services that can be consumed on demand by applications, systems, or users. These agents are typically powered by large language models (LLMs), machine learning systems, and rule-based logic. Unlike traditional software-as-a-service (SaaS) products, AaaS emphasizes dynamic reasoning, context-awareness, and task execution without direct human scripting or oversight.

An AaaS system encapsulates an intelligent agent—capable of decision-making, learning, and action—into a service that can be instantiated, queried, and interacted with as needed. These agents may handle anything from summarizing documents and responding to emails to orchestrating workflows, writing code, or monitoring IT systems.

Why It Matters in 2025

The emergence of agent-based computing reflects a broader trend toward automation that transcends static systems or rule-based bots. In 2025, organizations are moving beyond traditional automation (like RPA or APIs) and embracing adaptive agents that can reason, plan, and act with autonomy.

Enterprise environments demand systems that can handle complexity, reduce manual intervention, and integrate across siloed tools. With the rise of general-purpose foundation models (e.g., GPT-4.5, Claude, Gemini), AaaS enables companies to embed intelligence into every function—without needing to train their own models or build agent architectures from scratch.

AaaS also allows companies to focus on business logic and user experience, rather than on the complexities of managing underlying AI infrastructure. For AI-first organizations, this lowers the barrier to deploying intelligent solutions rapidly and at scale.

Distinction from SaaS, API-as-a-Service, and AIaaS

While there’s overlap, Agent-as-a-Service is fundamentally different from other service-based models:

- SaaS (Software-as-a-Service): Delivers static application software (e.g., Salesforce, Slack) accessed via a web interface. SaaS platforms rarely adapt in real-time or make autonomous decisions.

- API-as-a-Service: Offers programmatic access to functionality (e.g., Stripe for payments, Twilio for messaging). These services are task-specific and non-intelligent unless explicitly designed to be.

- AIaaS (Artificial Intelligence as a Service): Provides access to AI models or tools (e.g., image recognition, NLP APIs). While useful, AIaaS is typically model-centric, not agent-centric.

In contrast, AaaS combines autonomous decision-making, contextual awareness, and sequential task execution in a persistent and interactive service model. The agent maintains its own memory, goal structure, and task planning capabilities, often working in a multi-step or multi-agent environment.

Evolution from Chatbots and RPA to Autonomous Agents

The AaaS paradigm is a direct evolution from earlier automation tools:

- Chatbots (2010s): Simple rule-based or NLP-powered systems focused on question-and-answer tasks.

- Robotic Process Automation (RPA): Scripting-based tools that mimic user actions across interfaces but lack reasoning or learning.

- LLM-based Copilots (2020–2023): Context-aware assistants embedded in IDEs, documentation, or support platforms.

Now, autonomous agents—deployed as services—represent a higher level of capability. They plan multi-step actions, delegate subtasks, retrieve information, and update their memory or belief states in real time. With this, organizations can offload increasingly complex tasks to machines that learn and evolve.

The rest of the guide will explore how to evaluate, build, deploy, and manage these agents effectively—grounded in real-world use cases and evidence from the field.

2. Market Overview and Growth Projection

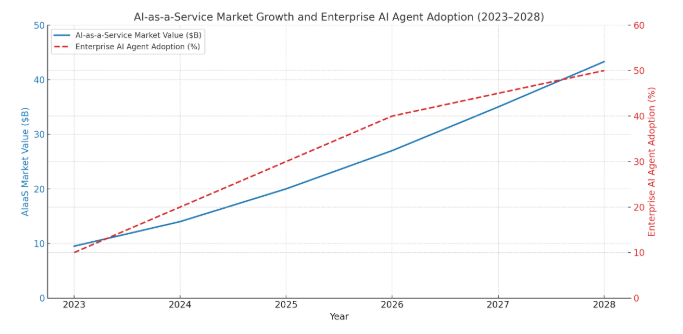

Current Market Valuation

The Agent-as-a-Service (AaaS) segment is still emerging as a formal category, but it is built on the convergence of several fast-growing sectors: AI-as-a-Service, autonomous agents, intelligent automation, and large language model (LLM) deployment. While direct estimates for AaaS are limited due to its nascency, proxies provide strong signals of momentum.

According to MarketsandMarkets, the global AI-as-a-Service market was valued at $9.5 billion in 2023 and is expected to reach $43.3 billion by 2028, growing at a CAGR of 35.0%. Within this broader market, intelligent agents, LLM-based APIs, and orchestration platforms are gaining increased budget allocation in enterprise AI roadmaps.

A McKinsey report (2024) notes that over 40% of enterprises plan to deploy AI agents in production by 2026, primarily for customer operations, IT management, and internal task automation. The transition from simple API-based AI services to dynamic agent orchestration layers is already underway in sectors like finance, healthcare, and logistics.

CAGR and Future Growth (2025–2030)

While AaaS does not yet have a standalone market projection from major analysts, extrapolation from adjacent trends suggests a significant expansion:

- Autonomous Agent Platform Growth: Gartner projects that by 2027, over 20% of enterprise applications will incorporate goal-driven autonomous agents, up from less than 2% in 2023.

- Agent Development Platforms: Custom AI agent platforms and frameworks (like OpenAI’s Assistants API, LangChain, and ReAct-style frameworks) are attracting major investment. CB Insights recorded over $1.2B in funding to startups focused on agent infrastructure and orchestration tools in 2024 alone.

- The shift toward multi-agent systems for workflow orchestration and intelligent task routing is expected to drive a multi-billion-dollar segment within cloud-based AI services by 2030.

If current adoption trajectories continue, the AaaS segment could represent $15–25 billion in annual enterprise spend by 2030, especially as more organizations adopt agents as persistent virtual workers across internal and customer-facing systems.

Key Investment Trends

The rise of AaaS aligns with growing investor focus on agents and autonomous systems. Notable trends include:

- VC-backed startups like Adept, Cognosys, Reworkd, and MultiOn are building agent platforms or full-stack solutions, backed by venture funding rounds of widely varying size.

- Enterprise incumbents (e.g., Microsoft, Google, Salesforce, SAP) are launching their own agent infrastructure layers within existing cloud ecosystems.

- Open-source agent orchestration tools (Auto-GPT, LangGraph, CrewAI, OpenAgents) have seen exponential GitHub growth and community adoption, signaling grassroots developer traction.

These movements signal a shift from experimentation to production-level deployment. The current focus is not only on model inference but on building full-fledged agents that can carry out multi-step workflows autonomously.

Industry Adoption Rates

Certain industries are leading the charge in AaaS adoption:

| Industry | Primary Use Cases | AaaS Adoption Level |
| --- | --- | --- |
| Finance | Risk modeling, trading assistants, compliance agents | High |
| Healthcare | Clinical triage, patient assistance, medical research | Medium–High |
| Retail/Ecom | Price optimization, order support, supply chain AI | High |
| Manufacturing | Maintenance agents, production insights | Medium |
| IT/DevOps | Auto-debugging, CI/CD agents, monitoring | High |

An enterprise survey by Deloitte in 2024 showed that 58% of Fortune 1000 companies have at least one intelligent agent deployed internally, most often for customer support or internal knowledge retrieval tasks.

Notable Players and Products in AaaS

Some of the current leaders and innovators in this space include:

- OpenAI (Assistants API): Provides programmable agents with tools, memory, and function-calling.

- LangChain / LangGraph: Frameworks for multi-agent orchestration, widely adopted by developers building AaaS layers.

- Cognosys / MultiOn / Reworkd: Startups focused on autonomous personal AI agents.

- AutoGen (Microsoft): Multi-agent orchestration toolkit for high-stakes reasoning tasks.

- Anthropic (Claude Team Agents): Enterprise-facing assistants capable of collaborative workflows.

In addition, several infrastructure providers (like Amazon Bedrock, Azure OpenAI, and Google Vertex AI) are enabling agent deployment at scale, offering memory, tool access, and agent chaining features as part of their ecosystems.

The market for Agent-as-a-Service is in the early phases of explosive growth, driven by enterprise demand for autonomous, context-aware systems that go beyond static models or simple bots. While the category lacks a formal boundary today, the convergence of AI model infrastructure, orchestration layers, and intelligent tooling points to AaaS becoming a dominant paradigm in enterprise software by 2030.

The next section will break down what makes up an AaaS system—its core components and architecture—laying the foundation for design and deployment.

3. Core Components of an Agent-as-a-Service System

An Agent-as-a-Service (AaaS) system is not just a hosted language model with an API wrapper. It involves a set of components designed to support persistent, context-aware, and autonomous behavior. A production-grade AaaS implementation blends memory, planning, tool use, and orchestration into a cohesive system that operates as a service, not merely as a one-shot prompt response.

Below are the core architectural and functional components required for a robust AaaS deployment.

1. Agent Memory (Short-Term and Long-Term)

Purpose: Enables the agent to retain knowledge across interactions, maintain state, and support contextual continuity.

- Short-term memory holds context within a session—such as recent messages, retrieved data, and active task states.

- Long-term memory stores facts, observations, preferences, or past decisions across sessions, often using vector databases (e.g., Pinecone, Weaviate, Qdrant) or knowledge graphs.

Example: A customer service agent remembers past conversations with a user, including complaints or resolutions, and adapts responses accordingly.
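
As a rough illustration of the short-term/long-term split, the sketch below keeps a bounded window of recent messages and a store of persistent facts ranked by simple word overlap. The `AgentMemory` class and its method names are hypothetical, and the word-overlap scoring is only a stand-in: a production system would more likely use embeddings in a vector database such as those named above.

```python
from collections import deque


class AgentMemory:
    """Toy memory: a bounded short-term window plus a searchable long-term store."""

    def __init__(self, window_size: int = 3):
        # Short-term: only the most recent messages in the current session.
        self.short_term = deque(maxlen=window_size)
        # Long-term: facts that persist across sessions.
        self.long_term = []

    def observe(self, message: str) -> None:
        self.short_term.append(message)

    def remember(self, fact: str) -> None:
        self.long_term.append(fact)

    def recall(self, query: str, k: int = 1) -> list:
        """Return up to k stored facts sharing the most words with the query."""
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(fact.lower().split())), fact)
            for fact in self.long_term
        ]
        # Keep only facts with some overlap, best matches first.
        matches = sorted((s for s in scored if s[0] > 0), key=lambda s: -s[0])
        return [fact for _, fact in matches[:k]]


memory = AgentMemory(window_size=2)
memory.remember("customer reported a late delivery in March")
memory.remember("customer prefers email over phone")
for msg in ["hi", "my delivery is late again", "can you help?"]:
    memory.observe(msg)
```

With a window of two, the oldest message ("hi") drops out of short-term memory, while `memory.recall("late delivery")` still surfaces the earlier complaint from the long-term store.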

2. Planning and Goal Management

Purpose: Enables the agent to decompose high-level goals into actionable plans or sub-tasks.

- Task Planning Modules can use techniques like classical planning (STRIPS), LLM-based chain-of-thought reasoning, or graph-based planners (e.g., LangGraph).

- Goal Tracking Systems ensure persistent intent and track task completion, state transitions, or failure handling.

Example: A DevOps agent receives a goal to “deploy the latest version of the app” and breaks it into tasks: pull the latest code → run CI/CD pipeline → verify logs → update dashboard.
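
The DevOps example can be sketched as a goal that owns an ordered task list and tracks each task's status. The `Task`, `Goal`, and `run_goal` names are illustrative assumptions, and the plan is hard-coded here; in a real agent a planning module (LLM-based or classical) would produce it.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    status: str = "pending"  # pending -> done | failed


@dataclass
class Goal:
    description: str
    tasks: list = field(default_factory=list)

    def next_task(self):
        """Return the first pending task, or None when nothing is left to run."""
        return next((t for t in self.tasks if t.status == "pending"), None)

    def is_complete(self) -> bool:
        return all(t.status == "done" for t in self.tasks)


def run_goal(goal: Goal, execute) -> Goal:
    """Run tasks in order; stop at the first failure so later steps do not run."""
    while (task := goal.next_task()) is not None:
        task.status = "done" if execute(task.name) else "failed"
        if task.status == "failed":
            break
    return goal


deploy = Goal(
    "deploy the latest version of the app",
    [Task("pull latest code"), Task("run CI/CD pipeline"),
     Task("verify logs"), Task("update dashboard")],
)
# Simulate a failure at the log verification step.
run_goal(deploy, execute=lambda name: name != "verify logs")
```

Stopping at the first failed task keeps the dashboard from being updated after an unverified deployment, which is the kind of failure handling a goal tracker is meant to enforce.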

3. Tool Use and API Integration

Purpose: Expands the agent’s capabilities beyond pure language outputs by allowing it to act in the real world.

- Tool use involves calling APIs, triggering functions, scraping web data, running code, or accessing external systems.

- Common implementations include OpenAI’s function-calling, ReAct frameworks, and self-hosted toolchains (e.g., LangChain Tools, Auto-GPT plugins).

Example: A research agent can run Python code to generate a chart, query a financial API, and then summarize the result.
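
One common way to wire up tool use is a registry that maps tool names to functions, plus a dispatcher that executes a structured call emitted by the model. The sketch below assumes a simple JSON shape (`{"tool": ..., "arguments": {...}}`) chosen for illustration; it is not any vendor's schema, and the price data is made up.

```python
import json

TOOLS = {}


def tool(func):
    """Register a function so the agent can call it by name."""
    TOOLS[func.__name__] = func
    return func


@tool
def get_price(ticker: str) -> float:
    # Stand-in for a real financial API call; the values are invented.
    return {"ACME": 12.5, "GLOBEX": 40.0}[ticker]


@tool
def percent_change(old: float, new: float) -> float:
    return round((new - old) / old * 100, 2)


def dispatch(tool_call_json: str):
    """Parse a model-emitted tool call and run the matching registered tool."""
    call = json.loads(tool_call_json)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])
```

Rejecting unknown tool names at the dispatcher means the model can only trigger functions that were explicitly registered.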

4. Perception and Retrieval Modules

Purpose: Enables agents to gather relevant external and internal information before acting.

- Retrieval-Augmented Generation (RAG) is used to pull context from documents, databases, or knowledge bases.

- Perception modules may include entity recognition, semantic search, visual parsing (for images), or audio input handling.

Example: An agent asked to analyze company financials retrieves relevant reports from the internal document store, extracts tables, and synthesizes insights.

5. Reasoning Engine

Purpose: Performs inference, logical sequencing, constraint satisfaction, and adaptive decision-making.

- LLMs (GPT-4.5, Claude, Gemini) often handle high-level reasoning, using chain-of-thought, scratchpad methods, or few-shot prompting.

- Symbolic logic or rule-based systems can be integrated for deterministic control when necessary.

Example: A logistics agent decides which route to take for a delivery based on weather, priority level, and vehicle availability.
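
The combination of deterministic rules and adaptive choice can be sketched for the logistics case: hard constraints filter the candidates first, then a simple preference picks among what remains. The `Route` fields and the two rules are assumptions made for illustration; in an LLM-backed agent the model might propose or rank candidates while rules like these enforce non-negotiable constraints.

```python
from dataclasses import dataclass


@dataclass
class Route:
    name: str
    hours: float
    storm_risk: bool
    vehicle_available: bool


def choose_route(routes, high_priority: bool):
    """Apply hard rules first, then pick the fastest remaining route."""
    # Rule 1: a route without an available vehicle is never valid.
    candidates = [r for r in routes if r.vehicle_available]
    # Rule 2: only high-priority deliveries may accept storm risk.
    if not high_priority:
        candidates = [r for r in candidates if not r.storm_risk]
    if not candidates:
        return None  # Nothing satisfies the rules: escalate or replan.
    return min(candidates, key=lambda r: r.hours)


routes = [
    Route("coastal", hours=4.0, storm_risk=True, vehicle_available=True),
    Route("inland", hours=6.0, storm_risk=False, vehicle_available=True),
    Route("express", hours=3.0, storm_risk=False, vehicle_available=False),
]
```

Here a high-priority delivery takes the faster coastal route despite the weather, while a normal delivery falls back to the inland route.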

6. Execution Environment

Purpose: Provides a controlled runtime for the agent to act, interact with APIs, manage subprocesses, or trigger actions.

- Includes security policies (e.g., sandboxing), timeouts, and observability tools.

- Execution can happen in serverless environments, containerized workflows, or managed agent runtimes.

Example: An enterprise agent may trigger a Jenkins build, execute a shell command on a VM, or schedule a meeting in Outlook—all through controlled APIs.

7. Multi-Agent Orchestration (Optional but Powerful)

Purpose: Enables multiple agents to collaborate, specialize, or oversee one another for complex task execution.

- Hierarchical models (e.g., Manager → Worker Agents)

- Peer-to-peer agents (e.g., Research Agent ↔ Analyst Agent ↔ Summarizer)

- Agent societies with defined communication protocols (AutoGen, LangGraph)

Example: A document processing pipeline might have one agent extract key facts, another verify them, and a third generate a summary for review.
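
The extract, verify, summarize hand-off can be sketched with plain functions standing in for the three agents. All function names are hypothetical and the logic is deliberately naive (a "fact" is any sentence containing a digit); in practice each function would wrap an LLM call or a specialized model.

```python
def extractor_agent(document: str) -> list:
    """Pull out sentences that contain a number, as a stand-in for fact extraction."""
    sentences = [s.strip() for s in document.split(".") if s.strip()]
    return [s for s in sentences if any(ch.isdigit() for ch in s)]


def verifier_agent(facts: list, source: str) -> list:
    """Keep only facts that appear verbatim in the source document."""
    return [f for f in facts if f in source]


def summarizer_agent(facts: list) -> str:
    return "Key facts: " + "; ".join(facts) if facts else "No verified facts."


def run_pipeline(document: str) -> str:
    """Sequential hand-off: extract -> verify -> summarize."""
    facts = extractor_agent(document)
    verified = verifier_agent(facts, document)
    return summarizer_agent(verified)
```

Keeping each role behind its own function makes it straightforward to swap one agent, or to insert a human review step between verification and summarization.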

8. Interface Layer (API, UI, CLI)

Purpose: Enables interaction with the agent as a service via web UIs, API endpoints, or command-line tools.

- REST APIs for external integration

- Webhooks for event-driven triggers

- Embeddable chat UIs or CLI-based control systems

Example: A SaaS product embeds a procurement assistant UI for end-users, but also exposes API endpoints to enterprise backends for automation.

9. Feedback, Monitoring, and Evaluation Systems

Purpose: Supports agent reliability, correctness, and safe behavior in production.

- Feedback loops may include human-in-the-loop systems, RLHF-like corrections, or user ratings.

- Monitoring tracks hallucination rates, latency, token consumption, failure cases, etc.

- Evaluation and observability tools (e.g., TruLens, RAGAS, Helicone) are critical for tracking agent performance in real-world use.

Example: A customer support agent that begins hallucinating product return policies is flagged by automated evaluation metrics for retraining or correction.

10. Identity, Authentication, and Permissions

Purpose: Ensures secure, role-based access and identity awareness for personalized service delivery.

- Agents may need to operate on behalf of users or accounts, requiring OAuth tokens or signed credentials.

- Role-based permission systems limit tool usage, data access, or system control.

Example: A sales assistant agent can access CRM data for Account Managers but is restricted from HR systems or finance dashboards.
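
A minimal sketch of role-based permission checks around tool calls is shown below. The role names, permission strings, and the `call_tool` helper are assumptions for illustration; a real deployment would typically derive the role from a verified OAuth token or similar credential rather than pass it as a string.

```python
ROLE_PERMISSIONS = {
    "account_manager": {"crm.read", "crm.update"},
    "hr_partner": {"hr.read"},
}


class PermissionDenied(Exception):
    pass


def authorize(role: str, permission: str) -> None:
    """Raise unless the role has been granted the permission."""
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionDenied(f"{role!r} may not use {permission!r}")


def call_tool(role: str, permission: str, tool, *args):
    """Check permissions before every tool invocation made on a user's behalf."""
    authorize(role, permission)
    return tool(*args)


def read_crm_account(account_id: str) -> dict:
    # Stand-in for a real CRM lookup.
    return {"id": account_id, "status": "active"}
```

Unknown roles get an empty permission set, so the default is to deny.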

A robust AaaS system brings together memory, planning, perception, action, reasoning, and orchestration into a composable, service-oriented architecture. Each module must be carefully designed and aligned to the agent’s intended use case, reliability requirements, and integration needs.

4. Types of Agents Delivered as a Service

Agent-as-a-Service (AaaS) is not a monolithic model. Depending on the target use case, capabilities, autonomy level, and system constraints, agents delivered as services can take on various forms. This section categorizes the primary types of agents being deployed as services in enterprise and developer ecosystems today, with distinctions based on purpose, structure, and complexity.

1. Task-Oriented Agents

Definition: Agents designed to complete specific, well-scoped tasks based on a single user input or trigger.

- Characteristics: Stateless or minimally stateful, deterministic, minimal memory use.

- Common Use Cases: Email summarization, code generation, form filling, calendar scheduling.

Example:

A task agent receives an email and summarizes its content. It has no memory of prior emails; it simply reads the input, performs an NLP task, and returns the output.

Technologies Used:

- OpenAI Assistants API

- LangChain + Tool Use

- Zapier-based action agents

Deployment Model:

Often serverless, invoked via API or webhook, returns result in a single response.

2. Goal-Oriented Agents

Definition: Agents that pursue high-level objectives through multiple steps, sub-tasks, or tool invocations.

- Characteristics: Stateful, uses memory, applies planning and decision-making over time.

- Common Use Cases: Report generation, workflow automation, technical research.

Example:

A financial assistant receives the goal “Create a weekly spending report” and retrieves transaction data, categorizes it, creates charts, and emails a summary.

Technologies Used:

- LangGraph

- CrewAI

- Auto-GPT-like frameworks

Deployment Model:

Often runs in session-based environments with memory and logging. May involve job queues or agent runtimes.

3. Multi-Agent Systems (MAS)

Definition: A collection of specialized agents that collaborate to complete complex tasks through communication and delegation.

- Characteristics: Distributed, role-based, often hierarchical or peer-coordinated.

- Common Use Cases: Document analysis, knowledge curation, simulation environments.

Example:

In a research assistant MAS, one agent retrieves academic papers, another extracts key findings, and a third generates executive summaries.

Technologies Used:

- AutoGen (Microsoft)

- LangGraph multi-agent workflows

- Custom orchestration on Kubernetes or Celery

Deployment Model:

Service mesh or agent runtime that handles multiple concurrently interacting agents. Can scale horizontally.

4. Conversational Agents (Chat-Based)

Definition: Agents designed to engage in natural language conversations and provide real-time interaction.

- Characteristics: Often user-facing, supports memory and context across sessions, low latency.

- Common Use Cases: Customer support, sales assistants, onboarding guides, personal productivity.

Example:

A customer service chatbot helps users navigate subscription issues, referencing previous orders and offering dynamic suggestions.

Technologies Used:

- RAG systems (e.g., Haystack, LlamaIndex)

- OpenAI + vector memory

- Claude / Gemini with context windows

Deployment Model:

Exposed via chat UIs or embedded in SaaS products, often stateful with per-user memory and identity management.

5. Autonomous Agents

Definition: Agents capable of making decisions and acting without human intervention for extended periods.

- Characteristics: High autonomy, goal tracking, continuous task loops, error handling, sometimes self-healing.

- Common Use Cases: Monitoring systems, trading bots, autonomous DevOps tools.

Example:

An autonomous DevOps agent detects an app crash, diagnoses the issue from logs, applies a patch, redeploys, and reports to a Slack channel.

Technologies Used:

- Self-hosted agent loops

- Planning frameworks + LLMs

- Secure execution environments

Deployment Model:

Persistent runtime environments with watchdog systems, error recovery, and access to system-level tools.

6. Embedded Agents in SaaS Platforms

Definition: Modular agents embedded inside broader SaaS products to extend functionality.

- Characteristics: Task-bound or contextual, aware of product-specific data, integrates with in-app workflows.

- Common Use Cases: Project management assistants, CRM advisors, onboarding helpers.

Example:

A Notion-style writing assistant that rewrites notes, generates summaries, or creates templates based on the user’s workspace.

Technologies Used:

- In-app API calls to hosted agents

- Identity-aware memory

- LLM wrapper services like Vercel AI SDK

Deployment Model:

Integrated into UI components with service calls to backend agent logic. Often paired with usage tracking and user roles.

7. Domain-Specific Expert Agents

Definition: Agents trained and fine-tuned for deep knowledge in a specific vertical or domain.

- Characteristics: Knowledge-rich, context-bound, may leverage custom LLMs or extensive RAG stacks.

- Common Use Cases: Legal advisors, medical assistants, compliance checkers.

Example:

A legal assistant agent can read contracts, detect risky clauses, and recommend edits based on jurisdiction-specific compliance rules.

Technologies Used:

- Fine-tuned LLMs (e.g., Mistral, Llama 3)

- Domain-specific embeddings

- Hybrid retrieval + rule-based engines

Deployment Model:

Runs behind APIs or dashboards with access to domain-restricted data sources, legal databases, or medical corpora.

Agent-as-a-Service delivery models vary based on autonomy, task complexity, and integration depth. From stateless task agents to persistent multi-agent ecosystems, each type has unique infrastructure, design, and operational needs. Choosing the right type depends on your use case, execution context, and business goals.

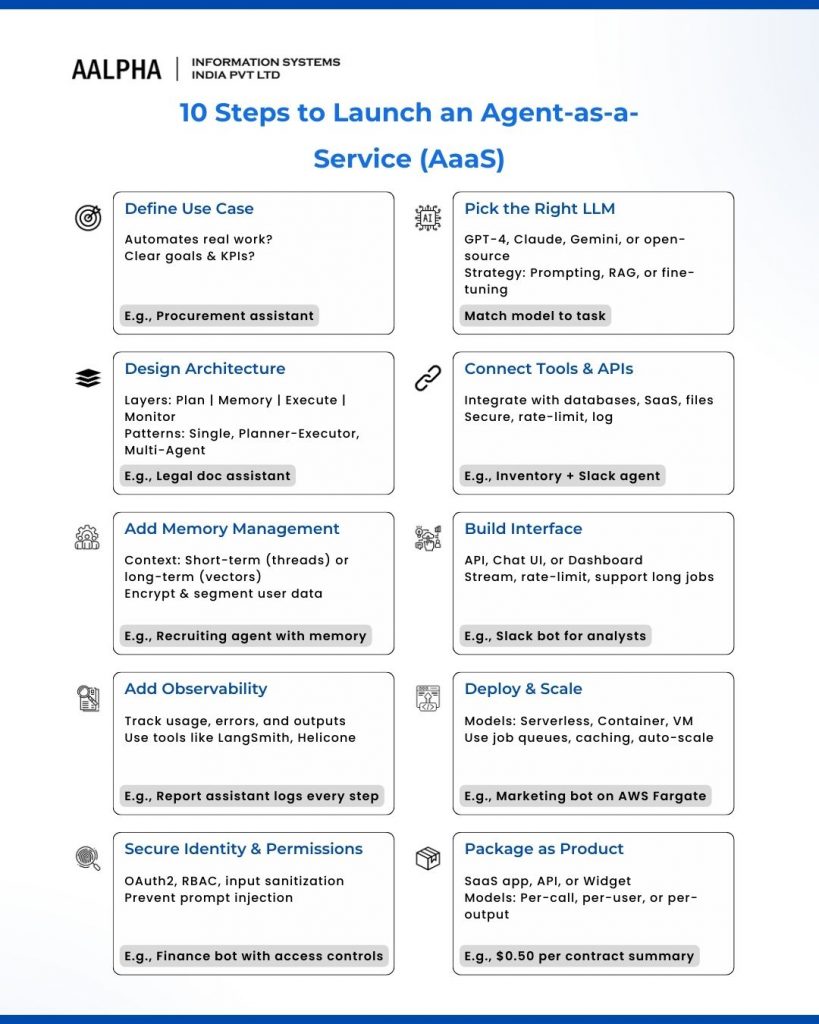

5. Step-by-Step: Building and Offering an Agent-as-a-Service

Offering an Agent-as-a-Service (AaaS) product requires more than wrapping an LLM behind an API. It involves methodically selecting a use case, designing a reliable architecture, building robust agent logic, and deploying and managing it as a scalable, observable, and secure service. This section outlines a full development and deployment process, step by step, suitable for enterprise-grade AaaS products.

Step 1: Define the Use Case and Agent Role

Key Activities:

- Identify a real-world problem that benefits from agent-based autonomy (not just chat).

- Decide on the type of agent: task-based, goal-oriented, autonomous, or multi-agent.

- Define clear inputs, outputs, KPIs, and success criteria.

Checklist:

- What is the user’s intent or goal?

- Can the agent reduce manual work or decision time?

- Is the problem repeatable, automatable, and high-value?

Example:

A procurement assistant that automates vendor comparison and RFP drafting for enterprise procurement teams.

Step 2: Choose the Right LLM Foundation and Strategy

Key Activities:

- Select a foundation model that aligns with the task: GPT-4.5, Claude 3, Gemini, Mistral, or a fine-tuned open-source model.

- Decide between hosted APIs (e.g., OpenAI) or self-hosted LLMs (e.g., Llama 3 with vLLM).

- Optimize for latency, reliability, token limits, and cost.

Strategies:

- Use prompt engineering or function-calling if your agent is simple.

- Use RAG and modular planning if your agent requires context or long reasoning chains.

- Consider fine-tuning if your agent requires domain expertise or reduced hallucinations.

Example:

Use GPT-4 Turbo for general logic and Anthropic Claude for compliance-sensitive summaries, switching models based on task.
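
Switching models by task can be as simple as a lookup table in front of the model clients. In the sketch below the model labels are placeholders rather than exact vendor identifiers, and the clients are fake functions so the example runs offline; the `pick_model` and `handle` names are hypothetical.

```python
# Placeholder labels, not exact vendor model identifiers.
ROUTES = {
    "compliance_summary": "claude",
    "general": "gpt-4-turbo",
}


def pick_model(task_type: str) -> str:
    """Route known task types to a specific model; fall back to the general one."""
    return ROUTES.get(task_type, ROUTES["general"])


def handle(task_type: str, prompt: str, clients: dict) -> str:
    """Send the prompt to whichever client is registered for the chosen model."""
    model = pick_model(task_type)
    return clients[model](prompt)


# Fake clients stand in for real SDK calls.
fake_clients = {
    "claude": lambda p: f"[claude] {p}",
    "gpt-4-turbo": lambda p: f"[gpt-4-turbo] {p}",
}
```

Keeping the routing table as data makes it easy to change which model serves a task type without touching agent logic.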

Step 3: Design Agent Architecture (Single or Multi-Agent)

Key Components:

- Planning Layer: Enables task decomposition using planning engines or LLM-based logic.

- Memory Layer: Uses vector DBs (e.g., Pinecone) or SQL/NoSQL to retain context and learning.

- Execution Layer: Manages tool/API invocation and sandboxed environments.

- Monitoring Layer: Logs, metrics, hallucination detection, user feedback.

Architectural Patterns:

- Single Agent + Tool Use: Ideal for lightweight, fast-response tasks.

- Planner-Agent + Executor: For autonomous task sequences.

- Multi-Agent Graph (LangGraph, AutoGen): For complex, distributed workflows.

Example:

A legal document assistant with a planner agent that coordinates three executor agents: clause extractor, risk assessor, and summary generator.

Step 4: Implement Tool Use, APIs, and Action Modules

Key Activities:

- Integrate APIs the agent will need: databases, SaaS apps, CRMs, or file systems.

- Build internal tools the agent can invoke using OpenAI function calls or ReAct-style prompts.

- Ensure all tools are secure, rate-limited, and observable.

Common Tool Types:

- Data fetchers (e.g., SQL or REST query wrappers)

- External API clients (e.g., Google Sheets, Salesforce)

- File processors (e.g., PDF parsers, CSV summarizers)

- Shell command wrappers (for DevOps agents)

Example:

An operations agent fetches inventory from a PostgreSQL database, checks reorder thresholds, and sends a Slack notification with recommendations.
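
The inventory example might look like the sketch below. It uses Python's built-in `sqlite3` with an in-memory database as a stand-in for PostgreSQL, and the table and column names are assumptions. Posting to Slack is left out; the function only builds the message text.

```python
import sqlite3


def items_to_reorder(conn: sqlite3.Connection) -> list:
    """Return (sku, quantity, threshold) rows at or below their reorder threshold."""
    return conn.execute(
        "SELECT sku, quantity, reorder_threshold FROM inventory "
        "WHERE quantity <= reorder_threshold ORDER BY sku"
    ).fetchall()


def format_notification(rows) -> str:
    """Build the notification text from the rows that need attention."""
    if not rows:
        return "Inventory OK: nothing to reorder."
    lines = [f"- {sku}: {qty} left (threshold {thr})" for sku, qty, thr in rows]
    return "Reorder recommended:\n" + "\n".join(lines)


# In-memory database with made-up rows, used only to exercise the functions.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE inventory (sku TEXT, quantity INTEGER, reorder_threshold INTEGER)"
)
conn.executemany(
    "INSERT INTO inventory VALUES (?, ?, ?)",
    [("bolt", 5, 10), ("nut", 50, 10), ("washer", 10, 10)],
)
```

Wrapping the query in a narrow function, rather than letting the agent write arbitrary SQL, keeps the tool easy to audit and rate-limit.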

Step 5: Implement Memory and Context Management

Options:

- Short-Term: Contextual threading within prompts (windowing, recent history).

- Long-Term: Use embeddings and vector search for persistent knowledge.

- Hybrid: Combine vector DBs with structured state machines or caches.

Best Practices:

- Don’t store sensitive user data in memory without encryption or access control.

- Use namespaces and user IDs to separate memory per tenant/user.

Example:

A recruiting agent remembers past candidate interactions, resumes, and feedback, and retrieves this context when new jobs are posted.
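
The namespacing practice above can be sketched by keying every memory record on a (tenant, user) pair. The `NamespacedMemory` class is hypothetical and stores plain strings in process memory; a real system would apply the same keying to a vector database or SQL store, with encryption and access control on top.

```python
from collections import defaultdict


class NamespacedMemory:
    """Keeps each (tenant, user) pair's records in a separate namespace."""

    def __init__(self):
        # A tuple key avoids collisions that string concatenation could cause.
        self._store = defaultdict(list)

    def add(self, tenant_id: str, user_id: str, record: str) -> None:
        self._store[(tenant_id, user_id)].append(record)

    def get(self, tenant_id: str, user_id: str) -> list:
        # Return a copy so callers cannot mutate the stored list.
        return list(self._store.get((tenant_id, user_id), []))


mem = NamespacedMemory()
mem.add("acme", "recruiter-1", "Candidate A: strong Python background")
mem.add("globex", "recruiter-1", "Candidate B: prefers remote roles")
```

Two tenants can reuse the same user ID without seeing each other's records, because the tenant ID is part of every lookup.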

Step 6: Build the Interface (UI, API, or CLI)

User Interfaces:

- API-first: REST or GraphQL for developer integrations.

- Chat UIs: Web widgets, embedded assistants, or chatbots.

- Dashboards: For B2B use cases, include logging, feedback, and interaction history.

Engineering Tips:

- Rate-limit APIs.

- Support real-time streaming responses.

- Use webhooks or polling for long-running jobs.

Example:

A data analyst assistant is exposed via Slack, with an internal API for data science teams to trigger queries programmatically.
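
For the rate-limiting tip, a token bucket is one widely used approach: it permits short bursts while capping the sustained request rate. The sketch below is a single-process illustration with an injectable clock (an assumption that makes it easy to test); a multi-instance service would usually keep the bucket state in a shared store.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

An API handler would call `allow()` once per request, typically with one bucket per API key, and return an HTTP 429 response when it yields `False`.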

Step 7: Add Observability, Logging, and Evaluation

Must-Have Observability Features:

- Token usage and latency tracking

- API error monitoring and tool call auditing

- Prompt + response logs for evaluation

- Drift or hallucination detection

Recommended Tools:

- Helicone for OpenAI usage analytics

- TruLens or LangSmith for prompt evaluation

- Sentry / Datadog for backend monitoring

Example:

A multi-agent reporting assistant logs every step in a report-building chain, from data retrieval to chart generation, with trace IDs.
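
Step-level logging with a shared trace ID could be sketched as follows. The `Trace` class and its record fields are illustrative assumptions; a production system would more likely emit these records to a tracing or logging backend than keep them in a list.

```python
import time
import uuid


class Trace:
    """Collects one log record per step, all tagged with the same trace ID."""

    def __init__(self):
        self.trace_id = uuid.uuid4().hex
        self.records = []

    def step(self, name: str, func, *args):
        """Run one step, recording its duration and whether it succeeded."""
        start = time.perf_counter()
        status = "error"
        try:
            result = func(*args)
            status = "ok"
            return result
        finally:
            # Runs on success and on failure, so every step leaves a record.
            self.records.append({
                "trace_id": self.trace_id,
                "step": name,
                "status": status,
                "duration_s": time.perf_counter() - start,
            })


trace = Trace()
rows = trace.step("retrieve_data", lambda: [3, 1, 2])
chart = trace.step("build_chart", sorted, rows)
```

Because the record is written in a `finally` block, a step that raises still appears in the trace with status `"error"` before the exception propagates.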

Step 8: Deploy and Scale the Service

Deployment Models:

- Serverless (Vercel, AWS Lambda): Ideal for stateless, event-based agents.

- Containerized (Docker + Kubernetes): For agents with custom runtimes, multi-agent graphs, or stateful memory.

- Dedicated VM or GPU server: For self-hosted LLMs or fine-tuned agents.

Scalability Tips:

- Use job queues for async tasks (e.g., Celery, BullMQ).

- Cache common requests to reduce LLM calls.

- Auto-scale based on task types and traffic.

Example:

A marketing assistant is containerized on AWS Fargate, scales up for campaign analysis tasks, and uses a Redis job queue for async report generation.
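
The caching tip can be illustrated with a small wrapper that reuses answers for prompts it has already seen. The `CachedLLM` class is hypothetical and keeps its cache in process memory; a scaled deployment would typically use a shared cache with expiry. Lowercasing the prompt when building the key is an assumption that only suits case-insensitive requests.

```python
import hashlib


class CachedLLM:
    """Wraps a model call and reuses answers for prompts seen before."""

    def __init__(self, call_model):
        self.call_model = call_model
        self.cache = {}
        self.model_calls = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize whitespace and case so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key not in self.cache:
            self.model_calls += 1
            self.cache[key] = self.call_model(prompt)
        return self.cache[key]
```

The `model_calls` counter makes the saving visible: repeated or near-identical prompts are served from the cache without another model call.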

Step 9: Implement Identity, Permissions, and Security

Key Features:

- OAuth2 and JWT for secure API access

- Role-based access controls for tools and memory

- Data encryption at rest and in transit

- Guardrails to restrict unsafe tool use or hallucinations

Security Strategies:

- Use allow-listed tool execution

- Sanitize tool inputs and outputs

- Monitor for prompt injection and misuse

Example:

A financial assistant only exposes sensitive reporting tools to verified finance team users, with logs reviewed weekly.

Step 10: Offer It as a SaaS or API Product

Packaging Options:

- SaaS Web App: Full UI, billing, dashboards, user roles.

- API-first Platform: Developer portal, API keys, rate limits.

- Embedded Widget: Drop-in component for 3rd-party apps.

Monetization Models:

- Pay-per-call or pay-per-task (e.g., a fixed fee per query, or tiers such as 1,000 queries/month)

- User-based pricing (e.g., per seat)

- Outcome-based pricing (e.g., per report generated)

Example:

An AI paralegal service charges law firms $0.50 per contract summary, offered through a secure web portal and API access.
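
Outcome-based pricing like the paralegal example needs a meter that counts billable events per customer. The sketch below uses the $0.50 price from the example; the `UsageMeter` class and customer IDs are hypothetical, and a real product would persist these counts and hand them to a billing provider.

```python
from collections import Counter
from decimal import Decimal

PRICE_PER_SUMMARY = Decimal("0.50")  # Price taken from the example above.


class UsageMeter:
    """Counts billable events per customer and turns them into an invoice total."""

    def __init__(self):
        self.summaries = Counter()

    def record_summary(self, customer_id: str) -> None:
        self.summaries[customer_id] += 1

    def invoice_total(self, customer_id: str) -> Decimal:
        # Decimal avoids the rounding surprises of binary floats in money math.
        return PRICE_PER_SUMMARY * self.summaries[customer_id]


meter = UsageMeter()
for _ in range(3):
    meter.record_summary("firm-a")
```

Three recorded summaries for `firm-a` produce a total of `Decimal("1.50")`, and a customer with no usage is billed zero.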

Building an Agent-as-a-Service product is a multi-phase effort combining AI, software engineering, DevOps, and product design. It starts with scoping a specific need, designing the right agent architecture, integrating tools and memory, and exposing it as a reliable and secure service. Success depends on rigorous observability, careful scaling, and a well-defined interface.

6. AaaS Deployment Models and Architectures

A well-architected Agent-as-a-Service (AaaS) platform must balance flexibility, performance, cost, security, and scale. Whether deploying a lightweight task-based agent or a multi-agent autonomous system, the underlying architecture directly affects its reliability and maintainability. This section dives into the core deployment models and architectural patterns for delivering Agent-as-a-Service systems, both for startups and enterprises.

1. Key Deployment Considerations

Before choosing a deployment model, consider:

- Latency Requirements: Real-time vs. async response expectations.

- Data Sensitivity: Handling PII, healthcare, financial, or proprietary data.

- Scalability Needs: Elastic workloads, multi-tenant usage, usage spikes.

- Agent Complexity: Simple tool-call agents vs. goal-driven multi-agent chains.

- LLM Hosting Strategy: External (OpenAI, Anthropic) vs. on-prem or hybrid.

Each factor influences whether to deploy serverless, container-based, or VM-hosted systems, and what architectural patterns to adopt.

2. Deployment Models Overview

a. Serverless AaaS Architecture

Best for:

- Lightweight agents with short execution windows

- Event-triggered actions (e.g., incoming webhook or user query)

- Teams seeking fast iteration without infrastructure overhead

Tech Stack Example:

- AWS Lambda / Google Cloud Functions

- API Gateway

- DynamoDB or Firebase for state/memory

- External LLMs via API (OpenAI, Claude)

Pros:

- Minimal ops management

- Auto-scaled by default

- Pay-per-execution model reduces idle cost

Cons:

- Cold starts and timeouts for longer tasks

- Limited custom runtime or library support

- No GPU or local LLM deployment

Example Use Case:

A Slack-based sales assistant, triggered by user input, responds in under 5 seconds by fetching CRM data and calling OpenAI.

b. Container-Based (Microservices) Architecture

Best for:

- Stateful or long-running agents

- Custom dependencies (e.g., open-source LLMs, LangChain, Haystack)

- Scalable, modular platforms with DevOps support

Tech Stack Example:

- Docker + Kubernetes (EKS, GKE, AKS)

- API Gateway + Load Balancer

- Redis or PostgreSQL for memory/state

- Vector DB (e.g., Pinecone, Weaviate)

Pros:

- Full runtime control (Python, Node.js, Rust, etc.)

- Easier debugging, logging, and resource allocation

- Suited for horizontal scaling and multi-agent orchestration

Cons:

- Requires DevOps expertise

- More overhead than serverless

- Cold starts avoided, but container uptime costs persist

Example Use Case:

An AI contract review platform using multiple specialized containerized agents, communicating via message queues.

c. Self-Hosted or VM-Based Architecture

Best for:

- Privacy-sensitive environments (e.g., healthcare, government)

- Custom LLM deployment with GPU acceleration

- Enterprises avoiding third-party LLM APIs

Tech Stack Example:

- On-prem VMs or cloud VMs with GPU (NVIDIA A100)

- Self-hosted LLMs (Llama 3, Mistral, Falcon)

- LangGraph / AutoGen for agent graphs

- Secure API gateways and internal monitoring

Pros:

- Maximum control over model and data

- No external API latency or cost

- Easier to align with compliance requirements (HIPAA, GDPR, SOC 2)

Cons:

- High upfront infra costs and ops burden

- Requires ML engineering for model tuning

- Updates and patching are manual

Example Use Case:

A hospital system deploying an autonomous care-plan assistant using a fine-tuned clinical LLM and secure internal data sources.

3. Architectures by Agent Complexity

a. Single-Agent Architecture

Structure:

- Input → Prompt → LLM Call → Output

- Optional: Tool call, memory lookup

Ideal for:

Simple, one-shot assistants that don’t need autonomy or planning

Deployment Fit:

- Serverless

- REST API endpoint

- No persistent state needed

Example Use Case:

FAQ answering bot for HR or IT service desk.

b. Agent with Tool Use and Planning

Structure:

- Input → Planner → Tool Use(s) → Output Generator

- Memory integration for contextual continuity

Ideal for:

Agents needing multi-step reasoning, like report generation or research assistance.

Deployment Fit:

- Containerized with queueing (Celery, BullMQ)

- Serverless with fallback mechanisms for long-running jobs

Example Use Case:

AI analyst agent that queries multiple data sources, cleans data, and delivers insight.
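The Planner → Tool Use(s) → Output Generator structure above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not a reference implementation: the `plan` function is a hard-coded stand-in for an LLM planner, and the tool names (`fetch_sales`, `clean`, `summarize`) are hypothetical.

```python
from typing import Any, Callable

# Hypothetical tools; a real agent would call data sources or APIs here.
def fetch_sales(_: Any) -> list:
    return [120, None, 95, 130]

def clean(rows: list) -> list:
    # Drop missing values before analysis.
    return [r for r in rows if r is not None]

def summarize(rows: list) -> str:
    return f"{len(rows)} rows, mean={sum(rows) / len(rows):.1f}"

TOOLS: dict[str, Callable[[Any], Any]] = {
    "fetch_sales": fetch_sales,
    "clean": clean,
    "summarize": summarize,
}

def plan(task: str) -> list[str]:
    """Stand-in for an LLM planner: returns an ordered list of tool names."""
    return ["fetch_sales", "clean", "summarize"]

def run_agent(task: str) -> dict:
    memory: list[dict] = []  # per-run memory for contextual continuity
    result: Any = None
    for step in plan(task):
        if step not in TOOLS:
            raise ValueError(f"planner requested unknown tool: {step}")
        result = TOOLS[step](result)  # each tool receives the previous output
        memory.append({"tool": step, "output": result})
    return {"answer": result, "trace": memory}
```

Keeping the trace alongside the answer makes each step inspectable, which helps when a long-running job is moved onto a queue and has to be debugged after the fact.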

c. Multi-Agent Architecture

Structure:

- Planner Agent → Delegator → Executor Agents (in parallel/sequence)

- Shared memory store + messaging layer (e.g., orchestrated with LangGraph or AutoGen)

Ideal for:

- Cross-domain workflows

- Agents collaborating or specializing (e.g., writer + fact-checker + editor)

Deployment Fit:

- Kubernetes or container mesh with persistent memory

- Fine-grained API orchestration

Example Use Case:

An AI R&D assistant that manages literature review, prototype design, and validation through specialized agents.
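As a rough sketch of the planner, delegator, and executor pattern, the example below runs two executor agents in parallel and a third in sequence, all writing to one shared memory dictionary. The agent functions (`writer`, `fact_checker`, `editor`) are hypothetical stubs; in practice each would wrap its own LLM call, and a framework such as LangGraph or AutoGen would handle the messaging.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical executor agents; each is a stub standing in for an LLM-backed agent.
def writer(topic: str) -> str:
    return f"Draft about {topic}."

def fact_checker(topic: str) -> str:
    return f"Verified facts for {topic}."

def editor(memory: dict) -> str:
    # Sequential step: depends on the results of the parallel agents.
    return f"{memory['writer']} [{memory['fact_checker']}]"

def run_workflow(topic: str) -> dict:
    shared_memory: dict = {"topic": topic}
    parallel_agents = {"writer": writer, "fact_checker": fact_checker}
    # Delegator: fan out independent work, then collect into shared memory.
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, topic) for name, fn in parallel_agents.items()}
        for name, future in futures.items():
            shared_memory[name] = future.result()
    shared_memory["editor"] = editor(shared_memory)
    return shared_memory
```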

4. Scaling Architectures for Multi-Tenant SaaS

If you offer your AaaS to external customers, especially as a multi-tenant SaaS, plan for the following:

Key Elements:

- API Gateway: With tenant routing, usage throttling

- Isolated Memory Storage: Namespacing per tenant

- Billing Integration: Usage-based metering (Stripe, Usage APIs)

- Observability: Per-tenant dashboards and alerts

- CI/CD Pipelines: To update agents with tenant-specific logic

Tenant Isolation Models:

- Soft isolation: Logical separation in shared infra

- Hard isolation: Separate containers or VMs per tenant (high-security use cases)

Example Use Case:

An AaaS platform offering AI meeting assistants to enterprises, each tenant getting custom memory and tools.
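Soft isolation and per-tenant throttling can be illustrated with two small classes. This is a sketch only: `TenantMemory` and `TenantThrottle` are hypothetical names, the store is an in-process dictionary rather than Redis or PostgreSQL, and a production gateway would usually enforce limits at the edge.

```python
import time
from collections import defaultdict, deque

class TenantMemory:
    """Soft isolation: one shared store, with keys namespaced per tenant."""
    def __init__(self):
        self._store = {}

    def put(self, tenant_id, key, value):
        self._store[(tenant_id, key)] = value

    def get(self, tenant_id, key, default=None):
        return self._store.get((tenant_id, key), default)

class TenantThrottle:
    """Sliding-window limit on the number of requests per tenant."""
    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window = window_seconds
        self._hits = defaultdict(deque)

    def allow(self, tenant_id, now=None):
        now = time.monotonic() if now is None else now
        hits = self._hits[tenant_id]
        # Discard timestamps that have left the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

Because every read and write carries the tenant ID, one tenant cannot reach another tenant's keys through this interface. Hard isolation would instead give each tenant its own store instance or container.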

5. Hybrid Architectures: LLM as a Service + Custom Agent Layer

For teams not ready to host their own LLMs but needing agent orchestration and autonomy:

Structure:

- LLM APIs (OpenAI, Claude) → Agent Layer → Tool Calls, Memory, UI/API

- Optional: Switch between LLMs based on task (routing layer)

Use Cases:

- MVP development

- High availability agent APIs with model fallback

- Prototypes with commercial LLM backends

Routing Techniques:

- Based on latency (Claude faster for summaries)

- Based on cost (OpenRouter cheapest route)

- Based on context window needs (GPT-4 Turbo for long tasks)

Example:

AI customer support system using Claude for long-form empathy and GPT-4 for precise answers.
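The routing layer described above can be sketched as a filter followed by a cost comparison. The catalog below is entirely hypothetical (model names, prices, context windows, and latencies are placeholders, not vendor figures); real values would come from your providers and your own benchmarks.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # USD, illustrative only
    context_window: int        # tokens
    p50_latency_ms: int

# Hypothetical catalog; not real vendor data.
CATALOG = [
    ModelOption("small-fast", 0.002, 16_000, 300),
    ModelOption("balanced", 0.010, 128_000, 800),
    ModelOption("large-precise", 0.030, 200_000, 1500),
]

def route(prompt_tokens: int,
          max_latency_ms: Optional[int] = None,
          unavailable: frozenset = frozenset()) -> ModelOption:
    """Pick the cheapest available model that fits the context and latency needs."""
    candidates = [
        m for m in CATALOG
        if m.name not in unavailable
        and m.context_window >= prompt_tokens
        and (max_latency_ms is None or m.p50_latency_ms <= max_latency_ms)
    ]
    if not candidates:
        raise RuntimeError("no model satisfies the routing constraints")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)
```

Passing the names of models that are currently failing in `unavailable` gives a simple form of model fallback for high-availability agent APIs.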

6. Data Privacy and Security in AaaS Deployments

Regardless of architecture, security must be designed-in, not bolted-on:

Critical Practices:

- Data encryption: TLS, at-rest encryption with key management

- Zero Trust access control: For APIs, memory, and tools

- Prompt Injection Prevention: Input validation and guardrails

- LLM Gatewaying: Proxy LLM access through policy engines (e.g., Guardrails AI, Rebuff)

Compliance Considerations:

- GDPR: Right to be forgotten (erasure), consent tracking

- HIPAA: PHI masking, audit logs

- SOC 2: Operational maturity, access controls, observability

Agent-as-a-Service deployment decisions shape the product’s scalability, compliance, and performance. From serverless one-shot agents to GPU-hosted autonomous multi-agent systems, the architecture must align with the agent’s task complexity, data requirements, and business goals. Choosing the right deployment model is foundational—especially when delivering AaaS as a multi-tenant or white-labeled platform.

7. Security, Privacy, and Governance in AaaS

As Agent-as-a-Service (AaaS) platforms gain traction across industries handling sensitive data—like healthcare, finance, legal, and enterprise IT—security, privacy, and governance become critical architectural pillars. Without a robust foundation in these areas, organizations risk compliance violations, model misuse, customer data leaks, and compromised business trust.

This section offers a comprehensive, in-depth breakdown of how to secure AaaS systems, meet privacy obligations, and enforce responsible governance—across development, deployment, and user-facing operations.

1. The Unique Security Challenges of AaaS

AaaS platforms introduce new attack surfaces not commonly seen in traditional SaaS systems:

- Dynamic Prompt Injection (DPI): Malicious user inputs designed to manipulate agent behavior or expose internal logic.

- LLM Response Injection: Model-generated content that could include sensitive data, hallucinations, or insecure tool commands.

- Autonomous Action Risks: Agents that take actions without human oversight—like sending emails or modifying files—can cause real-world damage if compromised.

- Model Supply Chain Risk: Dependence on third-party LLM APIs (e.g., OpenAI, Claude) introduces upstream data handling and reliability concerns.

2. Security Architecture for AaaS Platforms

To safeguard an AaaS system, security must be designed across multiple layers:

a. Perimeter Security

- API Gateways: Use authentication tokens (e.g., OAuth2, JWT) with strict rate limits per user or tenant.

- Ingress Filtering: IP whitelisting, traffic throttling, and DDoS protection via WAFs (Cloudflare, AWS WAF).

- Encryption in Transit: Enforce HTTPS and TLS v1.2+ for all communications between agents, tools, LLMs, and storage.

b. Identity and Access Control

- Zero Trust Principles: Never trust requests based on IP alone; enforce identity at every level.

- RBAC/ABAC: Role-based or attribute-based access control across agent APIs, memory stores, and tools.

- Audit Trails: Log every access to sensitive data and agent decisions for later review.

c. Data Security

- Memory Store Isolation: Encrypt and namespace agent memory per tenant or user.

- Secrets Management: Never hard-code API keys or credentials. Use Vault, AWS Secrets Manager, or GCP Secret Manager.

- PII Masking: Apply regex-based or AI-based detection to redact personal information before LLM prompts.
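A regex-based redaction pass, as mentioned under PII Masking, might look like the sketch below. The three patterns are illustrative assumptions covering only simple email, US Social Security number, and US phone formats; they will miss many real-world variants, which is why AI-based detection is often layered on top.

```python
import re

# Illustrative patterns only; they do not cover all real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def mask_pii(text: str) -> str:
    """Replace each detected value with a bracketed label before prompting an LLM."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `mask_pii("Mail jane.doe@example.com or call 555-123-4567")` returns `"Mail [EMAIL] or call [PHONE]"`.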

3. Privacy Compliance in AaaS

If your agents process user or company data, you must follow global privacy frameworks:

a. GDPR (EU)

- Data Minimization: Only collect and process data strictly necessary for agent function.

- Right to Be Forgotten: Implement deletion APIs that purge user history, memory, and logs on request.

- Consent Tracking: Explicit opt-ins for data collection and LLM usage, with audit-ready logs.
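The deletion API behind a right-to-be-forgotten request can be sketched as a purge that walks every store holding user data. In this simplified example the stores are in-memory lists of dictionaries and `purge_user` is a hypothetical helper; a real implementation would also cover backups, vector indexes, and third-party processors.

```python
def purge_user(user_id: str, stores: dict) -> dict:
    """Delete every record for user_id from each store; return deletions per store."""
    report = {}
    for name, records in stores.items():
        before = len(records)
        # Rewrite the list in place so other references see the purge.
        records[:] = [r for r in records if r.get("user_id") != user_id]
        report[name] = before - len(records)
    return report
```

Returning a per-store count gives an audit-ready record of what the request actually removed.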

b. HIPAA (US)

- PHI Handling: Protect identifiable health information via encryption, access control, and logging.

- Business Associate Agreements (BAA): Required with any vendors handling PHI, including LLM API providers.

c. CPRA/CCPA (California)

- Transparency: Disclose what data agents collect, how it’s used, and with whom it’s shared.

- Opt-Out: Allow users to opt out of behavioral data collection or automated decision-making.

d. SOC 2 & ISO 27001

- Control Implementation: Access control, change management, disaster recovery, and continuous monitoring.

- Third-Party Risk Management: Vet LLM providers, memory stores, and vector DBs against your compliance checklist.

4. LLM-Specific Threat Mitigation

Agent platforms uniquely depend on LLMs—either hosted or via API. Secure integration requires special handling.

a. Prompt Injection Defense

- Input Sanitization: Strip potentially harmful instructions, escape characters, or hidden payloads.

- System Prompt Reinforcement: Use tightly scoped system prompts with guardrails.

- Reinforcement Checks: Apply pattern matching, structured output enforcement (e.g., JSON schema), or function calling to contain outputs.
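Structured output enforcement can be approximated with the standard library alone. The sketch below accepts a model response only if it is a JSON object with exactly two fields and a permitted action; the field names, the action names in `ALLOWED_ACTIONS`, and the 500-character cap are assumptions chosen for illustration.

```python
import json

ALLOWED_ACTIONS = {"search", "summarize"}  # hypothetical action names

def parse_tool_call(raw_output: str) -> dict:
    """Accept model output only if it is a JSON object of the expected shape."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        raise ValueError("output is not valid JSON") from exc
    if not isinstance(data, dict) or set(data) != {"action", "argument"}:
        raise ValueError("output must contain exactly 'action' and 'argument'")
    if not isinstance(data["action"], str) or data["action"] not in ALLOWED_ACTIONS:
        raise ValueError("action is not permitted")
    if not isinstance(data["argument"], str) or len(data["argument"]) > 500:
        raise ValueError("argument must be a string of at most 500 characters")
    return data
```

Anything that fails validation is rejected rather than repaired, so an injected instruction that pushes the model off-format never reaches a tool.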

b. Output Validation

- Safety Filtering: Post-process LLM output through tools like OpenAI’s Moderation API, Google Perspective API, or custom classifiers.

- Code/Command Sandboxing: Never execute LLM-generated code or shell commands directly. Always sandbox and validate first.

- AI Red Teaming: Simulate malicious inputs and adversarial use cases using tools like Rebuff or PromptInject.

c. Secure Model Access

- Rate Limiting & Usage Control: Prevent LLM abuse by limiting tokens per request/user/day.

- Multi-Vendor Strategy: Don’t rely on a single LLM API. Use fallbacks to mitigate outages or vendor lock-in.

- Private LLM Hosting: For highly sensitive data, host open-source models in VPC with network isolation and encrypted disk.
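Two of the controls above, per-user token limits and multi-vendor fallback, can be sketched together. Both `DailyTokenBudget` and `call_with_fallback` are hypothetical helpers; the provider callables stand in for real SDK calls, and usage is tracked in memory rather than in a shared store.

```python
from collections import defaultdict
from datetime import date
from typing import Optional

class DailyTokenBudget:
    """Caps the tokens each user may consume per calendar day."""
    def __init__(self, max_tokens_per_day: int):
        self.max_tokens = max_tokens_per_day
        self._used = defaultdict(int)  # (user_id, day) -> tokens consumed

    def try_consume(self, user_id: str, tokens: int, day: Optional[date] = None) -> bool:
        key = (user_id, day or date.today())
        if self._used[key] + tokens > self.max_tokens:
            return False  # reject before any LLM call is made
        self._used[key] += tokens
        return True

def call_with_fallback(providers: list, prompt: str):
    """Try each (name, callable) pair in order; return the first successful reply."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))
```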

5. Governance Frameworks for Responsible Agent Behavior

Beyond technical controls, governance ensures that agents behave ethically, predictably, and traceably.

a. Agent Decision Transparency

- Decision Logs: Record reasoning steps, tool uses, and source inputs per task.

- User Explanations: Allow agents to explain their rationale before acting (especially for critical actions).

- Intervention Layer: Insert a human-in-the-loop system for tasks involving risk (e.g., financial transfers, health advice).

b. Agent Capability Controls

- Tool Whitelisting: Restrict which tools an agent can use, per role or tenant.

- Capability Scoping: Limit autonomous behavior (e.g., no outbound email unless explicitly allowed).

- Dynamic Role Enforcement: Use agent profiles that determine action scope based on user privileges.
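Tool whitelisting, capability scoping, and a human-in-the-loop gate can be combined in one authorization check that also writes a decision log. The class names, roles, and tool names below are hypothetical; this is one possible shape, not a prescribed design.

```python
from dataclasses import dataclass, field

@dataclass
class AgentProfile:
    role: str
    allowed_tools: frozenset
    needs_approval: frozenset = frozenset()  # tools gated behind a human

@dataclass
class ToolGate:
    profile: AgentProfile
    decision_log: list = field(default_factory=list)

    def authorize(self, tool: str, approved_by_human: bool = False) -> bool:
        if tool not in self.profile.allowed_tools:
            verdict = "denied"
        elif tool in self.profile.needs_approval and not approved_by_human:
            verdict = "pending_approval"
        else:
            verdict = "allowed"
        # Every decision is recorded, including refusals.
        self.decision_log.append(
            {"role": self.profile.role, "tool": tool, "verdict": verdict}
        )
        return verdict == "allowed"
```

A support agent could be given `allowed_tools=frozenset({"search_kb", "send_email"})` with `needs_approval=frozenset({"send_email"})`, so that outbound email is possible only after explicit sign-off.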

c. Governance Operations

- Policy Engine: Define rules for acceptable behavior, data usage, and user interactions (e.g., Open Policy Agent with policies written in Rego).

- Ethical Oversight Board: For large-scale AaaS deployments, establish cross-functional teams reviewing AI behaviors quarterly.

6. Reducing Model Hallucinations and Bias

Model outputs can influence decisions in sensitive contexts—like hiring, healthcare, or finance. Ensuring fairness and factual accuracy is part of governance.

a. Retrieval-Augmented Generation (RAG)

- Use retrieval systems (e.g., vector DB + context-aware queries) to reduce hallucination risk and provide verifiable answers.

b. Output Confidence Scoring

- Provide scores for output reliability based on prompt complexity, retrieval quality, and agent feedback loops.

c. Bias Mitigation

- Conduct audits on outputs across demographics and decision contexts.

- Use diverse datasets to fine-tune or pre-train models internally if deploying open-source LLMs.

7. Agent-Level Observability and Monitoring

Ongoing visibility is key to securing and governing agents post-deployment.

Core Observability Metrics:

- Task success rate

- Tool invocation logs

- Agent decision time

- Memory access logs

- LLM API cost tracking
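Most of the metrics above can be derived from per-task records. The sketch below assumes a hypothetical record shape with `success`, `decision_seconds`, `tools`, and `llm_cost_usd` fields; memory access logs would need their own event stream and are left out.

```python
from statistics import mean

def summarize_runs(runs: list) -> dict:
    """Aggregate per-task records into a few core observability metrics."""
    if not runs:
        raise ValueError("no runs to summarize")
    return {
        "task_success_rate": sum(r["success"] for r in runs) / len(runs),
        "avg_decision_seconds": mean(r["decision_seconds"] for r in runs),
        "tool_invocations": sum(len(r["tools"]) for r in runs),
        "llm_cost_usd": sum(r["llm_cost_usd"] for r in runs),
    }
```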

Tooling Ecosystem:

- Langfuse / Helicone: Monitor LLM calls and response latency

- OpenTelemetry + Grafana: Centralize observability for AaaS infrastructure

- Sentry + DataDog: Capture exceptions, latency spikes, and unauthorized tool use

Security, privacy, and governance are not bolt-ons—they are foundational to any responsible Agent-as-a-Service offering. From LLM-specific threats like prompt injection to regulatory obligations like GDPR and HIPAA, AaaS providers must adopt a layered security strategy, enforce strong data handling policies, and maintain transparency in how agents behave and make decisions.

8. Use Cases and Real-World Applications

Agent-as-a-Service (AaaS) platforms are moving beyond prototypes into production systems across diverse verticals. These autonomous or semi-autonomous agents are delivering measurable value in enterprise automation, software operations, customer engagement, and regulated industries like healthcare and finance. Unlike traditional SaaS workflows that require direct user intervention, AaaS offers agents capable of reasoning, interacting with APIs, retrieving context, and making independent decisions—within defined boundaries.

This section details real-world AaaS implementations across key domains, highlighting use cases where agents are already augmenting productivity, reducing operational latency, and delivering cost savings.

1. Enterprise Automation

HR Agents, Legal Review Agents, Procurement Agents

Modern enterprises are deploying domain-specific agents to reduce time spent on repetitive, manual workflows across HR, legal, and procurement.

HR Agents

- Onboarding Agents: Automate collection of employee documents, orientation scheduling, and FAQs via Slack/Teams.

- Benefits Assistants: Help employees understand coverage, generate personalized benefit summaries, and answer policy questions.

Example: A Fortune 500 company deployed an onboarding agent that reduced human HR team effort by over 60% per hire, freeing time for strategic work.

Legal Review Agents

- Contract Clause Detection: Parse NDAs, MSAs, or SaaS agreements to flag risk areas using LLM-based clause recognition.

- Document Summarization: Provide TL;DR of 50+ page legal agreements for in-house counsel with citations from original text.

Impact: One legal tech firm reported a 40% reduction in average review time using an AI legal agent trained on internal clause libraries and legal playbooks.

Procurement Agents

- Vendor Evaluation Agents: Compare supplier profiles, pricing, and contract history to suggest optimal vendors.

- RFP Generation: Draft requests for proposals (RFPs) based on predefined templates and project scopes.

2. AI Ops and DevOps Automation

Incident Triage Agents, CI/CD Agents, Root Cause Analysis

Operational intelligence is a leading frontier for agents. AI Ops agents handle infrastructure logs, telemetry data, and alert streams—reacting in real-time to prevent downtime and automate recovery.

Incident Triage Agents

- Alert Classification: Classify incoming alerts from systems like Prometheus, Datadog, or New Relic based on historical severity.

- Suggested Actions: Recommend mitigations or trigger playbooks based on past incidents and service dependencies.

Case Study: A SaaS company integrated incident agents with PagerDuty and Slack, achieving a 35% reduction in mean time to detect (MTTD).

CI/CD Copilot Agents

- Pipeline Debugging: Identify failing CI steps (e.g., failed test suites) and suggest fixes using LLM-driven analysis of logs.

- Dependency Management: Recommend library upgrades and flag deprecations or licensing issues.

3. E-Commerce Applications

Pricing Agents, Customer Service Agents, Merchandising Agents

Agents are becoming integral in customer-facing workflows, particularly in high-volume domains like online retail.

Pricing Intelligence Agents

- Competitive Monitoring: Scrape competitor pricing and recommend real-time adjustments for parity or differentiation.

- Dynamic Discounting: Trigger promotional pricing during peak hours or for expiring inventory, based on demand forecasting.

Example: A mid-sized eCommerce retailer deployed pricing agents that increased sales velocity by 22% during promotional campaigns.

Customer Interaction Agents

- Multi-Channel Support: Respond to customer inquiries over WhatsApp, Messenger, and email with context retention.

- Return Policy Explainers: Use semantic understanding to answer complex refund scenarios without escalating to a human agent.

Merchandising Agents

- Product Description Generation: Automatically create SEO-friendly product pages using structured data and image analysis.

- Inventory Classification: Tag products by theme, style, or seasonal relevance using vision-language models.

4. Healthcare Applications

Diagnostic Assist Agents, Patient Query Agents, Provider Support Agents

In regulated environments like healthcare, agents are deployed with tight constraints and focus on augmentation rather than automation.

Diagnostic Assist Agents

- Clinical Notes Summarization: Extract structured summaries from unstructured physician notes using HIPAA-compliant LLMs.

- Symptom Triaging: Interpret patient complaints from intake forms and suggest possible diagnostic paths, with disclaimers.

Caution: These agents must operate under human oversight and follow regulatory guidance (e.g., FDA, HIPAA).

Patient Query Bots

- Appointment Scheduling: Coordinate time slots between patient preferences and doctor availability across channels.

- Prescription Info Agents: Explain dosages, refill timelines, or drug interactions in plain language, linking to verified sources.

Provider Support Agents

- Billing Agents: Parse medical billing codes, highlight anomalies, and assist in claims submissions.

- Care Coordination Agents: Share care plan updates with family, specialists, and insurance reps across systems.

5. Developer Productivity Agents

Internal Copilots, Code Review Agents, Project Scaffolding Bots

Agents trained on internal engineering practices are boosting productivity by assisting developers with daily workflows.

Internal Code Copilots

- Codebase Navigation: Search functions, variable definitions, and architectural patterns across monorepos.

- Style Enforcement: Suggest refactors to match internal linting or architectural rules.

Note: Unlike public GitHub Copilot, these agents are trained on private codebases, ensuring alignment with team conventions and confidentiality.

Code Review Agents

- Automated Review Comments: Flag insecure code, missing test coverage, or deviation from architecture guidelines.

- Diff Summarization: Summarize large PRs for reviewers, highlighting areas of concern or complex changes.

Project Scaffolding Agents

- Starter Templates: Generate boilerplate code for common services (e.g., REST APIs, GraphQL endpoints, CI workflows).

- Onboarding Agents: Help new developers set up local environments, understand repo structure, and get assigned their first ticket.

From internal DevOps copilots to external-facing healthcare query bots, AaaS is already being applied in production settings that demand contextual intelligence, reliability, and measurable business outcomes. These agent systems are not replacing human roles outright—they’re augmenting high-friction workflows, enabling teams to focus on judgment-intensive and creative work. As agents mature and specialize, expect domain-specific AaaS adoption to accelerate across every knowledge-driven function.

9. AaaS Economics: Pricing Models and Cost Considerations

Agent-as-a-Service (AaaS) is reshaping not only how intelligent systems are deployed but also how they are monetized and evaluated from a financial standpoint. Unlike traditional software products that are priced based on licenses or feature tiers, AaaS offerings are closer to utility-based computing—consumption-driven, resource-intensive, and often priced based on behavior, interaction volume, or autonomy.

Understanding the cost structure and pricing models behind AaaS is critical for both providers designing sustainable services and enterprises budgeting for adoption. This section breaks down the major pricing approaches, the underlying cost drivers, infrastructure breakdown, and how organizations can model total cost of ownership (TCO) and return on investment (ROI).

1. Pricing Models for Agent-as-a-Service

Several monetization strategies have emerged across AaaS platforms, largely determined by the nature of the agent (task-specific vs. continuous), usage patterns, and customer preferences.

a. Pay-per-Task

In this model, pricing is based on the number of tasks or interactions executed by the agent.

- Best for: Transactional agents like customer query bots, contract summarizers, or invoice processors.

- Example: $0.01 per document processed or $0.005 per message interpreted.

Benefits: Transparent billing tied to unit output.

Limitations: Less predictable for fluctuating usage.

b. Pay-per-Agent

Customers are billed per deployed agent, typically on a monthly or annual basis, regardless of task volume.

- Best for: Persistent agents (e.g., DevOps triage bot, legal review assistant) with consistent uptime requirements.

- Example: $50/month per agent with usage caps on API or inference.

Benefits: Simple pricing logic for budgeting.

Limitations: Risk of underutilization or overuse without granular metering.

c. Subscription-Based Pricing (Tiered)

This model combines agent access with usage caps (e.g., inference calls or task limits), and features increase with each tier.

- Best for: Enterprise clients with multiple agents, high customization needs, or SLAs.

- Example:

- Starter: $199/mo (1 agent, 1000 tasks)

- Pro: $499/mo (5 agents, 10,000 tasks)

- Enterprise: Custom pricing with priority support, compliance features

Benefits: Scalable and bundled with support, analytics, fine-tuning.

Limitations: May not match all consumption patterns.
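To compare the three models for a given workload, the example prices quoted above can be plugged into a few small functions. The figures ($0.01 per task, $50 per agent per month, and the Starter and Pro tiers) are the illustrative numbers from this section, not market rates, and the function names are hypothetical.

```python
def pay_per_task(tasks: int, price_per_task: float = 0.01) -> float:
    """Monthly cost when billed per task."""
    return tasks * price_per_task

def pay_per_agent(agents: int, price_per_agent: float = 50.0) -> float:
    """Monthly cost when billed per deployed agent."""
    return agents * price_per_agent

# (name, monthly price, included agents, included tasks) from the tier example.
TIERS = [("Starter", 199.0, 1, 1_000), ("Pro", 499.0, 5, 10_000)]

def cheapest_tier(agents: int, tasks: int):
    """Return the first tier whose caps cover the workload."""
    for name, price, max_agents, max_tasks in TIERS:
        if agents <= max_agents and tasks <= max_tasks:
            return name, price
    return "Enterprise", None  # custom pricing
```

With one agent handling 8,000 tasks a month, pay-per-task comes to about $80 and pay-per-agent to $50, while the tier caps force the Pro plan at $499. The bundled support, analytics, and fine-tuning would need to justify that gap.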

2. Core Cost Drivers

To understand AaaS economics, it’s essential to identify what primarily drives costs under the hood.

a. Inference Compute

Inference operations—running a model to generate an output—are the most expensive aspect of an agent’s lifecycle.

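- GPU/TPU Hours: Self-hosting large models requires powerful GPUs, with cloud rental typically ranging from $1–$10 per GPU-hour. For API-hosted models (e.g., GPT-4, Claude), this compute cost is folded into per-token pricing.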

- Latency-Sensitive Agents: Real-time agents cost more due to demand for low-latency infrastructure.

b. Context and Memory Usage

Persistent agents need access to historical interaction data and long-context models (32k–128k tokens), which cost more per run.

- Example: A 16k context model is ~30–50% more expensive per call than an 8k context equivalent.

- Memory-Intensive Agents: Agents requiring vector database lookups or semantic memory use more CPU + I/O + storage.

c. External API Calls

Many agents are wrappers around external APIs (e.g., CRM, data providers), and pricing must account for:

- API usage limits

- Overages for premium connectors

- Third-party SaaS API pricing dependencies

d. Observability and Monitoring

Operating an agent safely requires logging, tracing, and real-time analytics to track decisions—adding cloud monitoring or data warehousing costs.

3. Infrastructure Cost Breakdown

| Component | Example Cost Estimate |
| --- | --- |
| Model Inference | $0.03–$0.12 per 1K tokens (OpenAI, Anthropic) |
| GPU Compute (AWS/Azure) | $1.2–$3.5/hour (NVIDIA A100/H100) |
| Vector DB (e.g., Pinecone, Weaviate) | $0.05–$0.25 per 1K queries |
| Storage (S3, etc.) | $0.023/GB/month |
| API Calls | Varies (CRM, ERP, finance data) |
| Logging & Monitoring | $0.01–$0.10 per 1K logs/events |
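The per-unit estimates above can be combined into a rough cost per task. The sketch below defaults to the low end of each range in the table; the workload figures in the usage note (tokens, queries, and log events per task) are assumptions for illustration.

```python
def cost_per_task(tokens: int, vector_queries: int, log_events: int,
                  token_price_per_1k: float = 0.03,
                  query_price_per_1k: float = 0.05,
                  log_price_per_1k: float = 0.01) -> float:
    """Estimate the variable cost of one agent task in USD."""
    return (tokens / 1000 * token_price_per_1k
            + vector_queries / 1000 * query_price_per_1k
            + log_events / 1000 * log_price_per_1k)
```

For a task that uses 4,000 tokens, 3 vector queries, and 20 log events, this gives roughly $0.12. Almost all of it is inference, which is why token usage is usually the first lever to optimize.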

4. ROI and TCO Considerations for Enterprises

ROI Modeling

Return on investment in AaaS typically comes from:

- Labor Reduction: Time saved on repetitive tasks (e.g., legal review cut from 2 hours to 15 mins).

- Decision Acceleration: Faster time-to-resolution in operations (e.g., incident mitigation).

- Revenue Impact: Conversion uplift via better personalization (e.g., AI agents in eCommerce chat).

Sample ROI Equation:

ROI = (Annual Cost Savings + Additional Revenue) – Total AaaS Spend

ROI % = (ROI / Total AaaS Spend) × 100
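The two formulas above translate directly into code. The input figures in the usage note are made-up numbers for illustration only.

```python
def roi(annual_cost_savings: float, additional_revenue: float,
        total_aaas_spend: float) -> tuple:
    """Return (net ROI in currency units, ROI as a percentage of spend)."""
    if total_aaas_spend <= 0:
        raise ValueError("total_aaas_spend must be positive")
    net = (annual_cost_savings + additional_revenue) - total_aaas_spend
    pct = net / total_aaas_spend * 100
    return net, pct
```

With $120,000 in annual savings, $30,000 in additional revenue, and $60,000 in total AaaS spend, `roi(120_000, 30_000, 60_000)` returns a net ROI of $90,000, or 150%.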

TCO Elements

Enterprises must calculate Total Cost of Ownership beyond usage-based pricing:

- Agent development time and training

- Compliance, security reviews

- Ongoing tuning and retraining

- Downtime mitigation (redundancy, QA)

Note: Enterprises that over-invest in general-purpose agents without clear task boundaries often incur hidden costs in monitoring and hallucination control.

AaaS introduces flexible and scalable pricing models that align cost with performance, but requires deep attention to cost levers like inference, memory context, and integration overhead. For buyers, long-term value hinges on how well the agent reduces effort, enhances decision-making, or generates revenue uplift. For providers, sustainable margins depend on infrastructure efficiency, model optimization, and pricing innovation tailored to customer use patterns.

10. Challenges and Limitations of Agent-as-a-Service

While Agent-as-a-Service (AaaS) offers numerous benefits—such as automation, scalability, and cost-efficiency—its adoption comes with distinct challenges and limitations. From data privacy concerns to model limitations and integration complexities, understanding these barriers is crucial for both providers and enterprises considering the deployment of AaaS solutions. In this section, we explore the primary challenges and limitations associated with AaaS.

1. Data Privacy and Security Concerns

a. Handling Sensitive Data

One of the most significant challenges for AaaS is ensuring the security and privacy of sensitive data, particularly in regulated industries like healthcare, finance, and legal services. When deploying agents that handle sensitive customer data, enterprises must be wary of potential data leaks, unauthorized access, or misuse of information.

- HIPAA Compliance (Healthcare): For healthcare-related agents, ensuring compliance with HIPAA and other regulatory frameworks is a primary concern. AaaS platforms that handle medical records or patient data must have stringent data protection protocols in place, including data encryption, access control, and auditability.

- GDPR Compliance (Europe): In regions like the EU, ensuring that AI agents adhere to GDPR guidelines is essential. This includes acquiring explicit consent from users and providing mechanisms for data deletion upon request.

b. Third-Party Integrations

AaaS platforms often interact with third-party data sources, APIs, or external systems, each of which could introduce additional security vulnerabilities. Enterprises must carefully vet each integration to ensure that it adheres to security protocols and does not compromise the overall system’s integrity.

Example: In a financial context, an agent accessing customer transaction data from a third-party API must ensure that all data in transit is encrypted, and the third party must undergo regular security audits.

2. Model Accuracy and Performance

a. Limited Generalization

Although AaaS solutions are trained on vast datasets, their ability to generalize across industries or specific use cases can be limited. A general-purpose agent may perform well in one environment but struggle in another, especially when domain-specific expertise is required.

- Example: An AI agent trained on generic customer service scripts may not be able to effectively handle highly technical support queries in fields like biotechnology or aerospace.

- Customization: While many AaaS providers offer customizable models, fine-tuning for niche verticals can be time-consuming and expensive, requiring significant investment in training data and domain expertise.

b. Handling Ambiguity and Complex Queries

Agents may struggle to handle ambiguous or poorly structured input. For example, when faced with multiple interpretations of a query or an unstructured data set, agents might fail to provide useful or accurate responses. This is especially problematic in domains like legal review or healthcare diagnostics, where precision and accuracy are critical.

- Example: A legal review agent may misinterpret the context of a contract clause or miss key language that significantly affects the terms.

3. Integration and Interoperability

a. Complex IT Ecosystems

Enterprises often operate complex IT ecosystems, with disparate systems, databases, and third-party applications in place. Integrating an AaaS solution into such environments can be a daunting task.

- Data Silos: If data is stored in different silos or applications, ensuring seamless access and interaction between agents and these systems becomes difficult. For instance, connecting a customer service agent to a CRM system, inventory management system, and billing software may require extensive customization and additional integration tools.

- Legacy Systems: Many enterprises still rely on legacy systems that may not be easily compatible with modern AaaS platforms. This creates a significant barrier to adoption for businesses that cannot afford a full system overhaul.

b. Maintenance and Monitoring

Once deployed, AaaS solutions require ongoing maintenance and monitoring to ensure they are functioning as expected. This includes tasks like model retraining, performance tuning, and managing updates. Ensuring that these agents continue to operate at optimal levels, particularly in dynamic environments where business needs or data sources evolve, can require significant time and resources.

4. Ethical and Bias Concerns

a. Algorithmic Bias

AI models, including those used in AaaS solutions, are susceptible to biases based on the data they are trained on. If the training dataset is not diverse enough or contains biased information, the agent may produce biased outputs that adversely affect certain demographic groups.

- Example: A recruitment agent trained on historical hiring data might inadvertently perpetuate gender or racial biases in the selection process.

b. Transparency and Accountability

The “black box” nature of many AI models, particularly those based on deep learning, can create challenges in ensuring transparency. Enterprises may struggle to explain how an agent arrived at a specific decision, which is particularly problematic in fields like healthcare and finance, where regulatory frameworks often require detailed auditability.

- Solution: Some companies are exploring explainable AI (XAI) techniques to provide better insight into model decision-making. However, achieving full transparency across all types of agents remains a challenge.

5. Cost and Scalability Issues

a. High Initial Investment

While AaaS solutions often offer scalable pricing models, the initial investment required to deploy these agents can be substantial. Enterprises may need to pay for data integration, model training, and system customization, which can add up quickly, especially for highly specialized agents.

- Example: Deploying a custom legal review agent for a law firm may require training on thousands of legal documents, which can incur significant costs in terms of data processing and AI expertise.

b. Operational Costs

Even after the initial setup, ongoing operational costs such as compute power, storage, and API usage can add up. For AI agents that rely on GPU-heavy models or require constant inference calls, these operational costs can become a bottleneck, particularly for small-to-medium-sized enterprises with limited budgets.

- Example: A chatbot agent for an e-commerce platform may generate substantial costs due to high-volume interactions, especially if it’s integrated with external data sources and databases.

6. Resistance to Change

a. Cultural Barriers

Enterprises may face resistance from employees who are hesitant to adopt AI-driven solutions. Employees might fear job displacement or feel uncomfortable with the introduction of autonomous systems into their workflows. This can slow down AaaS adoption and necessitate cultural change management.

- Solution: Clear communication around AI’s role as an augmentation tool, rather than a replacement, can help reduce resistance. Training and upskilling programs can also ensure employees feel empowered to work alongside these new systems.

The challenges associated with AaaS, from data privacy concerns to the integration complexities and biases within AI models, highlight the need for careful planning and consideration before implementation. While AaaS holds the potential to transform operations and improve efficiency, organizations must approach deployment strategically, addressing potential obstacles in the areas of security, ethics, model limitations, and integration. By understanding these challenges upfront, enterprises can mitigate risks and maximize the benefits of adopting AaaS.

11. The Future of Agent-as-a-Service (2025–2030)

The Agent-as-a-Service (AaaS) landscape is poised for transformative growth between 2025 and 2030. As AI capabilities continue to evolve and enterprise adoption accelerates, AaaS will likely become a cornerstone of automation, decision-making, and customer engagement across multiple industries. However, this future will not be without challenges, as new opportunities will be tempered by the need for robust ethical standards, data privacy considerations, and a continued push toward more intelligent, adaptive systems. In this section, we explore key trends and potential developments in the AaaS domain.

1. Expansion of Use Cases and Industries

a. Broader Enterprise Adoption

By 2030, AaaS is likely to reach virtually all sectors. Even enterprises in traditionally conservative industries such as finance, law, and healthcare are already adopting AaaS to streamline operations, improve customer interactions, and make data-driven decisions.

- Legal Services: Automated legal agents will become even more sophisticated, capable of interpreting legal jargon, offering real-time legal advice, and automating contract creation and reviews. These agents could handle complex cases by analyzing legal precedents and offering insights for lawyers and clients alike.

- Healthcare: AI agents will assist healthcare professionals in diagnostics, prognosis, and personalized treatment planning, using vast amounts of patient data and emerging medical research to generate predictive insights that improve patient outcomes.

- Finance: Financial services will see more use of AaaS for fraud detection, customer service, and personalized financial planning. By 2030, AaaS could deliver fully autonomous wealth management services, offering real-time insights into global financial markets.

b. Expansion of Customer Interactions

Customer experience, a major driver of AaaS adoption, will continue to evolve with the integration of more advanced conversational agents. Voice and text-based agents will be augmented with sophisticated emotional intelligence capabilities, offering hyper-personalized interactions across websites, apps, and customer service platforms.

- Example: E-commerce businesses may offer agents that understand customer sentiment, predict preferences, and provide tailored product recommendations, all while maintaining context across interactions.

2. Advanced AI Capabilities

a. Enhanced Cognitive Abilities

By 2030, AI agents will likely be able to process and analyze larger datasets in real time, enabling deeper insights and better-informed decisions across a variety of use cases. With the advent of multi-modal agents, which combine text, image, video, and sensor data processing, these agents will become more context-aware and adaptive, and better able to handle complex tasks.

- Example: In the healthcare industry, an AI agent could evaluate medical images, read patient medical histories, and interact with doctors in natural language, offering a more holistic diagnosis or suggesting treatment plans.

b. Continual Learning and Self-Improvement

Future AaaS solutions will likely adopt continual learning systems, which allow agents to learn from new data without requiring complete retraining. This will make them more adaptable to changing business environments, customer behaviors, and emerging trends.

- Example: A marketing agent for an e-commerce platform will continuously optimize campaigns in real-time by learning from the latest customer interactions and market trends, improving its recommendations without manual intervention.
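To make the idea of learning without complete retraining concrete, here is a minimal sketch of an online update loop. It is an illustration only: the class name `OnlineClickModel`, the two-feature encoding, and the learning rate are assumptions for this example, not part of any AaaS product. The model is a small logistic regression that adjusts its weights after every single interaction instead of being refit on the full history.

```python
import math


class OnlineClickModel:
    """Logistic regression updated one interaction at a time (online SGD).

    Hypothetical example class: it shows incremental learning, not a
    production recommendation model.
    """

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features: list[float]) -> float:
        """Return the estimated probability of a click."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features: list[float], clicked: bool) -> None:
        """Nudge the weights using one new observation only."""
        error = self.predict(features) - (1.0 if clicked else 0.0)
        self.bias -= self.learning_rate * error
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]


# Assumed feature encoding: [discount_banner_shown, generic_banner_shown]
model = OnlineClickModel(n_features=2)
for _ in range(50):
    model.update([1.0, 0.0], clicked=True)   # discount banner tends to get clicks
    model.update([0.0, 1.0], clicked=False)  # generic banner tends not to
```

After this stream of interactions the model scores the discount banner above 0.5 and the generic banner below it, without ever revisiting earlier data. A real continual learning system would also need safeguards against drift and forgetting, which this sketch leaves out.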

c. Quantum Computing Integration

Quantum computing is still in its early stages, but it may begin to influence AaaS by 2030. If the hardware matures, quantum algorithms could speed up certain optimization tasks and parts of the machine learning pipeline, allowing AI agents to reach decisions faster and, for some problems, more accurately than is possible today.

- Example: In logistics, quantum-powered agents could optimize supply chains by simulating complex scenarios and predicting supply-demand dynamics with far greater precision than current systems.

3. Ethical and Regulatory Frameworks

a. Ethical AI and Bias Mitigation

As the use of AI agents grows, so will concerns about fairness, transparency, and accountability. By 2030, businesses will be required to adopt more rigorous ethical standards to mitigate bias in AI systems, ensuring that agents treat all users fairly and do not perpetuate harmful stereotypes.

- Example: A financial AI agent used in loan origination will be designed to mitigate racial or gender biases that might arise from historical data, ensuring that all applicants are treated equitably based on their individual financial profiles.
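One simple way to check for the kind of bias described above is to compare approval rates across groups. The sketch below computes a disparate impact ratio (lowest group approval rate divided by the highest). The function names, the group labels, and the sample decisions are all synthetic and hypothetical, and the 0.8 threshold is a commonly cited rule of thumb used here as an assumption, not a legal standard for lending. A single metric like this is a starting point for an audit, not proof of fairness.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its share of approved applications.

    `decisions` is an iterable of (group_label, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Synthetic decisions: group_a approved 8 of 10, group_b approved 5 of 10.
sample = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

ratio = disparate_impact_ratio(sample)
needs_review = ratio < 0.8  # assumed threshold; flags this sample for review
```

In this synthetic sample the ratio falls below the assumed threshold, so the agent's decisions would be flagged for closer human review.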

b. Regulation and Governance

Governments and regulatory bodies will introduce more comprehensive regulations governing the use of AI agents, especially in sensitive industries such as healthcare, finance, and legal services. Expect stricter data protection laws, transparency in algorithmic decision-making, and measures to ensure that AI agents are auditable and accountable.

- Example: The EU’s AI Act, which entered into force in 2024 with obligations phasing in over the following years, imposes stricter requirements on high-risk AI systems, which could affect AaaS adoption in regulated sectors like healthcare and banking.

4. Increased Customization and Personalization

a. Industry-Specific Solutions

As the market matures, AaaS providers will offer more tailored solutions, specifically designed for industry needs. This will allow enterprises to deploy highly specialized agents without the need for significant customization.

- Example: A customer service agent for the insurance industry may be trained to handle specific claims processes, policy language, and regulatory considerations, ensuring seamless interaction with customers while maintaining industry compliance.

b. Dynamic Personalization

AaaS platforms will integrate more advanced personalization engines, allowing agents to adjust their behavior based on the unique needs of users. These agents will understand the user’s context, preferences, and goals, enabling hyper-personalized interactions that improve customer satisfaction and business outcomes.

- Example: A financial planning agent may consider an individual’s investment history, risk tolerance, and life events to dynamically adjust its recommendations, providing personalized financial advice over time.

5. Integration with Other Emerging Technologies

a. 5G and Edge Computing

With the rollout of 5G networks and edge computing, AaaS will benefit from faster, more reliable data processing at the edge of the network, enabling real-time decision-making. This will be particularly useful for industries like autonomous vehicles, manufacturing, and smart cities, where low-latency processing is crucial.

- Example: In autonomous driving, agents deployed in vehicles could leverage 5G and edge computing to process data from sensors and cameras in real-time, enabling quicker responses to environmental changes.

b. Integration with IoT Devices

As the Internet of Things (IoT) expands, AaaS platforms will increasingly interact with IoT devices, enabling more sophisticated and automated control over physical environments. This could enhance efficiency in areas such as manufacturing, home automation, and supply chain management.

- Example: In smart homes, AaaS-powered agents will coordinate with IoT devices to optimize energy usage, security, and household tasks based on real-time data from sensors and user behavior.

6. Challenges and Considerations Ahead

While the future of AaaS is bright, it is not without its challenges. Issues such as data privacy, security concerns, and the potential for AI to disrupt existing jobs will need to be addressed. Enterprises must balance the benefits of automation with the need to maintain ethical standards and human oversight.

Moreover, AaaS providers will need to ensure that their offerings can scale efficiently and remain adaptable to evolving technologies and customer needs. As AI continues to advance, it will be essential for these platforms to remain transparent, accountable, and committed to fostering long-term trust with their users.

The future of Agent-as-a-Service between 2025 and 2030 promises a landscape rich with opportunities for innovation across industries. As AI continues to evolve, so will the capabilities of AaaS platforms, creating smarter, more adaptable, and efficient agents. However, as these systems become more integrated into everyday operations, addressing ethical concerns, regulatory challenges, and the complexities of integration will be critical for ensuring long-term success and responsible adoption.

12. Conclusion and Strategic Recommendations

As we conclude this comprehensive guide on Agent-as-a-Service (AaaS), it is clear that this emerging technology has the potential to transform how businesses operate, engage with customers, and manage internal processes. However, the decision of whether to build, buy, or integrate AaaS solutions should be approached strategically. In this section, we summarize the key takeaways for CTOs, AI leaders, and business decision-makers, and provide a decision-making framework to help organizations navigate this process.

Should Your Company Build, Buy, or Integrate AaaS?

When faced with the choice of building, buying, or integrating an AaaS solution, it’s essential to evaluate several key factors:

- Build: Building an AaaS solution in-house is ideal for organizations with specific, complex requirements that cannot be met by off-the-shelf solutions. It offers the flexibility to customize the agents to fit precise business needs. However, it demands substantial resources, a skilled AI development team, and the ability to manage continuous updates and maintenance.

- Buy: For most companies, purchasing an AaaS solution from a vendor will be the most cost-effective and time-efficient option. This is particularly true for common use cases such as customer service, HR automation, or IT monitoring. AaaS providers offer pre-built, robust agents that can be quickly deployed and scaled, often with strong customer support and compliance features.

- Integrate: Some businesses may choose to integrate existing AaaS solutions into their operations, particularly when adopting a hybrid approach to automation. Integrating a third-party AaaS platform with existing infrastructure, such as CRM or ERP systems, can enhance business processes without the need for complete overhauls. This is often the best approach for companies looking to enhance certain operations without disrupting their entire ecosystem.
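The three options above can be read as a rough decision rule. The sketch below encodes that reading in a few lines; the `Situation` fields and the order of the checks are a simplifying assumption made for illustration, and a real evaluation would weigh budget, timelines, and compliance in far more detail.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    """Hypothetical inputs summarizing an organization's position."""
    needs_unique_capabilities: bool     # off-the-shelf agents cannot meet the requirements
    has_ai_engineering_team: bool       # skilled team and budget for ongoing maintenance
    must_extend_existing_systems: bool  # e.g. agents must plug into current CRM or ERP


def recommend_approach(situation: Situation) -> str:
    """Return 'build', 'integrate', or 'buy' following the guidance above."""
    # Build only when requirements are unique AND the team can sustain it.
    if situation.needs_unique_capabilities and situation.has_ai_engineering_team:
        return "build"
    # Integrate when the goal is to enhance existing systems without an overhaul.
    if situation.must_extend_existing_systems:
        return "integrate"
    # Otherwise buying a vendor solution is the default for common use cases.
    return "buy"


example = Situation(
    needs_unique_capabilities=False,
    has_ai_engineering_team=False,
    must_extend_existing_systems=True,
)
recommendation = recommend_approach(example)  # "integrate"
```

Note that an organization with unique requirements but no team to maintain a custom agent falls through to "integrate" or "buy" here, which mirrors the caution about resources in the Build option.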

Key Takeaways for CTOs and AI Leaders

- AaaS is a game-changer for operational efficiency: AaaS can significantly enhance business processes by automating repetitive tasks, improving decision-making, and providing 24/7 availability.

- Cost and ROI are crucial factors: While AaaS offers clear cost savings in terms of time and labor, organizations must carefully consider pricing models and ongoing infrastructure costs to ensure a favorable ROI.

- Security, privacy, and governance are paramount: When deploying AaaS, it’s critical to prioritize data protection, ethical AI use, and compliance with relevant regulations. This will help build trust and avoid potential legal pitfalls.

- Customization vs. Standardization: The decision to adopt a customizable AaaS solution should be based on the complexity of the business needs. Many industries, especially those with highly specific operational requirements, may benefit from tailored agents.

- The future is hybrid: AaaS will become a key component of a hybrid IT environment in which humans and machines work together to drive productivity. Integrating AaaS with existing systems can improve performance while limiting disruption.
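The cost and ROI takeaway above can be made concrete with simple arithmetic. The sketch below assumes a pricing model with a flat monthly subscription plus a per-interaction fee. Every figure in it is a made-up illustration, not vendor pricing or a benchmark, and the function names are hypothetical.

```python
def annual_agent_cost(
    subscription_per_month: float,
    interactions_per_month: int,
    cost_per_interaction: float,
) -> float:
    """Yearly cost under an assumed subscription-plus-usage pricing model."""
    monthly = subscription_per_month + interactions_per_month * cost_per_interaction
    return 12 * monthly


def simple_roi(annual_savings: float, annual_cost: float) -> float:
    """Return ROI as a fraction: (savings - cost) / cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (annual_savings - annual_cost) / annual_cost


# Illustrative inputs only.
cost = annual_agent_cost(
    subscription_per_month=2_000,
    interactions_per_month=50_000,
    cost_per_interaction=0.02,
)
roi = simple_roi(annual_savings=90_000, annual_cost=cost)  # about 1.5, i.e. 150%
```

Because the usage fee scales with interaction volume, rerunning the calculation at higher volumes shows how quickly a favorable ROI can shrink, which is why the pricing model deserves scrutiny before deployment.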

Decision-Making Framework: When to Deploy Agents and How to Evaluate Providers

When to Deploy Agents

- High Volume, Repetitive Tasks: Deploy agents in areas with high transaction volumes, such as customer service, HR automation, or logistics, where repetitive tasks can be easily automated without sacrificing quality.

- Data-Driven Decision-Making: Implement AaaS when there is a need to analyze large datasets and generate insights in real-time, such as in financial analysis, healthcare diagnostics, or supply chain management.

- 24/7 Operations: Consider AaaS for processes that require continuous availability, such as customer interaction bots, incident triage agents in IT operations, or automated support desks.

How to Evaluate Providers